Tag:

Branch:

Tree:

21345d2823

main

oxigraph-8.1.1

oxigraph-8.3.2

oxigraph-main

${ noResults }

4769 Commits (21345d282327906faaddd7d93e897fee5b7194bd)

| Author | SHA1 | Message | Date |

|---|---|---|---|

|

|

21345d2823 |

Streaming Compression API for WAL compression. (#9619)

Summary: Implement a streaming compression API (compress/uncompress) to use for WAL compression. The log_writer would use the compress class/API to compress a record before writing it out in chunks. The log_reader would use the uncompress class/API to uncompress the chunks and combine into a single record. Added unit test to verify the API for different sizes/compression types. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9619 Test Plan: make -j24 check Reviewed By: anand1976 Differential Revision: D34437346 Pulled By: sidroyc fbshipit-source-id: b180569ad2ddcf3106380f8758b556cc0ad18382 |

3 years ago |

|

|

6f12599863 |

Support WBWI for keys having timestamps (#9603)

Summary: This PR supports inserting keys to a `WriteBatchWithIndex` for column families that enable user-defined timestamps and reading the keys back. **The index does not have timestamps.** Writing a key to WBWI is unchanged, because the underlying WriteBatch already supports it. When reading the keys back, we need to make sure to distinguish between keys with and without timestamps before comparison. When user calls `GetFromBatchAndDB()`, no timestamp is needed to query the batch, but a timestamp has to be provided to query the db. The assumption is that data in the batch must be newer than data from the db. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9603 Test Plan: make check Reviewed By: ltamasi Differential Revision: D34354849 Pulled By: riversand963 fbshipit-source-id: d25d1f84e2240ce543e521fa30595082fb8db9a0 |

3 years ago |

|

|

8ca433f912 |

Fix test race conditions with OnFlushCompleted() (#9617)

Summary: We often see flaky tests due to `DB::Flush()` or `DBImpl::TEST_WaitForFlushMemTable()` not waiting until event listeners complete. For example, https://github.com/facebook/rocksdb/issues/9084, https://github.com/facebook/rocksdb/issues/9400, https://github.com/facebook/rocksdb/issues/9528, plus two new ones this week: "EventListenerTest.OnSingleDBFlushTest" and "DBFlushTest.FireOnFlushCompletedAfterCommittedResult". I ran a `make check` with the below race condition-coercing patch and fixed issues it found besides old BlobDB. ``` diff --git a/db/db_impl/db_impl_compaction_flush.cc b/db/db_impl/db_impl_compaction_flush.cc index 0e1864788..aaba68c4a 100644 --- a/db/db_impl/db_impl_compaction_flush.cc +++ b/db/db_impl/db_impl_compaction_flush.cc @@ -861,6 +861,8 @@ void DBImpl::NotifyOnFlushCompleted( mutable_cf_options.level0_stop_writes_trigger); // release lock while notifying events mutex_.Unlock(); + bg_cv_.SignalAll(); + sleep(1); { for (auto& info : *flush_jobs_info) { info->triggered_writes_slowdown = triggered_writes_slowdown; ``` The reason I did not fix old BlobDB issues is because it appears to have a fundamental (non-test) issue. In particular, it uses an EventListener to keep track of the files. OnFlushCompleted() could be delayed until even after a compaction involving that flushed file completes, causing the compaction to unexpectedly delete an untracked file. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9617 Test Plan: `make check` including the race condition coercing patch Reviewed By: hx235 Differential Revision: D34384022 Pulled By: ajkr fbshipit-source-id: 2652ded39b415277c5d6a628414345223930514e |

3 years ago |

|

|

3379d1466f |

Fix DBTest2.BackupFileTemperature memory leak (#9610)

Summary: Valgrind was failing with the below error because we forgot to destroy the `BackupEngine` object: ``` ==421173== Command: ./db_test2 --gtest_filter=DBTest2.BackupFileTemperature ==421173== Note: Google Test filter = DBTest2.BackupFileTemperature [==========] Running 1 test from 1 test case. [----------] Global test environment set-up. [----------] 1 test from DBTest2 [ RUN ] DBTest2.BackupFileTemperature --421173-- WARNING: unhandled amd64-linux syscall: 425 --421173-- You may be able to write your own handler. --421173-- Read the file README_MISSING_SYSCALL_OR_IOCTL. --421173-- Nevertheless we consider this a bug. Please report --421173-- it at http://valgrind.org/support/bug_reports.html. [ OK ] DBTest2.BackupFileTemperature (3366 ms) [----------] 1 test from DBTest2 (3371 ms total) [----------] Global test environment tear-down [==========] 1 test from 1 test case ran. (3413 ms total) [ PASSED ] 1 test. ==421173== ==421173== HEAP SUMMARY: ==421173== in use at exit: 13,042 bytes in 195 blocks ==421173== total heap usage: 26,022 allocs, 25,827 frees, 27,555,265 bytes allocated ==421173== ==421173== 8 bytes in 1 blocks are possibly lost in loss record 6 of 167 ==421173== at 0x4838DBF: operator new(unsigned long) (vg_replace_malloc.c:344) ==421173== by 0x8D4606: allocate (new_allocator.h:114) ==421173== by 0x8D4606: allocate (alloc_traits.h:445) ==421173== by 0x8D4606: _M_allocate (stl_vector.h:343) ==421173== by 0x8D4606: reserve (vector.tcc:78) ==421173== by 0x8D4606: rocksdb::BackupEngineImpl::Initialize() (backupable_db.cc:1174) ==421173== by 0x8D5473: Initialize (backupable_db.cc:918) ==421173== by 0x8D5473: rocksdb::BackupEngine::Open(rocksdb::BackupEngineOptions const&, rocksdb::Env*, rocksdb::BackupEngine**) (backupable_db.cc:937) ==421173== by 0x50AC8F: Open (backup_engine.h:585) ==421173== by 0x50AC8F: rocksdb::DBTest2_BackupFileTemperature_Test::TestBody() (db_test2.cc:6996) ... ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9610 Test Plan: ``` $ make -j24 ROCKSDBTESTS_SUBSET=db_test2 valgrind_check_some ``` Reviewed By: akankshamahajan15 Differential Revision: D34371210 Pulled By: ajkr fbshipit-source-id: 68154fcb0c51b28222efa23fa4ee02df8d925a18 |

3 years ago |

|

|

d3a2f284d9 |

Add Temperature info in `NewSequentialFile()` (#9499)

Summary: Add Temperature hints information from RocksDB in API `NewSequentialFile()`. backup and checkpoint operations need to open the source files with `NewSequentialFile()`, which will have the temperature hints. Other operations are not covered. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9499 Test Plan: Added unittest Reviewed By: pdillinger Differential Revision: D34006115 Pulled By: jay-zhuang fbshipit-source-id: 568b34602b76520e53128672bd07e9d886786a2f |

3 years ago |

|

|

67f071fade |

Fixes #9565 (#9586)

Summary: [Compaction::IsTrivialMove]( |

3 years ago |

|

|

f4b2500e12 |

Add last level and non-last level read statistics (#9519)

Summary: Add last level and non-last level read statistics: ``` LAST_LEVEL_READ_BYTES, LAST_LEVEL_READ_COUNT, NON_LAST_LEVEL_READ_BYTES, NON_LAST_LEVEL_READ_COUNT, ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9519 Test Plan: added unittest Reviewed By: siying Differential Revision: D34062539 Pulled By: jay-zhuang fbshipit-source-id: 908644c3050878b4234febdc72e3e19d89af38cd |

3 years ago |

|

|

30b08878d8 |

Make FilterPolicy Customizable (#9590)

Summary: Make FilterPolicy into a Customizable class. Allow new FilterPolicy to be discovered through the ObjectRegistry Pull Request resolved: https://github.com/facebook/rocksdb/pull/9590 Reviewed By: pdillinger Differential Revision: D34327367 Pulled By: mrambacher fbshipit-source-id: 37e7edac90ec9457422b72f359ab8ef48829c190 |

3 years ago |

|

|

2fbc672732 |

Add temperature information to the event listener callbacks (#9591)

Summary: RocksDB try to provide temperature information in the event listener callbacks. The information is not guaranteed, as some operation like backup won't have these information. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9591 Test Plan: Added unittest Reviewed By: siying, pdillinger Differential Revision: D34309339 Pulled By: jay-zhuang fbshipit-source-id: 4aca4f270f99fa49186d85d300da42594663d6d7 |

3 years ago |

|

|

627deb7ceb |

Fix some MultiGet batching stats (#9583)

Summary: The NUM_INDEX_AND_FILTER_BLOCKS_READ_PER_LEVEL, NUM_DATA_BLOCKS_READ_PER_LEVEL, and NUM_SST_READ_PER_LEVEL stats were being recorded only when the last file in a level happened to have hits. They are supposed to be updated for every level. Also, there was some overcounting of GetContextStats. This PR fixes both the problems. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9583 Test Plan: Update the unit test in db_basic_test Reviewed By: akankshamahajan15 Differential Revision: D34308044 Pulled By: anand1976 fbshipit-source-id: b3b36020fda26ba91bc6e0e47d52d58f4d7f656e |

3 years ago |

|

|

39b0d92153 |

Add record to set WAL compression type if enabled (#9556)

Summary: When WAL compression is enabled, add a record (new record type) to store the compression type to indicate that all subsequent records are compressed. The log reader will store the compression type when this record is encountered and use the type to uncompress the subsequent records. Compress and uncompress to be implemented in subsequent diffs. Enabled WAL compression in some WAL tests to check for regressions. Some tests that rely on offsets have been disabled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9556 Reviewed By: anand1976 Differential Revision: D34308216 Pulled By: sidroyc fbshipit-source-id: 7f10595e46f3277f1ea2d309fbf95e2e935a8705 |

3 years ago |

|

|

f092f0fa5d |

Add subcompaction event API (#9311)

Summary: Add event callback for subcompaction and adds a sub_job_id to identify it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9311 Reviewed By: ajkr Differential Revision: D33892707 Pulled By: jay-zhuang fbshipit-source-id: 57b5e5e594d61b2112d480c18a79a36751f65a4e |

3 years ago |

|

|

babe56ddba |

Add rate limiter priority to ReadOptions (#9424)

Summary: Users can set the priority for file reads associated with their operation by setting `ReadOptions::rate_limiter_priority` to something other than `Env::IO_TOTAL`. Rate limiting `VerifyChecksum()` and `VerifyFileChecksums()` is the motivation for this PR, so it also includes benchmarks and minor bug fixes to get that working. `RandomAccessFileReader::Read()` already had support for rate limiting compaction reads. I changed that rate limiting to be non-specific to compaction, but rather performed according to the passed in `Env::IOPriority`. Now the compaction read rate limiting is supported by setting `rate_limiter_priority = Env::IO_LOW` on its `ReadOptions`. There is no default value for the new `Env::IOPriority` parameter to `RandomAccessFileReader::Read()`. That means this PR goes through all callers (in some cases multiple layers up the call stack) to find a `ReadOptions` to provide the priority. There are TODOs for cases I believe it would be good to let user control the priority some day (e.g., file footer reads), and no TODO in cases I believe it doesn't matter (e.g., trace file reads). The API doc only lists the missing cases where a file read associated with a provided `ReadOptions` cannot be rate limited. For cases like file ingestion checksum calculation, there is no API to provide `ReadOptions` or `Env::IOPriority`, so I didn't count that as missing. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9424 Test Plan: - new unit tests - new benchmarks on ~50MB database with 1MB/s read rate limit and 100ms refill interval; verified with strace reads are chunked (at 0.1MB per chunk) and spaced roughly 100ms apart. - setup command: `./db_bench -benchmarks=fillrandom,compact -db=/tmp/testdb -target_file_size_base=1048576 -disable_auto_compactions=true -file_checksum=true` - benchmarks command: `strace -ttfe pread64 ./db_bench -benchmarks=verifychecksum,verifyfilechecksums -use_existing_db=true -db=/tmp/testdb -rate_limiter_bytes_per_sec=1048576 -rate_limit_bg_reads=1 -rate_limit_user_ops=true -file_checksum=true` - crash test using IO_USER priority on non-validation reads with https://github.com/facebook/rocksdb/issues/9567 reverted: `python3 tools/db_crashtest.py blackbox --max_key=1000000 --write_buffer_size=524288 --target_file_size_base=524288 --level_compaction_dynamic_level_bytes=true --duration=3600 --rate_limit_bg_reads=true --rate_limit_user_ops=true --rate_limiter_bytes_per_sec=10485760 --interval=10` Reviewed By: hx235 Differential Revision: D33747386 Pulled By: ajkr fbshipit-source-id: a2d985e97912fba8c54763798e04f006ccc56e0c |

3 years ago |

|

|

1cda273dc3 |

Fix a silent data loss for write-committed txn (#9571)

Summary:

The following sequence of events can cause silent data loss for write-committed

transactions.

```

Time thread 1 bg flush

| db->Put("a")

| txn = NewTxn()

| txn->Put("b", "v")

| txn->Prepare() // writes only to 5.log

| db->SwitchMemtable() // memtable 1 has "a"

| // close 5.log,

| // creates 8.log

| trigger flush

| pick memtable 1

| unlock db mutex

| write new sst

| txn->ctwb->Put("gtid", "1") // writes 8.log

| txn->Commit() // writes to 8.log

| // writes to memtable 2

| compute min_log_number_to_keep_2pc, this

| will be 8 (incorrect).

|

| Purge obsolete wals, including 5.log

|

V

```

At this point, writes of txn exists only in memtable. Close db without flush because db thinks the data in

memtable are backed by log. Then reopen, the writes are lost except key-value pair {"gtid"->"1"},

only the commit marker of txn is in 8.log

The reason lies in `PrecomputeMinLogNumberToKeep2PC()` which calls `FindMinPrepLogReferencedByMemTable()`.

In the above example, when bg flush thread tries to find obsolete wals, it uses the information

computed by `PrecomputeMinLogNumberToKeep2PC()`. The return value of `PrecomputeMinLogNumberToKeep2PC()`

depends on three components

- `PrecomputeMinLogNumberToKeepNon2PC()`. This represents the WAL that has unflushed data. As the name of this method suggests, it does not account for 2PC. Although the keys reside in the prepare section of a previous WAL, the column family references the current WAL when they are actually inserted into the memtable during txn commit.

- `prep_tracker->FindMinLogContainingOutstandingPrep()`. This represents the WAL with a prepare section but the txn hasn't committed.

- `FindMinPrepLogReferencedByMemTable()`. This represents the WAL on which some memtables (mutable and immutable) depend for their unflushed data.

The bug lies in `FindMinPrepLogReferencedByMemTable()`. Originally, this function skips checking the column families

that are being flushed, but the unit test added in this PR shows that they should not be. In this unit test, there is

only the default column family, and one of its memtables has unflushed data backed by a prepare section in 5.log.

We should return this information via `FindMinPrepLogReferencedByMemTable()`.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9571

Test Plan:

```

./transaction_test --gtest_filter=*/TransactionTest.SwitchMemtableDuringPrepareAndCommit_WC/*

make check

```

Reviewed By: siying

Differential Revision: D34235236

Pulled By: riversand963

fbshipit-source-id: 120eb21a666728a38dda77b96276c6af72b008b1

|

3 years ago |

|

|

1e403a0c6c |

Fix assertion failure in FastLocalBloomBitsBuilder (#9585)

Summary:

As in

```

db_stress: table/block_based/filter_policy.cc:316: rocksdb::{anonymous}::FastLocalBloomBitsBuilder::FastLocalBloomBitsBuilder(int, std::atomic<long int>*, std::shared_ptr<rocksdb::CacheReservationManager>, bool): Assertion `millibits_per_key >= 1000' failed.

```

This assertion failure was actually happening with our RibbonFilterPolicy

which falls back to Bloom for some cases, often for flush, but was

missing new special logic to skip generating filter for 0 bits per key

case. Fixed by adding the logic in other builtin FilterPolicy

implementations.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9585

Test Plan:

Updated db_bloom_filter_test to do more integration testing

of the RibbonFilterPolicy ("auto Ribbon") class, incl regression test

this with SkipFilterOnEssentiallyZeroBpk

Reviewed By: ajkr

Differential Revision: D34295101

Pulled By: pdillinger

fbshipit-source-id: 3488eb207fc1d67bbbd1301313714aa1b6406e6e

|

3 years ago |

|

|

31031c0210 |

Remove deprecated RemoteCompaction API (#9570)

Summary: Remove deprecated remote compaction APIs `CompactionService::Start()` and `CompactionService::WaitForComplete()`. Please use `CompactionService::StartV2()`, `CompactionService::WaitForCompleteV2()` instead, which provides the same information plus extra data like priority, db_id, etc. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9570 Test Plan: CI Reviewed By: riversand963 Differential Revision: D34255969 Pulled By: jay-zhuang fbshipit-source-id: c6376eccdd1123f1c42ab53771b5f65f8160c325 |

3 years ago |

|

|

8c681087c7 |

Refactor FilterPolicies toward Customizable (#9567)

Summary: Some changes to make it easier to make FilterPolicy customizable. Especially, create distinct classes for the different testing-only and user-facing built-in FilterPolicy modes. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9567 Test Plan: tests updated, with no intended difference in functionality tested. No difference in test performance seen as a result of moving to string-based filter type configuration. Reviewed By: mrambacher Differential Revision: D34234694 Pulled By: pdillinger fbshipit-source-id: 8a94931a9e04c3bcca863a4f524cfd064aaf0122 |

3 years ago |

|

|

a0c569ee1d |

Cancel manual compaction in thread-pool queue (#9557)

Summary: Fix `DisableManualCompaction()` has to wait scheduled manual compaction to start the execution to cancel the job. When a manual compaction in thread-pool queue is cancel, set the job is_canceled to true and clean the resource. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9557 Test Plan: added unittest that will hang without the change Reviewed By: ajkr Differential Revision: D34214910 Pulled By: jay-zhuang fbshipit-source-id: 89dbaee78ddf26eb13ce862c2b15f4a098b36a78 |

3 years ago |

|

|

443d8ef094 |

Fix PinSelf() read-after-free in DB::GetMergeOperands() (#9507)

Summary:

**Context:**

Running the new test `DBMergeOperandTest.MergeOperandReadAfterFreeBug` prior to this fix surfaces the read-after-free bug of PinSef() as below:

```

READ of size 8 at 0x60400002529d thread T0

https://github.com/facebook/rocksdb/issues/5 0x7f199a in rocksdb::PinnableSlice::PinSelf(rocksdb::Slice const&) include/rocksdb/slice.h:171

https://github.com/facebook/rocksdb/issues/6 0x7f199a in rocksdb::DBImpl::GetImpl(rocksdb::ReadOptions const&, rocksdb::Slice const&, rocksdb::DBImpl::GetImplOptions&) db/db_impl/db_impl.cc:1919

https://github.com/facebook/rocksdb/issues/7 0x540d63 in rocksdb::DBImpl::GetMergeOperands(rocksdb::ReadOptions const&, rocksdb::ColumnFamilyHandle*, rocksdb::Slice const&, rocksdb::PinnableSlice*, rocksdb::GetMergeOperandsOptions*, int*) db/db_impl/db_impl.h:203

freed by thread T0 here:

https://github.com/facebook/rocksdb/issues/3 0x1191399 in rocksdb::cache_entry_roles_detail::RegisteredDeleter<rocksdb::Block, (rocksdb::CacheEntryRole)0>::Delete(rocksdb::Slice const&, void*) cache/cache_entry_roles.h:99

https://github.com/facebook/rocksdb/issues/4 0x719348 in rocksdb::LRUHandle::Free() cache/lru_cache.h:205

https://github.com/facebook/rocksdb/issues/5 0x71047f in rocksdb::LRUCacheShard::Release(rocksdb::Cache::Handle*, bool) cache/lru_cache.cc:547

https://github.com/facebook/rocksdb/issues/6 0xa78f0a in rocksdb::Cleanable::DoCleanup() include/rocksdb/cleanable.h:60

https://github.com/facebook/rocksdb/issues/7 0xa78f0a in rocksdb::Cleanable::Reset() include/rocksdb/cleanable.h:38

https://github.com/facebook/rocksdb/issues/8 0xa78f0a in rocksdb::PinnedIteratorsManager::ReleasePinnedData() db/pinned_iterators_manager.h:71

https://github.com/facebook/rocksdb/issues/9 0xd0c21b in rocksdb::PinnedIteratorsManager::~PinnedIteratorsManager() db/pinned_iterators_manager.h:24

https://github.com/facebook/rocksdb/issues/10 0xd0c21b in rocksdb::Version::Get(rocksdb::ReadOptions const&, rocksdb::LookupKey const&, rocksdb::PinnableSlice*, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >*, rocksdb::Status*, rocksdb::MergeContext*, unsigned long*, bool*, bool*, unsigned long*, rocksdb::ReadCallback*, bool*, bool) db/pinned_iterators_manager.h:22

https://github.com/facebook/rocksdb/issues/11 0x7f0fdf in rocksdb::DBImpl::GetImpl(rocksdb::ReadOptions const&, rocksdb::Slice const&, rocksdb::DBImpl::GetImplOptions&) db/db_impl/db_impl.cc:1886

https://github.com/facebook/rocksdb/issues/12 0x540d63 in rocksdb::DBImpl::GetMergeOperands(rocksdb::ReadOptions const&, rocksdb::ColumnFamilyHandle*, rocksdb::Slice const&, rocksdb::PinnableSlice*, rocksdb::GetMergeOperandsOptions*, int*) db/db_impl/db_impl.h:203

previously allocated by thread T0 here:

https://github.com/facebook/rocksdb/issues/1 0x1239896 in rocksdb::AllocateBlock(unsigned long, **rocksdb::MemoryAllocator*)** memory/memory_allocator.h:35

https://github.com/facebook/rocksdb/issues/2 0x1239896 in rocksdb::BlockFetcher::CopyBufferToHeapBuf() table/block_fetcher.cc:171

https://github.com/facebook/rocksdb/issues/3 0x1239896 in rocksdb::BlockFetcher::GetBlockContents() table/block_fetcher.cc:206

https://github.com/facebook/rocksdb/issues/4 0x122eae5 in rocksdb::BlockFetcher::ReadBlockContents() table/block_fetcher.cc:325

https://github.com/facebook/rocksdb/issues/5 0x11b1f45 in rocksdb::Status rocksdb::BlockBasedTable::MaybeReadBlockAndLoadToCache<rocksdb::Block>(rocksdb::FilePrefetchBuffer*, rocksdb::ReadOptions const&, rocksdb::BlockHandle const&, rocksdb::UncompressionDict const&, bool, rocksdb::CachableEntry<rocksdb::Block>*, rocksdb::BlockType, rocksdb::GetContext*, rocksdb::BlockCacheLookupContext*, rocksdb::BlockContents*) const table/block_based/block_based_table_reader.cc:1503

```

Here is the analysis:

- We have [PinnedIteratorsManager](https://github.com/facebook/rocksdb/blob/6.28.fb/db/version_set.cc#L1980) with `Cleanable` capability in our `Version::Get()` path. It's responsible for managing the life-time of pinned iterator and invoking registered cleanup functions during its own destruction.

- For example in case above, the merge operands's clean-up gets associated with this manger in [GetContext::push_operand](https://github.com/facebook/rocksdb/blob/6.28.fb/table/get_context.cc#L405). During PinnedIteratorsManager's [destruction](https://github.com/facebook/rocksdb/blob/6.28.fb/db/pinned_iterators_manager.h#L67), the release function associated with those merge operand data is invoked.

**And that's what we see in "freed by thread T955 here" in ASAN.**

- Bug 🐛: `PinnedIteratorsManager` is local to `Version::Get()` while the data of merge operands need to outlive `Version::Get` and stay till they get [PinSelf()](https://github.com/facebook/rocksdb/blob/6.28.fb/db/db_impl/db_impl.cc#L1905), **which is the read-after-free in ASAN.**

- This bug is likely to be an overlook of `PinnedIteratorsManager` when developing the API `DB::GetMergeOperands` cuz the current logic works fine with the existing case of getting the *merged value* where the operands do not need to live that long.

- This bug was not surfaced much (even in its unit test) due to the release function associated with the merge operands (which are actually blocks put in cache as you can see in `BlockBasedTable::MaybeReadBlockAndLoadToCache` **in "previously allocated by" in ASAN report**) is a cache entry deleter.

The deleter will call `Cache::Release()` which, for LRU cache, won't immediately deallocate the block based on LRU policy [unless the cache is full or being instructed to force erase](https://github.com/facebook/rocksdb/blob/6.28.fb/cache/lru_cache.cc#L521-L531)

- `DBMergeOperandTest.MergeOperandReadAfterFreeBug` makes the cache extremely small to force cache full.

**Summary:**

- Fix the bug by align `PinnedIteratorsManager`'s lifetime with the merge operands

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9507

Test Plan:

- New test `DBMergeOperandTest.MergeOperandReadAfterFreeBug`

- db bench on read path

- Setup (LSM tree with several levels, cache the whole db to avoid read IO, warm cache with readseq to avoid read IO): `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks="fillrandom,readseq -num=1000000 -cache_size=100000000 -write_buffer_size=10000 -statistics=1 -max_bytes_for_level_base=10000 -level0_file_num_compaction_trigger=1``TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks="readrandom" -num=1000000 -cache_size=100000000 `

- Actual command run (run 20-run for 20 times and then average the 20-run's average micros/op)

- `for j in {1..20}; do (for i in {1..20}; do rm -rf /dev/shm/rocksdb/ && TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks="fillrandom,readseq,readrandom" -num=1000000 -cache_size=100000000 -write_buffer_size=10000 -statistics=1 -max_bytes_for_level_base=10000 -level0_file_num_compaction_trigger=1 | egrep 'readrandom'; done > rr_output_pre.txt && (awk '{sum+=$3; sum_sqrt+=$3^2}END{print sum/20, sqrt(sum_sqrt/20-(sum/20)^2)}' rr_output_pre.txt) >> rr_output_pre_2.txt); done`

- **Result: Pre-change: 3.79193 micros/op; Post-change: 3.79528 micros/op (+0.09%)**

(pre-change)sorted avg micros/op of each 20-run | std of micros/op of each 20-run | (post-change) sorted avg micros/op of each 20-run | std of micros/op of each 20-run

-- | -- | -- | --

3.58355 | 0.265209 | 3.48715 | 0.382076

3.58845 | 0.519927 | 3.5832 | 0.382726

3.66415 | 0.452097 | 3.677 | 0.563831

3.68495 | 0.430897 | 3.68405 | 0.495355

3.70295 | 0.482893 | 3.68465 | 0.431438

3.719 | 0.463806 | 3.71945 | 0.457157

3.7393 | 0.453423 | 3.72795 | 0.538604

3.7806 | 0.527613 | 3.75075 | 0.444509

3.7817 | 0.426704 | 3.7683 | 0.468065

3.809 | 0.381033 | 3.8086 | 0.557378

3.80985 | 0.466011 | 3.81805 | 0.524833

3.8165 | 0.500351 | 3.83405 | 0.529339

3.8479 | 0.430326 | 3.86285 | 0.44831

3.85125 | 0.434108 | 3.8717 | 0.544098

3.8556 | 0.524602 | 3.895 | 0.411679

3.8656 | 0.476383 | 3.90965 | 0.566636

3.8911 | 0.488477 | 3.92735 | 0.608038

3.898 | 0.493978 | 3.9439 | 0.524511

3.97235 | 0.515008 | 3.9623 | 0.477416

3.9768 | 0.519993 | 3.98965 | 0.521481

- CI

Reviewed By: ajkr

Differential Revision: D34030519

Pulled By: hx235

fbshipit-source-id: a99ac585c11704c5ed93af033cb29ba0a7b16ae8

|

3 years ago |

|

|

e24734f843 |

Use -Wno-invalid-offsetof instead of dangerous offset_of hack (#9563)

Summary: After https://github.com/facebook/rocksdb/issues/9515 added a unique_ptr to Status, we see some warnings-as-error in some internal builds like this: ``` stderr: rocksdb/src/db/compaction/compaction_job.cc:2839:7: error: offset of on non-standard-layout type 'struct CompactionServiceResult' [-Werror,-Winvalid-offsetof] {offsetof(struct CompactionServiceResult, status), ^ ~~~~~~ ``` I see three potential solutions to resolving this: * Expand our use of an idiom that works around the warning (see offset_of functions removed in this change, inspired by https://gist.github.com/graphitemaster/494f21190bb2c63c5516) However, this construction is invoking undefined behavior that assumes consistent layout with no compiler-introduced indirection. A compiler incompatible with our assumptions will likely compile the code and exhibit undefined behavior. * Migrate to something in place of offset, like a function mapping CompactionServiceResult* to Status* (for the `status` field). This might be required in the long term. * **Selected:** Use our new C++17 dependency to use offsetof in a well-defined way when the compiler allows it. From a comment on https://gist.github.com/graphitemaster/494f21190bb2c63c5516: > A final note: in C++17, offsetof is conditionally supported, which > means that you can use it on any type (not just standard layout > types) and the compiler will error if it can't compile it correctly. > That appears to be the best option if you can live with C++17 and > don't need constexpr support. The C++17 semantics are confirmed on https://en.cppreference.com/w/cpp/types/offsetof, so we can suppress the warning as long as we accept that we might run into a compiler that rejects the code, and at that point we will find a solution, such as the more intrusive "migrate" solution above. Although this is currently only showing in our buck build, it will surely show up also with make and cmake, so I have updated those configurations as well. Also in the buck build, -Wno-expansion-to-defined does not appear to be needed anymore (both current compiler configurations) so I removed it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9563 Test Plan: Tried out buck builds with both current compiler configurations Reviewed By: riversand963 Differential Revision: D34220931 Pulled By: pdillinger fbshipit-source-id: d39436008259bd1eaaa87c77be69fb2a5b559e1f |

3 years ago |

|

|

479eb1aad6 |

Hide deprecated, inefficient block-based filter from public API (#9535)

Summary: This change removes the ability to configure the deprecated, inefficient block-based filter in the public API. Options that would have enabled it now use "full" (and optionally partitioned) filters. Existing block-based filters can still be read and used, and a "back door" way to build them still exists, for testing and in case of trouble. About the only way this removal would cause an issue for users is if temporary memory for filter construction greatly increases. In HISTORY.md we suggest a few possible mitigations: partitioned filters, smaller SST files, or setting reserve_table_builder_memory=true. Or users who have customized a FilterPolicy using the CreateFilter/KeyMayMatch mechanism removed in https://github.com/facebook/rocksdb/issues/9501 will have to upgrade their code. (It's long past time for people to move to the new builder/reader customization interface.) This change also introduces some internal-use-only configuration strings for testing specific filter implementations while bypassing some compatibility / intelligence logic. This is intended to hint at a path toward making FilterPolicy Customizable, but it also gives us a "back door" way to configure block-based filter. Aside: updated db_bench so that -readonly implies -use_existing_db Pull Request resolved: https://github.com/facebook/rocksdb/pull/9535 Test Plan: Unit tests updated. Specifically, * BlockBasedTableTest.BlockReadCountTest is tweaked to validate the back door configuration interface and ignoring of `use_block_based_builder`. * BlockBasedTableTest.TracingGetTest is migrated from testing block-based filter access pattern to full filter access patter, by re-ordering some things. * Options test (pretty self-explanatory) Performance test - create with `./db_bench -db=/dev/shm/rocksdb1 -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=fillrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0` with and without `-use_block_based_filter`, which creates a DB with 21 SST files in L0. Read with `./db_bench -db=/dev/shm/rocksdb1 -readonly -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=readrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -duration=30` Without -use_block_based_filter: readrandom 464 ops/sec, 689280 KB DB With -use_block_based_filter: readrandom 169 ops/sec, 690996 KB DB No consistent difference with fillrandom Reviewed By: jay-zhuang Differential Revision: D34153871 Pulled By: pdillinger fbshipit-source-id: 31f4a933c542f8f09aca47fa64aec67832a69738 |

3 years ago |

|

|

5c53b9008f |

Fix failure in c_test (#9547)

Summary: When tests are run with TMPD, c_test may fail because TMPD is not created by the test. It results in IO error: No such file or directory: While mkdir if missing: /tmp/rocksdb_test_tmp/rocksdb_c_test-0: No such file or directory Pull Request resolved: https://github.com/facebook/rocksdb/pull/9547 Test Plan: make -j32 c_test; TEST_TMPDIR=/tmp/rocksdb_test ./c_test Reviewed By: riversand963 Differential Revision: D34173298 Pulled By: akankshamahajan15 fbshipit-source-id: 5b5a01f5b842c2487b05b0708c8e9532241db7f8 |

3 years ago |

|

|

95d9cb8357 |

Avoid unnecessary copy of sample_slice map (#9551)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9551 Reviewed By: riversand963 Differential Revision: D34169574 Pulled By: ezgicicek fbshipit-source-id: 2e88db59b65bda269917a9b0bed17181a4afd281 |

3 years ago |

|

|

a1203edca4 |

Rework VersionStorageInfo::ComputeFilesMarkedForForcedBlobGC a bit (#9548)

Summary: We had a bug in `VersionStorageInfo::ComputeFilesMarkedForForcedBlobGC` related to the edge case where all blob files are part of the "oldest batch", i.e. where only the very oldest file has any linked SSTs. (See https://github.com/facebook/rocksdb/issues/9542) This PR tries to make the logic in this method clearer and also adds a unit test for the problematic case. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9548 Test Plan: `make check` Reviewed By: akankshamahajan15 Differential Revision: D34158959 Pulled By: ltamasi fbshipit-source-id: fbab6d749c569728382aa04f7b7c60c92cca7650 |

3 years ago |

|

|

073ac54739 |

Log blob file space amp and expose it via the rocksdb.blob-stats DB property (#9538)

Summary: Extend the periodic statistics in the info log with the total amount of garbage in blob files and the space amplification pertaining to blob files, where the latter is defined as `total_blob_file_size / (total_blob_file_size - total_blob_garbage_size)`. Also expose the space amp via the `rocksdb.blob-stats` DB property. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9538 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D34126855 Pulled By: ltamasi fbshipit-source-id: 3153e7a0fe0eca440322db273f4deaabaccc51b2 |

3 years ago |

|

|

b2423f8dde |

Fix off-by-one bug in VersionStorageInfo::ComputeFilesMarkedForForcedBlobGC (#9542)

Summary: Fixes a bug introduced in https://github.com/facebook/rocksdb/issues/9526 where we index one position past the end of a `vector`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9542 Test Plan: `make asan_check` Will add a unit test in a separate PR. Reviewed By: akankshamahajan15 Differential Revision: D34145825 Pulled By: ltamasi fbshipit-source-id: 4e87c948407dee489d669a3e41f59e2fcc1228d8 |

3 years ago |

|

|

c5cd31c12b |

Fix TSAN data race in EventListenerTest.MultiCF (#9528)

Summary:

**Context:**

`EventListenerTest.MultiCF` occasionally failed on TSAN data race as below:

```

WARNING: ThreadSanitizer: data race (pid=2047633)

Read of size 8 at 0x7b6000001440 by main thread:

#0 std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::size() const /usr/bin/../lib/gcc/x86_64-linux-gnu/9/../../../../include/c++/9/bits/stl_vector.h:916:40 (listener_test+0x52337c)

https://github.com/facebook/rocksdb/issues/1 rocksdb::EventListenerTest_MultiCF_Test::TestBody() /home/circleci/project/db/listener_test.cc:384:7 (listener_test+0x52337c)

Previous write of size 8 at 0x7b6000001440 by thread T2:

#0 void std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::_M_realloc_insert<rocksdb::DB* const&>(__gnu_cxx::__normal_iterator<rocksdb::DB**, std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> > >, rocksdb::DB* const&) /usr/bin/../lib/gcc/x86_64-linux-gnu/9/../../../../include/c++/9/bits/vector.tcc:503:31 (listener_test+0x550654)

https://github.com/facebook/rocksdb/issues/1 std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::push_back(rocksdb::DB* const&) /usr/bin/../lib/gcc/x86_64-linux-gnu/9/../../../../include/c++/9/bits/stl_vector.h:1195:4 (listener_test+0x550654)

https://github.com/facebook/rocksdb/issues/2 rocksdb::TestFlushListener::OnFlushCompleted(rocksdb::DB*, rocksdb::FlushJobInfo const&) /home/circleci/project/db/listener_test.cc:255:18 (listener_test+0x550654)

```

After investigation, it is due to the following:

(1) `ASSERT_OK(Flush(i));` before the read `std::vector::size()` is supposed to be [blocked on `DB::Impl::bg_cv_` for memtable flush to finish](

|

3 years ago |

|

|

320d9a8e8a |

Use a sorted vector instead of a map to store blob file metadata (#9526)

Summary: The patch replaces `std::map` with a sorted `std::vector` for `VersionStorageInfo::blob_files_` and preallocates the space for the `vector` before saving the `BlobFileMetaData` into the new `VersionStorageInfo` in `VersionBuilder::Rep::SaveBlobFilesTo`. These changes reduce the time the DB mutex is held while saving new `Version`s, and using a sorted `vector` also makes lookups faster thanks to better memory locality. In addition, the patch introduces helper methods `VersionStorageInfo::GetBlobFileMetaData` and `VersionStorageInfo::GetBlobFileMetaDataLB` that can be used by clients to perform lookups in the `vector`, and does some general cleanup in the parts of code where blob file metadata are used. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9526 Test Plan: Ran `make check` and the crash test script for a while. Performance was tested using a load-optimized benchmark (`fillseq` with vector memtable, no WAL) and small file sizes so that a significant number of files are produced: ``` numactl --interleave=all ./db_bench --benchmarks=fillseq --allow_concurrent_memtable_write=false --level0_file_num_compaction_trigger=4 --level0_slowdown_writes_trigger=20 --level0_stop_writes_trigger=30 --max_background_jobs=8 --max_write_buffer_number=8 --db=/data/ltamasi-dbbench --wal_dir=/data/ltamasi-dbbench --num=800000000 --num_levels=8 --key_size=20 --value_size=400 --block_size=8192 --cache_size=51539607552 --cache_numshardbits=6 --compression_max_dict_bytes=0 --compression_ratio=0.5 --compression_type=lz4 --bytes_per_sync=8388608 --cache_index_and_filter_blocks=1 --cache_high_pri_pool_ratio=0.5 --benchmark_write_rate_limit=0 --write_buffer_size=16777216 --target_file_size_base=16777216 --max_bytes_for_level_base=67108864 --verify_checksum=1 --delete_obsolete_files_period_micros=62914560 --max_bytes_for_level_multiplier=8 --statistics=0 --stats_per_interval=1 --stats_interval_seconds=20 --histogram=1 --memtablerep=skip_list --bloom_bits=10 --open_files=-1 --subcompactions=1 --compaction_style=0 --min_level_to_compress=3 --level_compaction_dynamic_level_bytes=true --pin_l0_filter_and_index_blocks_in_cache=1 --soft_pending_compaction_bytes_limit=167503724544 --hard_pending_compaction_bytes_limit=335007449088 --min_level_to_compress=0 --use_existing_db=0 --sync=0 --threads=1 --memtablerep=vector --allow_concurrent_memtable_write=false --disable_wal=1 --enable_blob_files=1 --blob_file_size=16777216 --min_blob_size=0 --blob_compression_type=lz4 --enable_blob_garbage_collection=1 --seed=<some value> ``` Final statistics before the patch: ``` Cumulative writes: 0 writes, 700M keys, 0 commit groups, 0.0 writes per commit group, ingest: 284.62 GB, 121.27 MB/s Interval writes: 0 writes, 334K keys, 0 commit groups, 0.0 writes per commit group, ingest: 139.28 MB, 72.46 MB/s ``` With the patch: ``` Cumulative writes: 0 writes, 760M keys, 0 commit groups, 0.0 writes per commit group, ingest: 308.66 GB, 131.52 MB/s Interval writes: 0 writes, 445K keys, 0 commit groups, 0.0 writes per commit group, ingest: 185.35 MB, 93.15 MB/s ``` Total time to complete the benchmark is 2611 seconds with the patch, down from 2986 secs. Reviewed By: riversand963 Differential Revision: D34082728 Pulled By: ltamasi fbshipit-source-id: fc598abf676dce436734d06bb9d2d99a26a004fc |

3 years ago |

|

|

685044dff2 |

Remove timestamp from key in expected state (#9525)

Summary: The keys as part of write batch read from trace file can contain trailing timestamps. This PR removes them before calling `ExpectedState`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9525 Test Plan: make check make crash_test_with_ts Reviewed By: ajkr Differential Revision: D34082358 Pulled By: riversand963 fbshipit-source-id: 78c925659e2a19e4a8278fb4a8ddf5070e265c04 |

3 years ago |

|

|

9745c68eb1 |

Remove deprecated option new_table_reader_for_compaction_inputs (#9443)

Summary: In RocksDB option new_table_reader_for_compaction_inputs has not effect on Compaction or on the behavior of RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9443 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33788508 Pulled By: akankshamahajan15 fbshipit-source-id: 324ca6f12bfd019e9bd5e1b0cdac39be5c3cec7d |

3 years ago |

|

|

68a9c186d0 |

FilterPolicy API changes for 7.0 (#9501)

Summary: * Inefficient block-based filter is no longer customizable in the public API, though (for now) can still be enabled. * Removed deprecated FilterPolicy::CreateFilter() and FilterPolicy::KeyMayMatch() * Removed `rocksdb_filterpolicy_create()` from C API * Change meaning of nullptr return from GetBuilderWithContext() from "use block-based filter" to "generate no filter in this case." This is a cleaner solution to the proposal in https://github.com/facebook/rocksdb/issues/8250. * Also, when user specifies bits_per_key < 0.5, we now round this down to "no filter" because we expect a filter with >= 80% FP rate is unlikely to be worth the CPU cost of accessing it (esp with cache_index_and_filter_blocks=1 or partition_filters=1). * bits_per_key >= 0.5 and < 1.0 is still rounded up to 1.0 (for 62% FP rate) * This also gives us some support for configuring filters from OPTIONS file as currently saved: `filter_policy=rocksdb.BuiltinBloomFilter`. Opening from such an options file will enable reading filters (an improvement) but not writing new ones. (See Customizable follow-up below.) * Also removed deprecated functions * FilterBitsBuilder::CalculateNumEntry() * FilterPolicy::GetFilterBitsBuilder() * NewExperimentalRibbonFilterPolicy() * Remove default implementations of * FilterBitsBuilder::EstimateEntriesAdded() * FilterBitsBuilder::ApproximateNumEntries() * FilterPolicy::GetBuilderWithContext() * Remove support for "filter_policy=experimental_ribbon" configuration string. * Allow "filter_policy=bloomfilter:n" without bool to discourage use of block-based filter. Some pieces for https://github.com/facebook/rocksdb/issues/9389 Likely follow-up (later PRs): * Refactoring toward FilterPolicy Customizable, so that we can generate filters with same configuration as before when configuring from options file. * Remove support for user enabling block-based filter (ignore `bool use_block_based_builder`) * Some months after this change, we could even remove read support for block-based filter, because it is not critical to DB data preservation. * Make FilterBitsBuilder::FinishV2 to avoid `using FilterBitsBuilder::Finish` mess and add support for specifying a MemoryAllocator (for cache warming) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9501 Test Plan: A number of obsolete tests deleted and new tests or test cases added or updated. Reviewed By: hx235 Differential Revision: D34008011 Pulled By: pdillinger fbshipit-source-id: a39a720457c354e00d5b59166b686f7f59e392aa |

3 years ago |

|

|

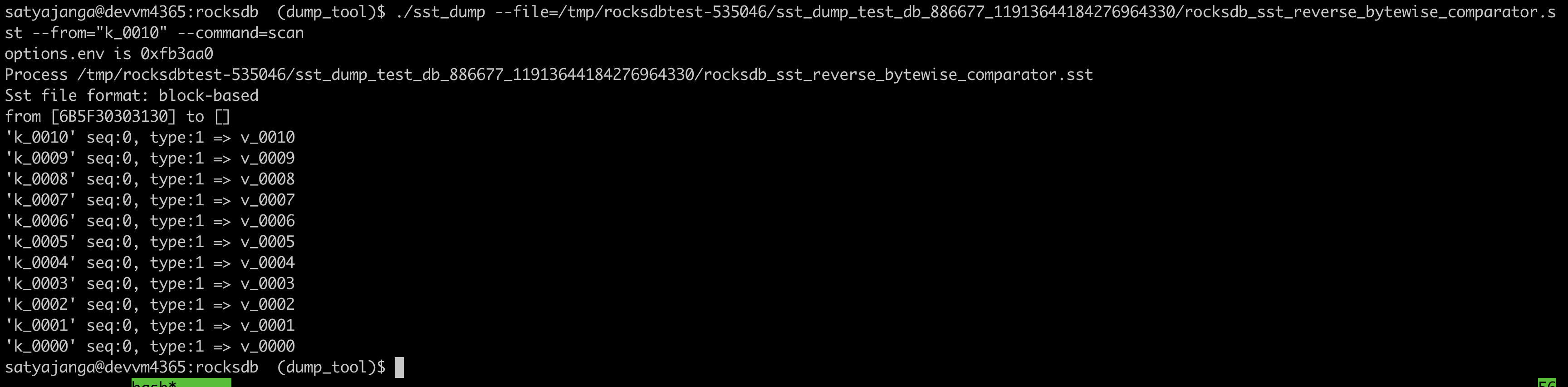

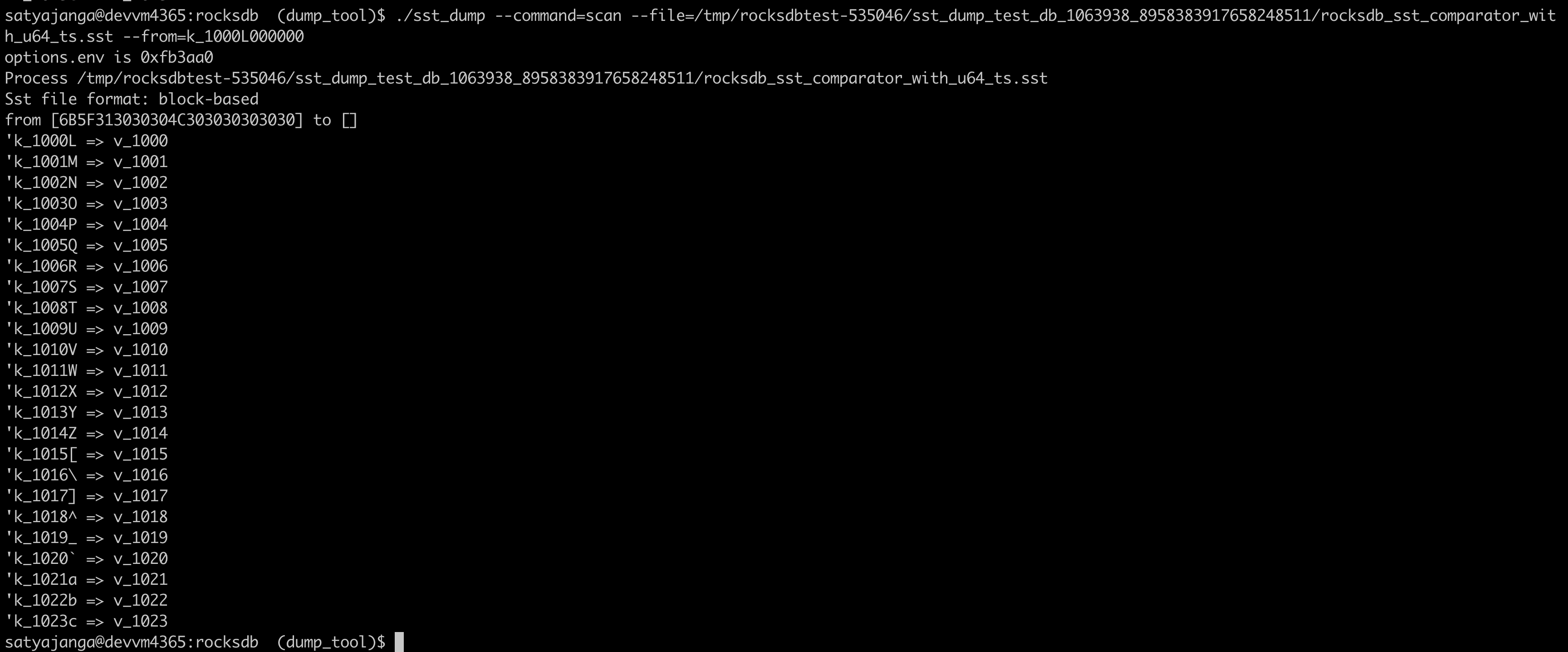

036bbab6f7 |

Use the comparator from the sst file table properties in sst_dump_tool (#9491)

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536 |

3 years ago |

|

|

5cb137a860 |

Work around some new clang-analyze failures (#9515)

Summary: ... seen only in internal clang-analyze runs after https://github.com/facebook/rocksdb/issues/9481 * Mostly, this works around falsely reported leaks by using std::unique_ptr in some places where clang-analyze was getting confused. (I didn't see any changes in C++17 that could make our Status implementation leak memory.) * Also fixed SetBGError returning address of a stack variable. * Also fixed another false null deref report by adding an assert. Also, use SKIP_LINK=1 to speed up `make analyze` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9515 Test Plan: Was able to reproduce the reported errors locally and verify they're fixed (except SetBGError). Otherwise, existing tests Reviewed By: hx235 Differential Revision: D34054630 Pulled By: pdillinger fbshipit-source-id: 38600ef3da75ddca307dff96b7a1a523c2885c2e |

3 years ago |

|

|

0cc0543893 |

Mitigate the overhead of building the hash of file locations (#9504)

Summary: The patch builds on the refactoring done in https://github.com/facebook/rocksdb/issues/9494 and improves the performance of building the hash of file locations in `VersionStorageInfo` in two ways. First, the hash building is moved from `AddFile` (which is called under the DB mutex) to a separate post-processing step done as part of `PrepareForVersionAppend` (during which the mutex is *not* held). Second, the space necessary for the hash is preallocated to prevent costly reallocation/rehashing operations. These changes mitigate the overhead of the file location hash, which can be significant with certain workloads where the baseline CPU usage is low (see https://github.com/facebook/rocksdb/issues/9351, which is a workload where keys are sorted, WAL is turned off, the vector memtable implementation is used, and there are lots of small SST files). Fixes https://github.com/facebook/rocksdb/issues/9351 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9504 Test Plan: `make check` ``` numactl --interleave=all ./db_bench --benchmarks=fillseq --allow_concurrent_memtable_write=false --level0_file_num_compaction_trigger=4 --level0_slowdown_writes_trigger=20 --level0_stop_writes_trigger=30 --max_background_jobs=8 --max_write_buffer_number=8 --db=/data/ltamasi-dbbench --wal_dir=/data/ltamasi-dbbench --num=800000000 --num_levels=8 --key_size=20 --value_size=400 --block_size=8192 --cache_size=51539607552 --cache_numshardbits=6 --compression_max_dict_bytes=0 --compression_ratio=0.5 --compression_type=lz4 --bytes_per_sync=8388608 --cache_index_and_filter_blocks=1 --cache_high_pri_pool_ratio=0.5 --benchmark_write_rate_limit=0 --write_buffer_size=16777216 --target_file_size_base=16777216 --max_bytes_for_level_base=67108864 --verify_checksum=1 --delete_obsolete_files_period_micros=62914560 --max_bytes_for_level_multiplier=8 --statistics=0 --stats_per_interval=1 --stats_interval_seconds=20 --histogram=1 --bloom_bits=10 --open_files=-1 --subcompactions=1 --compaction_style=0 --level_compaction_dynamic_level_bytes=true --pin_l0_filter_and_index_blocks_in_cache=1 --soft_pending_compaction_bytes_limit=167503724544 --hard_pending_compaction_bytes_limit=335007449088 --min_level_to_compress=0 --use_existing_db=0 --sync=0 --threads=1 --memtablerep=vector --disable_wal=1 --seed=<some_seed> ``` Final statistics before this patch: ``` Cumulative writes: 0 writes, 697M keys, 0 commit groups, 0.0 writes per commit group, ingest: 283.25 GB, 241.08 MB/s Interval writes: 0 writes, 1264K keys, 0 commit groups, 0.0 writes per commit group, ingest: 525.69 MB, 176.67 MB/s ``` With the patch: ``` Cumulative writes: 0 writes, 759M keys, 0 commit groups, 0.0 writes per commit group, ingest: 308.57 GB, 262.63 MB/s Interval writes: 0 writes, 1555K keys, 0 commit groups, 0.0 writes per commit group, ingest: 646.61 MB, 215.11 MB/s ``` Reviewed By: riversand963 Differential Revision: D34014734 Pulled By: ltamasi fbshipit-source-id: acb2703677451d5ccaa7e9d950844b33d240695b |

3 years ago |

|

|

afc280fdfd |

Enhance new cache key testing & comments (#9329)

Summary: Follow-up to https://github.com/facebook/rocksdb/issues/9126 Added new unit tests to validate some of the claims of guaranteed uniqueness within certain large bounds. Also cleaned up the cache_bench -stress-cache-key tool with better comments and description. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9329 Test Plan: no changes to production code Reviewed By: mrambacher Differential Revision: D33269328 Pulled By: pdillinger fbshipit-source-id: 3a2b684a6b2b15f79dc872e563e3d16563be26de |

3 years ago |

|

|

42e0751b3a |

Clean up VersionStorageInfo a bit (#9494)

Summary: The patch does some cleanup in and around `VersionStorageInfo`: * Renames the method `PrepareApply` to `PrepareAppend` in `Version` to make it clear that it is to be called before appending the `Version` to `VersionSet` (via `AppendVersion`), not before applying any `VersionEdit`s. * Introduces a helper method `VersionStorageInfo::PrepareForVersionAppend` (called by `Version::PrepareAppend`) that encapsulates the population of the various derived data structures in `VersionStorageInfo`, and turns the methods computing the derived structures (`UpdateNumNonEmptyLevels`, `CalculateBaseBytes` etc.) into private helpers. * Changes `Version::PrepareAppend` so it only calls `UpdateAccumulatedStats` if the `update_stats` flag is set. (Earlier, this was checked by the callee.) Related to this, it also moves the call to `ComputeCompensatedSizes` to `VersionStorageInfo::PrepareForVersionAppend`. * Updates and cleans up `version_builder_test`, `version_set_test`, and `compaction_picker_test` so `PrepareForVersionAppend` is called anytime a new `VersionStorageInfo` is set up or saved. This cleanup also involves splitting `VersionStorageInfoTest.MaxBytesForLevelDynamic` into multiple smaller test cases. * Fixes up a bunch of comments that were outdated or just plain incorrect. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9494 Test Plan: Ran `make check` and the crash test script for a while. Reviewed By: riversand963 Differential Revision: D33971666 Pulled By: ltamasi fbshipit-source-id: fda52faac7783041126e4f8dec0fe01bdcadf65a |

3 years ago |

|

|

bec9ab4316 |

Remove deprecated option DBOptions::max_mem_compaction_level (#9446)

Summary: In RocksDB, this option was already marked as "NOT SUPPORTED" for a long time, and setting this option does not have any effect on the behavior of RocksDB library. Therefore, we are removing it in the preparations of the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9446 Reviewed By: ajkr Differential Revision: D33793048 Pulled By: bjlemaire fbshipit-source-id: 73316efdb194e90225005246673dae99e65577ae |

3 years ago |

|

|

629e3e1d77 |

Fix spelling in public API (#9490)

Summary: I feel it would be nice if we can fix this spelling error. In `SizeApproximationOptions`, the `include_memtabtles` should be `include_memtables`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9490 Test Plan: make check Reviewed By: hx235 Differential Revision: D33949862 Pulled By: riversand963 fbshipit-source-id: b2be67501b65d4aabb6b8df1bf25eb8d54cc1466 |

3 years ago |

|

|

3122cb4358 |

Revise APIs related to user-defined timestamp (#8946)

Summary: ajkr reminded me that we have a rule of not including per-kv related data in `WriteOptions`. Namely, `WriteOptions` should not include information about "what-to-write", but should just include information about "how-to-write". According to this rule, `WriteOptions::timestamp` (experimental) is clearly a violation. Therefore, this PR removes `WriteOptions::timestamp` for compliance. After the removal, we need to pass timestamp info via another set of APIs. This PR proposes a set of overloaded functions `Put(write_opts, key, value, ts)`, `Delete(write_opts, key, ts)`, and `SingleDelete(write_opts, key, ts)`. Planned to add `Write(write_opts, batch, ts)`, but its complexity made me reconsider doing it in another PR (maybe). For better checking and returning error early, we also add a new set of APIs to `WriteBatch` that take extra `timestamp` information when writing to `WriteBatch`es. These set of APIs in `WriteBatchWithIndex` are currently not supported, and are on our TODO list. Removed `WriteBatch::AssignTimestamps()` and renamed `WriteBatch::AssignTimestamp()` to `WriteBatch::UpdateTimestamps()` since this method require that all keys have space for timestamps allocated already and multiple timestamps can be updated. The constructor of `WriteBatch` now takes a fourth argument `default_cf_ts_sz` which is the timestamp size of the default column family. This will be used to allocate space when calling APIs that do not specify a column family handle. Also, updated `DB::Get()`, `DB::MultiGet()`, `DB::NewIterator()`, `DB::NewIterators()` methods, replacing some assertions about timestamp to returning Status code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8946 Test Plan: make check ./db_bench -benchmarks=fillseq,fillrandom,readrandom,readseq,deleterandom -user_timestamp_size=8 ./db_stress --user_timestamp_size=8 -nooverwritepercent=0 -test_secondary=0 -secondary_catch_up_one_in=0 -continuous_verification_interval=0 Make sure there is no perf regression by running the following ``` ./db_bench_opt -db=/dev/shm/rocksdb -use_existing_db=0 -level0_stop_writes_trigger=256 -level0_slowdown_writes_trigger=256 -level0_file_num_compaction_trigger=256 -disable_wal=1 -duration=10 -benchmarks=fillrandom ``` Before this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.831 micros/op 546235 ops/sec; 60.4 MB/s ``` After this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.820 micros/op 549404 ops/sec; 60.8 MB/s ``` Reviewed By: ltamasi Differential Revision: D33721359 Pulled By: riversand963 fbshipit-source-id: c131561534272c120ffb80711d42748d21badf09 |

3 years ago |

|

|

920386f2b7 |

Detect (new) Bloom/Ribbon Filter construction corruption (#9342)

Summary: Note: rebase on and merge after https://github.com/facebook/rocksdb/pull/9349, https://github.com/facebook/rocksdb/pull/9345, (optional) https://github.com/facebook/rocksdb/pull/9393 **Context:** (Quoted from pdillinger) Layers of information during new Bloom/Ribbon Filter construction in building block-based tables includes the following: a) set of keys to add to filter b) set of hashes to add to filter (64-bit hash applied to each key) c) set of Bloom indices to set in filter, with duplicates d) set of Bloom indices to set in filter, deduplicated e) final filter and its checksum This PR aims to detect corruption (e.g, unexpected hardware/software corruption on data structures residing in the memory for a long time) from b) to e) and leave a) as future works for application level. - b)'s corruption is detected by verifying the xor checksum of the hash entries calculated as the entries accumulate before being added to the filter. (i.e, `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()`) - c) - e)'s corruption is detected by verifying the hash entries indeed exists in the constructed filter by re-querying these hash entries in the filter (i.e, `FilterBitsBuilder::MaybePostVerify()`) after computing the block checksum (except for PartitionFilter, which is done right after each `FilterBitsBuilder::Finish` for impl simplicity - see code comment for more). For this stage of detection, we assume hash entries are not corrupted after checking on b) since the time interval from b) to c) is relatively short IMO. Option to enable this feature of detection is `BlockBasedTableOptions::detect_filter_construct_corruption` which is false by default. **Summary:** - Implemented new functions `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()` and `FilterBitsBuilder::MaybePostVerify()` - Ensured hash entries, final filter and banding and their [cache reservation ](https://github.com/facebook/rocksdb/issues/9073) are released properly despite corruption - See [Filter.construction.artifacts.release.point.pdf ](https://github.com/facebook/rocksdb/files/7923487/Design.Filter.construction.artifacts.release.point.pdf) for high-level design - Bundled and refactored hash entries's related artifact in XXPH3FilterBitsBuilder into `HashEntriesInfo` for better control on lifetime of these artifact during `SwapEntires`, `ResetEntries` - Ensured RocksDB block-based table builder calls `FilterBitsBuilder::MaybePostVerify()` after constructing the filter by `FilterBitsBuilder::Finish()` - When encountering such filter construction corruption, stop writing the filter content to files and mark such a block-based table building non-ok by storing the corruption status in the builder. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9342 Test Plan: - Added new unit test `DBFilterConstructionCorruptionTestWithParam.DetectCorruption` - Included this new feature in `DBFilterConstructionReserveMemoryTestWithParam.ReserveMemory` as this feature heavily touch ReserveMemory's impl - For fallback case, I run `./filter_bench -impl=3 -detect_filter_construct_corruption=true -reserve_table_builder_memory=true -strict_capacity_limit=true -quick -runs 10 | grep 'Build avg'` to make sure nothing break. - Added to `filter_bench`: increased filter construction time by **30%**, mostly by `MaybePostVerify()` - FastLocalBloom - Before change: `./filter_bench -impl=2 -quick -runs 10 | grep 'Build avg'`: **28.86643s** - After change: - `./filter_bench -impl=2 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless): **27.6644s (-4% perf improvement might be due to now we don't drop bloom hash entry in `AddAllEntries` along iteration but in bulk later, same with the bypassing-MaybePostVerify case below)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (expect acceptable increase): **34.41159s (+20%)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (by-passing MaybePostVerify, expect minor increase): **27.13431s (-6%)** - Standard128Ribbon - Before change: `./filter_bench -impl=3 -quick -runs 10 | grep 'Build avg'`: **122.5384s** - After change: - `./filter_bench -impl=3 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless - verified by removing MaybePostVerify under this case and found only +-1ns difference): **124.3588s (+2%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(expect acceptable increase): **159.4946s (+30%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(by-passing MaybePostVerify, expect minor increase) : **125.258s (+2%)** - Added to `db_stress`: `make crash_test`, `./db_stress --detect_filter_construct_corruption=true` - Manually smoke-tested: manually corrupted the filter construction in some db level tests with basic PUT and background flush. As expected, the error did get returned to users in subsequent PUT and Flush status. Reviewed By: pdillinger Differential Revision: D33746928 Pulled By: hx235 fbshipit-source-id: cb056426be5a7debc1cd16f23bc250f36a08ca57 |

3 years ago |

|

|

a495448eea |

Revisit #9118 for compaction outputs (#9480)

Summary: Crash test recently started showing failures as in https://github.com/facebook/rocksdb/issues/9118 but for files created by compaction. This change applies a similar fix. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9480 Test Plan: Updated / extended unit test. (Some re-arranging to do the simpler compaction testing before this special case.) Reviewed By: ltamasi Differential Revision: D33909835 Pulled By: pdillinger fbshipit-source-id: 58e4b44e4ecc2d21e4df2c2d8440ec0633aa1f6c |

3 years ago |

|

|

980b9ff385 |

Add more micro-benchmark tests (#9436)

Summary: * Add more micro-benchmark tests * Expose an API in DBImpl for waiting for compactions (still not visible to the user) * Add argument name for ribbon_bench * remove benchmark run from CI, as it runs too long. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9436 Test Plan: CI Reviewed By: riversand963 Differential Revision: D33777836 Pulled By: jay-zhuang fbshipit-source-id: c05de3bc082cc05b5d019f00b324e774bf4bbd96 |

3 years ago |

|

|

f6d7ec1d02 |

Ignore `total_order_seek` in DB::Get (#9427)

Summary: Apparently setting total_order_seek=true for DB::Get was intended to allow accurate read semantics if the current prefix extractor doesn't match what was used to generate SST files on disk. But since prefix_extractor was made a mutable option in 5.14.0, we have been able to detect this case and provide the correct semantics regardless of the total_order_seek option. Since that time, the option has only made Get() slower in a reasonably common case: prefix_extractor unchanged and whole_key_filtering=false. So this change primarily removes unnecessary effect of total_order_seek on Get. Also cleans up some related comments. Also adds a -total_order_seek option to db_bench and canonicalizes handling of ReadOptions in db_bench so that command line options have the expected association with library features. (There is potential for change in regression test behavior, but the old behavior is likely indefensible, or some other inconsistency would need to be fixed.) TODO in follow-up work: there should be no reason for Get() to depend on current prefix extractor at all. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9427 Test Plan: Unit tests updated. Performance (using db_bench update) Create DB with `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=10000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12 -whole_key_filtering=0` Test with and without `-total_order_seek` on `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=readrandom -num=10000000 -duration=40 -disable_wal=1 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12` Before this change, total_order_seek=false: 25188 ops/sec Before this change, total_order_seek=true: 1222 ops/sec (~20x slower) After this change, total_order_seek=false: 24570 ops/sec After this change, total_order_seek=true: 25012 ops/sec (indistinguishable) Reviewed By: siying Differential Revision: D33753458 Pulled By: pdillinger fbshipit-source-id: bf892f34907a5e407d9c40bd4d42f0adbcbe0014 |

3 years ago |

|

|

42cca28ebb |

Remove deprecated API AdvancedColumnFamilyOptions::rate_limit_delay_max_milliseconds (#9455)

Summary: **Context/Summary:** AdvancedColumnFamilyOptions::rate_limit_delay_max_milliseconds has been marked as deprecated and it's time to actually remove the code. - Keep `soft_rate_limit`/`hard_rate_limit` in `cf_mutable_options_type_info` to prevent throwing `InvalidArgument` in `GetColumnFamilyOptionsFromMap` when reading an option file still with these options (e.g, old option file generated from RocksDB before the deprecation) - Keep `soft_rate_limit`/`hard_rate_limit` in under `OptionsOldApiTest.GetOptionsFromMapTest` to test the case mentioned above. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9455 Test Plan: Rely on my eyeball and CI Reviewed By: ajkr Differential Revision: D33811664 Pulled By: hx235 fbshipit-source-id: 866859427fe710354a90f1095057f80116365ff0 |

3 years ago |

|

|

d10c5c08d3 |

Remove iter_start_seqnum and preserve_deletes (#9430)

Summary: According to https://github.com/facebook/rocksdb/blob/6.27.fb/db/db_impl/db_impl.cc#L2896:L2911 and https://github.com/facebook/rocksdb/blob/6.27.fb/db/db_impl/db_impl_open.cc#L203:L208, we are going to remove `iter_start_seqnum` and `preserve_deletes` starting from RocksDB 7.0 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9430 Test Plan: make check and CI Reviewed By: ajkr Differential Revision: D33753639 Pulled By: riversand963 fbshipit-source-id: c80aab8e8d8fc33e52472fed524ed703d0ffc8b6 |

3 years ago |

|

|

74ccd1931e |

Remove deprecated option DBOptions::skip_log_error_on_recovery (#9434)

Summary: In RocksDB DBOptions::skip_log_error_on_recovery is marked as "NOT SUPPORTED" for a long time, and setting this option does not have any effect on the behavior of RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9434 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33763015 Pulled By: akankshamahajan15 fbshipit-source-id: 11f09643298da6c02d3dcdb090b996f4c3cfdd76 |

3 years ago |

|

|

22321e1027 |

Remove unused API base_background_compactions (#9462)

Summary: The API is deprecated long time ago. Clean up the codebase by removing it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9462 Test Plan: CI, fake release: D33835220 Reviewed By: riversand963 Differential Revision: D33835103 Pulled By: jay-zhuang fbshipit-source-id: 6d2dc12c8e7fdbe2700865a3e61f0e3f78bd8184 |

3 years ago |

|

|

dd203ed604 |

Disallow a combination of options (#9348)

Summary: Disallow `immutable_db_opts.use_direct_io_for_flush_and_compaction == true` and `mutable_db_opts.writable_file_max_buffer_size == 0`, since it causes `WritableFileWriter::Append()` to loop forever and does not make much sense in direct IO. This combination of options itself does not make much sense: asking RocksDB to do direct IO but not allowing RocksDB to allocate a buffer. We should detect this false combination and warn user early, no matter whether the application is running on a platform that supports direct IO or not. In the case of platform **not** supporting direct IO, it's ok if the user learns about this and then finds that direct IO is not supported. One tricky thing: the constructor of `WritableFileWriter` is being used in our unit tests, and it's impossible to return status code from constructor. Since we do not throw, I put an assertion for now. Fortunately, the constructor is not exposed to external applications. Closing https://github.com/facebook/rocksdb/issues/7109 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9348 Test Plan: make check Reviewed By: ajkr Differential Revision: D33371924 Pulled By: riversand963 fbshipit-source-id: 2a3701ab541cee23bffda8a36cdf37b2d235edfa |

3 years ago |

|

|

78aee6fedc |

Remove obsolete backupable_db.h, utility_db.h (#9438)

Summary: This also removes the obsolete names BackupableDBOptions and UtilityDB. API users must now use BackupEngineOptions and DBWithTTL::Open. In C API, `rocksdb_backupable_db_*` is replaced `rocksdb_backup_engine_*`. Similar renaming in Java API. In reference to https://github.com/facebook/rocksdb/issues/9389 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9438 Test Plan: CI Reviewed By: mrambacher Differential Revision: D33780269 Pulled By: pdillinger fbshipit-source-id: 4a6cfc5c1b4c78bcad790b9d3dd13c5fdf4a1fac |

3 years ago |

|

|

ea89c77f27 |

Fix major bug with MultiGet, DeleteRange, and memtable Bloom (#9453)

Summary: MemTable::MultiGet was not considering range tombstones before querying Bloom filter. This means range tombstones would be skipped for keys (or prefixes) with no other entries in the memtable. This could cause old values for a key (in SST files) to still show up until the range tombstone covering it has been flushed. This is fixed by essentially disabling the memtable Bloom filter when there are any range tombstones. (This could be better optimized in the future, but good enough for now.) Did some other cleanup/optimization in the same code to (more than) offset the cost of checking on range tombstones in more cases. There is now notable improvement when memtable_whole_key_filtering and prefix_extractor are used together (unusual), and this makes MultiGet closer to the Get implementation. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9453 Test Plan: new unit test added. Added memtable Bloom to crash test. Performance testing -------------------- Build WAL-only DB (recovers to memtable): ``` TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=1000000 -write_buffer_size=250000000 ``` Query test command, to maximize sensitivity to the changed code: ``` TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=multireadrandom -num=10000000 -write_buffer_size=250000000 -memtable_bloom_size_ratio=0.015 -multiread_batched -batch_size=24 -threads=8 -memtable_whole_key_filtering=$MWKF -prefix_size=$PXS ``` (Note -num here is 10x larger for mostly memtable misses) Before & after run simultaneously, average over 10 iterations per data point, ops/sec. MWKF=0 PXS=0 (Bloom disabled) Before: 5724844 After: 6722066 MWKF=0 PXS=7 (prefixes hardly unique; Bloom not useful) Before: 9981319 After: 10237990 MWKF=0 PXS=8 (prefixes unique; Bloom useful) Before: 12081715 After: 12117603 MWKF=1 PXS=0 (whole key Bloom useful) Before: 11944354 After: 12096085 MWKF=1 PXS=7 (whole key Bloom useful in new version; prefixes not useful in old version) Before: 9444299 After: 11826029 MWKF=1 PXS=7 (whole key Bloom useful in new version; prefixes useful in old version) Before: 11784465 After: 11778591 Only in this last case is the 'before' *slightly* faster, perhaps because hashing prefixes is slightly faster than hashing whole keys. Otherwise, 'after' is faster. Reviewed By: ajkr Differential Revision: D33805025 Pulled By: pdillinger fbshipit-source-id: 597523cae4f4eafdf6ae6bb2bc6cb46f83b017bf |

3 years ago |