Tag:

Branch:

Tree:

3bdbf67e1a

main

oxigraph-8.1.1

oxigraph-8.3.2

oxigraph-main

${ noResults }

1207 Commits (3bdbf67e1aa25b5517e2e169a0c7602fbec6279e)

| Author | SHA1 | Message | Date |

|---|---|---|---|

|

|

3bdbf67e1a |

Fix race condition caused by concurrent accesses to forceMmapOff_ when opening Posix WritableFile (#9685)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9685 Our TSAN reports a race condition as follows when running test ``` gtest-parallel -r 100 ./external_sst_file_test --gtest_filter=ExternalSSTFileTest.MultiThreaded ``` leads to the following ``` WARNING: ThreadSanitizer: data race (pid=2683148) Write of size 1 at 0x556fede63340 by thread T7: #0 rocksdb::(anonymous namespace)::PosixFileSystem::OpenWritableFile(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, rocksdb::FileOptions const&, bool, std::unique_ptr<rocksdb::FSWritableFile, std::default_delete<rocksdb::FSWritableFile> >*, rocksdb::IODebugContext*) internal_repo_rocksdb/repo/env/fs_posix.cc:334 (external_sst_file_test+0xb61ac4) #1 rocksdb::(anonymous namespace)::PosixFileSystem::ReopenWritableFile(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, rocksdb::FileOptions const&, std::unique_ptr<rocksdb::FSWritableFile, std::default_delete<rocksdb::FSWritableFile> >*, rocksdb::IODebugContext*) internal_repo_rocksdb/repo/env/fs_posix.cc:382 (external_sst_file_test+0xb5ba96) #2 rocksdb::CompositeEnv::ReopenWritableFile(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::unique_ptr<rocksdb::WritableFile, std::default_delete<rocksdb::WritableFile> >*, rocksdb::EnvOptions const&) internal_repo_rocksdb/repo/env/composite_env.cc:334 (external_sst_file_test+0xa6ab7f) #3 rocksdb::EnvWrapper::ReopenWritableFile(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::unique_ptr<rocksdb::WritableFile, std::default_delete<rocksdb::WritableFile> >*, rocksdb::EnvOptions const&) internal_repo_rocksdb/repo/include/rocksdb/env.h:1428 (external_sst_file_test+0x561f3e) Previous read of size 1 at 0x556fede63340 by thread T4: #0 rocksdb::(anonymous namespace)::PosixFileSystem::OpenWritableFile(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, rocksdb::FileOptions const&, bool, std::unique_ptr<rocksdb::FSWritableFile, std::default_delete<rocksdb::FSWritableFile> >*, rocksdb::IODebugContext*) internal_repo_rocksdb/repo/env/fs_posix.cc:328 (external_sst_file_test+0xb61a70) #1 rocksdb::(anonymous namespace)::PosixFileSystem::ReopenWritableFile(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator ... ``` Fix by making sure the following block gets executed only once: ``` if (!checkedDiskForMmap_) { // this will be executed once in the program's lifetime. // do not use mmapWrite on non ext-3/xfs/tmpfs systems. if (!SupportsFastAllocate(fname)) { forceMmapOff_ = true; } checkedDiskForMmap_ = true; } ``` Reviewed By: pdillinger Differential Revision: D34780308 fbshipit-source-id: b761f66b24c8b5b8389d86ea371c8542b8d869d5 |

3 years ago |

|

|

a88d8795ec |

Expand auto recovery to background read errors (#9679)

Summary: Fix and enhance the background error recovery logic to handle the following situations - 1. Background read errors during flush/compaction (previously was resulting in unrecoverable state) 2. Fix auto recovery failure on read/write errors during atomic flush. It was failing due to a bug in setting the resuming_from_bg_err variable in AtomicFlushMemTablesToOutputFiles. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9679 Test Plan: Add new unit tests in error_handler_fs_test Reviewed By: riversand963 Differential Revision: D34770097 Pulled By: anand1976 fbshipit-source-id: 136da973a28d684b9c74bdf668519b0cbbbe1742 |

3 years ago |

|

|

2c8100e60e |

Fix a race condition when disable and enable manual compaction (#9694)

Summary: In https://github.com/facebook/rocksdb/issues/9659, when `DisableManualCompaction()` is issued, the foreground manual compaction thread does not have to wait background compaction thread to finish. Which could be a problem that the user re-enable manual compaction with `EnableManualCompaction()`, it may re-enable the BG compaction which supposed be cancelled. This patch makes the FG compaction wait on `manual_compaction_state.done`, which either be set by BG compaction or Unschedule callback. Then when FG manual compaction thread returns, it should not have BG compaction running. So shared_ptr is no longer needed for `manual_compaction_state`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9694 Test Plan: a StressTest and unittest Reviewed By: ajkr Differential Revision: D34885472 Pulled By: jay-zhuang fbshipit-source-id: e6476175b43e8c59cd49f5c09241036a0716c274 |

3 years ago |

|

|

3da8236837 |

fix: Reusing-Iterator reads stale keys after DeleteRange() performed (#9258)

Summary: fix https://github.com/facebook/rocksdb/issues/9255 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9258 Reviewed By: pdillinger Differential Revision: D34879684 Pulled By: ajkr fbshipit-source-id: 5934f4b7524dc27ecdf1430e0456a0fc02958fc7 |

3 years ago |

|

|

bbdaf63d0f |

Fix a TSAN-reported bug caused by concurrent accesss to std::deque (#9686)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9686 According to https://www.cplusplus.com/reference/deque/deque/back/, " The container is accessed (neither the const nor the non-const versions modify the container). The last element is potentially accessed or modified by the caller. Concurrently accessing or modifying other elements is safe. " Also according to https://www.cplusplus.com/reference/deque/deque/pop_front/, " The container is modified. The first element is modified. Concurrently accessing or modifying other elements is safe (although see iterator validity above). " In RocksDB, we never pop the last element of `DBImpl::alive_log_files_`. We have been exploiting this fact and the above two properties when ensuring correctness when `DBImpl::alive_log_files_` may be accessed concurrently. Specifically, it can be accessed in the write path when db mutex is released. Sometimes, the log_mute_ is held. It can also be accessed in `FindObsoleteFiles()` when db mutex is always held. It can also be accessed during recovery when db mutex is also held. Given the fact that we never pop the last element of alive_log_files_, we currently do not acquire additional locks when accessing it in `WriteToWAL()` as follows ``` alive_log_files_.back().AddSize(log_entry.size()); ``` This is problematic. Check source code of deque.h ``` back() _GLIBCXX_NOEXCEPT { __glibcxx_requires_nonempty(); ... } pop_front() _GLIBCXX_NOEXCEPT { ... if (this->_M_impl._M_start._M_cur != this->_M_impl._M_start._M_last - 1) { ... ++this->_M_impl._M_start._M_cur; } ... } ``` `back()` will actually call `__glibcxx_requires_nonempty()` first. If `__glibcxx_requires_nonempty()` is enabled and not an empty macro, it will call `empty()` ``` bool empty() { return this->_M_impl._M_finish == this->_M_impl._M_start; } ``` You can see that it will access `this->_M_impl._M_start`, racing with `pop_front()`. Therefore, TSAN will actually catch the bug in this case. To be able to use TSAN on our library and unit tests, we should always coordinate concurrent accesses to STL containers properly. We need to pass information about db mutex and log mutex into `WriteToWAL()`, otherwise it's impossible to know which mutex to acquire inside the function. To fix this, we can catch the tail of `alive_log_files_` by reference, so that we do not have to call `back()` in `WriteToWAL()`. Reviewed By: pdillinger Differential Revision: D34780309 fbshipit-source-id: 1def9821f0c437f2736c6a26445d75890377889b |

3 years ago |

|

|

4dff279b19 |

DisableManualCompaction may fail to cancel an unscheduled task (#9659)

Summary: https://github.com/facebook/rocksdb/issues/9625 didn't change the unschedule condition which was waiting for the background thread to clean-up the compaction. make sure we only unschedule the task when it's scheduled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9659 Reviewed By: ajkr Differential Revision: D34651820 Pulled By: jay-zhuang fbshipit-source-id: 23f42081b15ec8886cd81cbf131b116e0c74dc2f |

3 years ago |

|

|

95305c44a1 |

Add OpenAndTrimHistory API to support trimming data with specified timestamp (#9410)

Summary: As disscussed in (https://github.com/facebook/rocksdb/issues/9223), Here added a new API named DB::OpenAndTrimHistory, this API will open DB and trim data to the timestamp specofied by **trim_ts** (The data with newer timestamp than specified trim bound will be removed). This API should only be used at a timestamp-enabled db instance recovery. And this PR implemented a new iterator named HistoryTrimmingIterator to support trimming history with a new API named DB::OpenAndTrimHistory. HistoryTrimmingIterator wrapped around the underlying InternalITerator such that keys whose timestamps newer than **trim_ts** should not be returned to the compaction iterator while **trim_ts** is not null. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9410 Reviewed By: ltamasi Differential Revision: D34410207 Pulled By: riversand963 fbshipit-source-id: e54049dc234eccd673244c566b15df58df5a6236 |

3 years ago |

|

|

3b6dc049f7 |

Support user-defined timestamps in write-committed txns (#9629)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9629 Pessimistic transactions use pessimistic concurrency control, i.e. locking. Keys are locked upon first operation that writes the key or has the intention of writing. For example, `PessimisticTransaction::Put()`, `PessimisticTransaction::Delete()`, `PessimisticTransaction::SingleDelete()` will write to or delete a key, while `PessimisticTransaction::GetForUpdate()` is used by application to indicate to RocksDB that the transaction has the intention of performing write operation later in the same transaction. Pessimistic transactions support two-phase commit (2PC). A transaction can be `Prepared()`'ed and then `Commit()`. The prepare phase is similar to a promise: once `Prepare()` succeeds, the transaction has acquired the necessary resources to commit. The resources include locks, persistence of WAL, etc. Write-committed transaction is the default pessimistic transaction implementation. In RocksDB write-committed transaction, `Prepare()` will write data to the WAL as a prepare section. `Commit()` will write a commit marker to the WAL and then write data to the memtables. While writing to the memtables, different keys in the transaction's write batch will be assigned different sequence numbers in ascending order. Until commit/rollback, the transaction holds locks on the keys so that no other transaction can write to the same keys. Furthermore, the keys' sequence numbers represent the order in which they are committed and should be made visible. This is convenient for us to implement support for user-defined timestamps. Since column families with and without timestamps can co-exist in the same database, a transaction may or may not involve timestamps. Based on this observation, we add two optional members to each `PessimisticTransaction`, `read_timestamp_` and `commit_timestamp_`. If no key in the transaction's write batch has timestamp, then setting these two variables do not have any effect. For the rest of this commit, we discuss only the cases when these two variables are meaningful. read_timestamp_ is used mainly for validation, and should be set before first call to `GetForUpdate()`. Otherwise, the latter will return non-ok status. `GetForUpdate()` calls `TryLock()` that can verify if another transaction has written the same key since `read_timestamp_` till this call to `GetForUpdate()`. If another transaction has indeed written the same key, then validation fails, and RocksDB allows this transaction to refine `read_timestamp_` by increasing it. Note that a transaction can still use `Get()` with a different timestamp to read, but the result of the read should not be used to determine data that will be written later. commit_timestamp_ must be set after finishing writing and before transaction commit. This applies to both 2PC and non-2PC cases. In the case of 2PC, it's usually set after prepare phase succeeds. We currently require that the commit timestamp be chosen after all keys are locked. This means we disallow the `TransactionDB`-level APIs if user-defined timestamp is used by the transaction. Specifically, calling `PessimisticTransactionDB::Put()`, `PessimisticTransactionDB::Delete()`, `PessimisticTransactionDB::SingleDelete()`, etc. will return non-ok status because they specify timestamps before locking the keys. Users are also prompted to use the `Transaction` APIs when they receive the non-ok status. Reviewed By: ltamasi Differential Revision: D31822445 fbshipit-source-id: b82abf8e230216dc89cc519564a588224a88fd43 |

3 years ago |

|

|

ca0ef54f16 |

Rate-limit automatic WAL flush after each user write (#9607)

Summary: **Context:** WAL flush is currently not rate-limited by `Options::rate_limiter`. This PR is to provide rate-limiting to auto WAL flush, the one that automatically happen after each user write operation (i.e, `Options::manual_wal_flush == false`), by adding `WriteOptions::rate_limiter_options`. Note that we are NOT rate-limiting WAL flush that do NOT automatically happen after each user write, such as `Options::manual_wal_flush == true + manual FlushWAL()` (rate-limiting multiple WAL flushes), for the benefits of: - being consistent with [ReadOptions::rate_limiter_priority](https://github.com/facebook/rocksdb/blob/7.0.fb/include/rocksdb/options.h#L515) - being able to turn off some WAL flush's rate-limiting but not all (e.g, turn off specific the WAL flush of a critical user write like a service's heartbeat) `WriteOptions::rate_limiter_options` only accept `Env::IO_USER` and `Env::IO_TOTAL` currently due to an implementation constraint. - The constraint is that we currently queue parallel writes (including WAL writes) based on FIFO policy which does not factor rate limiter priority into this layer's scheduling. If we allow lower priorities such as `Env::IO_HIGH/MID/LOW` and such writes specified with lower priorities occurs before ones specified with higher priorities (even just by a tiny bit in arrival time), the former would have blocked the latter, leading to a "priority inversion" issue and contradictory to what we promise for rate-limiting priority. Therefore we only allow `Env::IO_USER` and `Env::IO_TOTAL` right now before improving that scheduling. A pre-requisite to this feature is to support operation-level rate limiting in `WritableFileWriter`, which is also included in this PR. **Summary:** - Renamed test suite `DBRateLimiterTest to DBRateLimiterOnReadTest` for adding a new test suite - Accept `rate_limiter_priority` in `WritableFileWriter`'s private and public write functions - Passed `WriteOptions::rate_limiter_options` to `WritableFileWriter` in the path of automatic WAL flush. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9607 Test Plan: - Added new unit test to verify existing flush/compaction rate-limiting does not break, since `DBTest, RateLimitingTest` is disabled and current db-level rate-limiting tests focus on read only (e.g, `db_rate_limiter_test`, `DBTest2, RateLimitedCompactionReads`). - Added new unit test `DBRateLimiterOnWriteWALTest, AutoWalFlush` - `strace -ftt -e trace=write ./db_bench -benchmarks=fillseq -db=/dev/shm/testdb -rate_limit_auto_wal_flush=1 -rate_limiter_bytes_per_sec=15 -rate_limiter_refill_period_us=1000000 -write_buffer_size=100000000 -disable_auto_compactions=1 -num=100` - verified that WAL flush(i.e, system-call _write_) were chunked into 15 bytes and each _write_ was roughly 1 second apart - verified the chunking disappeared when `-rate_limit_auto_wal_flush=0` - crash test: `python3 tools/db_crashtest.py blackbox --disable_wal=0 --rate_limit_auto_wal_flush=1 --rate_limiter_bytes_per_sec=10485760 --interval=10` killed as normal **Benchmarked on flush/compaction to ensure no performance regression:** - compaction with rate-limiting (see table 1, avg over 1280-run): pre-change: **915635 micros/op**; post-change: **907350 micros/op (improved by 0.106%)** ``` #!/bin/bash TEST_TMPDIR=/dev/shm/testdb START=1 NUM_DATA_ENTRY=8 N=10 rm -f compact_bmk_output.txt compact_bmk_output_2.txt dont_care_output.txt for i in $(eval echo "{$START..$NUM_DATA_ENTRY}") do NUM_RUN=$(($N*(2**($i-1)))) for j in $(eval echo "{$START..$NUM_RUN}") do ./db_bench --benchmarks=fillrandom -db=$TEST_TMPDIR -disable_auto_compactions=1 -write_buffer_size=6710886 > dont_care_output.txt && ./db_bench --benchmarks=compact -use_existing_db=1 -db=$TEST_TMPDIR -level0_file_num_compaction_trigger=1 -rate_limiter_bytes_per_sec=100000000 | egrep 'compact' done > compact_bmk_output.txt && awk -v NUM_RUN=$NUM_RUN '{sum+=$3;sum_sqrt+=$3^2}END{print sum/NUM_RUN, sqrt(sum_sqrt/NUM_RUN-(sum/NUM_RUN)^2)}' compact_bmk_output.txt >> compact_bmk_output_2.txt done ``` - compaction w/o rate-limiting (see table 2, avg over 640-run): pre-change: **822197 micros/op**; post-change: **823148 micros/op (regressed by 0.12%)** ``` Same as above script, except that -rate_limiter_bytes_per_sec=0 ``` - flush with rate-limiting (see table 3, avg over 320-run, run on the [patch]( |

3 years ago |

|

|

36aec94d85 |

`compression_per_level` should be used for flush and changeable (#9658)

Summary: - Make `compression_per_level` dynamical changeable with `SetOptions`; - Fix a bug that `compression_per_level` is not used for flush; Pull Request resolved: https://github.com/facebook/rocksdb/pull/9658 Test Plan: CI Reviewed By: ajkr Differential Revision: D34700749 Pulled By: jay-zhuang fbshipit-source-id: a23b9dfa7ad03d393c1d71781d19e91de796f49c |

3 years ago |

|

|

4a776d81cc |

Dynamic toggling of BlockBasedTableOptions::detect_filter_construct_corruption (#9654)

Summary: **Context/Summary:** As requested, `BlockBasedTableOptions::detect_filter_construct_corruption` can now be dynamically configured using `DB::SetOptions` after this PR Pull Request resolved: https://github.com/facebook/rocksdb/pull/9654 Test Plan: - New unit test Reviewed By: pdillinger Differential Revision: D34622609 Pulled By: hx235 fbshipit-source-id: c06773ef3d029e6bf1724d3a72dffd37a8ec66d9 |

3 years ago |

|

|

659a16d52b |

Fix bug causing incorrect data returned by snapshot read (#9648)

Summary: This bug affects use cases that meet the following conditions - (has only the default column family or disables WAL) and - has at least one event listener - atomic flush is NOT affected. If the above conditions meet, then RocksDB can release the db mutex before picking all the existing memtables to flush. In the meantime, a snapshot can be created and db's sequence number can still be incremented. The upcoming flush will ignore this snapshot. A later read using this snapshot can return incorrect result. To fix this issue, we call the listeners callbacks after picking the memtables so that we avoid creating snapshots during this interval. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9648 Test Plan: make check Reviewed By: ajkr Differential Revision: D34555456 Pulled By: riversand963 fbshipit-source-id: 1438981e9f069a5916686b1a0ad7627f734cf0ee |

3 years ago |

|

|

db8647969d |

Unschedule manual compaction from thread-pool queue (#9625)

Summary: PR https://github.com/facebook/rocksdb/issues/9557 introduced a race condition between manual compaction foreground thread and background compaction thread. This PR adds the ability to really unschedule manual compaction from thread-pool queue by differentiate tag name for manual compaction and other tasks. Also fix an issue that db `close()` didn't cancel the manual compaction thread. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9625 Test Plan: unittest not hang Reviewed By: ajkr Differential Revision: D34410811 Pulled By: jay-zhuang fbshipit-source-id: cb14065eabb8cf1345fa042b5652d4f788c0c40c |

3 years ago |

|

|

33742c2a9f |

Remove BlockBasedTableOptions.hash_index_allow_collision (#9454)

Summary: BlockBasedTableOptions.hash_index_allow_collision is already deprecated and has no effect. Delete it for preparing 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9454 Test Plan: Run all existing tests. Reviewed By: ajkr Differential Revision: D33805827 fbshipit-source-id: ed8a436d1d083173ec6aef2a762ba02e1eefdc9d |

3 years ago |

|

|

9ed96703d1 |

Add support for BlobDB to ldb (#9630)

Summary: Add the configuration options and help messages of BlobDB to `ldb` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9630 Test Plan: `python ./tools/ldb_test.py` Reviewed By: ltamasi Differential Revision: D34443176 Pulled By: changneng fbshipit-source-id: 5b3f185cdfc2561e06dd37215c7edfbca07dbe80 |

3 years ago |

|

|

87a8b3c8af |

Deflake DBErrorHandlingFSTest.MultiCFWALWriteError (#9496)

Summary: **Context:** As part of https://github.com/facebook/rocksdb/pull/6949, file deletion is disabled for faulty database on the IOError of MANIFEST write/sync and [re-enabled again during `DBImpl::Resume()` if all recovery is completed]( |

3 years ago |

|

|

6f12599863 |

Support WBWI for keys having timestamps (#9603)

Summary: This PR supports inserting keys to a `WriteBatchWithIndex` for column families that enable user-defined timestamps and reading the keys back. **The index does not have timestamps.** Writing a key to WBWI is unchanged, because the underlying WriteBatch already supports it. When reading the keys back, we need to make sure to distinguish between keys with and without timestamps before comparison. When user calls `GetFromBatchAndDB()`, no timestamp is needed to query the batch, but a timestamp has to be provided to query the db. The assumption is that data in the batch must be newer than data from the db. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9603 Test Plan: make check Reviewed By: ltamasi Differential Revision: D34354849 Pulled By: riversand963 fbshipit-source-id: d25d1f84e2240ce543e521fa30595082fb8db9a0 |

3 years ago |

|

|

7ae4da924a |

Update HISTORY.md and version.h for 7.0 release (#9609)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9609 Reviewed By: riversand963 Differential Revision: D34370309 Pulled By: ajkr fbshipit-source-id: 5fc9306439aefa4b2d61d847534ea6758c30b6a5 |

3 years ago |

|

|

3699b171e4 |

Change enum SizeApproximationFlags to enum class (#9604)

Summary: Change enum SizeApproximationFlags to enum and class and add overloaded operators for the transition between enum class and uint8_t Pull Request resolved: https://github.com/facebook/rocksdb/pull/9604 Test Plan: Circle CI jobs Reviewed By: riversand963 Differential Revision: D34360281 Pulled By: akankshamahajan15 fbshipit-source-id: 6351dfdb717ae3c4530d324c3d37a8ecb01dd1ef |

3 years ago |

|

|

d3a2f284d9 |

Add Temperature info in `NewSequentialFile()` (#9499)

Summary: Add Temperature hints information from RocksDB in API `NewSequentialFile()`. backup and checkpoint operations need to open the source files with `NewSequentialFile()`, which will have the temperature hints. Other operations are not covered. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9499 Test Plan: Added unittest Reviewed By: pdillinger Differential Revision: D34006115 Pulled By: jay-zhuang fbshipit-source-id: 568b34602b76520e53128672bd07e9d886786a2f |

3 years ago |

|

|

559525dcbb |

Add Async Read and Poll APIs in FileSystem (#9564)

Summary: This PR adds support for new APIs Async Read that reads the data asynchronously and Poll API that checks if requested read request has completed or not. Usage: In RocksDB, we are currently planning to prefetch data asynchronously during sequential scanning and RocksDB will call these APIs to prefetch more data in advanced. Design: - ReadAsync API submits the read request to underlying FileSystem in order to read data asynchronously. When read request is completed, callback function will be called. cb_arg is used by RocksDB to track the original request submitted and IOHandle is used by FileSystem to keep track of IO requests at their level. - The Poll API is added in FileSystem because the call could end up handling completions for multiple different files which is not specific to a FSRandomAccessFile instance. There could be multiple outstanding file reads from different files in future and they can complete in any order. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9564 Test Plan: Test will be added in separate PR. Reviewed By: anand1976 Differential Revision: D34226216 Pulled By: akankshamahajan15 fbshipit-source-id: 95e64edafb17f543f7232421d51e2665a3267f69 |

3 years ago |

|

|

f4b2500e12 |

Add last level and non-last level read statistics (#9519)

Summary: Add last level and non-last level read statistics: ``` LAST_LEVEL_READ_BYTES, LAST_LEVEL_READ_COUNT, NON_LAST_LEVEL_READ_BYTES, NON_LAST_LEVEL_READ_COUNT, ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9519 Test Plan: added unittest Reviewed By: siying Differential Revision: D34062539 Pulled By: jay-zhuang fbshipit-source-id: 908644c3050878b4234febdc72e3e19d89af38cd |

3 years ago |

|

|

30b08878d8 |

Make FilterPolicy Customizable (#9590)

Summary: Make FilterPolicy into a Customizable class. Allow new FilterPolicy to be discovered through the ObjectRegistry Pull Request resolved: https://github.com/facebook/rocksdb/pull/9590 Reviewed By: pdillinger Differential Revision: D34327367 Pulled By: mrambacher fbshipit-source-id: 37e7edac90ec9457422b72f359ab8ef48829c190 |

3 years ago |

|

|

2fbc672732 |

Add temperature information to the event listener callbacks (#9591)

Summary: RocksDB try to provide temperature information in the event listener callbacks. The information is not guaranteed, as some operation like backup won't have these information. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9591 Test Plan: Added unittest Reviewed By: siying, pdillinger Differential Revision: D34309339 Pulled By: jay-zhuang fbshipit-source-id: 4aca4f270f99fa49186d85d300da42594663d6d7 |

3 years ago |

|

|

54fb2a8975 |

Change type of cache buffer passed to `Cache::CreateCallback()` to `const void*` (#9595)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9595 Reviewed By: riversand963 Differential Revision: D34329906 Pulled By: ajkr fbshipit-source-id: 508601856fa9bee4d40f4a68d14d333ef2143d40 |

3 years ago |

|

|

48b9de4a3e |

Mark more OldDefaults as deprecated (#9594)

Summary: `ColumnFamilyOptions::OldDefaults` and `DBOptions::OldDefaults` now deprecated. Were previously overlooked with `Options::OldDefaults` in https://github.com/facebook/rocksdb/issues/9363 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9594 Test Plan: comments only Reviewed By: jay-zhuang Differential Revision: D34318592 Pulled By: pdillinger fbshipit-source-id: 773c97a61e2a8290ae154f363dd61c1f35a9dd16 |

3 years ago |

|

|

725833a424 |

Hide FilterBits{Builder,Reader} from public API (#9592)

Summary: We don't have any evidence of people using these to build custom filters. The recommended way of customizing filter handling is to defer to various built-in policies based on FilterBuildingContext (e.g. to build Monkey filtering policy). With old API, we have evidence of people modifying keys going into filter, but most cases of that can be handled with prefix_extractor. Having FilterBitsBuilder+Reader in the public API is an ogoing hinderance to code evolution (e.g. recent new Finish and MaybePostVerify), and so this change removes them from the public API for 7.0. Maybe they will come back in some form later, but lacking evidence of them providing value in the public API, we want to take back more freedom to evolve these. With this moved to internal-only, there is no rush to clean up the complex Finish signatures, or add memory allocator support, but doing so is much easier with them out of public API, for example to use CacheAllocationPtr without exposing it in the public API. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9592 Test Plan: cosmetic changes only Reviewed By: hx235 Differential Revision: D34315470 Pulled By: pdillinger fbshipit-source-id: 03e03bb66a72c73df2c464d2dbbbae906dd8f99b |

3 years ago |

|

|

627deb7ceb |

Fix some MultiGet batching stats (#9583)

Summary: The NUM_INDEX_AND_FILTER_BLOCKS_READ_PER_LEVEL, NUM_DATA_BLOCKS_READ_PER_LEVEL, and NUM_SST_READ_PER_LEVEL stats were being recorded only when the last file in a level happened to have hits. They are supposed to be updated for every level. Also, there was some overcounting of GetContextStats. This PR fixes both the problems. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9583 Test Plan: Update the unit test in db_basic_test Reviewed By: akankshamahajan15 Differential Revision: D34308044 Pulled By: anand1976 fbshipit-source-id: b3b36020fda26ba91bc6e0e47d52d58f4d7f656e |

3 years ago |

|

|

f092f0fa5d |

Add subcompaction event API (#9311)

Summary: Add event callback for subcompaction and adds a sub_job_id to identify it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9311 Reviewed By: ajkr Differential Revision: D33892707 Pulled By: jay-zhuang fbshipit-source-id: 57b5e5e594d61b2112d480c18a79a36751f65a4e |

3 years ago |

|

|

a86ee02d34 |

Clarify compiler support release note (#9593)

Summary: in HISTORY.md Pull Request resolved: https://github.com/facebook/rocksdb/pull/9593 Test Plan: release note only Reviewed By: siying Differential Revision: D34318189 Pulled By: pdillinger fbshipit-source-id: ba2eca8bede2d42a3fefd10b954b92cb54f831f2 |

3 years ago |

|

|

babe56ddba |

Add rate limiter priority to ReadOptions (#9424)

Summary: Users can set the priority for file reads associated with their operation by setting `ReadOptions::rate_limiter_priority` to something other than `Env::IO_TOTAL`. Rate limiting `VerifyChecksum()` and `VerifyFileChecksums()` is the motivation for this PR, so it also includes benchmarks and minor bug fixes to get that working. `RandomAccessFileReader::Read()` already had support for rate limiting compaction reads. I changed that rate limiting to be non-specific to compaction, but rather performed according to the passed in `Env::IOPriority`. Now the compaction read rate limiting is supported by setting `rate_limiter_priority = Env::IO_LOW` on its `ReadOptions`. There is no default value for the new `Env::IOPriority` parameter to `RandomAccessFileReader::Read()`. That means this PR goes through all callers (in some cases multiple layers up the call stack) to find a `ReadOptions` to provide the priority. There are TODOs for cases I believe it would be good to let user control the priority some day (e.g., file footer reads), and no TODO in cases I believe it doesn't matter (e.g., trace file reads). The API doc only lists the missing cases where a file read associated with a provided `ReadOptions` cannot be rate limited. For cases like file ingestion checksum calculation, there is no API to provide `ReadOptions` or `Env::IOPriority`, so I didn't count that as missing. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9424 Test Plan: - new unit tests - new benchmarks on ~50MB database with 1MB/s read rate limit and 100ms refill interval; verified with strace reads are chunked (at 0.1MB per chunk) and spaced roughly 100ms apart. - setup command: `./db_bench -benchmarks=fillrandom,compact -db=/tmp/testdb -target_file_size_base=1048576 -disable_auto_compactions=true -file_checksum=true` - benchmarks command: `strace -ttfe pread64 ./db_bench -benchmarks=verifychecksum,verifyfilechecksums -use_existing_db=true -db=/tmp/testdb -rate_limiter_bytes_per_sec=1048576 -rate_limit_bg_reads=1 -rate_limit_user_ops=true -file_checksum=true` - crash test using IO_USER priority on non-validation reads with https://github.com/facebook/rocksdb/issues/9567 reverted: `python3 tools/db_crashtest.py blackbox --max_key=1000000 --write_buffer_size=524288 --target_file_size_base=524288 --level_compaction_dynamic_level_bytes=true --duration=3600 --rate_limit_bg_reads=true --rate_limit_user_ops=true --rate_limiter_bytes_per_sec=10485760 --interval=10` Reviewed By: hx235 Differential Revision: D33747386 Pulled By: ajkr fbshipit-source-id: a2d985e97912fba8c54763798e04f006ccc56e0c |

3 years ago |

|

|

1cda273dc3 |

Fix a silent data loss for write-committed txn (#9571)

Summary:

The following sequence of events can cause silent data loss for write-committed

transactions.

```

Time thread 1 bg flush

| db->Put("a")

| txn = NewTxn()

| txn->Put("b", "v")

| txn->Prepare() // writes only to 5.log

| db->SwitchMemtable() // memtable 1 has "a"

| // close 5.log,

| // creates 8.log

| trigger flush

| pick memtable 1

| unlock db mutex

| write new sst

| txn->ctwb->Put("gtid", "1") // writes 8.log

| txn->Commit() // writes to 8.log

| // writes to memtable 2

| compute min_log_number_to_keep_2pc, this

| will be 8 (incorrect).

|

| Purge obsolete wals, including 5.log

|

V

```

At this point, writes of txn exists only in memtable. Close db without flush because db thinks the data in

memtable are backed by log. Then reopen, the writes are lost except key-value pair {"gtid"->"1"},

only the commit marker of txn is in 8.log

The reason lies in `PrecomputeMinLogNumberToKeep2PC()` which calls `FindMinPrepLogReferencedByMemTable()`.

In the above example, when bg flush thread tries to find obsolete wals, it uses the information

computed by `PrecomputeMinLogNumberToKeep2PC()`. The return value of `PrecomputeMinLogNumberToKeep2PC()`

depends on three components

- `PrecomputeMinLogNumberToKeepNon2PC()`. This represents the WAL that has unflushed data. As the name of this method suggests, it does not account for 2PC. Although the keys reside in the prepare section of a previous WAL, the column family references the current WAL when they are actually inserted into the memtable during txn commit.

- `prep_tracker->FindMinLogContainingOutstandingPrep()`. This represents the WAL with a prepare section but the txn hasn't committed.

- `FindMinPrepLogReferencedByMemTable()`. This represents the WAL on which some memtables (mutable and immutable) depend for their unflushed data.

The bug lies in `FindMinPrepLogReferencedByMemTable()`. Originally, this function skips checking the column families

that are being flushed, but the unit test added in this PR shows that they should not be. In this unit test, there is

only the default column family, and one of its memtables has unflushed data backed by a prepare section in 5.log.

We should return this information via `FindMinPrepLogReferencedByMemTable()`.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9571

Test Plan:

```

./transaction_test --gtest_filter=*/TransactionTest.SwitchMemtableDuringPrepareAndCommit_WC/*

make check

```

Reviewed By: siying

Differential Revision: D34235236

Pulled By: riversand963

fbshipit-source-id: 120eb21a666728a38dda77b96276c6af72b008b1

|

3 years ago |

|

|

31031c0210 |

Remove deprecated RemoteCompaction API (#9570)

Summary: Remove deprecated remote compaction APIs `CompactionService::Start()` and `CompactionService::WaitForComplete()`. Please use `CompactionService::StartV2()`, `CompactionService::WaitForCompleteV2()` instead, which provides the same information plus extra data like priority, db_id, etc. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9570 Test Plan: CI Reviewed By: riversand963 Differential Revision: D34255969 Pulled By: jay-zhuang fbshipit-source-id: c6376eccdd1123f1c42ab53771b5f65f8160c325 |

3 years ago |

|

|

c42d0cf862 |

Add support for decimals to PatternEntry (#9577)

Summary: Add support for doubles to ObjectLibrary::PatternEntry. This support will allow patterns containing a non-integer number to be parsed correctly. Added appropriate test cases to cover this new option. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9577 Reviewed By: pdillinger Differential Revision: D34269763 Pulled By: mrambacher fbshipit-source-id: b5ce16cbd3665c2974ec0f3412ef2b403ef8b155 |

3 years ago |

|

|

a0c569ee1d |

Cancel manual compaction in thread-pool queue (#9557)

Summary: Fix `DisableManualCompaction()` has to wait scheduled manual compaction to start the execution to cancel the job. When a manual compaction in thread-pool queue is cancel, set the job is_canceled to true and clean the resource. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9557 Test Plan: added unittest that will hang without the change Reviewed By: ajkr Differential Revision: D34214910 Pulled By: jay-zhuang fbshipit-source-id: 89dbaee78ddf26eb13ce862c2b15f4a098b36a78 |

3 years ago |

|

|

57418aba51 |

Fix a typo in HISTORY.md for 7.0 (#9574)

Summary: See PR Pull Request resolved: https://github.com/facebook/rocksdb/pull/9574 Reviewed By: ajkr, mrambacher Differential Revision: D34239184 Pulled By: hx235 fbshipit-source-id: 6b5cc70d86b804ab4645bc2cd0243961c2fb00ee |

3 years ago |

|

|

443d8ef094 |

Fix PinSelf() read-after-free in DB::GetMergeOperands() (#9507)

Summary:

**Context:**

Running the new test `DBMergeOperandTest.MergeOperandReadAfterFreeBug` prior to this fix surfaces the read-after-free bug of PinSef() as below:

```

READ of size 8 at 0x60400002529d thread T0

https://github.com/facebook/rocksdb/issues/5 0x7f199a in rocksdb::PinnableSlice::PinSelf(rocksdb::Slice const&) include/rocksdb/slice.h:171

https://github.com/facebook/rocksdb/issues/6 0x7f199a in rocksdb::DBImpl::GetImpl(rocksdb::ReadOptions const&, rocksdb::Slice const&, rocksdb::DBImpl::GetImplOptions&) db/db_impl/db_impl.cc:1919

https://github.com/facebook/rocksdb/issues/7 0x540d63 in rocksdb::DBImpl::GetMergeOperands(rocksdb::ReadOptions const&, rocksdb::ColumnFamilyHandle*, rocksdb::Slice const&, rocksdb::PinnableSlice*, rocksdb::GetMergeOperandsOptions*, int*) db/db_impl/db_impl.h:203

freed by thread T0 here:

https://github.com/facebook/rocksdb/issues/3 0x1191399 in rocksdb::cache_entry_roles_detail::RegisteredDeleter<rocksdb::Block, (rocksdb::CacheEntryRole)0>::Delete(rocksdb::Slice const&, void*) cache/cache_entry_roles.h:99

https://github.com/facebook/rocksdb/issues/4 0x719348 in rocksdb::LRUHandle::Free() cache/lru_cache.h:205

https://github.com/facebook/rocksdb/issues/5 0x71047f in rocksdb::LRUCacheShard::Release(rocksdb::Cache::Handle*, bool) cache/lru_cache.cc:547

https://github.com/facebook/rocksdb/issues/6 0xa78f0a in rocksdb::Cleanable::DoCleanup() include/rocksdb/cleanable.h:60

https://github.com/facebook/rocksdb/issues/7 0xa78f0a in rocksdb::Cleanable::Reset() include/rocksdb/cleanable.h:38

https://github.com/facebook/rocksdb/issues/8 0xa78f0a in rocksdb::PinnedIteratorsManager::ReleasePinnedData() db/pinned_iterators_manager.h:71

https://github.com/facebook/rocksdb/issues/9 0xd0c21b in rocksdb::PinnedIteratorsManager::~PinnedIteratorsManager() db/pinned_iterators_manager.h:24

https://github.com/facebook/rocksdb/issues/10 0xd0c21b in rocksdb::Version::Get(rocksdb::ReadOptions const&, rocksdb::LookupKey const&, rocksdb::PinnableSlice*, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >*, rocksdb::Status*, rocksdb::MergeContext*, unsigned long*, bool*, bool*, unsigned long*, rocksdb::ReadCallback*, bool*, bool) db/pinned_iterators_manager.h:22

https://github.com/facebook/rocksdb/issues/11 0x7f0fdf in rocksdb::DBImpl::GetImpl(rocksdb::ReadOptions const&, rocksdb::Slice const&, rocksdb::DBImpl::GetImplOptions&) db/db_impl/db_impl.cc:1886

https://github.com/facebook/rocksdb/issues/12 0x540d63 in rocksdb::DBImpl::GetMergeOperands(rocksdb::ReadOptions const&, rocksdb::ColumnFamilyHandle*, rocksdb::Slice const&, rocksdb::PinnableSlice*, rocksdb::GetMergeOperandsOptions*, int*) db/db_impl/db_impl.h:203

previously allocated by thread T0 here:

https://github.com/facebook/rocksdb/issues/1 0x1239896 in rocksdb::AllocateBlock(unsigned long, **rocksdb::MemoryAllocator*)** memory/memory_allocator.h:35

https://github.com/facebook/rocksdb/issues/2 0x1239896 in rocksdb::BlockFetcher::CopyBufferToHeapBuf() table/block_fetcher.cc:171

https://github.com/facebook/rocksdb/issues/3 0x1239896 in rocksdb::BlockFetcher::GetBlockContents() table/block_fetcher.cc:206

https://github.com/facebook/rocksdb/issues/4 0x122eae5 in rocksdb::BlockFetcher::ReadBlockContents() table/block_fetcher.cc:325

https://github.com/facebook/rocksdb/issues/5 0x11b1f45 in rocksdb::Status rocksdb::BlockBasedTable::MaybeReadBlockAndLoadToCache<rocksdb::Block>(rocksdb::FilePrefetchBuffer*, rocksdb::ReadOptions const&, rocksdb::BlockHandle const&, rocksdb::UncompressionDict const&, bool, rocksdb::CachableEntry<rocksdb::Block>*, rocksdb::BlockType, rocksdb::GetContext*, rocksdb::BlockCacheLookupContext*, rocksdb::BlockContents*) const table/block_based/block_based_table_reader.cc:1503

```

Here is the analysis:

- We have [PinnedIteratorsManager](https://github.com/facebook/rocksdb/blob/6.28.fb/db/version_set.cc#L1980) with `Cleanable` capability in our `Version::Get()` path. It's responsible for managing the life-time of pinned iterator and invoking registered cleanup functions during its own destruction.

- For example in case above, the merge operands's clean-up gets associated with this manger in [GetContext::push_operand](https://github.com/facebook/rocksdb/blob/6.28.fb/table/get_context.cc#L405). During PinnedIteratorsManager's [destruction](https://github.com/facebook/rocksdb/blob/6.28.fb/db/pinned_iterators_manager.h#L67), the release function associated with those merge operand data is invoked.

**And that's what we see in "freed by thread T955 here" in ASAN.**

- Bug 🐛: `PinnedIteratorsManager` is local to `Version::Get()` while the data of merge operands need to outlive `Version::Get` and stay till they get [PinSelf()](https://github.com/facebook/rocksdb/blob/6.28.fb/db/db_impl/db_impl.cc#L1905), **which is the read-after-free in ASAN.**

- This bug is likely to be an overlook of `PinnedIteratorsManager` when developing the API `DB::GetMergeOperands` cuz the current logic works fine with the existing case of getting the *merged value* where the operands do not need to live that long.

- This bug was not surfaced much (even in its unit test) due to the release function associated with the merge operands (which are actually blocks put in cache as you can see in `BlockBasedTable::MaybeReadBlockAndLoadToCache` **in "previously allocated by" in ASAN report**) is a cache entry deleter.

The deleter will call `Cache::Release()` which, for LRU cache, won't immediately deallocate the block based on LRU policy [unless the cache is full or being instructed to force erase](https://github.com/facebook/rocksdb/blob/6.28.fb/cache/lru_cache.cc#L521-L531)

- `DBMergeOperandTest.MergeOperandReadAfterFreeBug` makes the cache extremely small to force cache full.

**Summary:**

- Fix the bug by align `PinnedIteratorsManager`'s lifetime with the merge operands

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9507

Test Plan:

- New test `DBMergeOperandTest.MergeOperandReadAfterFreeBug`

- db bench on read path

- Setup (LSM tree with several levels, cache the whole db to avoid read IO, warm cache with readseq to avoid read IO): `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks="fillrandom,readseq -num=1000000 -cache_size=100000000 -write_buffer_size=10000 -statistics=1 -max_bytes_for_level_base=10000 -level0_file_num_compaction_trigger=1``TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks="readrandom" -num=1000000 -cache_size=100000000 `

- Actual command run (run 20-run for 20 times and then average the 20-run's average micros/op)

- `for j in {1..20}; do (for i in {1..20}; do rm -rf /dev/shm/rocksdb/ && TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks="fillrandom,readseq,readrandom" -num=1000000 -cache_size=100000000 -write_buffer_size=10000 -statistics=1 -max_bytes_for_level_base=10000 -level0_file_num_compaction_trigger=1 | egrep 'readrandom'; done > rr_output_pre.txt && (awk '{sum+=$3; sum_sqrt+=$3^2}END{print sum/20, sqrt(sum_sqrt/20-(sum/20)^2)}' rr_output_pre.txt) >> rr_output_pre_2.txt); done`

- **Result: Pre-change: 3.79193 micros/op; Post-change: 3.79528 micros/op (+0.09%)**

(pre-change)sorted avg micros/op of each 20-run | std of micros/op of each 20-run | (post-change) sorted avg micros/op of each 20-run | std of micros/op of each 20-run

-- | -- | -- | --

3.58355 | 0.265209 | 3.48715 | 0.382076

3.58845 | 0.519927 | 3.5832 | 0.382726

3.66415 | 0.452097 | 3.677 | 0.563831

3.68495 | 0.430897 | 3.68405 | 0.495355

3.70295 | 0.482893 | 3.68465 | 0.431438

3.719 | 0.463806 | 3.71945 | 0.457157

3.7393 | 0.453423 | 3.72795 | 0.538604

3.7806 | 0.527613 | 3.75075 | 0.444509

3.7817 | 0.426704 | 3.7683 | 0.468065

3.809 | 0.381033 | 3.8086 | 0.557378

3.80985 | 0.466011 | 3.81805 | 0.524833

3.8165 | 0.500351 | 3.83405 | 0.529339

3.8479 | 0.430326 | 3.86285 | 0.44831

3.85125 | 0.434108 | 3.8717 | 0.544098

3.8556 | 0.524602 | 3.895 | 0.411679

3.8656 | 0.476383 | 3.90965 | 0.566636

3.8911 | 0.488477 | 3.92735 | 0.608038

3.898 | 0.493978 | 3.9439 | 0.524511

3.97235 | 0.515008 | 3.9623 | 0.477416

3.9768 | 0.519993 | 3.98965 | 0.521481

- CI

Reviewed By: ajkr

Differential Revision: D34030519

Pulled By: hx235

fbshipit-source-id: a99ac585c11704c5ed93af033cb29ba0a7b16ae8

|

3 years ago |

|

|

420d51b9a0 |

Update Java API for FilterPolicy changes (#9569)

Summary: Obsolete block-based filter no longer in public API, from https://github.com/facebook/rocksdb/issues/9535 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9569 Test Plan: existing tests Reviewed By: jay-zhuang Differential Revision: D34243579 Pulled By: pdillinger fbshipit-source-id: ec5127d9bb9cc3f70501c531829a735bffdd1418 |

3 years ago |

|

|

479eb1aad6 |

Hide deprecated, inefficient block-based filter from public API (#9535)

Summary: This change removes the ability to configure the deprecated, inefficient block-based filter in the public API. Options that would have enabled it now use "full" (and optionally partitioned) filters. Existing block-based filters can still be read and used, and a "back door" way to build them still exists, for testing and in case of trouble. About the only way this removal would cause an issue for users is if temporary memory for filter construction greatly increases. In HISTORY.md we suggest a few possible mitigations: partitioned filters, smaller SST files, or setting reserve_table_builder_memory=true. Or users who have customized a FilterPolicy using the CreateFilter/KeyMayMatch mechanism removed in https://github.com/facebook/rocksdb/issues/9501 will have to upgrade their code. (It's long past time for people to move to the new builder/reader customization interface.) This change also introduces some internal-use-only configuration strings for testing specific filter implementations while bypassing some compatibility / intelligence logic. This is intended to hint at a path toward making FilterPolicy Customizable, but it also gives us a "back door" way to configure block-based filter. Aside: updated db_bench so that -readonly implies -use_existing_db Pull Request resolved: https://github.com/facebook/rocksdb/pull/9535 Test Plan: Unit tests updated. Specifically, * BlockBasedTableTest.BlockReadCountTest is tweaked to validate the back door configuration interface and ignoring of `use_block_based_builder`. * BlockBasedTableTest.TracingGetTest is migrated from testing block-based filter access pattern to full filter access patter, by re-ordering some things. * Options test (pretty self-explanatory) Performance test - create with `./db_bench -db=/dev/shm/rocksdb1 -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=fillrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0` with and without `-use_block_based_filter`, which creates a DB with 21 SST files in L0. Read with `./db_bench -db=/dev/shm/rocksdb1 -readonly -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=readrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -duration=30` Without -use_block_based_filter: readrandom 464 ops/sec, 689280 KB DB With -use_block_based_filter: readrandom 169 ops/sec, 690996 KB DB No consistent difference with fillrandom Reviewed By: jay-zhuang Differential Revision: D34153871 Pulled By: pdillinger fbshipit-source-id: 31f4a933c542f8f09aca47fa64aec67832a69738 |

3 years ago |

|

|

d6e1e6f37a |

Add commit_timestamp and read_timestamp to Pessimistic transaction (#9537)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9537 Add `Transaction::SetReadTimestampForValidation()` and `Transaction::SetCommitTimestamp()` APIs with default implementation returning `Status::NotSupported()`. Currently, calling these two APIs do not have any effect. Also add checks to `PessimisticTransactionDB` to enforce that column families in the same db either - disable user-defined timestamp - enable 64-bit timestamp Just to clarify, a `PessimisticTransactionDB` can have some column families without timestamps as well as column families that enable timestamp. Each `PessimisticTransaction` can have two optional timestamps, `read_timestamp_` used for additional validation and `commit_timestamp_` which denotes when the transaction commits. For now, we are going to support `WriteCommittedTxn` (in a series of subsequent PRs) Once set, we do not allow decreasing `read_timestamp_`. The `commit_timestamp_` must be greater than `read_timestamp_` for each transaction and must be set before commit, unless the transaction does not involve any column family that enables user-defined timestamp. TransactionDB builds on top of RocksDB core `DB` layer. Though `DB` layer assumes that user-defined timestamps are byte arrays, `TransactionDB` uses uint64_t to store timestamps. When they are passed down, they are still interpreted as byte-arrays by `DB`. Reviewed By: ltamasi Differential Revision: D31567959 fbshipit-source-id: b0b6b69acab5d8e340cf174f33e8b09f1c3d3502 |

3 years ago |

|

|

5c53b9008f |

Fix failure in c_test (#9547)

Summary: When tests are run with TMPD, c_test may fail because TMPD is not created by the test. It results in IO error: No such file or directory: While mkdir if missing: /tmp/rocksdb_test_tmp/rocksdb_c_test-0: No such file or directory Pull Request resolved: https://github.com/facebook/rocksdb/pull/9547 Test Plan: make -j32 c_test; TEST_TMPDIR=/tmp/rocksdb_test ./c_test Reviewed By: riversand963 Differential Revision: D34173298 Pulled By: akankshamahajan15 fbshipit-source-id: 5b5a01f5b842c2487b05b0708c8e9532241db7f8 |

3 years ago |

|

|

fe9d495112 |

Return different Status based on ObjectRegistry::NewObject calls (#9333)

Summary: This fix addresses https://github.com/facebook/rocksdb/issues/9299. If attempting to create a new object via the ObjectRegistry and a factory is not found, the ObjectRegistry will return a "NotSupported" status. This is the same behavior as previously. If the factory is found but could not successfully create the object, an "InvalidArgument" status is returned. If the factory returned a reason why (in the errmsg), this message will be in the returned status. In practice, there are two options in the ConfigOptions that control how these errors are propagated: - If "ignore_unknown_options=true", then both InvalidArgument and NotSupported status codes will be swallowed internally. Both cases will return success - If "ignore_unsupported_options=true", then having no factory will return success but a failing factory will return an error - If both options are false, both cases (no and failing factory) will return errors. In practice this likely only changes Customizable that may be partially available. For example, the JEMallocMemoryAllocator is a built-in allocator that is registered with the system but may not be compiled in. In this case, the status code for this allocator changed from NotSupported("JEMalloc not available") to InvalidArgumen("JEMalloc not available"). Other Customizable builtins/plugins would have the same semantics. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9333 Reviewed By: pdillinger Differential Revision: D33517681 Pulled By: mrambacher fbshipit-source-id: 8033052d4a4a7b88c2d9f90147b1b4467e51f6fd |

3 years ago |

|

|

073ac54739 |

Log blob file space amp and expose it via the rocksdb.blob-stats DB property (#9538)

Summary: Extend the periodic statistics in the info log with the total amount of garbage in blob files and the space amplification pertaining to blob files, where the latter is defined as `total_blob_file_size / (total_blob_file_size - total_blob_garbage_size)`. Also expose the space amp via the `rocksdb.blob-stats` DB property. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9538 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D34126855 Pulled By: ltamasi fbshipit-source-id: 3153e7a0fe0eca440322db273f4deaabaccc51b2 |

3 years ago |

|

|

320d9a8e8a |

Use a sorted vector instead of a map to store blob file metadata (#9526)

Summary: The patch replaces `std::map` with a sorted `std::vector` for `VersionStorageInfo::blob_files_` and preallocates the space for the `vector` before saving the `BlobFileMetaData` into the new `VersionStorageInfo` in `VersionBuilder::Rep::SaveBlobFilesTo`. These changes reduce the time the DB mutex is held while saving new `Version`s, and using a sorted `vector` also makes lookups faster thanks to better memory locality. In addition, the patch introduces helper methods `VersionStorageInfo::GetBlobFileMetaData` and `VersionStorageInfo::GetBlobFileMetaDataLB` that can be used by clients to perform lookups in the `vector`, and does some general cleanup in the parts of code where blob file metadata are used. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9526 Test Plan: Ran `make check` and the crash test script for a while. Performance was tested using a load-optimized benchmark (`fillseq` with vector memtable, no WAL) and small file sizes so that a significant number of files are produced: ``` numactl --interleave=all ./db_bench --benchmarks=fillseq --allow_concurrent_memtable_write=false --level0_file_num_compaction_trigger=4 --level0_slowdown_writes_trigger=20 --level0_stop_writes_trigger=30 --max_background_jobs=8 --max_write_buffer_number=8 --db=/data/ltamasi-dbbench --wal_dir=/data/ltamasi-dbbench --num=800000000 --num_levels=8 --key_size=20 --value_size=400 --block_size=8192 --cache_size=51539607552 --cache_numshardbits=6 --compression_max_dict_bytes=0 --compression_ratio=0.5 --compression_type=lz4 --bytes_per_sync=8388608 --cache_index_and_filter_blocks=1 --cache_high_pri_pool_ratio=0.5 --benchmark_write_rate_limit=0 --write_buffer_size=16777216 --target_file_size_base=16777216 --max_bytes_for_level_base=67108864 --verify_checksum=1 --delete_obsolete_files_period_micros=62914560 --max_bytes_for_level_multiplier=8 --statistics=0 --stats_per_interval=1 --stats_interval_seconds=20 --histogram=1 --memtablerep=skip_list --bloom_bits=10 --open_files=-1 --subcompactions=1 --compaction_style=0 --min_level_to_compress=3 --level_compaction_dynamic_level_bytes=true --pin_l0_filter_and_index_blocks_in_cache=1 --soft_pending_compaction_bytes_limit=167503724544 --hard_pending_compaction_bytes_limit=335007449088 --min_level_to_compress=0 --use_existing_db=0 --sync=0 --threads=1 --memtablerep=vector --allow_concurrent_memtable_write=false --disable_wal=1 --enable_blob_files=1 --blob_file_size=16777216 --min_blob_size=0 --blob_compression_type=lz4 --enable_blob_garbage_collection=1 --seed=<some value> ``` Final statistics before the patch: ``` Cumulative writes: 0 writes, 700M keys, 0 commit groups, 0.0 writes per commit group, ingest: 284.62 GB, 121.27 MB/s Interval writes: 0 writes, 334K keys, 0 commit groups, 0.0 writes per commit group, ingest: 139.28 MB, 72.46 MB/s ``` With the patch: ``` Cumulative writes: 0 writes, 760M keys, 0 commit groups, 0.0 writes per commit group, ingest: 308.66 GB, 131.52 MB/s Interval writes: 0 writes, 445K keys, 0 commit groups, 0.0 writes per commit group, ingest: 185.35 MB, 93.15 MB/s ``` Total time to complete the benchmark is 2611 seconds with the patch, down from 2986 secs. Reviewed By: riversand963 Differential Revision: D34082728 Pulled By: ltamasi fbshipit-source-id: fc598abf676dce436734d06bb9d2d99a26a004fc |

3 years ago |

|

|

9745c68eb1 |

Remove deprecated option new_table_reader_for_compaction_inputs (#9443)

Summary: In RocksDB option new_table_reader_for_compaction_inputs has not effect on Compaction or on the behavior of RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9443 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33788508 Pulled By: akankshamahajan15 fbshipit-source-id: 324ca6f12bfd019e9bd5e1b0cdac39be5c3cec7d |

3 years ago |

|

|

68a9c186d0 |

FilterPolicy API changes for 7.0 (#9501)

Summary: * Inefficient block-based filter is no longer customizable in the public API, though (for now) can still be enabled. * Removed deprecated FilterPolicy::CreateFilter() and FilterPolicy::KeyMayMatch() * Removed `rocksdb_filterpolicy_create()` from C API * Change meaning of nullptr return from GetBuilderWithContext() from "use block-based filter" to "generate no filter in this case." This is a cleaner solution to the proposal in https://github.com/facebook/rocksdb/issues/8250. * Also, when user specifies bits_per_key < 0.5, we now round this down to "no filter" because we expect a filter with >= 80% FP rate is unlikely to be worth the CPU cost of accessing it (esp with cache_index_and_filter_blocks=1 or partition_filters=1). * bits_per_key >= 0.5 and < 1.0 is still rounded up to 1.0 (for 62% FP rate) * This also gives us some support for configuring filters from OPTIONS file as currently saved: `filter_policy=rocksdb.BuiltinBloomFilter`. Opening from such an options file will enable reading filters (an improvement) but not writing new ones. (See Customizable follow-up below.) * Also removed deprecated functions * FilterBitsBuilder::CalculateNumEntry() * FilterPolicy::GetFilterBitsBuilder() * NewExperimentalRibbonFilterPolicy() * Remove default implementations of * FilterBitsBuilder::EstimateEntriesAdded() * FilterBitsBuilder::ApproximateNumEntries() * FilterPolicy::GetBuilderWithContext() * Remove support for "filter_policy=experimental_ribbon" configuration string. * Allow "filter_policy=bloomfilter:n" without bool to discourage use of block-based filter. Some pieces for https://github.com/facebook/rocksdb/issues/9389 Likely follow-up (later PRs): * Refactoring toward FilterPolicy Customizable, so that we can generate filters with same configuration as before when configuring from options file. * Remove support for user enabling block-based filter (ignore `bool use_block_based_builder`) * Some months after this change, we could even remove read support for block-based filter, because it is not critical to DB data preservation. * Make FilterBitsBuilder::FinishV2 to avoid `using FilterBitsBuilder::Finish` mess and add support for specifying a MemoryAllocator (for cache warming) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9501 Test Plan: A number of obsolete tests deleted and new tests or test cases added or updated. Reviewed By: hx235 Differential Revision: D34008011 Pulled By: pdillinger fbshipit-source-id: a39a720457c354e00d5b59166b686f7f59e392aa |

3 years ago |

|

|

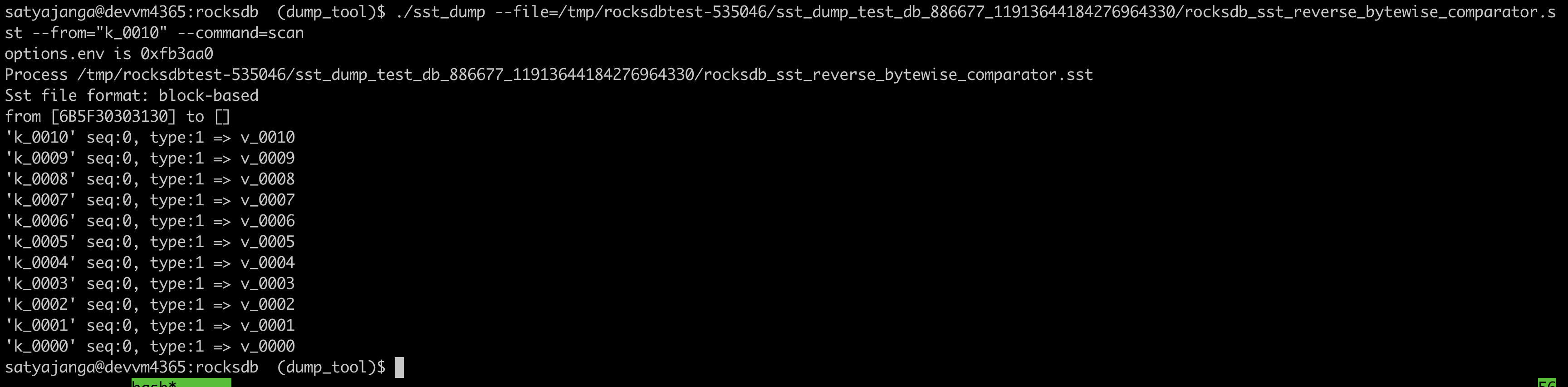

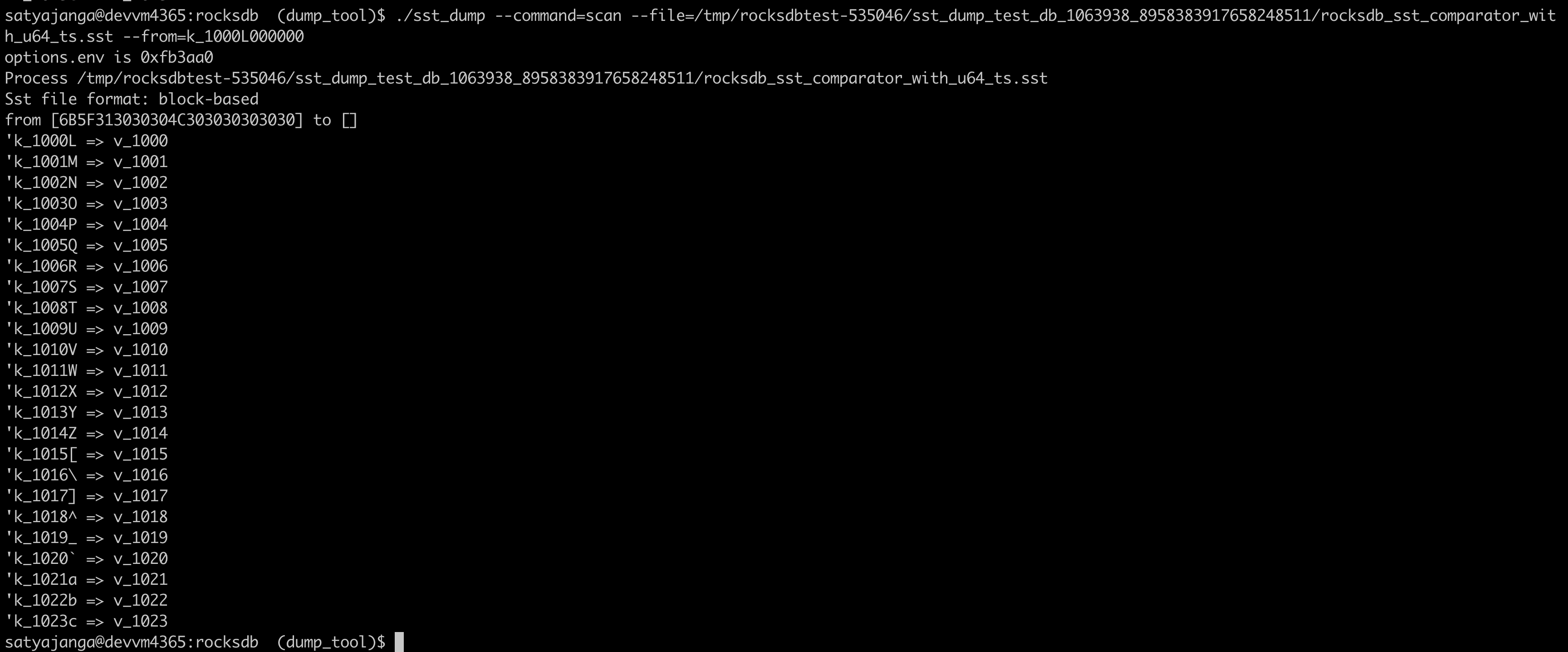

036bbab6f7 |

Use the comparator from the sst file table properties in sst_dump_tool (#9491)

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536 |

3 years ago |

|

|

bbe4763ee4 |

Remove Deprecated overloads of DB::GetApproximateSizes (#9458)

Summary: In RocksDB few overloads of DB::GetApproximateSizes are marked as DEPRECATED_FUNC, and we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9458 Test Plan: CircleCI Reviewed By: riversand963 Differential Revision: D34043791 Pulled By: akankshamahajan15 fbshipit-source-id: 815c0ad283a6627c4b241479c7d40ce03a758493 |

3 years ago |

|

|

98942a297d |

Update HISTORY for PR 9504 (#9513)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9513 Reviewed By: riversand963 Differential Revision: D34046181 Pulled By: ltamasi fbshipit-source-id: a5d8d3bf84e5c13bdc6cbd5ba1b4216bad9adfc5 |

3 years ago |

|

|

fd3e0f43b3 |

Require C++17 (#9481)

Summary: Drop support for some old compilers by requiring C++17 standard (or higher). See https://github.com/facebook/rocksdb/issues/9388 First modification based on this is to remove some conditional compilation in slice.h (also better for ODR) Also in this PR: * Fix some Makefile formatting that seems to affect ASSERT_STATUS_CHECKED config in some cases * Add c_test to NON_PARALLEL_TEST in Makefile * Fix a clang-analyze reported "potential leak" in lru_cache_test * Better "compatibility" definition of DEFINE_uint32 for old versions of gflags * Fix a linking problem with shared libraries in Makefile (`./random_test: error while loading shared libraries: librocksdb.so.6.29: cannot open shared object file: No such file or directory`) * Always set ROCKSDB_SUPPORT_THREAD_LOCAL and use thread_local (from C++11) * TODO in later PR: clean up that obsolete flag * Fix a cosmetic typo in c.h (https://github.com/facebook/rocksdb/issues/9488) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9481 Test Plan: CircleCI config substantially updated. * Upgrade to latest Ubuntu images for each release * Generally prefer Ubuntu 20, but keep a couple Ubuntu 16 builds with oldest supported compilers, to ensure compatibility * Remove .circleci/cat_ignore_eagain except for Ubuntu 16 builds, because this is to work around a kernel bug that should not affect anything but Ubuntu 16. * Remove designated gcc-9 build, because the default linux build now uses GCC 9 from Ubuntu 20. * Add some `apt-key add` to fix some apt "couldn't be verified" errors * Generally drop SKIP_LINK=1; work-around no longer needed * Generally `add-apt-repository` before `apt-get update` as manual testing indicated the reverse might not work. Travis: * Use gcc-7 by default (remove specific gcc-7 and gcc-4.8 builds) * TODO in later PR: fix s390x "Assembler messages: Error: invalid switch -march=z14" failure AppVeyor: * Completely dropped because we are dropping VS2015 support and CircleCI covers VS >= 2017 Also local testing with old gflags (out of necessity when using ROCKSDB_NO_FBCODE=1). Reviewed By: mrambacher Differential Revision: D33946377 Pulled By: pdillinger fbshipit-source-id: ae077c823905b45370a26c0103ada119459da6c1 |

3 years ago |