Tag:

Branch:

Tree:

7a07afe82e

main

oxigraph-8.1.1

oxigraph-8.3.2

oxigraph-main

${ noResults }

117 Commits (7a07afe82e0f6d83d143c22e6f8cce8e6f2fefcc)

| Author | SHA1 | Message | Date |

|---|---|---|---|

|

|

4720ba4391 |

Remove RocksDB LITE (#11147)

Summary: We haven't been actively mantaining RocksDB LITE recently and the size must have been gone up significantly. We are removing the support. Most of changes were done through following comments: unifdef -m -UROCKSDB_LITE `git grep -l ROCKSDB_LITE | egrep '[.](cc|h)'` by Peter Dillinger. Others changes were manually applied to build scripts, CircleCI manifests, ROCKSDB_LITE is used in an expression and file db_stress_test_base.cc. Pull Request resolved: https://github.com/facebook/rocksdb/pull/11147 Test Plan: See CI Reviewed By: pdillinger Differential Revision: D42796341 fbshipit-source-id: 4920e15fc2060c2cd2221330a6d0e5e65d4b7fe2 |

2 years ago |

|

|

cc6f323705 |

Include estimated bytes deleted by range tombstones in compensated file size (#10734)

Summary: compensate file sizes in compaction picking so files with range tombstones are preferred, such that they get compacted down earlier as they tend to delete a lot of data. This PR adds a `compensated_range_deletion_size` field in FileMeta that is computed during Flush/Compaction and persisted in MANIFEST. This value is added to `compensated_file_size` which will be used for compaction picking. Currently, for a file in level L, `compensated_range_deletion_size` is set to the estimated bytes deleted by range tombstone of this file in all levels > L. This helps to reduce space amp when data in older levels are covered by range tombstones in level L. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10734 Test Plan: - Added unit tests. - benchmark to check if the above definition `compensated_range_deletion_size` is reducing space amp as intended, without affecting write amp too much. The experiment set up favorable for this optimization: large range tombstone issued infrequently. Command used: ``` ./db_bench -benchmarks=fillrandom,waitforcompaction,stats,levelstats -use_existing_db=false -avoid_flush_during_recovery=true -write_buffer_size=33554432 -level_compaction_dynamic_level_bytes=true -max_background_jobs=8 -max_bytes_for_level_base=134217728 -target_file_size_base=33554432 -writes_per_range_tombstone=500000 -range_tombstone_width=5000000 -num=50000000 -benchmark_write_rate_limit=8388608 -threads=16 -duration=1800 --max_num_range_tombstones=1000000000 ``` In this experiment, each thread wrote 16 range tombstones over the duration of 30 minutes, each range tombstone has width 5M that is the 10% of the key space width. Results shows this PR generates a smaller DB size. Compaction stats from this PR: ``` Level Files Size Score Read(GB) Rn(GB) Rnp1(GB) Write(GB) Wnew(GB) Moved(GB) W-Amp Rd(MB/s) Wr(MB/s) Comp(sec) CompMergeCPU(sec) Comp(cnt) Avg(sec) KeyIn KeyDrop Rblob(GB) Wblob(GB) ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ L0 2/0 31.54 MB 0.5 0.0 0.0 0.0 8.4 8.4 0.0 1.0 0.0 63.4 135.56 110.94 544 0.249 0 0 0.0 0.0 L4 3/0 96.55 MB 0.8 18.5 6.7 11.8 18.4 6.6 0.0 2.7 65.3 64.9 290.08 284.03 108 2.686 284M 1957K 0.0 0.0 L5 15/0 404.41 MB 1.0 19.1 7.7 11.4 18.8 7.4 0.3 2.5 66.6 65.7 292.93 285.34 220 1.332 293M 3808K 0.0 0.0 L6 143/0 4.12 GB 0.0 45.0 7.5 37.5 41.6 4.1 0.0 5.5 71.2 65.9 647.00 632.66 251 2.578 739M 47M 0.0 0.0 Sum 163/0 4.64 GB 0.0 82.6 21.9 60.7 87.2 26.5 0.3 10.4 61.9 65.4 1365.58 1312.97 1123 1.216 1318M 52M 0.0 0.0 ``` Compaction stats from main: ``` Level Files Size Score Read(GB) Rn(GB) Rnp1(GB) Write(GB) Wnew(GB) Moved(GB) W-Amp Rd(MB/s) Wr(MB/s) Comp(sec) CompMergeCPU(sec) Comp(cnt) Avg(sec) KeyIn KeyDrop Rblob(GB) Wblob(GB) ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ L0 0/0 0.00 KB 0.0 0.0 0.0 0.0 8.4 8.4 0.0 1.0 0.0 60.5 142.12 115.89 569 0.250 0 0 0.0 0.0 L4 3/0 85.68 MB 1.0 17.7 6.8 10.9 17.6 6.7 0.0 2.6 62.7 62.3 289.05 281.79 112 2.581 272M 2309K 0.0 0.0 L5 11/0 293.73 MB 1.0 18.8 7.5 11.2 18.5 7.2 0.5 2.5 64.9 63.9 296.07 288.50 220 1.346 288M 4365K 0.0 0.0 L6 130/0 3.94 GB 0.0 51.5 7.6 43.9 47.9 3.9 0.0 6.3 67.2 62.4 784.95 765.92 258 3.042 848M 51M 0.0 0.0 Sum 144/0 4.31 GB 0.0 88.0 21.9 66.0 92.3 26.3 0.5 11.0 59.6 62.5 1512.19 1452.09 1159 1.305 1409M 58M 0.0 0.0``` Reviewed By: ajkr Differential Revision: D39834713 Pulled By: cbi42 fbshipit-source-id: fe9341040b8704a8fbb10cad5cf5c43e962c7e6b |

2 years ago |

|

|

98d5db5c2e |

Sort L0 files by newly introduced epoch_num (#10922)

Summary:

**Context:**

Sorting L0 files by `largest_seqno` has at least two inconvenience:

- File ingestion and compaction involving ingested files can create files of overlapping seqno range with the existing files. `force_consistency_check=true` will catch such overlap seqno range even those harmless overlap.

- For example, consider the following sequence of events ("key@n" indicates key at seqno "n")

- insert k1@1 to memtable m1

- ingest file s1 with k2@2, ingest file s2 with k3@3

- insert k4@4 to m1

- compact files s1, s2 and result in new file s3 of seqno range [2, 3]

- flush m1 and result in new file s4 of seqno range [1, 4]. And `force_consistency_check=true` will think s4 and s3 has file reordering corruption that might cause retuning an old value of k1

- However such caught corruption is a false positive since s1, s2 will not have overlapped keys with k1 or whatever inserted into m1 before ingest file s1 by the requirement of file ingestion (otherwise the m1 will be flushed first before any of the file ingestion completes). Therefore there in fact isn't any file reordering corruption.

- Single delete can decrease a file's largest seqno and ordering by `largest_seqno` can introduce a wrong ordering hence file reordering corruption

- For example, consider the following sequence of events ("key@n" indicates key at seqno "n", Credit to ajkr for this example)

- an existing SST s1 contains only k1@1

- insert k1@2 to memtable m1

- ingest file s2 with k3@3, ingest file s3 with k4@4

- insert single delete k5@5 in m1

- flush m1 and result in new file s4 of seqno range [2, 5]

- compact s1, s2, s3 and result in new file s5 of seqno range [1, 4]

- compact s4 and result in new file s6 of seqno range [2] due to single delete

- By the last step, we have file ordering by largest seqno (">" means "newer") : s5 > s6 while s6 contains a newer version of the k1's value (i.e, k1@2) than s5, which is a real reordering corruption. While this can be caught by `force_consistency_check=true`, there isn't a good way to prevent this from happening if ordering by `largest_seqno`

Therefore, we are redesigning the sorting criteria of L0 files and avoid above inconvenience. Credit to ajkr , we now introduce `epoch_num` which describes the order of a file being flushed or ingested/imported (compaction output file will has the minimum `epoch_num` among input files'). This will avoid the above inconvenience in the following ways:

- In the first case above, there will no longer be overlap seqno range check in `force_consistency_check=true` but `epoch_number` ordering check. This will result in file ordering s1 < s2 < s4 (pre-compaction) and s3 < s4 (post-compaction) which won't trigger false positive corruption. See test class `DBCompactionTestL0FilesMisorderCorruption*` for more.

- In the second case above, this will result in file ordering s1 < s2 < s3 < s4 (pre-compacting s1, s2, s3), s5 < s4 (post-compacting s1, s2, s3), s5 < s6 (post-compacting s4), which are correct file ordering without causing any corruption.

**Summary:**

- Introduce `epoch_number` stored per `ColumnFamilyData` and sort CF's L0 files by their assigned `epoch_number` instead of `largest_seqno`.

- `epoch_number` is increased and assigned upon `VersionEdit::AddFile()` for flush (or similarly for WriteLevel0TableForRecovery) and file ingestion (except for allow_behind_true, which will always get assigned as the `kReservedEpochNumberForFileIngestedBehind`)

- Compaction output file is assigned with the minimum `epoch_number` among input files'

- Refit level: reuse refitted file's epoch_number

- Other paths needing `epoch_number` treatment:

- Import column families: reuse file's epoch_number if exists. If not, assign one based on `NewestFirstBySeqNo`

- Repair: reuse file's epoch_number if exists. If not, assign one based on `NewestFirstBySeqNo`.

- Assigning new epoch_number to a file and adding this file to LSM tree should be atomic. This is guaranteed by us assigning epoch_number right upon `VersionEdit::AddFile()` where this version edit will be apply to LSM tree shape right after by holding the db mutex (e.g, flush, file ingestion, import column family) or by there is only 1 ongoing edit per CF (e.g, WriteLevel0TableForRecovery, Repair).

- Assigning the minimum input epoch number to compaction output file won't misorder L0 files (even through later `Refit(target_level=0)`). It's due to for every key "k" in the input range, a legit compaction will cover a continuous epoch number range of that key. As long as we assign the key "k" the minimum input epoch number, it won't become newer or older than the versions of this key that aren't included in this compaction hence no misorder.

- Persist `epoch_number` of each file in manifest and recover `epoch_number` on db recovery

- Backward compatibility with old db without `epoch_number` support is guaranteed by assigning `epoch_number` to recovered files by `NewestFirstBySeqno` order. See `VersionStorageInfo::RecoverEpochNumbers()` for more

- Forward compatibility with manifest is guaranteed by flexibility of `NewFileCustomTag`

- Replace `force_consistent_check` on L0 with `epoch_number` and remove false positive check like case 1 with `largest_seqno` above

- Due to backward compatibility issue, we might encounter files with missing epoch number at the beginning of db recovery. We will still use old L0 sorting mechanism (`NewestFirstBySeqno`) to check/sort them till we infer their epoch number. See usages of `EpochNumberRequirement`.

- Remove fix https://github.com/facebook/rocksdb/pull/5958#issue-511150930 and their outdated tests to file reordering corruption because such fix can be replaced by this PR.

- Misc:

- update existing tests with `epoch_number` so make check will pass

- update https://github.com/facebook/rocksdb/pull/5958#issue-511150930 tests to verify corruption is fixed using `epoch_number` and cover universal/fifo compaction/CompactRange/CompactFile cases

- assert db_mutex is held for a few places before calling ColumnFamilyData::NewEpochNumber()

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10922

Test Plan:

- `make check`

- New unit tests under `db/db_compaction_test.cc`, `db/db_test2.cc`, `db/version_builder_test.cc`, `db/repair_test.cc`

- Updated tests (i.e, `DBCompactionTestL0FilesMisorderCorruption*`) under https://github.com/facebook/rocksdb/pull/5958#issue-511150930

- [Ongoing] Compatibility test: manually run

|

2 years ago |

|

|

5cf6ab6f31 |

Ran clang-format on db/ directory (#10910)

Summary: Ran `find ./db/ -type f | xargs clang-format -i`. Excluded minor changes it tried to make on db/db_impl/. Everything else it changed was directly under db/ directory. Included minor manual touchups mentioned in PR commit history. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10910 Reviewed By: riversand963 Differential Revision: D40880683 Pulled By: ajkr fbshipit-source-id: cfe26cda05b3fb9a72e3cb82c286e21d8c5c4174 |

2 years ago |

|

|

e466173d5c |

Print stack traces on frozen tests in CI (#10828)

Summary: Instead of existing calls to ps from gnu_parallel, call a new wrapper that does ps, looks for unit test like processes, and uses pstack or gdb to print thread stack traces. Also, using `ps -wwf` instead of `ps -wf` ensures output is not cut off. For security, CircleCI runs with security restrictions on ptrace (/proc/sys/kernel/yama/ptrace_scope = 1), and this change adds a work-around to `InstallStackTraceHandler()` (only used by testing tools) to allow any process from the same user to debug it. (I've also touched >100 files to ensure all the unit tests call this function.) Pull Request resolved: https://github.com/facebook/rocksdb/pull/10828 Test Plan: local manual + temporary infinite loop in a unit test to observe in CircleCI Reviewed By: hx235 Differential Revision: D40447634 Pulled By: pdillinger fbshipit-source-id: 718a4c4a5b54fa0f9af2d01a446162b45e5e84e1 |

2 years ago |

|

|

e484b81eee |

Sync dir containing CURRENT after RenameFile on CURRENT as much as possible (#10573)

Summary:

**Context:**

Below crash test revealed a bug that directory containing CURRENT file (short for `dir_contains_current_file` below) was not always get synced after a new CURRENT is created and being called with `RenameFile` as part of the creation.

This bug exposes a risk that such un-synced directory containing the updated CURRENT can’t survive a host crash (e.g, power loss) hence get corrupted. This then will be followed by a recovery from a corrupted CURRENT that we don't want.

The root-cause is that a nullptr `FSDirectory* dir_contains_current_file` sometimes gets passed-down to `SetCurrentFile()` hence in those case `dir_contains_current_file->FSDirectory::FsyncWithDirOptions()` will be skipped (which otherwise will internally call`Env/FS::SyncDic()` )

```

./db_stress --acquire_snapshot_one_in=10000 --adaptive_readahead=1 --allow_data_in_errors=True --avoid_unnecessary_blocking_io=0 --backup_max_size=104857600 --backup_one_in=100000 --batch_protection_bytes_per_key=8 --block_size=16384 --bloom_bits=134.8015470676662 --bottommost_compression_type=disable --cache_size=8388608 --checkpoint_one_in=1000000 --checksum_type=kCRC32c --clear_column_family_one_in=0 --compact_files_one_in=1000000 --compact_range_one_in=1000000 --compaction_pri=2 --compaction_ttl=100 --compression_max_dict_buffer_bytes=511 --compression_max_dict_bytes=16384 --compression_type=zstd --compression_use_zstd_dict_trainer=1 --compression_zstd_max_train_bytes=65536 --continuous_verification_interval=0 --data_block_index_type=0 --db=$db --db_write_buffer_size=1048576 --delpercent=5 --delrangepercent=0 --destroy_db_initially=0 --disable_wal=0 --enable_compaction_filter=0 --enable_pipelined_write=1 --expected_values_dir=$exp --fail_if_options_file_error=1 --file_checksum_impl=none --flush_one_in=1000000 --get_current_wal_file_one_in=0 --get_live_files_one_in=1000000 --get_property_one_in=1000000 --get_sorted_wal_files_one_in=0 --index_block_restart_interval=4 --ingest_external_file_one_in=0 --iterpercent=10 --key_len_percent_dist=1,30,69 --level_compaction_dynamic_level_bytes=True --mark_for_compaction_one_file_in=10 --max_background_compactions=20 --max_bytes_for_level_base=10485760 --max_key=10000 --max_key_len=3 --max_manifest_file_size=16384 --max_write_batch_group_size_bytes=64 --max_write_buffer_number=3 --max_write_buffer_size_to_maintain=0 --memtable_prefix_bloom_size_ratio=0.001 --memtable_protection_bytes_per_key=1 --memtable_whole_key_filtering=1 --mmap_read=1 --nooverwritepercent=1 --open_metadata_write_fault_one_in=0 --open_read_fault_one_in=0 --open_write_fault_one_in=0 --ops_per_thread=100000000 --optimize_filters_for_memory=1 --paranoid_file_checks=1 --partition_pinning=2 --pause_background_one_in=1000000 --periodic_compaction_seconds=0 --prefix_size=5 --prefixpercent=5 --prepopulate_block_cache=1 --progress_reports=0 --read_fault_one_in=1000 --readpercent=45 --recycle_log_file_num=0 --reopen=0 --ribbon_starting_level=999 --secondary_cache_fault_one_in=32 --secondary_cache_uri=compressed_secondary_cache://capacity=8388608 --set_options_one_in=10000 --snapshot_hold_ops=100000 --sst_file_manager_bytes_per_sec=0 --sst_file_manager_bytes_per_truncate=0 --subcompactions=3 --sync_fault_injection=1 --target_file_size_base=2097 --target_file_size_multiplier=2 --test_batches_snapshots=1 --top_level_index_pinning=1 --use_full_merge_v1=1 --use_merge=1 --value_size_mult=32 --verify_checksum=1 --verify_checksum_one_in=1000000 --verify_db_one_in=100000 --verify_sst_unique_id_in_manifest=1 --wal_bytes_per_sync=524288 --write_buffer_size=4194 --writepercent=35

```

```

stderr:

WARNING: prefix_size is non-zero but memtablerep != prefix_hash

db_stress: utilities/fault_injection_fs.cc:748: virtual rocksdb::IOStatus rocksdb::FaultInjectionTestFS::RenameFile(const std::string &, const std::string &, const rocksdb::IOOptions &, rocksdb::IODebugContext *): Assertion `tlist.find(tdn.second) == tlist.end()' failed.`

```

**Summary:**

The PR ensured the non-test path pass down a non-null dir containing CURRENT (which is by current RocksDB assumption just db_dir) by doing the following:

- Renamed `directory_to_fsync` as `dir_contains_current_file` in `SetCurrentFile()` to tighten the association between this directory and CURRENT file

- Changed `SetCurrentFile()` API to require `dir_contains_current_file` being passed-in, instead of making it by default nullptr.

- Because `SetCurrentFile()`'s `dir_contains_current_file` is passed down from `VersionSet::LogAndApply()` then `VersionSet::ProcessManifestWrites()` (i.e, think about this as a chain of 3 functions related to MANIFEST update), these 2 functions also got refactored to require `dir_contains_current_file`

- Updated the non-test-path callers of these 3 functions to obtain and pass in non-nullptr `dir_contains_current_file`, which by current assumption of RocksDB, is the `FSDirectory* db_dir`.

- `db_impl` path will obtain `DBImpl::directories_.getDbDir()` while others with no access to such `directories_` are obtained on the fly by creating such object `FileSystem::NewDirectory(..)` and manage it by unique pointers to ensure short life time.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10573

Test Plan:

- `make check`

- Passed the repro db_stress command

- For future improvement, since we currently don't assert dir containing CURRENT to be non-nullptr due to https://github.com/facebook/rocksdb/pull/10573#pullrequestreview-1087698899, there is still chances that future developers mistakenly pass down nullptr dir containing CURRENT thus resulting skipped sync dir and cause the bug again. Therefore a smarter test (e.g, such as quoted from ajkr "(make) unsynced data loss to be dropping files corresponding to unsynced directory entries") is still needed.

Reviewed By: ajkr

Differential Revision: D39005886

Pulled By: hx235

fbshipit-source-id: 336fb9090d0cfa6ca3dd580db86268007dde7f5a

|

2 years ago |

|

|

911c0208b9 |

WritableFileWriter tries to skip operations after failure (#10489)

Summary: A flag in WritableFileWriter is introduced to remember error has happened. Subsequent operations will fail with an assertion. Those operations, except Close() are not supposed to be called anyway. This change will help catch bug in tests and stress tests and limit damage of a potential bug of continue writing to a file after a failure. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10489 Test Plan: Fix existing unit tests and watch crash tests for a while. Reviewed By: anand1976 Differential Revision: D38473277 fbshipit-source-id: 09aafb971e56cfd7f9ef92ad15b883f54acf1366 |

2 years ago |

|

|

56dbcb4f72 |

Deflake ChargeFileMetadataTestWithParam/ChargeFileMetadataTestWithParam.Basic/0 (#10481)

Summary:

**Context/summary:**

`ChargeFileMetadataTestWithParam/ChargeFileMetadataTestWithParam.Basic/0 ` relies on `DBImpl::BackgroundCallCompaction:PurgedObsoleteFiles` happens before verifying `EXPECT_EQ(file_metadata_charge_only_cache->GetCacheCharge(),

1 * CacheReservationManagerImpl<

CacheEntryRole::kFileMetadata>::GetDummyEntrySize());` or `EXPECT_EQ(file_metadata_charge_only_cache->GetCacheCharge(), 0);` to ensure appropriate cache reservation release is done before checking.

However, this might not be the case under some timing delay and spurious wake-up as coerced below.

```

diff --git a/db/db_impl/db_impl_compaction_flush.cc b/db/db_impl/db_impl_compaction_flush.cc

index 4378f3212..3e4f60853 100644

--- a/db/db_impl/db_impl_compaction_flush.cc

+++ b/db/db_impl/db_impl_compaction_flush.cc

@@ -2989,6 +2989,8 @@ void DBImpl::BackgroundCallCompaction(PrepickedCompaction* prepicked_compaction,

if (job_context.HaveSomethingToClean() ||

job_context.HaveSomethingToDelete() || !log_buffer.IsEmpty()) {

mutex_.Unlock();

+ bg_cv_.SignalAll();

+ usleep(1000);

// Have to flush the info logs before bg_compaction_scheduled_--

// because if bg_flush_scheduled_ becomes 0 and the lock is

// released, the deconstructor of DB can kick in and destroy all the

// states of DB so info_log might not be available after that point.

// It also applies to access other states that DB owns.

log_buffer.FlushBufferToLog();

if (job_context.HaveSomethingToDelete()) {

PurgeObsoleteFiles(job_context);

TEST_SYNC_POINT("DBImpl::BackgroundCallCompaction:PurgedObsoleteFiles");

}

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10481

Test Plan:

The test of interest failed often at the above coercion:

After fix, the test of interest passed at the above coercion:

Reviewed By: jay-zhuang

Differential Revision: D38438256

Pulled By: hx235

fbshipit-source-id: de80ecdb250174f00e7c2f5e4d952695ed56f51e

|

2 years ago |

|

|

fbfcf5cbcd |

Remove unused fields from FileMetaData (temporarily) (#10443)

Summary: FileMetaData::[min|max]_timestamp are not currently being used or tracked by RocksDB, even when user-defined timestamp is enabled. Each of them is a std::string which can occupy 32 bytes. Remove them for now. They may be added back when we have a pressing need for them. When we do add them back, consider store them in a more compact way, e.g. one boolean flag and a byte array of size 16. Per file min/max timestamp bounds are available as table properties. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10443 Test Plan: make check Reviewed By: pdillinger Differential Revision: D38292275 Pulled By: riversand963 fbshipit-source-id: 841dc4e855ad8f8481c80cb020603de9607c9c94 |

2 years ago |

|

|

1e9bf25f61 |

Do not hold mutex when write keys if not necessary (#7516)

Summary: ## Problem Summary RocksDB will acquire the global mutex of db instance for every time when user calls `Write`. When RocksDB schedules a lot of compaction jobs, it will compete the mutex with write thread and it will hurt the write performance. ## Problem Solution: I want to use log_write_mutex to replace the global mutex in most case so that we do not acquire it in write-thread unless there is a write-stall event or a write-buffer-full event occur. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7516 Test Plan: 1. make check 2. CI 3. COMPILE_WITH_TSAN=1 make db_stress make crash_test make crash_test_with_multiops_wp_txn make crash_test_with_multiops_wc_txn make crash_test_with_atomic_flush Reviewed By: siying Differential Revision: D36908702 Pulled By: riversand963 fbshipit-source-id: 59b13881f4f5c0a58fd3ca79128a396d9cd98efe |

2 years ago |

|

|

b397dcd390 |

Change The Way Level Target And Compaction Score Are Calculated (#10057)

Summary: The current level targets for dynamical leveling has a problem: the target level size will dramatically change after a L0->L1 compaction. When there are many L0 bytes, lower level compactions are delayed, but they will be resumed after the L0->L1 compaction finishes, so the expected write amplification benefits might not be realized. The proposal here is to revert the level targetting size, but instead relying on adjusting score for each level to prioritize levels that need to compact most. Basic idea: (1) target level size isn't adjusted, but score is adjusted. The reasoning is that with parallel compactions, holding compactions from happening might not be desirable, but we would like the compactions are scheduled from the level we feel most needed. For example, if we have a extra-large L2, we would like all compactions are scheduled for L2->L3 compactions, rather than L4->L5. This gets complicated when a large L0->L1 compaction is going on. Should we compact L2->L3 or L4->L5. So the proposal for that is: (2) the score is calculated by actual level size / (target size + estimated upper bytes coming down). The reasoning is that if we have a large amount of pending L0/L1 bytes coming down, compacting L2->L3 might be more expensive, as when the L0 bytes are compacted down to L2, the actual L2->L3 fanout would change dramatically. On the other hand, when the amount of bytes coming down to L5, the impacts to L5->L6 fanout are much less. So when calculating target score, we can adjust it by adding estimated downward bytes to the target level size. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10057 Test Plan: Repurpose tests VersionStorageInfoTest.MaxBytesForLevelDynamicWithLargeL0_* tests to cover this scenario. Reviewed By: ajkr Differential Revision: D37539742 fbshipit-source-id: 9c154cbfe92023f918cf5d80875d8776ad4831a4 |

3 years ago |

|

|

deff48bcef |

Add blob source to retrieve blobs in RocksDB (#10198)

Summary: There is currently no caching mechanism for blobs, which is not ideal especially when the database resides on remote storage (where we cannot rely on the OS page cache). As part of this task, we would like to make it possible for the application to configure a blob cache. In this task, we formally introduced the blob source to RocksDB. BlobSource is a new abstraction layer that provides universal access to blobs, regardless of whether they are in the blob cache, secondary cache, or (remote) storage. Depending on user settings, it always fetch blobs from multi-tier cache and storage with minimal cost. Note: The new `MultiGetBlob()` implementation is not included in the current PR. To go faster, we aim to create a separate PR for it in parallel! This PR is a part of https://github.com/facebook/rocksdb/issues/10156 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10198 Reviewed By: ltamasi Differential Revision: D37294735 Pulled By: gangliao fbshipit-source-id: 9cb50422d9dd1bc03798501c2778b6c7520c7a1e |

3 years ago |

|

|

d665afdbf3 |

Account memory of FileMetaData in global memory limit (#9924)

Summary: **Context/Summary:** As revealed by heap profiling, allocation of `FileMetaData` for [newly created file added to a Version](https://github.com/facebook/rocksdb/pull/9924/files#diff-a6aa385940793f95a2c5b39cc670bd440c4547fa54fd44622f756382d5e47e43R774) can consume significant heap memory. This PR is to account that toward our global memory limit based on block cache capacity. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9924 Test Plan: - Previous `make check` verified there are only 2 places where the memory of the allocated `FileMetaData` can be released - New unit test `TEST_P(ChargeFileMetadataTestWithParam, Basic)` - db bench (CPU cost of `charge_file_metadata` in write and compact) - **write micros/op: -0.24%** : `TEST_TMPDIR=/dev/shm/testdb ./db_bench -benchmarks=fillseq -db=$TEST_TMPDIR -charge_file_metadata=1 (remove this option for pre-PR) -disable_auto_compactions=1 -write_buffer_size=100000 -num=4000000 | egrep 'fillseq'` - **compact micros/op -0.87%** : `TEST_TMPDIR=/dev/shm/testdb ./db_bench -benchmarks=fillseq -db=$TEST_TMPDIR -charge_file_metadata=1 -disable_auto_compactions=1 -write_buffer_size=100000 -num=4000000 -numdistinct=1000 && ./db_bench -benchmarks=compact -db=$TEST_TMPDIR -use_existing_db=1 -charge_file_metadata=1 -disable_auto_compactions=1 | egrep 'compact'` table 1 - write #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 3.9711 | 0.264408 | 3.9914 | 0.254563 | 0.5111933721 20 | 3.83905 | 0.0664488 | 3.8251 | 0.0695456 | -0.3633711465 40 | 3.86625 | 0.136669 | 3.8867 | 0.143765 | 0.5289363078 80 | 3.87828 | 0.119007 | 3.86791 | 0.115674 | **-0.2673865734** 160 | 3.87677 | 0.162231 | 3.86739 | 0.16663 | **-0.2419539978** table 2 - compact #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 2,399,650.00 | 96,375.80 | 2,359,537.00 | 53,243.60 | -1.67 20 | 2,410,480.00 | 89,988.00 | 2,433,580.00 | 91,121.20 | 0.96 40 | 2.41E+06 | 121811 | 2.39E+06 | 131525 | **-0.96** 80 | 2.40E+06 | 134503 | 2.39E+06 | 108799 | **-0.78** - stress test: `python3 tools/db_crashtest.py blackbox --charge_file_metadata=1 --cache_size=1` killed as normal Reviewed By: ajkr Differential Revision: D36055583 Pulled By: hx235 fbshipit-source-id: b60eab94707103cb1322cf815f05810ef0232625 |

3 years ago |

|

|

c6d326d3d7 |

Track SST unique id in MANIFEST and verify (#9990)

Summary: Start tracking SST unique id in MANIFEST, which is used to verify with SST properties to make sure the SST file is not overwritten or misplaced. A DB option `try_verify_sst_unique_id` is introduced to enable/disable the verification, if enabled, it opens all SST files during DB-open to read the unique_id from table properties (default is false), so it's recommended to use it with `max_open_files = -1` to pre-open the files. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9990 Test Plan: unittests, format-compatible test, mini-crash Reviewed By: anand1976 Differential Revision: D36381863 Pulled By: jay-zhuang fbshipit-source-id: 89ea2eb6b35ed3e80ead9c724eb096083eaba63f |

3 years ago |

|

|

e0c84aa0dc |

Fix a race condition in WAL tracking causing DB open failure (#9715)

Summary: There is a race condition if WAL tracking in the MANIFEST is enabled in a database that disables 2PC. The race condition is between two background flush threads trying to install flush results to the MANIFEST. Consider an example database with two column families: "default" (cfd0) and "cf1" (cfd1). Initially, both column families have one mutable (active) memtable whose data backed by 6.log. 1. Trigger a manual flush for "cf1", creating a 7.log 2. Insert another key to "default", and trigger flush for "default", creating 8.log 3. BgFlushThread1 finishes writing 9.sst 4. BgFlushThread2 finishes writing 10.sst ``` Time BgFlushThread1 BgFlushThread2 | mutex_.Lock() | precompute min_wal_to_keep as 6 | mutex_.Unlock() | mutex_.Lock() | precompute min_wal_to_keep as 6 | join MANIFEST write queue and mutex_.Unlock() | write to MANIFEST | mutex_.Lock() | cfd1->log_number = 7 | Signal bg_flush_2 and mutex_.Unlock() | wake up and mutex_.Lock() | cfd0->log_number = 8 | FindObsoleteFiles() with job_context->log_number == 7 | mutex_.Unlock() | PurgeObsoleteFiles() deletes 6.log V ``` As shown in the above, BgFlushThread2 thinks that the min wal to keep is 6.log because "cf1" has unflushed data in 6.log (cf1.log_number=6). Similarly, BgThread1 thinks that min wal to keep is also 6.log because "default" has unflushed data (default.log_number=6). No WAL deletion will be written to MANIFEST because 6 is equal to `versions_->wals_.min_wal_number_to_keep`, due to https://github.com/facebook/rocksdb/blob/7.1.fb/db/memtable_list.cc#L513:L514. The bg flush thread that finishes last will perform file purging. `job_context.log_number` will be evaluated as 7, i.e. the min wal that contains unflushed data, causing 6.log to be deleted. However, MANIFEST thinks 6.log should still exist. If you close the db at this point, you won't be able to re-open it if `track_and_verify_wal_in_manifest` is true. We must handle the case of multiple bg flush threads, and it is difficult for one bg flush thread to know the correct min wal number until the other bg flush threads have finished committing to the manifest and updated the `cfd::log_number`. To fix this issue, we rename an existing variable `min_log_number_to_keep_2pc` to `min_log_number_to_keep`, and use it to track WAL file deletion in non-2pc mode as well. This variable is updated only 1) during recovery with mutex held, or 2) in the MANIFEST write thread. `min_log_number_to_keep` means RocksDB will delete WALs below it, although there may be WALs above it which are also obsolete. Formally, we will have [min_wal_to_keep, max_obsolete_wal]. During recovery, we make sure that only WALs above max_obsolete_wal are checked and added back to `alive_log_files_`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9715 Test Plan: ``` make check ``` Also ran stress test below (with asan) to make sure it completes successfully. ``` TEST_TMPDIR=/dev/shm/rocksdb OPT=-g ASAN_OPTIONS=disable_coredump=0 \ CRASH_TEST_EXT_ARGS=--compression_type=zstd SKIP_FORMAT_BUCK_CHECKS=1 \ make J=52 -j52 blackbox_asan_crash_test ``` Reviewed By: ltamasi Differential Revision: D34984412 Pulled By: riversand963 fbshipit-source-id: c7b21a8d84751bb55ea79c9f387103d21b231005 |

3 years ago |

|

|

a1203edca4 |

Rework VersionStorageInfo::ComputeFilesMarkedForForcedBlobGC a bit (#9548)

Summary: We had a bug in `VersionStorageInfo::ComputeFilesMarkedForForcedBlobGC` related to the edge case where all blob files are part of the "oldest batch", i.e. where only the very oldest file has any linked SSTs. (See https://github.com/facebook/rocksdb/issues/9542) This PR tries to make the logic in this method clearer and also adds a unit test for the problematic case. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9548 Test Plan: `make check` Reviewed By: akankshamahajan15 Differential Revision: D34158959 Pulled By: ltamasi fbshipit-source-id: fbab6d749c569728382aa04f7b7c60c92cca7650 |

3 years ago |

|

|

036bbab6f7 |

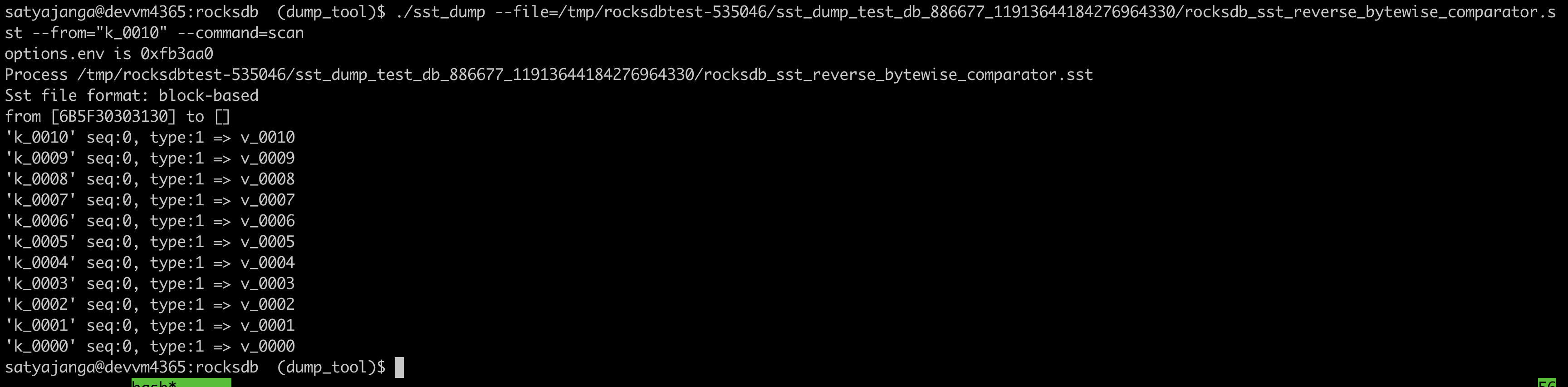

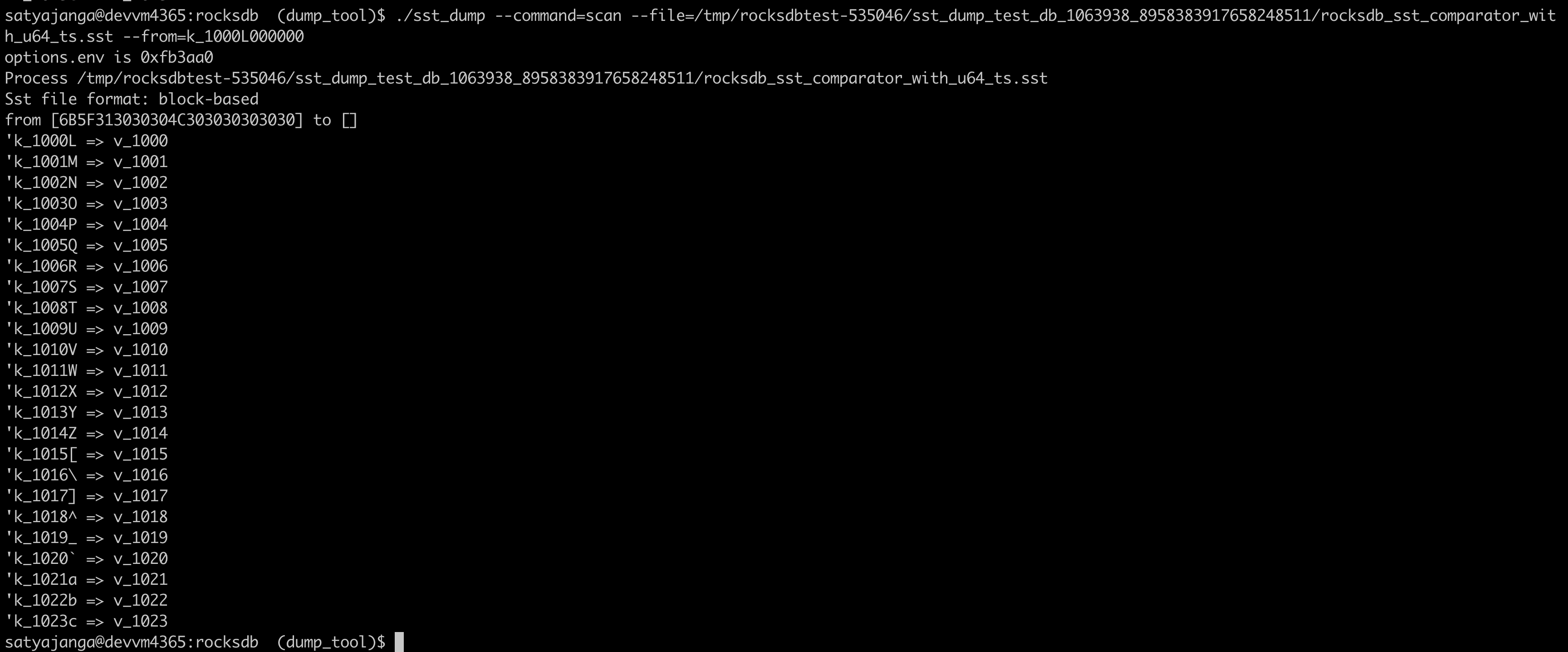

Use the comparator from the sst file table properties in sst_dump_tool (#9491)

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536 |

3 years ago |

|

|

42e0751b3a |

Clean up VersionStorageInfo a bit (#9494)

Summary: The patch does some cleanup in and around `VersionStorageInfo`: * Renames the method `PrepareApply` to `PrepareAppend` in `Version` to make it clear that it is to be called before appending the `Version` to `VersionSet` (via `AppendVersion`), not before applying any `VersionEdit`s. * Introduces a helper method `VersionStorageInfo::PrepareForVersionAppend` (called by `Version::PrepareAppend`) that encapsulates the population of the various derived data structures in `VersionStorageInfo`, and turns the methods computing the derived structures (`UpdateNumNonEmptyLevels`, `CalculateBaseBytes` etc.) into private helpers. * Changes `Version::PrepareAppend` so it only calls `UpdateAccumulatedStats` if the `update_stats` flag is set. (Earlier, this was checked by the callee.) Related to this, it also moves the call to `ComputeCompensatedSizes` to `VersionStorageInfo::PrepareForVersionAppend`. * Updates and cleans up `version_builder_test`, `version_set_test`, and `compaction_picker_test` so `PrepareForVersionAppend` is called anytime a new `VersionStorageInfo` is set up or saved. This cleanup also involves splitting `VersionStorageInfoTest.MaxBytesForLevelDynamic` into multiple smaller test cases. * Fixes up a bunch of comments that were outdated or just plain incorrect. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9494 Test Plan: Ran `make check` and the crash test script for a while. Reviewed By: riversand963 Differential Revision: D33971666 Pulled By: ltamasi fbshipit-source-id: fda52faac7783041126e4f8dec0fe01bdcadf65a |

3 years ago |

|

|

255aefb628 |

Add filename to several Corruption messages (#9239)

Summary: This change adds the filename of the offending filen to several place that produce Status objects with code `kCorruption`. This is not an attempt to have every Corruption message in the codebase extended with the filename, but it is a start. The motivation for the change was to quickly diagnose which file is corrupted when a large database is openend and there is not option to copy it offsite for analysis, run strace or install the ldb tool. In the particular case in question, the error message improved from a mere ``` Corruption: checksum mismatch ``` to ``` Corruption: checksum mismatch in file /path/to/db/engine-rocksdb/MANIFEST-000171 ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9239 Reviewed By: jay-zhuang Differential Revision: D33237742 Pulled By: riversand963 fbshipit-source-id: bd42559cfbf786a0a674d091671d1a2bf07bdd31 |

3 years ago |

|

|

88875df821 |

File temperature information should be preserved when restart the DB (#9242)

Summary: Fix a bug that causes file temperature not preserved after DB is restarted, or options.max_manifest_file_size is hit. Also, pass temperature information to NewRandomAccessFile() to allow users to hack a solution where they don't preserve tiering information. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9242 Test Plan: Add a unit test that would fail without the fix. Reviewed By: jay-zhuang Differential Revision: D32818150 fbshipit-source-id: 36aa3f148c60107f7b8e9d65b63b039f9e1a1eec |

3 years ago |

|

|

937fbcbddc |

Track per-SST user-defined timestamp information in MANIFEST (#9092)

Summary: Track per-SST user-defined timestamp information in MANIFEST https://github.com/facebook/rocksdb/issues/8957 Rockdb has supported user-defined timestamp feature. Application can specify a timestamp when writing each k-v pair. When data flush from memory to disk file called SST files, file creation activity will commit to MANIFEST. This commit is for tracking timestamp info in the MANIFEST for each file. The changes involved are as follows: 1) Track max/min timestamp in FileMetaData, and fix invoved codes. 2) Add NewFileCustomTag::kMinTimestamp and NewFileCustomTag::kMinTimestamp in NewFileCustomTag ( in the kNewFile4 part ), and support invoved codes such as VersionEdit Encode and Decode etc. 3) Add unit test code for VersionEdit EncodeDecodeNewFile4, and fix invoved test codes. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9092 Reviewed By: ajkr, akankshamahajan15 Differential Revision: D32252323 Pulled By: riversand963 fbshipit-source-id: d2642898d6e3ad1fef0eb866b98045408bd4e162 |

3 years ago |

|

|

a2b9be42b6 |

Try to start TTL earlier with kMinOverlappingRatio is used (#8749)

Summary: Right now, when options.ttl is set, compactions are triggered around the time when TTL is reached. This might cause extra compactions which are often bursty. This commit tries to mitigate it by picking those files earlier in normal compaction picking process. This is only implemented using kMinOverlappingRatio with Leveled compaction as it is the default value and it is more complicated to change other styles. When a file is aged more than ttl/2, RocksDB starts to boost the compaction priority of files in normal compaction picking process, and hope by the time TTL is reached, very few extra compaction is needed. In order for this to work, another change is made: during a compaction, if an output level file is older than ttl/2, cut output files based on original boundary (if it is not in the last level). This is to make sure that after an old file is moved to the next level, and new data is merged from the upper level, the new data falling into this range isn't reset with old timestamp. Without this change, in many cases, most files from one level will keep having old timestamp, even if they have newer data and we stuck in it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8749 Test Plan: Add a unit test to test the boosting logic. Will add a unit test to test it end-to-end. Reviewed By: jay-zhuang Differential Revision: D30735261 fbshipit-source-id: 503c2d89250b22911eb99e72b379be154de3428e |

3 years ago |

|

|

e970248602 |

Add support for building on s390x platform (#8962)

Summary: This PR adds support for building on s390x including updating travis CI. It uses the previous work in https://github.com/facebook/rocksdb/pull/6168 and adds some more changes to get all current tests (make check and jni tests) to pass. The tests were run with snappy, lz4, bzip2 and zstd all compiled in. There are a few pieces still needed to get the travis build working that I don't think I can do. adamretter is this something you could help with? 1. A prebuilt https://rocksdb-deps.s3-us-west-2.amazonaws.com/cmake/cmake-3.14.5-Linux-s390x.deb package 2. A https://hub.docker.com/r/evolvedbinary/rocksjava s390x image Not sure if there is more required for travis. Happy to help in any way I can. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8962 Reviewed By: mrambacher Differential Revision: D31802198 Pulled By: pdillinger fbshipit-source-id: 683511466fa6b505f85ba5a9964a268c6151f0c2 |

3 years ago |

|

|

7cc52cd8f5 |

Update HISTORY for PR 8994 (#9017)

Summary: Also, expand on/clarify a comment in `VersionStorageInfoTest`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9017 Reviewed By: riversand963 Differential Revision: D31566130 Pulled By: ltamasi fbshipit-source-id: 1d30c7af084c4de7b2030bc6c768838d65746010 |

3 years ago |

|

|

3e1bf771a3 |

Make it possible to force the garbage collection of the oldest blob files (#8994)

Summary: The current BlobDB garbage collection logic works by relocating the valid blobs from the oldest blob files as they are encountered during compaction, and cleaning up blob files once they contain nothing but garbage. However, with sufficiently skewed workloads, it is theoretically possible to end up in a situation when few or no compactions get scheduled for the SST files that contain references to the oldest blob files, which can lead to increased space amp due to the lack of GC. In order to efficiently handle such workloads, the patch adds a new BlobDB configuration option called `blob_garbage_collection_force_threshold`, which signals to BlobDB to schedule targeted compactions for the SST files that keep alive the oldest batch of blob files if the overall ratio of garbage in the given blob files meets the threshold *and* all the given blob files are eligible for GC based on `blob_garbage_collection_age_cutoff`. (For example, if the new option is set to 0.9, targeted compactions will get scheduled if the sum of garbage bytes meets or exceeds 90% of the sum of total bytes in the oldest blob files, assuming all affected blob files are below the age-based cutoff.) The net result of these targeted compactions is that the valid blobs in the oldest blob files are relocated and the oldest blob files themselves cleaned up (since *all* SST files that rely on them get compacted away). These targeted compactions are similar to periodic compactions in the sense that they force certain SST files that otherwise would not get picked up to undergo compaction and also in the sense that instead of merging files from multiple levels, they target a single file. (Note: such compactions might still include neighboring files from the same level due to the need of having a "clean cut" boundary but they never include any files from any other level.) This functionality is currently only supported with the leveled compaction style and is inactive by default (since the default value is set to 1.0, i.e. 100%). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8994 Test Plan: Ran `make check` and tested using `db_bench` and the stress/crash tests. Reviewed By: riversand963 Differential Revision: D31489850 Pulled By: ltamasi fbshipit-source-id: 44057d511726a0e2a03c5d9313d7511b3f0c4eab |

3 years ago |

|

|

cbb3b25915 |

Print blob file checksums as hex (#8437)

Summary: Currently, blob file checksums are incorrectly dumped as raw bytes in the `ldb manifest_dump` output (i.e. they are not printed as hex). The patch fixes this and also updates some test cases to reflect that the checksum value field in `BlobFileAddition` and `SharedBlobFileMetaData` contains the raw checksum and not a hex string. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8437 Test Plan: `make check` Tested using `ldb manifest_dump` Reviewed By: akankshamahajan15 Differential Revision: D29284170 Pulled By: ltamasi fbshipit-source-id: d11cfb3435b14cd73c8a3d3eb14fa0f9fa1d2228 |

4 years ago |

|

|

d5bd0039b9 |

Rename ImmutableOptions variables (#8409)

Summary: This is the next part of the ImmutableOptions cleanup. After changing the use of ImmutableCFOptions to ImmutableOptions, there were places in the code that had did something like "ImmutableOptions* immutable_cf_options", where "cf" referred to the "old" type. This change simply renames the variables to match the current type. No new functionality is introduced. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8409 Reviewed By: pdillinger Differential Revision: D29166248 Pulled By: mrambacher fbshipit-source-id: 96de97f8e743f5c5160f02246e3ed8269556dc6f |

4 years ago |

|

|

281ac9c89e |

Add CreateFrom methods to Env/FileSystem (#8174)

Summary: - Added CreateFromString method to Env and FilesSystem to replace LoadEnv/Load. This method/signature is a precursor to making these classes extend Customizable. - Added CreateFromSystem to Env. This method standardizes creating an Env from the environment variables. Previously, some places would check TEST_ENV_URI and others would also check TEST_FS_URI. Now the code is more command/standardized. - Added CreateFromFlags to Env. These method allows Env to be create from string options (such as GFLAGS options) in a more standard way. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8174 Reviewed By: zhichao-cao Differential Revision: D28999603 Pulled By: mrambacher fbshipit-source-id: 88e6911e7e91f908458a7fe10a20e93ecbc275fb |

4 years ago |

|

|

f44e69c64a |

Use DbSessionId as cache key prefix when secondary cache is enabled (#8360)

Summary: Currently, we either use the file system inode or a monotonically incrementing runtime ID as the block cache key prefix. However, if we use a monotonically incrementing runtime ID (in the case that the file system does not support inode id generation), in some cases, it cannot ensure uniqueness (e.g., we have secondary cache migrated from host to host). We use DbSessionID (20 bytes) + current file number (at most 10 bytes) as the new cache block key prefix when the secondary cache is enabled. So can accommodate scenarios such as transfer of cache state across hosts. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8360 Test Plan: add the test to lru_cache_test Reviewed By: pdillinger Differential Revision: D29006215 Pulled By: zhichao-cao fbshipit-source-id: 6cff686b38d83904667a2bd39923cd030df16814 |

4 years ago |

|

|

d83542ca83 |

Make it possible to apply only a subrange of table property collectors (#8298)

Summary: This patch does two things: 1) Introduces some aliases in order to eliminate/prevent long-winded type names w/r/t the internal table property collectors (see e.g. `std::vector<std::unique_ptr<IntTblPropCollectorFactory>>`). 2) Makes it possible to apply only a subrange of table property collectors during table building by turning `TableBuilderOptions::int_tbl_prop_collector_factories` from a pointer to a `vector` into a range (i.e. a pair of iterators). Rationale: I plan to introduce a BlobDB related table property collector, which should only be applied during table creation if blob storage is enabled at the moment (which can be changed dynamically). This change will make it possible to include/ exclude the BlobDB related collector as needed without having to introduce a second `vector` of collectors in `ColumnFamilyData` with pretty much the same contents. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8298 Test Plan: `make check` Reviewed By: jay-zhuang Differential Revision: D28430910 Pulled By: ltamasi fbshipit-source-id: a81d28f2c59495865300f43deb2257d2e6977c8e |

4 years ago |

|

|

8948dc8524 |

Make ImmutableOptions struct that inherits from ImmutableCFOptions and ImmutableDBOptions (#8262)

Summary: The ImmutableCFOptions contained a bunch of fields that belonged to the ImmutableDBOptions. This change cleans that up by introducing an ImmutableOptions struct. Following the pattern of Options struct, this class inherits from the DB and CFOption structs (of the Immutable form). Only one structural change (the ImmutableCFOptions::fs was changed to a shared_ptr from a raw one) is in this PR. All of the other changes involve moving the member variables from the ImmutableCFOptions into the ImmutableOptions and changing member variables or function parameters as required for compilation purposes. Follow-on PRs may do a further clean-up of the code, such as renaming variables (such as "ImmutableOptions cf_options") and potentially eliminating un-needed function parameters (there is no longer a need to pass both an ImmutableDBOptions and an ImmutableOptions to a function). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8262 Reviewed By: pdillinger Differential Revision: D28226540 Pulled By: mrambacher fbshipit-source-id: 18ae71eadc879dedbe38b1eb8e6f9ff5c7147dbf |

4 years ago |

|

|

d2ca04e3ed |

Add more LSM info to FilterBuildingContext (#8246)

Summary: Add `num_levels`, `is_bottommost`, and table file creation `reason` to `FilterBuildingContext`, in anticipation of more powerful Bloom-like filter support. To support this, added `is_bottommost` and `reason` to `TableBuilderOptions`, which allowed removing `reason` parameter from `rocksdb::BuildTable`. I attempted to remove `skip_filters` from `TableBuilderOptions`, because filter construction decisions should arise from options, not one-off parameters. I could not completely remove it because the public API for SstFileWriter takes a `skip_filters` parameter, and translating this into an option change would mean awkwardly replacing the table_factory if it is BlockBasedTableFactory with new filter_policy=nullptr option. I marked this public skip_filters option as deprecated because of this oddity. (skip_filters on the read side probably makes sense.) At least `skip_filters` is now largely hidden for users of `TableBuilderOptions` and is no longer used for implementing the optimize_filters_for_hits option. Bringing the logic for that option closer to handling of FilterBuildingContext makes it more obvious that hese two are using the same notion of "bottommost." (Planned: configuration options for Bloom-like filters that generalize `optimize_filters_for_hits`) Recommended follow-up: Try to get away from "bottommost level" naming of things, which is inaccurate (see VersionStorageInfo::RangeMightExistAfterSortedRun), and move to "bottommost run" or just "bottommost." Pull Request resolved: https://github.com/facebook/rocksdb/pull/8246 Test Plan: extended an existing unit test to exercise and check various filter building contexts. Also, existing tests for optimize_filters_for_hits validate some of the "bottommost" handling, which is now closely connected to FilterBuildingContext::is_bottommost through TableBuilderOptions::is_bottommost Reviewed By: mrambacher Differential Revision: D28099346 Pulled By: pdillinger fbshipit-source-id: 2c1072e29c24d4ac404c761a7b7663292372600a |

4 years ago |

|

|

85becd94c1 |

Refactor: use TableBuilderOptions to reduce parameter lists (#8240)

Summary: Greatly reduced the not-quite-copy-paste giant parameter lists of rocksdb::NewTableBuilder, rocksdb::BuildTable, BlockBasedTableBuilder::Rep ctor, and BlockBasedTableBuilder ctor. Moved weird separate parameter `uint32_t column_family_id` of TableFactory::NewTableBuilder into TableBuilderOptions. Re-ordered parameters to TableBuilderOptions ctor, so that `uint64_t target_file_size` is not randomly placed between uint64_t timestamps (was easy to mix up). Replaced a couple of fields of BlockBasedTableBuilder::Rep with a FilterBuildingContext. The motivation for this change is making it easier to pass along more data into new fields in FilterBuildingContext (follow-up PR). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8240 Test Plan: ASAN make check Reviewed By: mrambacher Differential Revision: D28075891 Pulled By: pdillinger fbshipit-source-id: fddb3dbb8260a0e8bdcbb51b877ebabf9a690d4f |

4 years ago |

|

|

5025c7ec09 |

version_set_test.cc: remove a redundent obj copy (#7880)

Summary: Remove redundant obj copy Pull Request resolved: https://github.com/facebook/rocksdb/pull/7880 Reviewed By: akankshamahajan15 Differential Revision: D26921119 Pulled By: riversand963 fbshipit-source-id: f227da688b067870a069e728a67799a8a95fee99 |

4 years ago |

|

|

c20a7cd6c7 |

Apply `sample_for_compression` to all block-based tables (#8105)

Summary: Previously it only applied to block-based tables generated by flush. This restriction was undocumented and blocked a new use case. Now compression sampling applies to all block-based tables we generate when it is enabled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8105 Test Plan: new unit test Reviewed By: riversand963 Differential Revision: D27317275 Pulled By: ajkr fbshipit-source-id: cd9fcc5178d6515e8cb59c6facb5ac01893cb5b0 |

4 years ago |

|

|

3dff28cf9b |

Use SystemClock* instead of std::shared_ptr<SystemClock> in lower level routines (#8033)

Summary: For performance purposes, the lower level routines were changed to use a SystemClock* instead of a std::shared_ptr<SystemClock>. The shared ptr has some performance degradation on certain hardware classes. For most of the system, there is no risk of the pointer being deleted/invalid because the shared_ptr will be stored elsewhere. For example, the ImmutableDBOptions stores the Env which has a std::shared_ptr<SystemClock> in it. The SystemClock* within the ImmutableDBOptions is essentially a "short cut" to gain access to this constant resource. There were a few classes (PeriodicWorkScheduler?) where the "short cut" property did not hold. In those cases, the shared pointer was preserved. Using db_bench readrandom perf_level=3 on my EC2 box, this change performed as well or better than 6.17: 6.17: readrandom : 28.046 micros/op 854902 ops/sec; 61.3 MB/s (355999 of 355999 found) 6.18: readrandom : 32.615 micros/op 735306 ops/sec; 52.7 MB/s (290999 of 290999 found) PR: readrandom : 27.500 micros/op 871909 ops/sec; 62.5 MB/s (367999 of 367999 found) (Note that the times for 6.18 are prior to revert of the SystemClock). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8033 Reviewed By: pdillinger Differential Revision: D27014563 Pulled By: mrambacher fbshipit-source-id: ad0459eba03182e454391b5926bf5cdd45657b67 |

4 years ago |

|

|

64517d184a |

Make secondary instance use ManifestTailer (#7998)

Summary: This PR - adds a class `ManifestTailer` that inherits from `VersionEditHandlerPointInTime`. `ManifestTailer::Iterate()` can be called multiple times to tail the primary instance's MANIFEST and apply the changes to the secondary, - updates the implementation of `ReactiveVersionSet::ReadAndApply` to use this class, - removes unused code in version_set.cc, - updates existing tests, e.g. removing deleted sync points from unit tests, - adds a new test to address the bug in https://github.com/facebook/rocksdb/issues/7815. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7998 Test Plan: make check Existing and newly-added tests in version_set_test.cc and db_secondary_test.cc Reviewed By: jay-zhuang Differential Revision: D26926641 Pulled By: riversand963 fbshipit-source-id: 8d4dd15db0ba863c213f743e33b5a207e948c980 |

4 years ago |

|

|

4a09d632c4 |

Remove Legacy and Custom FileWrapper classes from header files (#7851)

Summary: Removed the uses of the Legacy FileWrapper classes from the source code. The wrappers were creating an additional layer of indirection/wrapping, as the Env already has a FileSystem. Moved the Custom FileWrapper classes into the CustomEnv, as these classes are really for the private use the the CustomEnv class. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7851 Reviewed By: anand1976 Differential Revision: D26114816 Pulled By: mrambacher fbshipit-source-id: db32840e58d969d3a0fa6c25aaf13d6dcdc74150 |

4 years ago |

|

|

12f1137355 |

Add a SystemClock class to capture the time functions of an Env (#7858)

Summary: Introduces and uses a SystemClock class to RocksDB. This class contains the time-related functions of an Env and these functions can be redirected from the Env to the SystemClock. Many of the places that used an Env (Timer, PerfStepTimer, RepeatableThread, RateLimiter, WriteController) for time-related functions have been changed to use SystemClock instead. There are likely more places that can be changed, but this is a start to show what can/should be done. Over time it would be nice to migrate most (if not all) of the uses of the time functions from the Env to the SystemClock. There are several Env classes that implement these functions. Most of these have not been converted yet to SystemClock implementations; that will come in a subsequent PR. It would be good to unify many of the Mock Timer implementations, so that they behave similarly and be tested similarly (some override Sleep, some use a MockSleep, etc). Additionally, this change will allow new methods to be introduced to the SystemClock (like https://github.com/facebook/rocksdb/issues/7101 WaitFor) in a consistent manner across a smaller number of classes. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7858 Reviewed By: pdillinger Differential Revision: D26006406 Pulled By: mrambacher fbshipit-source-id: ed10a8abbdab7ff2e23d69d85bd25b3e7e899e90 |

4 years ago |

|

|

02418194d7 |

Add more tests for assert status checked (#7524)

Summary: Added 10 more tests that pass the ASSERT_STATUS_CHECKED test. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7524 Reviewed By: akankshamahajan15 Differential Revision: D24323093 Pulled By: ajkr fbshipit-source-id: 28d4106d0ca1740c3b896c755edf82d504b74801 |

4 years ago |

|

|

b1ee191405 |

Fix memory leak for ColumnFamily drop with live iterator (#7749)

Summary: Uncommon bug seen by ASAN with ColumnFamilyTest.LiveIteratorWithDroppedColumnFamily, if the last two references to a ColumnFamilyData are both SuperVersions (during InstallSuperVersion). The fix is to use UnrefAndTryDelete even in SuperVersion::Cleanup but with a parameter to avoid re-entering Cleanup on the same SuperVersion being cleaned up. ColumnFamilyData::Unref is considered unsafe so removed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7749 Test Plan: ./column_family_test --gtest_filter=*LiveIter* --gtest_repeat=100 Reviewed By: jay-zhuang Differential Revision: D25354304 Pulled By: pdillinger fbshipit-source-id: e78f3a3f67c40013b8432f31d0da8bec55c5321c |

4 years ago |

|

|

80159f6e0b |

Carry over min_log_number_to_keep_2pc in new MANIFEST (#7747)

Summary: When two phase commit is enabled, `VersionSet::min_log_number_to_keep_2pc` is set during flush. But when a new MANIFEST is created, the `min_log_number_to_keep_2pc` is not carried over to the new MANIFEST. So if a new MANIFEST is created and then DB is reopened, the `min_log_number_to_keep_2pc` will be lost. This may cause DB recovery errors. The bug is reproduced in a new unit test in `version_set_test.cc`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7747 Test Plan: The new unit test in `version_set_test.cc` should pass. Reviewed By: jay-zhuang Differential Revision: D25350661 Pulled By: cheng-chang fbshipit-source-id: eee890d5b19f15769069670692e270ae31044ece |

4 years ago |

|

|

eee0af9af1 |

Add full_history_ts_low to column family (#7740)

Summary: Following https://github.com/facebook/rocksdb/issues/7655 and https://github.com/facebook/rocksdb/issues/7657, this PR adds `full_history_ts_low_` to `ColumnFamilyData`. `ColumnFamilyData::full_history_ts_low_` will be used to create `FlushJob` and `CompactionJob`. `ColumnFamilyData::full_history_ts_low` is persisted to the MANIFEST file. An application can only increase its value. Consider the following case: > > The database has a key at ts=950. `full_history_ts_low` is first set to 1000, and then a GC is triggered > and cleans up all data older than 1000. If the application sets `full_history_ts_low` to 900 afterwards, > and tries to read at ts=960, the key at 950 is not seen. From the perspective of the read, the result > is hard to reason. For simplicity, we just do now allow decreasing full_history_ts_low for now. > During recovery, the value of `full_history_ts_low` is restored for each column family if applicable. Note that version edits in the MANIFEST file for the same column family may have `full_history_ts_low` unsorted due to the potential interleaving of `LogAndApply` calls. Only the max will be used to restore the state of the column family. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7740 Test Plan: make check Reviewed By: ltamasi Differential Revision: D25296217 Pulled By: riversand963 fbshipit-source-id: 24acda1df8262cd7cfdc6ce7b0ec56438abe242a |

4 years ago |

|

|

8b6b6aeb1a |

Refactor with VersionEditHandler (#6581)

Summary: Added a few classes in the same class hierarchy to remove code duplication and refactor the logic of reading and processing MANIFEST files. New classes are as follows. ``` class VersionEditHandlerBase; class ListColumnFamiliesHandler : VersionEditHandlerBase; class FileChecksumRetriever : VersionEditHandlerBase; class DumpManifestHandler : VersionEditHandler; ``` Classes that already existed before this PR are as follows. ``` class VersionEditHandler : VersionEditHandlerBase; ``` With these classes, refactored functions: `VersionSet::Recover()`, `VersionSet::ListColumnFamilies()`, `VersionSet::DumpManifest()`, `GetFileChecksumFromManifest()`. Test Plan (devserver): ``` make check COMPILE_WITH_ASAN=1 make check ``` These refactored code, especially recovery-related logic, will be tested intensively by all existing unit tests and stress tests. For example, run ``` make crash_test ``` Verified 3 successful runs on devserver. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6581 Reviewed By: ajkr Differential Revision: D20616217 Pulled By: riversand963 fbshipit-source-id: 048c7743aa4be2623ccd0cc3e61c0027e604e78b |

4 years ago |

|

|

1e40696dd1 |

Track WAL in MANIFEST: LogAndApply WAL events to MANIFEST (#7601)

Summary: When a WAL is synced, an edit is written to MANIFEST. After flushing memtables, the obsoleted WALs are piggybacked to MANIFEST while writing the new L0 files to MANIFEST. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7601 Test Plan: `track_and_verify_wals_in_manifest` is enabled by default for all tests extending `DBBasicTest`, and in db_stress_test. Unit test `wal_edit_test`, `version_edit_test`, and `version_set_test` are also updated. Watch all tests to pass. Reviewed By: ltamasi Differential Revision: D24553957 Pulled By: cheng-chang fbshipit-source-id: 66a569ff1bdced38e22900bd240b73113906e040 |

4 years ago |

|

|

394210f280 |

Remove unused includes (#7604)

Summary: This is a PR generated **semi-automatically** by an internal tool to remove unused includes and `using` statements. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7604 Test Plan: make check Reviewed By: ajkr Differential Revision: D24579392 Pulled By: riversand963 fbshipit-source-id: c4bfa6c6b08da1de186690d37eb73d8fff45aecd |

4 years ago |

|

|

f35f7f2704 |

Fix many tests to run with MEM_ENV and ENCRYPTED_ENV; Introduce a MemoryFileSystem class (#7566)

Summary: This PR does a few things: 1. The MockFileSystem class was split out from the MockEnv. This change would theoretically allow a MockFileSystem to be used by other Environments as well (if we created a means of constructing one). The MockFileSystem implements a FileSystem in its entirety and does not rely on any Wrapper implementation. 2. Make the RocksDB test suite work when MOCK_ENV=1 and ENCRYPTED_ENV=1 are set. To accomplish this, a few things were needed: - The tests that tried to use the "wrong" environment (Env::Default() instead of env_) were updated - The MockFileSystem was changed to support the features it was missing or mishandled (such as recursively deleting files in a directory or supporting renaming of a directory). 3. Updated the test framework to have a ROCKSDB_GTEST_SKIP macro. This can be used to flag tests that are skipped. Currently, this defaults to doing nothing (marks the test as SUCCESS) but will mark the tests as SKIPPED when RocksDB is upgraded to a version of gtest that supports this (gtest-1.10). I have run a full "make check" with MEM_ENV, ENCRYPTED_ENV, both, and neither under both MacOS and RedHat. A few tests were disabled/skipped for the MEM/ENCRYPTED cases. The error_handler_fs_test fails/hangs for MEM_ENV (presumably a timing problem) and I will introduce another PR/issue to track that problem. (I will also push a change to disable those tests soon). There is one more test in DBTest2 that also fails which I need to investigate or skip before this PR is merged. Theoretically, this PR should also allow the test suite to run against an Env loaded from the registry, though I do not have one to try it with currently. Finally, once this is accepted, it would be nice if there was a CircleCI job to run these tests on a checkin so this effort does not become stale. I do not know how to do that, so if someone could write that job, it would be appreciated :) Pull Request resolved: https://github.com/facebook/rocksdb/pull/7566 Reviewed By: zhichao-cao Differential Revision: D24408980 Pulled By: jay-zhuang fbshipit-source-id: 911b1554a4d0da06fd51feca0c090a4abdcb4a5f |

4 years ago |

|

|

1b224324b5 |

Track WAL in MANIFEST: persist WALs to and recover WALs from MANIFEST (#7256)

Summary: This PR makes it able to `LogAndApply` `VersionEdit`s related to WALs, and also be able to `Recover` from MANIFEST with WAL related `VersionEdit`s. The `VersionEdit`s related to WAL are treated similarly as those related to column family operations, they are not applied to versions, but can be in a commit group. Mixing WAL related `VersionEdit`s with other types of edits will make logic in `ProcessManifestWrite` more complicated, so `VersionEdit`s related to WAL can either be WAL additions or deletions, like column family add and drop. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7256 Test Plan: a set of unit tests are added in `version_set_test.cc` Reviewed By: riversand963 Differential Revision: D23123238 Pulled By: cheng-chang fbshipit-source-id: 246be2ed4744fd03fa2738aba408aaa611d0379c |

4 years ago |

|

|

1f9f630b27 |

Store FileSystemPtr object that contains FileSystem ptr (#7180)

Summary:

As part of the IOTracing project, this PR

1. Caches "FileSystemPtr" object(wrapper class that returns file system pointer based on tracing enabled) instead of "FileSystem" pointer.

2. FileSystemPtr object is created using FileSystem pointer and IOTracer

pointer.

3. IOTracer shared_ptr is created in DBImpl and it is passed to different classes through constructor.

4. When tracing is enabled through DB::StartIOTrace, FileSystemPtr

returns FileSystemTracingWrapper pointer for tracing purpose and when

it is disabled underlying FileSystem pointer is returned.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/7180

Test Plan:

make check -j64

COMPILE_WITH_TSAN=1 make check -j64

Reviewed By: anand1976

Differential Revision: D22987117

Pulled By: akankshamahajan15

fbshipit-source-id: 6073617e4c2d5bc363914f3a1f55ae3b0a58fbf1

|

4 years ago |

|

|

e367bc7f4b |

Clean up blob files based on the linked SST set (#7001)

Summary: The earlier `VersionBuilder` code only cleaned up blob files that were marked as entirely consisting of garbage using `VersionEdits` with `BlobFileGarbage`. This covers the cases when table files go through regular compaction, where we iterate through the KVs and thus have an opportunity to calculate the amount of garbage (that is, most cases). However, it does not help when table files are simply dropped (e.g. deletion compactions or the `DeleteFile` API). To deal with such cases, the patch adds logic that cleans up all blob files at the head of the list until the first one with linked SSTs is found. (As an example, let's assume we have blob files with numbers 1..10, and the first one with any linked SSTs is number 8. This means that SSTs in the `Version` only rely on blob files with numbers >= 8, and thus 1..7 are no longer needed.) The code change itself is pretty small; however, changing the logic like this necessitated changes to some tests that have been added recently (namely to the ones that use blob files in isolation, i.e. without any table files referring to them). Some of these cases were fixed by bypassing `VersionBuilder` altogether in order to keep the tests simple (which actually makes them more proper unit tests as well), while the `VersionBuilder` unit tests were fixed by adding dummy table files to the test cases as needed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7001 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D22119474 Pulled By: ltamasi fbshipit-source-id: c6547141355667d4291d9661d6518eb741e7b54a |

5 years ago |