Tag:

Branch:

Tree:

8142223b1b

main

oxigraph-8.1.1

oxigraph-8.3.2

oxigraph-main

${ noResults }

25 Commits (8142223b1b32527b00cb3b6e9fb277734bf352d9)

| Author | SHA1 | Message | Date |

|---|---|---|---|

|

|

769b156e65 |

Remove customized naming from InternalKeyComparator (#10343)

Summary: InternalKeyComparator is a thin wrapper around user comparator. Storing a string for name is relatively expensive to this small wrapper for both CPU and memory usage. Try to remove it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10343 Test Plan: Run existing tests Reviewed By: ajkr Differential Revision: D37772469 fbshipit-source-id: d2d106a8d022193058fd7f6b220108e3d94aca34 |

2 years ago |

|

|

cc23b46da1 |

Support using ZDICT_finalizeDictionary to generate zstd dictionary (#9857)

Summary:

An untrained dictionary is currently simply the concatenation of several samples. The ZSTD API, ZDICT_finalizeDictionary(), can improve such a dictionary's effectiveness at low cost. This PR changes how dictionary is created by calling the ZSTD ZDICT_finalizeDictionary() API instead of creating raw content dictionary (when max_dict_buffer_bytes > 0), and pass in all buffered uncompressed data blocks as samples.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9857

Test Plan:

#### db_bench test for cpu/memory of compression+decompression and space saving on synthetic data:

Set up: change the parameter [here](

|

3 years ago |

|

|

cff0d1e8e6 |

New backup meta schema, with file temperatures (#9660)

Summary: The primary goal of this change is to add support for backing up and restoring (applying on restore) file temperature metadata, without committing to either the DB manifest or the FS reported "current" temperatures being exclusive "source of truth". To achieve this goal, we need to add temperature information to backup metadata, which requires updated backup meta schema. Fortunately I prepared for this in https://github.com/facebook/rocksdb/issues/8069, which began forward compatibility in version 6.19.0 for this kind of schema update. (Previously, backup meta schema was not extensible! Making this schema update public will allow some other "nice to have" features like taking backups with hard links, and avoiding crc32c checksum computation when another checksum is already available.) While schema version 2 is newly public, the default schema version is still 1. Until we change the default, users will need to set to 2 to enable features like temperature data backup+restore. New metadata like temperature information will be ignored with a warning in versions before this change and since 6.19.0. The metadata is considered ignorable because a functioning DB can be restored without it. Some detail: * Some renaming because "future schema" is now just public schema 2. * Initialize some atomics in TestFs (linter reported) * Add temperature hint support to SstFileDumper (used by BackupEngine) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9660 Test Plan: related unit test majorly updated for the new functionality, including some shared testing support for tracking temperatures in a FS. Some other tests and testing hooks into production code also updated for making the backup meta schema change public. Reviewed By: ajkr Differential Revision: D34686968 Pulled By: pdillinger fbshipit-source-id: 3ac1fa3e67ee97ca8a5103d79cc87d872c1d862a |

3 years ago |

|

|

babe56ddba |

Add rate limiter priority to ReadOptions (#9424)

Summary: Users can set the priority for file reads associated with their operation by setting `ReadOptions::rate_limiter_priority` to something other than `Env::IO_TOTAL`. Rate limiting `VerifyChecksum()` and `VerifyFileChecksums()` is the motivation for this PR, so it also includes benchmarks and minor bug fixes to get that working. `RandomAccessFileReader::Read()` already had support for rate limiting compaction reads. I changed that rate limiting to be non-specific to compaction, but rather performed according to the passed in `Env::IOPriority`. Now the compaction read rate limiting is supported by setting `rate_limiter_priority = Env::IO_LOW` on its `ReadOptions`. There is no default value for the new `Env::IOPriority` parameter to `RandomAccessFileReader::Read()`. That means this PR goes through all callers (in some cases multiple layers up the call stack) to find a `ReadOptions` to provide the priority. There are TODOs for cases I believe it would be good to let user control the priority some day (e.g., file footer reads), and no TODO in cases I believe it doesn't matter (e.g., trace file reads). The API doc only lists the missing cases where a file read associated with a provided `ReadOptions` cannot be rate limited. For cases like file ingestion checksum calculation, there is no API to provide `ReadOptions` or `Env::IOPriority`, so I didn't count that as missing. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9424 Test Plan: - new unit tests - new benchmarks on ~50MB database with 1MB/s read rate limit and 100ms refill interval; verified with strace reads are chunked (at 0.1MB per chunk) and spaced roughly 100ms apart. - setup command: `./db_bench -benchmarks=fillrandom,compact -db=/tmp/testdb -target_file_size_base=1048576 -disable_auto_compactions=true -file_checksum=true` - benchmarks command: `strace -ttfe pread64 ./db_bench -benchmarks=verifychecksum,verifyfilechecksums -use_existing_db=true -db=/tmp/testdb -rate_limiter_bytes_per_sec=1048576 -rate_limit_bg_reads=1 -rate_limit_user_ops=true -file_checksum=true` - crash test using IO_USER priority on non-validation reads with https://github.com/facebook/rocksdb/issues/9567 reverted: `python3 tools/db_crashtest.py blackbox --max_key=1000000 --write_buffer_size=524288 --target_file_size_base=524288 --level_compaction_dynamic_level_bytes=true --duration=3600 --rate_limit_bg_reads=true --rate_limit_user_ops=true --rate_limiter_bytes_per_sec=10485760 --interval=10` Reviewed By: hx235 Differential Revision: D33747386 Pulled By: ajkr fbshipit-source-id: a2d985e97912fba8c54763798e04f006ccc56e0c |

3 years ago |

|

|

036bbab6f7 |

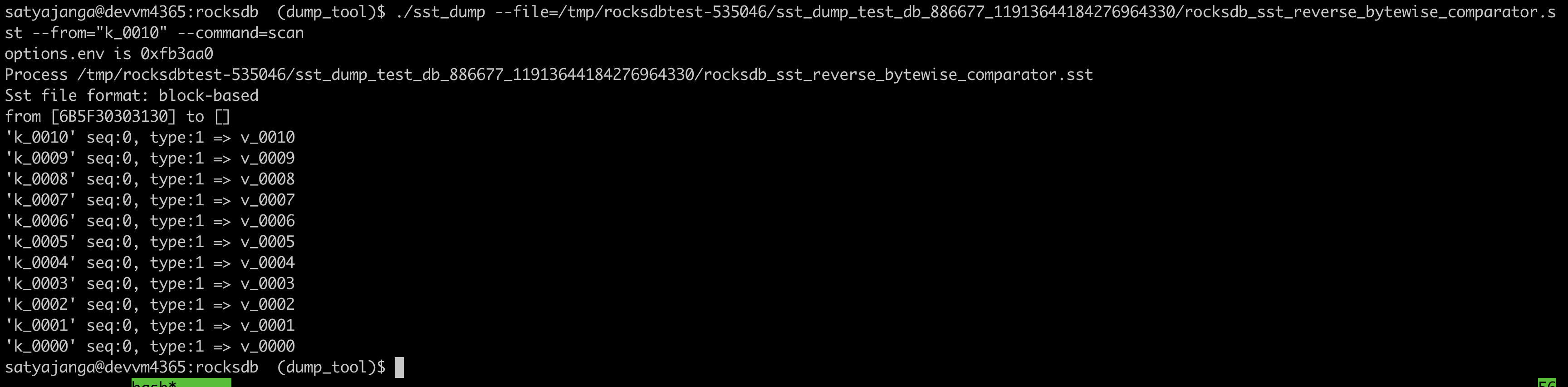

Use the comparator from the sst file table properties in sst_dump_tool (#9491)

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536 |

3 years ago |

|

|

fc9d4071f0 |

Fast path for detecting unchanged prefix_extractor (#9407)

Summary: Fixes a major performance regression in 6.26, where extra CPU is spent in SliceTransform::AsString when reads involve a prefix_extractor (Get, MultiGet, Seek). Common case performance is now better than 6.25. This change creates a "fast path" for verifying that the current prefix extractor is unchanged and compatible with what was used to generate a table file. This fast path detects the common case by pointer comparison on the current prefix_extractor and a "known good" prefix extractor (if applicable) that is saved at the time the table reader is opened. The "known good" prefix extractor is saved as another shared_ptr copy (in an existing field, however) to ensure the pointer is not recycled. When the prefix_extractor has changed to a different instance but same compatible configuration (rare, odd), performance is still a regression compared to 6.25, but this is likely acceptable because of the oddity of such a case. The performance of incompatible prefix_extractor is essentially unchanged. Also fixed a minor case (ForwardIterator) where a prefix_extractor could be used via a raw pointer after being freed as a shared_ptr, if replaced via SetOptions. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9407 Test Plan: ## Performance Populate DB with `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=10000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12` Running head-to-head comparisons simultaneously with `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=seekrandom -num=10000000 -duration=20 -disable_wal=1 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12` Below each is compared by ops/sec vs. baseline which is version 6.25 (multiple baseline runs because of variable machine load) v6.26: 4833 vs. 6698 (<- major regression!) v6.27: 4737 vs. 6397 (still) New: 6704 vs. 6461 (better than baseline in common case) Disabled fastpath: 4843 vs. 6389 (e.g. if prefix extractor instance changes but is still compatible) Changed prefix size (no usable filter) in new: 787 vs. 5927 Changed prefix size (no usable filter) in new & baseline: 773 vs. 784 Reviewed By: mrambacher Differential Revision: D33677812 Pulled By: pdillinger fbshipit-source-id: 571d9711c461fb97f957378a061b7e7dbc4d6a76 |

3 years ago |

|

|

653c392e47 |

More refactoring ahead of footer & meta changes (#9240)

Summary: I'm working on a new format_version=6 to support context checksum (https://github.com/facebook/rocksdb/issues/9058) and this includes much of the refactoring and test updates to support that change. Test coverage data and manual inspection agree on dead code in block_based_table_reader.cc (removed). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9240 Test Plan: tests enhanced to cover more cases etc. Extreme case performance testing indicates small % regression in fillseq (w/ compaction), though CPU profile etc. doesn't suggest any explanation. There is enhanced correctness checking in Footer::DecodeFrom, but this should be negligible. TEST_TMPDIR=/dev/shm/ ./db_bench -benchmarks=fillseq -memtablerep=vector -allow_concurrent_memtable_write=false -num=30000000 -checksum_type=1 --disable_wal={false,true} (Each is ops/s averaged over 50 runs, run simultaneously with competing configuration for load fairness) Before w/ wal: 454512 After w/ wal: 444820 (-2.1%) Before w/o wal: 1004560 After w/o wal: 998897 (-0.6%) Since this doesn't modify WAL code, one would expect real effects to be larger in w/o wal case. This regression will be corrected in a follow-up PR. Reviewed By: ajkr Differential Revision: D32813769 Pulled By: pdillinger fbshipit-source-id: 444a244eabf3825cd329b7d1b150cddce320862f |

3 years ago |

|

|

dc5de45af8 |

Support readahead during compaction for blob files (#9187)

Summary: The patch adds a new BlobDB configuration option `blob_compaction_readahead_size` that can be used to enable prefetching data from blob files during compaction. This is important when using storage with higher latencies like HDDs or remote filesystems. If enabled, prefetching is used for all cases when blobs are read during compaction, namely garbage collection, compaction filters (when the existing value has to be read from a blob file), and `Merge` (when the value of the base `Put` is stored in a blob file). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9187 Test Plan: Ran `make check` and the stress/crash test. Reviewed By: riversand963 Differential Revision: D32565512 Pulled By: ltamasi fbshipit-source-id: 87be9cebc3aa01cc227bec6b5f64d827b8164f5d |

3 years ago |

|

|

230660be73 |

Improve / clean up meta block code & integrity (#9163)

Summary:

* Checksums are now checked on meta blocks unless specifically

suppressed or not applicable (e.g. plain table). (Was other way around.)

This means a number of cases that were not checking checksums now are,

including direct read TableProperties in Version::GetTableProperties

(fixed in meta_blocks ReadTableProperties), reading any block from

PersistentCache (fixed in BlockFetcher), read TableProperties in

SstFileDumper (ldb/sst_dump/BackupEngine) before table reader open,

maybe more.

* For that to work, I moved the global_seqno+TableProperties checksum

logic to the shared table/ code, because that is used by many utilies

such as SstFileDumper.

* Also for that to work, we have to know when we're dealing with a block

that has a checksum (trailer), so added that capability to Footer based

on magic number, and from there BlockFetcher.

* Knowledge of trailer presence has also fixed a problem where other

table formats were reading blocks including bytes for a non-existant

trailer--and awkwardly kind-of not using them, e.g. no shared code

checking checksums. (BlockFetcher compression type was populated

incorrectly.) Now we only read what is needed.

* Minimized code duplication and differing/incompatible/awkward

abstractions in meta_blocks.{cc,h} (e.g. SeekTo in metaindex block

without parsing block handle)

* Moved some meta block handling code from table_properties*.*

* Moved some code specific to block-based table from shared table/ code

to BlockBasedTable class. The checksum stuff means we can't completely

separate it, but things that don't need to be in shared table/ code

should not be.

* Use unique_ptr rather than raw ptr in more places. (Note: you can

std::move from unique_ptr to shared_ptr.)

Without enhancements to GetPropertiesOfAllTablesTest (see below),

net reduction of roughly 100 lines of code.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9163

Test Plan:

existing tests and

* Enhanced DBTablePropertiesTest.GetPropertiesOfAllTablesTest to verify that

checksums are now checked on direct read of table properties by TableCache

(new test would fail before this change)

* Also enhanced DBTablePropertiesTest.GetPropertiesOfAllTablesTest to test

putting table properties under old meta name

* Also generally enhanced that same test to actually test what it was

supposed to be testing already, by kicking things out of table cache when

we don't want them there.

Reviewed By: ajkr, mrambacher

Differential Revision: D32514757

Pulled By: pdillinger

fbshipit-source-id: 507964b9311d186ae8d1131182290cbd97a99fa9

|

3 years ago |

|

|

d83542ca83 |

Make it possible to apply only a subrange of table property collectors (#8298)

Summary: This patch does two things: 1) Introduces some aliases in order to eliminate/prevent long-winded type names w/r/t the internal table property collectors (see e.g. `std::vector<std::unique_ptr<IntTblPropCollectorFactory>>`). 2) Makes it possible to apply only a subrange of table property collectors during table building by turning `TableBuilderOptions::int_tbl_prop_collector_factories` from a pointer to a `vector` into a range (i.e. a pair of iterators). Rationale: I plan to introduce a BlobDB related table property collector, which should only be applied during table creation if blob storage is enabled at the moment (which can be changed dynamically). This change will make it possible to include/ exclude the BlobDB related collector as needed without having to introduce a second `vector` of collectors in `ColumnFamilyData` with pretty much the same contents. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8298 Test Plan: `make check` Reviewed By: jay-zhuang Differential Revision: D28430910 Pulled By: ltamasi fbshipit-source-id: a81d28f2c59495865300f43deb2257d2e6977c8e |

4 years ago |

|

|

8948dc8524 |

Make ImmutableOptions struct that inherits from ImmutableCFOptions and ImmutableDBOptions (#8262)

Summary: The ImmutableCFOptions contained a bunch of fields that belonged to the ImmutableDBOptions. This change cleans that up by introducing an ImmutableOptions struct. Following the pattern of Options struct, this class inherits from the DB and CFOption structs (of the Immutable form). Only one structural change (the ImmutableCFOptions::fs was changed to a shared_ptr from a raw one) is in this PR. All of the other changes involve moving the member variables from the ImmutableCFOptions into the ImmutableOptions and changing member variables or function parameters as required for compilation purposes. Follow-on PRs may do a further clean-up of the code, such as renaming variables (such as "ImmutableOptions cf_options") and potentially eliminating un-needed function parameters (there is no longer a need to pass both an ImmutableDBOptions and an ImmutableOptions to a function). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8262 Reviewed By: pdillinger Differential Revision: D28226540 Pulled By: mrambacher fbshipit-source-id: 18ae71eadc879dedbe38b1eb8e6f9ff5c7147dbf |

4 years ago |

|

|

d2ca04e3ed |

Add more LSM info to FilterBuildingContext (#8246)

Summary: Add `num_levels`, `is_bottommost`, and table file creation `reason` to `FilterBuildingContext`, in anticipation of more powerful Bloom-like filter support. To support this, added `is_bottommost` and `reason` to `TableBuilderOptions`, which allowed removing `reason` parameter from `rocksdb::BuildTable`. I attempted to remove `skip_filters` from `TableBuilderOptions`, because filter construction decisions should arise from options, not one-off parameters. I could not completely remove it because the public API for SstFileWriter takes a `skip_filters` parameter, and translating this into an option change would mean awkwardly replacing the table_factory if it is BlockBasedTableFactory with new filter_policy=nullptr option. I marked this public skip_filters option as deprecated because of this oddity. (skip_filters on the read side probably makes sense.) At least `skip_filters` is now largely hidden for users of `TableBuilderOptions` and is no longer used for implementing the optimize_filters_for_hits option. Bringing the logic for that option closer to handling of FilterBuildingContext makes it more obvious that hese two are using the same notion of "bottommost." (Planned: configuration options for Bloom-like filters that generalize `optimize_filters_for_hits`) Recommended follow-up: Try to get away from "bottommost level" naming of things, which is inaccurate (see VersionStorageInfo::RangeMightExistAfterSortedRun), and move to "bottommost run" or just "bottommost." Pull Request resolved: https://github.com/facebook/rocksdb/pull/8246 Test Plan: extended an existing unit test to exercise and check various filter building contexts. Also, existing tests for optimize_filters_for_hits validate some of the "bottommost" handling, which is now closely connected to FilterBuildingContext::is_bottommost through TableBuilderOptions::is_bottommost Reviewed By: mrambacher Differential Revision: D28099346 Pulled By: pdillinger fbshipit-source-id: 2c1072e29c24d4ac404c761a7b7663292372600a |

4 years ago |

|

|

85becd94c1 |

Refactor: use TableBuilderOptions to reduce parameter lists (#8240)

Summary: Greatly reduced the not-quite-copy-paste giant parameter lists of rocksdb::NewTableBuilder, rocksdb::BuildTable, BlockBasedTableBuilder::Rep ctor, and BlockBasedTableBuilder ctor. Moved weird separate parameter `uint32_t column_family_id` of TableFactory::NewTableBuilder into TableBuilderOptions. Re-ordered parameters to TableBuilderOptions ctor, so that `uint64_t target_file_size` is not randomly placed between uint64_t timestamps (was easy to mix up). Replaced a couple of fields of BlockBasedTableBuilder::Rep with a FilterBuildingContext. The motivation for this change is making it easier to pass along more data into new fields in FilterBuildingContext (follow-up PR). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8240 Test Plan: ASAN make check Reviewed By: mrambacher Differential Revision: D28075891 Pulled By: pdillinger fbshipit-source-id: fddb3dbb8260a0e8bdcbb51b877ebabf9a690d4f |

4 years ago |

|

|

c20a7cd6c7 |

Apply `sample_for_compression` to all block-based tables (#8105)

Summary: Previously it only applied to block-based tables generated by flush. This restriction was undocumented and blocked a new use case. Now compression sampling applies to all block-based tables we generate when it is enabled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8105 Test Plan: new unit test Reviewed By: riversand963 Differential Revision: D27317275 Pulled By: ajkr fbshipit-source-id: cd9fcc5178d6515e8cb59c6facb5ac01893cb5b0 |

4 years ago |

|

|

d904233d2f |

Limit buffering for collecting samples for compression dictionary (#7970)

Summary: For dictionary compression, we need to collect some representative samples of the data to be compressed, which we use to either generate or train (when `CompressionOptions::zstd_max_train_bytes > 0`) a dictionary. Previously, the strategy was to buffer all the data blocks during flush, and up to the target file size during compaction. That strategy allowed us to randomly pick samples from as wide a range as possible that'd be guaranteed to land in a single output file. However, some users try to make huge files in memory-constrained environments, where this strategy can cause OOM. This PR introduces an option, `CompressionOptions::max_dict_buffer_bytes`, that limits how much data blocks are buffered before we switch to unbuffered mode (which means creating the per-SST dictionary, writing out the buffered data, and compressing/writing new blocks as soon as they are built). It is not strict as we currently buffer more than just data blocks -- also keys are buffered. But it does make a step towards giving users predictable memory usage. Related changes include: - Changed sampling for dictionary compression to select unique data blocks when there is limited availability of data blocks - Made use of `BlockBuilder::SwapAndReset()` to save an allocation+memcpy when buffering data blocks for building a dictionary - Changed `ParseBoolean()` to accept an input containing characters after the boolean. This is necessary since, with this PR, a value for `CompressionOptions::enabled` is no longer necessarily the final component in the `CompressionOptions` string. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7970 Test Plan: - updated `CompressionOptions` unit tests to verify limit is respected (to the extent expected in the current implementation) in various scenarios of flush/compaction to bottommost/non-bottommost level - looked at jemalloc heap profiles right before and after switching to unbuffered mode during flush/compaction. Verified memory usage in buffering is proportional to the limit set. Reviewed By: pdillinger Differential Revision: D26467994 Pulled By: ajkr fbshipit-source-id: 3da4ef9fba59974e4ef40e40c01611002c861465 |

4 years ago |

|

|

4a09d632c4 |

Remove Legacy and Custom FileWrapper classes from header files (#7851)

Summary: Removed the uses of the Legacy FileWrapper classes from the source code. The wrappers were creating an additional layer of indirection/wrapping, as the Env already has a FileSystem. Moved the Custom FileWrapper classes into the CustomEnv, as these classes are really for the private use the the CustomEnv class. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7851 Reviewed By: anand1976 Differential Revision: D26114816 Pulled By: mrambacher fbshipit-source-id: db32840e58d969d3a0fa6c25aaf13d6dcdc74150 |

4 years ago |

|

|

55e99688cc |

No elide constructors (#7798)

Summary: Added "no-elide-constructors to the ASSERT_STATUS_CHECK builds. This flag gives more errors/warnings for some of the Status checks where an inner class checks a Status and later returns it. In this case, without the elide check on, the returned status may not have been checked in the caller, thereby bypassing the checked code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7798 Reviewed By: jay-zhuang Differential Revision: D25680451 Pulled By: pdillinger fbshipit-source-id: c3f14ed9e2a13f0a8c54d839d5fb4d1fc1e93917 |

4 years ago |

|

|

9a690a74e1 |

In ParseInternalKey(), include corrupt key info in Status (#7515)

Summary: Fixes Issue https://github.com/facebook/rocksdb/issues/7497 When allow_data_in_errors db_options is set, log error key details in `ParseInternalKey()` Have fixed most of the calls. Have few TODOs still pending - because have to make more deeper changes to pass in the allow_data_in_errors flag. Will do those in a separate PR later. Tests: - make check - some of the existing tests that exercise the "internal key too small" condition are: dbformat_test, cuckoo_table_builder_test - some of the existing tests that exercise the corrupted key path are: corruption_test, merge_helper_test, compaction_iterator_test Example of new status returns: - Key too small - `Corrupted Key: Internal Key too small. Size=5` - Corrupt key with allow_data_in_errors option set to false: `Corrupted Key: '<redacted>' seq:3, type:3` - Corrupt key with allow_data_in_errors option set to true: `Corrupted Key: '61' seq:3, type:3` Pull Request resolved: https://github.com/facebook/rocksdb/pull/7515 Reviewed By: ajkr Differential Revision: D24240264 Pulled By: ramvadiv fbshipit-source-id: bc48f5d4475ac19d7713e16df37505b31aac42e7 |

4 years ago |

|

|

e04a50923d |

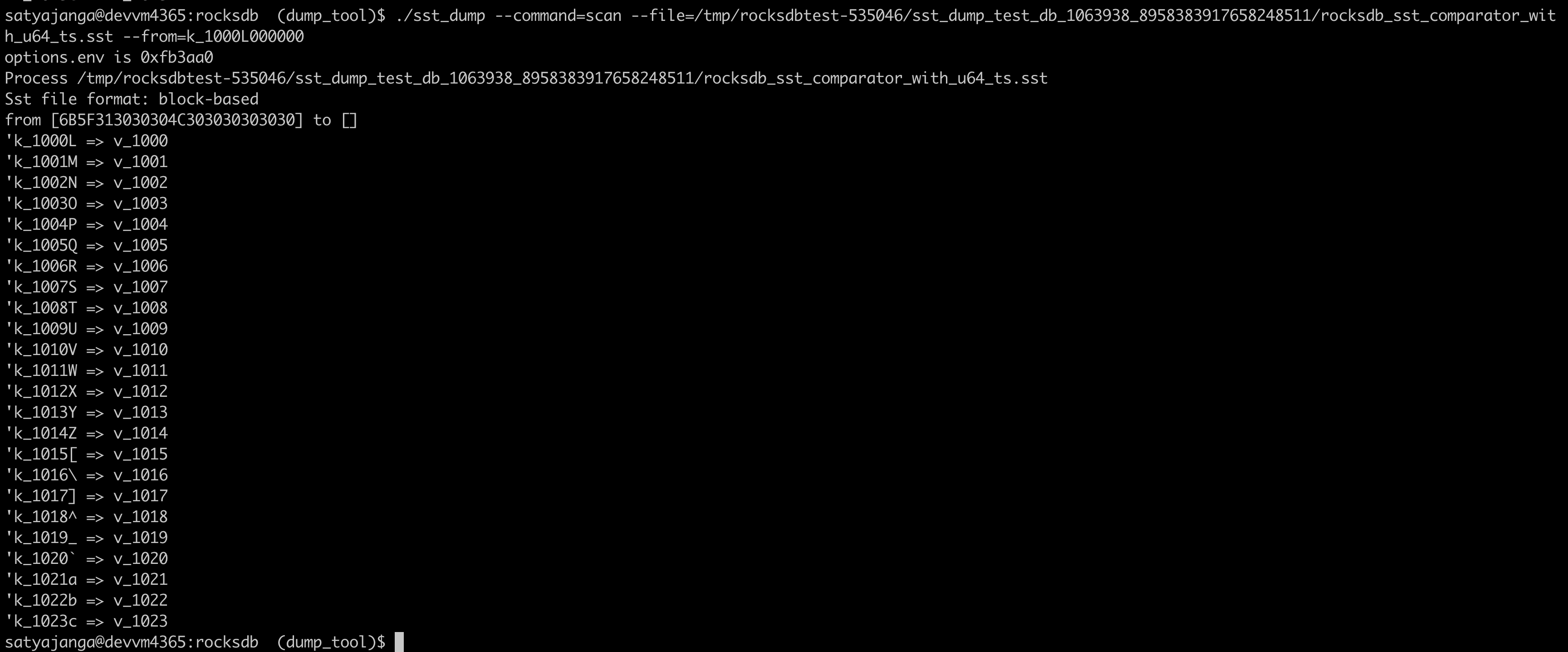

Change ParseInternalKey() to return Status instead of bool (#7457)

Summary: Fixes https://github.com/facebook/rocksdb/issues/7430 Change ParseInternalKey() to return Status instead of bool. db_bench (seekrandom) based before/after results with value size of 100 bytes and 16 bytes can be found at (tests ran on an udb server): https://www.dropbox.com/s/47bwamdy5ozngph/PIK_ret_Status_results.xlsx?dl=0  Pull Request resolved: https://github.com/facebook/rocksdb/pull/7457 Reviewed By: ajkr Differential Revision: D24002433 Pulled By: ramvadiv fbshipit-source-id: ac253ecf577a29044c47c3fe254a01e71404c44c |

4 years ago |

|

|

7d472accdc |

Bring the Configurable options together (#5753)

Summary: This PR merges the functionality of making the ColumnFamilyOptions, TableFactory, and DBOptions into Configurable into a single PR, resolving any merge conflicts Pull Request resolved: https://github.com/facebook/rocksdb/pull/5753 Reviewed By: ajkr Differential Revision: D23385030 Pulled By: zhichao-cao fbshipit-source-id: 8b977a7731556230b9b8c5a081b98e49ee4f160a |

4 years ago |

|

|

27aa443a15 |

Add sst_file_dumper status check (#7315)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/7315 Test Plan: `ASSERT_STATUS_CHECKED=1 make sst_dump_test && ./sst_dump_test` And manually run `./sst_dump --file=*.sst` before and after the change. Reviewed By: pdillinger Differential Revision: D23361669 Pulled By: jay-zhuang fbshipit-source-id: 5bf51a2a90ee35c8c679e5f604732ec2aef5949a |

4 years ago |

|

|

af54c4092a |

fix SstFileWriter with dictionary compression (#7323)

Summary: In block-based table builder, the cut-over from buffered to unbuffered mode involves sampling the buffered blocks and generating a dictionary. There was a bug where `SstFileWriter` passed zero as the `target_file_size` causing the cutover to happen immediately, so there were no samples available for generating the dictionary. This PR changes the meaning of `target_file_size == 0` to mean buffer the whole file before cutting over. It also adds dictionary compression support to `sst_dump --command=recompress` for easy evaluation. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7323 Reviewed By: cheng-chang Differential Revision: D23412158 Pulled By: ajkr fbshipit-source-id: 3b232050e70ef3c2ee85a4b5f6fadb139c569873 |

4 years ago |

|

|

b35a2f9146 |

Fix GetFileDbIdentities (#7104)

Summary: Although PR https://github.com/facebook/rocksdb/issues/7032 fixes the construction of the `SstFileDumper` in `GetFileDbIdentities` by setting a proper `Env` of the `Options` passed in the constructor, the file path was not corrected accordingly. This actually disables backup engine to use db session ids in the file names since the `db_session_id` is always empty. Now it is fixed by setting the correct path in the construction of `SstFileDumper`. Furthermore, to preserve the Direct IO property that backup engine already has, parameter `EnvOptions` is added to `GetFileDbIdentities` and `SstFileDumper`. The `BackupUsingDirectIO` test is updated accordingly. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7104 Test Plan: backupable_db_test and some manual tests. Reviewed By: ajkr Differential Revision: D22443245 Pulled By: gg814 fbshipit-source-id: 056a9bb8b82947c5e73d7c3fbb62bfe23af5e562 |

4 years ago |

|

|

9a5886bd8c |

Extend Get/MultiGet deadline support to table open (#6982)

Summary: Current implementation of the ```read_options.deadline``` option only checks the deadline for random file reads during point lookups. This PR extends the checks to file opens, prefetches and preloads as part of table open. The main changes are in the ```BlockBasedTable```, partitioned index and filter readers, and ```TableCache``` to take ReadOptions as an additional parameter. In ```BlockBasedTable::Open```, in order to retain existing behavior w.r.t checksum verification and block cache usage, we filter out most of the options in ```ReadOptions``` except ```deadline```. However, having the ```ReadOptions``` gives us more flexibility to honor other options like verify_checksums, fill_cache etc. in the future. Additional changes in callsites due to function signature changes in ```NewTableReader()``` and ```FilePrefetchBuffer```. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6982 Test Plan: Add new unit tests in db_basic_test Reviewed By: riversand963 Differential Revision: D22219515 Pulled By: anand1976 fbshipit-source-id: 8a3b92f4a889808013838603aa3ca35229cd501b |

4 years ago |

|

|

be41c61f22 |

Add a new option for BackupEngine to store table files under shared_checksum using DB session id in the backup filenames (#6997)

Summary: `BackupableDBOptions::new_naming_for_backup_files` is added. This option is false by default. When it is true, backup table filenames under directory shared_checksum are of the form `<file_number>_<crc32c>_<db_session_id>.sst`. Note that when this option is true, it comes into effect only when both `share_files_with_checksum` and `share_table_files` are true. Three new test cases are added. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6997 Test Plan: Passed make check. Reviewed By: ajkr Differential Revision: D22098895 Pulled By: gg814 fbshipit-source-id: a1d9145e7fe562d71cde7ac995e17cb24fd42e76 |

4 years ago |