Summary:

According to https://github.com/facebook/rocksdb/issues/5907, each filter partition "should include the bloom of the prefix of the last

key in the previous partition" so that SeekForPrev() in prefix mode can return the correct result.

The last key in the previous partition does not necessarily have the same prefix

as the first key in the current partition. Regardless of the first key in the current partition, the

prefix of the last key in the previous partition should be added. The existing code, however,

does not follow this. Furthermore, there is another issue: when finishing the current filter partition,

`FullFilterBlockBuilder::AddPrefix()` is called for the first key in the next filter partition, which effectively

overwrites `last_prefix_str_` prematurely. Consequently, when the filter block builder proceeds

to the next partition, `last_prefix_str_` will be the prefix of its first key, leaving no way of adding

the bloom of the prefix of the last key of the previous partition.

Example: the prefix extractor is FixedLength.2.

```

[ filter part 1 ] [ filter part 2 ]

abc d

```

When SeekForPrev("abcd"), checking the filter partition will land on filter part 2 because "abcd" > "abc"

but smaller than "d".

If the filter in filter part 2 happens to return false for the test for "ab", then SeekForPrev("abcd") will build

an incorrect iterator tree in non-total-order mode.
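The scenario above can be sketched with a toy model. This is not the RocksDB implementation: `ToyPartition`, `Locate`, `PrefixMayMatch` and `BuildExample` are hypothetical names, and an exact `std::set` stands in for the Bloom filter so that a miss is always a true miss.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Hypothetical stand-in for one filter partition.
struct ToyPartition {
  std::string last_key;            // largest key covered by this partition
  std::set<std::string> prefixes;  // prefixes added to this partition's filter
};

// FixedLength.2 prefix extractor from the example.
std::string Prefix(const std::string& key) { return key.substr(0, 2); }

// Pick the first partition whose last key is >= target.
const ToyPartition& Locate(const std::vector<ToyPartition>& parts,
                           const std::string& target) {
  assert(!parts.empty());
  for (const auto& p : parts) {
    if (target <= p.last_key) return p;
  }
  return parts.back();
}

bool PrefixMayMatch(const std::vector<ToyPartition>& parts,
                    const std::string& target) {
  return Locate(parts, target).prefixes.count(Prefix(target)) > 0;
}

// Builds the two partitions from the example. `carry_over` controls whether
// part 2 also receives the prefix of the last key of part 1.
std::vector<ToyPartition> BuildExample(bool carry_over) {
  ToyPartition part1{"abc", {Prefix("abc")}};
  // "d" is shorter than 2 bytes, so it contributes no prefix in this model.
  ToyPartition part2{"d", {}};
  if (carry_over) part2.prefixes.insert(Prefix(part1.last_key));
  return {part1, part2};
}
```

In this model, `PrefixMayMatch(BuildExample(false), "abcd")` lands on part 2 and misses "ab", while `BuildExample(true)` finds it because the prefix of "abc" was carried over.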

Also fix a unit test which starts to fail following this PR. `InDomain` should not fail due to assertion

error when checking on an arbitrary key.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8137

Test Plan:

```

make check

```

Without this fix, the following command will fail pretty soon.

```

./db_stress --acquire_snapshot_one_in=10000 --avoid_flush_during_recovery=0 \

--avoid_unnecessary_blocking_io=0 --backup_max_size=104857600 --backup_one_in=0 \

--batch_protection_bytes_per_key=0 --block_size=16384 --bloom_bits=17 \

--bottommost_compression_type=disable --cache_index_and_filter_blocks=1 --cache_size=1048576 \

--checkpoint_one_in=0 --checksum_type=kxxHash64 --clear_column_family_one_in=0 \

--compact_files_one_in=1000000 --compact_range_one_in=1000000 --compaction_ttl=0 \

--compression_max_dict_buffer_bytes=0 --compression_max_dict_bytes=0 \

--compression_parallel_threads=1 --compression_type=zstd --compression_zstd_max_train_bytes=0 \

--continuous_verification_interval=0 --db=/dev/shm/rocksdb/rocksdb_crashtest_whitebox \

--db_write_buffer_size=8388608 --delpercent=5 --delrangepercent=0 --destroy_db_initially=0 --enable_blob_files=0 \

--enable_compaction_filter=0 --enable_pipelined_write=1 --file_checksum_impl=big --flush_one_in=1000000 \

--format_version=5 --get_current_wal_file_one_in=0 --get_live_files_one_in=1000000 --get_property_one_in=1000000 \

--get_sorted_wal_files_one_in=0 --index_block_restart_interval=4 --index_type=2 --ingest_external_file_one_in=0 \

--iterpercent=10 --key_len_percent_dist=1,30,69 --level_compaction_dynamic_level_bytes=True \

--log2_keys_per_lock=10 --long_running_snapshots=1 --mark_for_compaction_one_file_in=0 \

--max_background_compactions=20 --max_bytes_for_level_base=10485760 --max_key=100000000 --max_key_len=3 \

--max_manifest_file_size=1073741824 --max_write_batch_group_size_bytes=16777216 --max_write_buffer_number=3 \

--max_write_buffer_size_to_maintain=8388608 --memtablerep=skip_list --mmap_read=1 --mock_direct_io=False \

--nooverwritepercent=0 --open_files=500000 --ops_per_thread=20000000 --optimize_filters_for_memory=0 --paranoid_file_checks=1 --partition_filters=1 --partition_pinning=0 --pause_background_one_in=1000000 \

--periodic_compaction_seconds=0 --prefixpercent=5 --progress_reports=0 --read_fault_one_in=0 --read_only=0 \

--readpercent=45 --recycle_log_file_num=0 --reopen=20 --secondary_catch_up_one_in=0 \

--snapshot_hold_ops=100000 --sst_file_manager_bytes_per_sec=104857600 \

--sst_file_manager_bytes_per_truncate=0 --subcompactions=2 --sync=0 --sync_fault_injection=False \

--target_file_size_base=2097152 --target_file_size_multiplier=2 --test_batches_snapshots=0 --test_cf_consistency=0 \

--top_level_index_pinning=0 --unpartitioned_pinning=1 --use_blob_db=0 --use_block_based_filter=0 \

--use_direct_io_for_flush_and_compaction=0 --use_direct_reads=0 --use_full_merge_v1=0 --use_merge=0 \

--use_multiget=0 --use_ribbon_filter=0 --use_txn=0 --user_timestamp_size=8 --verify_checksum=1 \

--verify_checksum_one_in=1000000 --verify_db_one_in=100000 --write_buffer_size=4194304 \

--write_dbid_to_manifest=1 --writepercent=35

```

Reviewed By: pdillinger

Differential Revision: D27553054

Pulled By: riversand963

fbshipit-source-id: 60e391e4a2d8d98a9a3172ec5d6176b90ec3de98

Summary:

Propagating failures from `InvalidatePageCache` would change the API contract, so we remove the status check for `InvalidatePageCache` in `SstFileWriter::Add()`, `SstFileWriter::Finish` and `Rep::DeleteRange`.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8156

Reviewed By: riversand963

Differential Revision: D27597012

Pulled By: ajkr

fbshipit-source-id: 2872051695d50cc47ed0f2848dc582464c00076f

Summary:

Fixes https://github.com/facebook/rocksdb/issues/6548.

If we do not reset the pinnable slice before calling get, we will see the following assertion failure

while running the test with multiple column families.

```

db_bench: ./include/rocksdb/slice.h:168: void rocksdb::PinnableSlice::PinSlice(const rocksdb::Slice&, rocksdb::Cleanable*): Assertion `!pinned_' failed.

```

This happens in `BlockBasedTable::Get()`.
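A minimal sketch of the failure mode and the fix, using a hypothetical `ToyPinnableSlice` that only mimics the `pinned_` bookkeeping of the real class. `ToyGet` and `ReadTwice` are illustrative names and not part of db_bench.

```cpp
#include <cassert>
#include <string>

// Hypothetical, simplified stand-in for rocksdb::PinnableSlice.
class ToyPinnableSlice {
 public:
  void PinSlice(const std::string& value) {
    assert(!pinned_);  // mirrors the assertion quoted above
    data_ = value;
    pinned_ = true;
  }
  void Reset() {
    data_.clear();
    pinned_ = false;
  }
  const std::string& data() const { return data_; }

 private:
  std::string data_;
  bool pinned_ = false;
};

// Hypothetical read path that pins its result into `out`.
void ToyGet(const std::string& stored_value, ToyPinnableSlice* out) {
  out->PinSlice(stored_value);
}

// Reuses one slice across two reads, resetting it before the second call.
std::string ReadTwice(const std::string& first, const std::string& second) {
  ToyPinnableSlice slice;
  ToyGet(first, &slice);
  slice.Reset();  // without this, the second ToyGet() trips the assertion
  ToyGet(second, &slice);
  return slice.data();
}
```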

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8154

Test Plan:

./db_bench --benchmarks=fillseq -num_column_families=3

./db_bench --benchmarks=readrandom -use_existing_db=1 -num_column_families=3

Reviewed By: ajkr

Differential Revision: D27587589

Pulled By: riversand963

fbshipit-source-id: 7379e7649ba40f046d6a4014c9ad629cb3f9a786

Summary:

The previous version of ZStd doesn't build correctly with Make 3.82. Updating it resolves the issue.

jay-zhuang This also needs to be cherry-picked to:

1. 6.17.fb

2. 6.18.fb

3. 6.19.fb

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8155

Reviewed By: riversand963

Differential Revision: D27596460

Pulled By: ajkr

fbshipit-source-id: ac8492245e6273f54efcc1587346a797a91c9441

Summary:

Before corrupting a file in the DB and expecting corruption to

be detected, open DB read-only to ensure file is not made obsolete by

compaction. Also, to avoid obsolete files not yet deleted, only select

live files to corrupt.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8151

Test Plan: watch CI

Reviewed By: akankshamahajan15

Differential Revision: D27568849

Pulled By: pdillinger

fbshipit-source-id: 39a69a2eafde0482b20a197949d24abe21952f27

Summary:

New tests should by default be expected to be parallelizable

and passing with ASSERT_STATUS_CHECKED. Thus, I'm changing those two

lists to exclusions rather than inclusions.

For the set of exclusions, I only listed things that currently failed

for me when attempting not to exclude, or had some other documented

reason. This marks many more tests as "parallel," which will potentially

cause some failures from self-interference, but we can address those as

they are discovered.

Also changed CircleCI ASC test to be parallelized; the easy way to do

that is to exclude building tests that don't pass ASC, which is now a

small set.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8146

Test Plan: Watch CI, etc.

Reviewed By: riversand963

Differential Revision: D27542782

Pulled By: pdillinger

fbshipit-source-id: bdd74bcd912a963ee33f3fc0d2cad2567dc7740f

Summary:

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8143

The latter assume the location of the compile root, which can break

if the build root changes. Switch to the slightly more intelligent

`include_paths`, which should provide the same functionality, but do

so independently of the include root.

Reviewed By: riversand963

Differential Revision: D27535869

fbshipit-source-id: 0129e47c0ce23e08528c9139114a591c14866fa8

Summary:

DBWALTestWithParam relies on `SstFileManager` to have the expected behavior. However, if this test shares

db directories with other DBSSTTest tests, the SstFileManager may see non-empty data and behave

differently from what the test expects, introducing flakiness.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8147

Test Plan: make check

Reviewed By: jay-zhuang

Differential Revision: D27553362

Pulled By: riversand963

fbshipit-source-id: a2d86343e8e2220bc553b6695ce87dd21a97ddec

Summary:

With thread/process-specific dirs. (Errors seen in FB infra.)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8145

Test Plan: see in FB infra tests

Reviewed By: riversand963

Differential Revision: D27542355

Pulled By: pdillinger

fbshipit-source-id: b3c8e66f91a6a6b3a775f6fc0c3cf71e63c29ade

Summary:

Add request_id in IODebugContext which will be populated by

underlying FileSystem for IOTracing purposes. Update IOTracer to trace

request_id in the tracing records. Provide the API

IODebugContext::SetRequestId, which sets the request_id and enables

tracing for request_id. The API hides the implementation, and the underlying

file system needs to call this API directly.

Update the DB::StartIOTrace API and remove the redundant Env* from the

arguments, as it's not used and DB already has an Env that is passed down to

IOTracer.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8045

Test Plan: Update unit test.

Differential Revision: D26899871

Pulled By: akankshamahajan15

fbshipit-source-id: 56adef52ee5af0fb3060b607c3af1ec01635fa2b

Summary:

To propagate the IOStatus from file reads to RocksDB read logic, some of the existing status needs to be replaced by IOStatus.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8130

Test Plan: make check

Reviewed By: anand1976

Differential Revision: D27440188

Pulled By: zhichao-cao

fbshipit-source-id: bbe7622c2106fe4e46871d60f7c26944e5030d78

Summary:

Return early in case there are zero data blocks when

`BlockBasedTableBuilder::EnterUnbuffered()` is called. This crash can

only be triggered by applying dictionary compression to SST files that

contain only range tombstones. It cannot be triggered by a low buffer

limit alone since we only consider entering unbuffered mode after

buffering a data block causing the limit to be breached, or `Finish()`ing the file. It also cannot

be triggered by a totally empty file because those go through

`Abandon()` rather than `Finish()` so unbuffered mode is never entered.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8141

Test Plan: added a unit test that repro'd the "Floating point exception"

Reviewed By: riversand963

Differential Revision: D27495640

Pulled By: ajkr

fbshipit-source-id: a463cfba476919dc5c5c380800a75a86c31ffa23

Summary:

Added `TableProperties::{fast,slow}_compression_estimated_data_size`.

These properties are present in block-based tables when

`ColumnFamilyOptions::sample_for_compression > 0` and the necessary

compression library is supported when the file is generated. They

contain estimates of what `TableProperties::data_size` would be if the

"fast"/"slow" compression library had been used instead. One

limitation is we do not record exactly which "fast" (LZ4 or Snappy)

or "slow" (ZSTD or Zlib) compression library produced the result.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8139

Test Plan:

- new unit test

- ran `db_bench` with `sample_for_compression=1`; verified the `data_size` property matches the `{slow,fast}_compression_estimated_data_size` when the same compression type is used for the output file compression and the sampled compression

Reviewed By: riversand963

Differential Revision: D27454338

Pulled By: ajkr

fbshipit-source-id: 9529293de93ddac7f03b2e149d746e9f634abac4

Summary:

Which should return 2 long instead of an array.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8098

Reviewed By: mrambacher

Differential Revision: D27308741

Pulled By: jay-zhuang

fbshipit-source-id: 44beea2bd28cf6779b048bebc98f2426fe95e25c

Summary:

At least under MacOS, some things were excluded from the build (like Snappy) because the compilation flags were not passed in correctly. This PR does a few things:

- Passes the EXTRA_CXX/LDFLAGS into build_detect_platform. This means that if some tool (like TBB for example) is not installed in a standard place, it could still be detected by build_detect_platform. In this case, the developer would invoke: "EXTRA_CXXFLAGS=<path to TBB include> EXTRA_LDFLAGS=<path to TBB library> make", and the build script would find the tools in the extra location.

- Changes the compilation tests to use PLATFORM_CXXFLAGS. This change causes the EXTRA_FLAGS passed in to the script to be included in the compilation check. Additionally, flags set by the script itself (like --std=c++11) will be used during the checks.

Validated that the make_platform.mk file generated on Linux does not change with this change. On my MacOS machine, the SNAPPY libraries are now available (they were not before as they required --std=c++11 to build).

I also verified that I can build against TBB installed on my Mac by passing in the EXTRA CXX and LD FLAGS to the location in which TBB is installed.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8111

Reviewed By: jay-zhuang

Differential Revision: D27353516

Pulled By: mrambacher

fbshipit-source-id: b6b378c96dbf678bab1479556dcbcb49c47e807d

Summary:

Fix an error_handler_fs_test failure related to statistics: the test fails because, with multiple threads running, the resume behavior is different.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8136

Test Plan: make check

Reviewed By: akankshamahajan15

Differential Revision: D27448828

Pulled By: zhichao-cao

fbshipit-source-id: b94255c45e9e66e93334b5ca2e4e1bfcba23fc20

Summary:

In DBImpl::CloseHelper, we wait for bg_compaction_scheduled_

and bg_flush_scheduled_ to drop to 0. Unschedule is called beforehand

to cancel any unscheduled flushes/compactions. It is assumed that

anything in the high-pri pool is a flush, and anything in the low-pri

pool is a compaction. This assumption, however, is broken when

the high-pri pool is full.

As a result, bg_compaction_scheduled_ can go < 0 and bg_flush_scheduled_

will remain > 0, and the DB can hang.

The fix is, we decrement the `bg_{flush,compaction,bottom_compaction}_scheduled_`

inside the `Unschedule{Flush,Compaction,BottomCompaction}Callback()`s. DB

`mutex_` will make the counts atomic in `Unschedule`.
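The idea can be sketched with a toy model. `ToyDB`, `ToyPool`, `ScheduleFlush` and `ScheduleCompaction` are hypothetical and much simpler than the real `DBImpl` and thread pool; the point is only that each job carries its own unschedule callback, so the right counter is decremented no matter which pool the job sits in.

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified stand-in for DBImpl's counters.
struct ToyDB {
  std::mutex mutex_;
  int bg_flush_scheduled_ = 0;
  int bg_compaction_scheduled_ = 0;
};

class ToyPool {
 public:
  // Queues a job together with the callback to run if it gets cancelled.
  void Schedule(std::function<void()> unschedule_cb) {
    pending_.push_back(std::move(unschedule_cb));
  }
  // Cancels every queued job and lets each job fix up its own counter.
  void UnscheduleAll() {
    for (auto& cb : pending_) cb();
    pending_.clear();
  }

 private:
  std::vector<std::function<void()>> pending_;
};

void ScheduleFlush(ToyDB* db, ToyPool* pool) {
  {
    std::lock_guard<std::mutex> lock(db->mutex_);
    ++db->bg_flush_scheduled_;
  }
  pool->Schedule([db] {
    std::lock_guard<std::mutex> lock(db->mutex_);
    assert(db->bg_flush_scheduled_ > 0);
    --db->bg_flush_scheduled_;
  });
}

void ScheduleCompaction(ToyDB* db, ToyPool* pool) {
  {
    std::lock_guard<std::mutex> lock(db->mutex_);
    ++db->bg_compaction_scheduled_;
  }
  pool->Schedule([db] {
    std::lock_guard<std::mutex> lock(db->mutex_);
    assert(db->bg_compaction_scheduled_ > 0);
    --db->bg_compaction_scheduled_;
  });
}

// A flush lands in the low-pri pool next to a compaction. Returns true if
// both counters drop to 0 after unscheduling.
bool CountersReachZeroAfterUnschedule() {
  ToyDB db;
  ToyPool low_pool;
  ScheduleFlush(&db, &low_pool);
  ScheduleCompaction(&db, &low_pool);
  low_pool.UnscheduleAll();
  std::lock_guard<std::mutex> lock(db.mutex_);
  return db.bg_flush_scheduled_ == 0 && db.bg_compaction_scheduled_ == 0;
}
```

Had the caller instead subtracted the number of cancelled low-pri jobs from `bg_compaction_scheduled_`, the compaction count would end at -1 and the flush count at 1 in this model.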

Related discussion: https://github.com/facebook/rocksdb/issues/7928

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8125

Test Plan: Added new test case which hangs without the fix.

Reviewed By: jay-zhuang

Differential Revision: D27390043

Pulled By: ajkr

fbshipit-source-id: 78a367fba9a59ac5607ad24bd1c46dc16d5ec110

Summary:

thread_id is only unique within a process. If we run the same test set with multiple processes, it could cause a db path collision between 2 runs. The error message looks like:

```

...

IO error: While lock file: /tmp/rocksdbtest-501//deletefile_test_8093137327721791717/LOCK: Resource temporarily unavailable

...

```

This can likely be reproduced by:

```

gtest-parallel ./deletefile_test --gtest_filter=DeleteFileTest.BackgroundPurgeCFDropTest -r 1000 -w 1000

```
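One way to avoid such collisions is to put the process id into the path next to the thread id. The helper below is a hypothetical sketch (`PerProcessTestDir` is not a RocksDB function) and assumes a POSIX system for `getpid()`.

```cpp
#include <cassert>
#include <functional>
#include <sstream>
#include <string>
#include <thread>
#include <unistd.h>  // getpid(), POSIX only

// Hypothetical helper: builds a directory name that is unique per
// (process, thread) pair rather than per thread only.
std::string PerProcessTestDir(const std::string& base,
                              const std::string& name) {
  assert(!name.empty());
  std::ostringstream oss;
  oss << base << "/" << name << "_" << getpid() << "_"
      << std::hash<std::thread::id>{}(std::this_thread::get_id());
  return oss.str();
}
```

Two processes running the same test then get different directories even if their thread ids happen to coincide.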

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8124

Reviewed By: ajkr

Differential Revision: D27435195

Pulled By: jay-zhuang

fbshipit-source-id: 850fc72cdb660edf93be9a1ca9327008c16dd720

Summary:

GitHub has detected that a package defined in the

docs/Gemfile.lock file of the facebook/rocksdb repository contains a

security vulnerability.

This patch fixes it by upgrading the version of kramdown to 2.3.1.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8131

Reviewed By: jay-zhuang

Differential Revision: D27418776

Pulled By: akankshamahajan15

fbshipit-source-id: 0a4b0b85922b9958afcbc44560584701b1c6c82d

Summary:

BackupEngine previously had unclear but strict concurrency

requirements that the API user must follow for safe use. Now we make

that clear, by separating operations into "Read," "Append," and "Write"

operations, and specifying which combinations are safe across threads on

the same BackupEngine object (previously none; now all, using a

read-write lock), and which are safe across different BackupEngine

instances open on the same backup_dir.

The changes to backupable_db.h should be backward compatible. They are

mostly about eliminating copies of what should be the same function;

(unsurprisingly) useful documentation comments were often placed on

only one of the two copies. With the re-organization, we are also

grouping different categories of operations. In the future we might add

BackupEngineReadAppendOnly, but that didn't seem necessary.

To mark API Read operations 'const', I had to mark some implementation

functions 'const' and some fields mutable.

Functional changes:

* Added RWMutex locking around public API functions to implement thread

safety on a single object. To avoid future bugs, this is another

internal class layered on top (removing many "override" in

BackupEngineImpl). It would be possible to allow more concurrency

between operations, rather than mutual exclusion, but IMHO not worth the

work.

* Fixed a race between Open() (Initialize()) and CreateNewBackup() for

different objects on the same backup_dir, where Initialize() could

delete the temporary meta file created during CreateNewBackup().

(This was found by the new test.)
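The layering described in the first bullet can be sketched as follows. `ToyBackupImpl` and `ToyBackupThreadSafe` are hypothetical names, and `std::shared_timed_mutex` is used here as a stand-in for RocksDB's own RWMutex. Read operations take a shared lock and are `const`, which is why the mutex is `mutable`.

```cpp
#include <cassert>
#include <mutex>
#include <shared_mutex>
#include <string>
#include <vector>

// Hypothetical unsynchronized implementation class.
class ToyBackupImpl {
 public:
  void CreateNewBackup(const std::string& name) {
    assert(!name.empty());
    backups_.push_back(name);
  }
  std::vector<std::string> GetBackupInfo() const { return backups_; }

 private:
  std::vector<std::string> backups_;
};

// Thread-safe layer on top: every public call takes the lock, then forwards.
class ToyBackupThreadSafe {
 public:
  // "Read" operation: shared lock, many readers may run concurrently.
  std::vector<std::string> GetBackupInfo() const {
    std::shared_lock<std::shared_timed_mutex> lock(mutex_);
    return impl_.GetBackupInfo();
  }
  // Modifying operation: exclusive lock.
  void CreateNewBackup(const std::string& name) {
    std::unique_lock<std::shared_timed_mutex> lock(mutex_);
    impl_.CreateNewBackup(name);
  }

 private:
  mutable std::shared_timed_mutex mutex_;  // mutable so const reads can lock
  ToyBackupImpl impl_;
};
```

Keeping the locking in a separate wrapper means the implementation class cannot accidentally call a locked method on itself and deadlock.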

Also cleaned up a couple of "status checked" TODOs, and improved a

checksum mismatch error message to include involved files.

Potential follow-up work:

* CreateNewBackup has an API wart because it doesn't tell you the

BackupID it just created, which makes it of limited use in a multithreaded

setting.

* We could also consider a Refresh() function to catch up to

changes made from another BackupEngine object to the same dir.

* Use a lock file to prevent multiple writer BackupEngines, but this

won't work on remote filesystems not supporting lock files.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8115

Test Plan:

new mini-stress test in backup unit tests, run with gcc,

clang, ASC, TSAN, and UBSAN, 100 iterations each.

Reviewed By: ajkr

Differential Revision: D27347589

Pulled By: pdillinger

fbshipit-source-id: 28d82ed2ac672e44085a739ddb19d297dad14b15

Summary:

We have observed rocksdb databases creating info log files with world-writeable permissions.

This happens because stdio streams opened with fopen use mode 0666. While most systems normally have a umask of 022, on some occasions (for instance, while running daemons) the application may run with a less restrictive umask. As a result, when opening the DB, the LOG file is created with group- or world-writeable permissions:

```

$ ls -lh db/

total 6.4M

-rw-r--r-- 1 ibarba users 115 Mar 24 17:41 000004.log

-rw-r--r-- 1 ibarba users 16 Mar 24 17:41 CURRENT

-rw-r--r-- 1 ibarba users 37 Mar 24 17:41 IDENTITY

-rw-r--r-- 1 ibarba users 0 Mar 24 17:41 LOCK

-rw-rw-r-- 1 ibarba users 114K Mar 24 17:41 LOG

-rw-r--r-- 1 ibarba users 514 Mar 24 17:41 MANIFEST-000003

-rw-r--r-- 1 ibarba users 31K Mar 24 17:41 OPTIONS-000018

-rw-r--r-- 1 ibarba users 31K Mar 24 17:41 OPTIONS-000020

```

This diff replaces the fopen call with a regular open() call that restricts the mode, and then uses fdopen to associate a stdio stream with that file descriptor. The following files are then created:

```

-rw-r--r-- 1 ibarba users 58 Mar 24 18:16 000004.log

-rw-r--r-- 1 ibarba users 16 Mar 24 18:16 CURRENT

-rw-r--r-- 1 ibarba users 37 Mar 24 18:16 IDENTITY

-rw-r--r-- 1 ibarba users 0 Mar 24 18:16 LOCK

-rw-r--r-- 1 ibarba users 111K Mar 24 18:16 LOG

-rw-r--r-- 1 ibarba users 514 Mar 24 18:16 MANIFEST-000003

-rw-r--r-- 1 ibarba users 31K Mar 24 18:16 OPTIONS-000018

-rw-r--r-- 1 ibarba users 31K Mar 24 18:16 OPTIONS-000020

```

The LOG file now has the correct permissions.
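A minimal sketch of the open() plus fdopen() pattern, assuming a POSIX system. `OpenLogRestricted` is a hypothetical helper and the fixed mode 0644 is an assumption for illustration; the actual diff may choose the mode differently.

```cpp
#include <cassert>
#include <cstdio>
#include <fcntl.h>
#include <string>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

// Creates (or truncates) `path` with at most rw-r--r-- permissions and
// returns a stdio stream for it, or nullptr on failure.
FILE* OpenLogRestricted(const std::string& path) {
  assert(!path.empty());
  // Unlike fopen(), open() lets us pass the creation mode explicitly.
  // The umask can still remove bits from 0644, but can never add any.
  int fd = open(path.c_str(), O_WRONLY | O_CREAT | O_TRUNC, 0644);
  if (fd < 0) return nullptr;
  FILE* f = fdopen(fd, "w");
  if (f == nullptr) close(fd);  // do not leak the descriptor
  return f;
}
```

Even with a umask of 0, a file created through this helper is not group- or world-writeable.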

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8106

Reviewed By: akankshamahajan15

Differential Revision: D27415377

Pulled By: mrambacher

fbshipit-source-id: 97ac6c215700a7ea306f4a1fdf9fcf64a3cbb202

Summary:

If the platform is ppc64 and the libc is not GNU libc, then we exclude the range_tree from compilation.

See https://jira.percona.com/browse/PS-7559

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8070

Reviewed By: jay-zhuang

Differential Revision: D27246004

Pulled By: mrambacher

fbshipit-source-id: 59d8433242ce7ce608988341becb4f83312445f5

Summary:

The check in db_bench for table_cache_numshardbits was 0 < bits <= 20, whereas the check in LRUCache was 0 < bits < 20. Changed the two values to match to avoid a crash in db_bench on a null cache.

Fixes https://github.com/facebook/rocksdb/issues/7393

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8110

Reviewed By: zhichao-cao

Differential Revision: D27353522

Pulled By: mrambacher

fbshipit-source-id: a414bd23b5bde1f071146b34cfca5e35c02de869

Summary:

Ran a spell check over the comments in the include/rocksdb directory and fixed any misspellings.

There are still some variable names that are spelled incorrectly (like SizeApproximationOptions::include_memtabtles, SstFileMetaData::oldest_ancester_time) that were not fixed, as those would break compilation.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8120

Reviewed By: zhichao-cao

Differential Revision: D27366034

Pulled By: mrambacher

fbshipit-source-id: 6a3f3674890bb6acc751e9c5887a8fbb6adca5df

Summary:

Currently, partitioned filter does not support user-defined timestamp. Disable it for now in ts stress test so that

the contrun jobs can proceed.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8127

Test Plan: make crash_test_with_ts

Reviewed By: ajkr

Differential Revision: D27388488

Pulled By: riversand963

fbshipit-source-id: 5ccff18121cb537bd82f2ac072cd25efb625c666

Summary:

Because build_version.cc is dependent on the library objects (to force a re-generation of it), the library objects would be built in order to satisfy this rule. Because there is a build_version.d file, it would need to be generated and included.

Change the ALL_DEPS/FILES to not include build_version.cc (meaning no .d file for it, which is okay since it is generated). Also changed the rule on whether or not to generate DEP files to skip tags.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8097

Reviewed By: ajkr

Differential Revision: D27299815

Pulled By: mrambacher

fbshipit-source-id: 1efbe8a56d062f57ae13b6c2944ad3faf775087e

Summary:

Currently, we only truncate the latest alive WAL files when the DB is opened. If the latest WAL file is empty or was flushed during Open, it's not truncated since the file will be deleted later on in the Open path. However, before deletion, a new WAL file is created, and if the process crash loops between the new WAL file creation and deletion of the old WAL file, the preallocated space will keep accumulating and eventually use up all disk space. To prevent this, always truncate the latest WAL file, even if it's empty or the data was flushed.

Tests:

Add unit tests to db_wal_test

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8122

Reviewed By: riversand963

Differential Revision: D27366132

Pulled By: anand1976

fbshipit-source-id: f923cc03ef033ccb32b140d36c6a63a8152f0e8e

Summary:

For some branches, I see an error during analyze on this code. I do not know why it is not persistent, but this should address the error:

Logic error | Result of operation is garbage or undefined | trace_replay.cc | Replay | line 436 | path length 30

DecodeCFAndKey(trace.payload, &get_payload.cf_id, &get_payload.get_key);

--

433 | } else {

434 | TracerHelper::DecodeGetPayload(&trace, &get_payload);

| 25 ← Calling 'TracerHelper::DecodeGetPayload' →

| 29 ← Returning from 'TracerHelper::DecodeGetPayload' →

435 | }

436 | if (get_payload.cf_id > 0 &&

| 30 ← The left operand of '>' is a garbage value

437 | cf_map_.find(get_payload.cf_id) == cf_map_.end()) {

438 | return Status::Corruption("Invalid Column Family ID.");

439 | }

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8121

Reviewed By: zhichao-cao

Differential Revision: D27366022

Pulled By: mrambacher

fbshipit-source-id: 309c05dbab08cd7ab7f15389e8456f09196f37f6

Summary:

The snapshot structure returned by rocksdb_transaction_get_snapshot is

supposed to be freed by calling rocksdb_free(), so allocate using malloc

rather than new. Fixes https://github.com/facebook/rocksdb/issues/6112

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8114

Reviewed By: akankshamahajan15

Differential Revision: D27362923

Pulled By: anand1976

fbshipit-source-id: e93a8b1ffe26dafbe22529907f72b796ae971214

Summary:

The patch adds a resource management/RAII class called `ThreadGuard`,

which can be used to ensure that the managed thread is joined when the

`ThreadGuard` is destroyed, regardless of whether it is due to the

object going out of scope, an early return, an exception etc. This is

important because if an `std::thread` object is destroyed without having

been joined (or detached) first, the process is aborted (via

`std::terminate`).

For now, `ThreadGuard` is only used in the test case

`ExternalSSTFileTest.PickedLevelBug`; however, it could come in handy

elsewhere in the codebase as well (both in test code and "real" code).

Case in point: in the `PickedLevelBug` test case, with the earlier code we

could end up in the above situation when the following assertion (which is

before the threads are joined) is triggered:

```

ASSERT_FALSE(bg_compact_started.load());

```
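A minimal sketch of such a guard, written independently of the patch (`ToyThreadGuard` and `RunWorker` are illustrative names, and the real `ThreadGuard` interface may differ). The early return stands in for a failed `ASSERT_*` macro leaving the test body.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <utility>

// RAII wrapper that joins its thread on destruction.
class ToyThreadGuard {
 public:
  explicit ToyThreadGuard(std::thread&& t) : thread_(std::move(t)) {}
  ~ToyThreadGuard() {
    if (thread_.joinable()) thread_.join();
  }
  ToyThreadGuard(const ToyThreadGuard&) = delete;
  ToyThreadGuard& operator=(const ToyThreadGuard&) = delete;

 private:
  std::thread thread_;
};

// Starts a worker and optionally leaves the scope early. In both cases the
// guard joins the worker, so std::terminate is never reached.
int RunWorker(bool return_early) {
  std::atomic<int> result{0};
  {
    ToyThreadGuard guard(std::thread([&result] { result.store(42); }));
    if (return_early) return -1;  // guard is destroyed here, joining first
  }
  // The guard has joined the worker, so its write is visible.
  assert(result.load() == 42);
  return result.load();
}
```

Because `guard` is declared after `result`, it is destroyed first, so the worker never touches a destroyed variable.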

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8112

Test Plan:

```

make check

gtest-parallel --repeat=10000 ./external_sst_file_test --gtest_filter="*PickedLevelBug"

```

Reviewed By: riversand963

Differential Revision: D27343185

Pulled By: ltamasi

fbshipit-source-id: 2a8c3aa68bc78cc03ec0dbae909fb25c2cd15c69

Summary:

There is a bug in the current code base introduced in https://github.com/facebook/rocksdb/issues/8049: we still treat the case of an SST file write IO error only as a hard error. Fix it by removing the logic.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8107

Test Plan: make check, error_handler_fs_test

Reviewed By: anand1976

Differential Revision: D27321422

Pulled By: zhichao-cao

fbshipit-source-id: c014afc1553ca66b655e3bbf9d0bf6eb417ccf94

Summary:

Previously it only applied to block-based tables generated by flush. This restriction

was undocumented and blocked a new use case. Now compression sampling

applies to all block-based tables we generate when it is enabled.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8105

Test Plan: new unit test

Reviewed By: riversand963

Differential Revision: D27317275

Pulled By: ajkr

fbshipit-source-id: cd9fcc5178d6515e8cb59c6facb5ac01893cb5b0

Summary:

`strerror()` is not thread-safe, so use `strerror_r()` instead. The API differs between platforms, so the code is adapted from 0deef031cb/folly/String.cpp (L457)
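One portable way to cope with the two `strerror_r()` flavors (XSI returns `int`, GNU returns `char*`) is to let overload resolution pick the right handler. This is only a sketch of that technique and not necessarily how the referenced code does it; `ErrnoToString` and the `toy_detail` helpers are hypothetical names, and a POSIX system is assumed.

```cpp
#include <cerrno>
#include <cstring>
#include <string>

namespace toy_detail {
// XSI variant: returns 0 on success and writes the message into `buf`.
inline std::string FromResult(int rc, const char* buf, int err) {
  if (rc != 0) return "Unknown error " + std::to_string(err);
  return buf;
}
// GNU variant: returns a pointer to the message, which may or may not
// be `buf`.
inline std::string FromResult(const char* msg, const char* /*buf*/,
                              int err) {
  if (msg == nullptr) return "Unknown error " + std::to_string(err);
  return msg;
}
}  // namespace toy_detail

// Thread-safe replacement for strerror(err).
std::string ErrnoToString(int err) {
  char buf[1024];
  buf[0] = '\0';
  // The compiler selects the overload matching this platform's return type.
  return toy_detail::FromResult(strerror_r(err, buf, sizeof(buf)), buf, err);
}
```

The buffer lives on the caller's stack, so concurrent calls from different threads do not share state.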

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8087

Reviewed By: mrambacher

Differential Revision: D27267151

Pulled By: jay-zhuang

fbshipit-source-id: 4b8856d1ec069d5f239b764750682c56e5be9ddb

Summary:

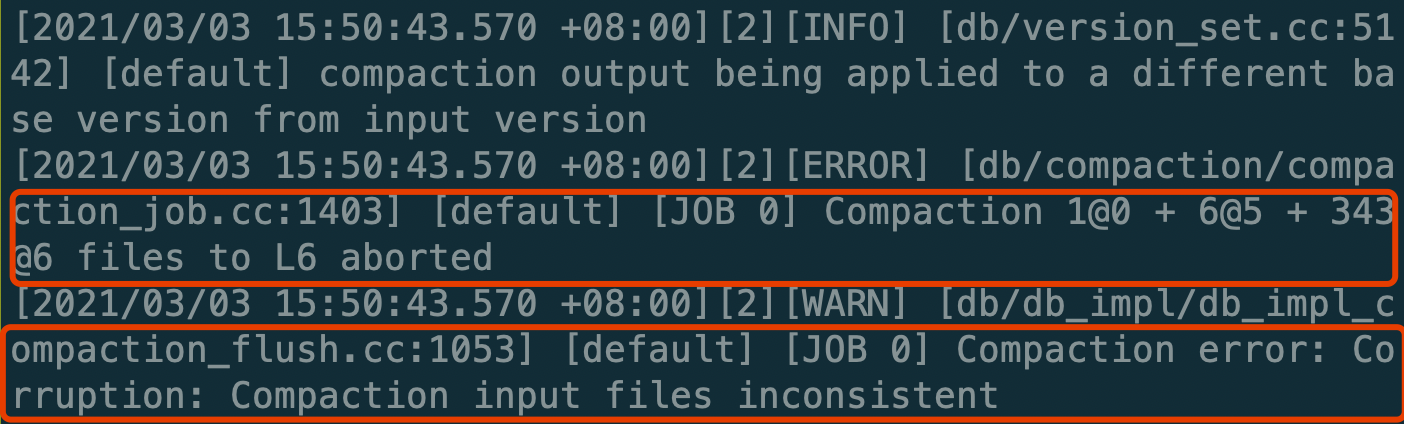

When doing CompactFiles on files from multiple levels (num_level > 2) with L0 included, the compaction would fail like this.

The reason is that `VerifyCompactionFileConsistency` checks that the levels between L0 and the base level are empty, but it wrongly regards the compaction triggered by `CompactFiles` as an L0 -> base level compaction.

The condition was committed several years ago and is no longer correct:

```c++

if (vstorage->compaction_style_ == kCompactionStyleLevel &&

c->start_level() == 0 && c->num_input_levels() > 2U)

```

So this PR just deletes the incorrect check.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8024

Test Plan: make check

Reviewed By: jay-zhuang

Differential Revision: D26907060

Pulled By: ajkr

fbshipit-source-id: 538cef32faf464cd422e3f8de236ea3e58880c2b

Summary:

Improved handling of -bits_per_key other than 10, but at least

the OptimizeForMemory test is simply not designed for generally handling

other settings. (ribbon_test does have a statistical framework for this

kind of testing, but it's not important to do the same for Bloom right

now.)

Closes https://github.com/facebook/rocksdb/issues/7019

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8093

Test Plan: for I in `seq 1 20`; do ./bloom_test --gtest_filter=-*OptimizeForMemory* --bits_per_key=$I &> /dev/null || echo FAILED; done

Reviewed By: mrambacher

Differential Revision: D27275875

Pulled By: pdillinger

fbshipit-source-id: 7362e8ac2c41ea11f639412e4f30c8b375f04388

Summary:

Fix a race condition in

DBSSTTest.DBWithMaxSpaceAllowedWithBlobFiles where the background flush

thread updates delete_blob_file, but Flush() in the test thread has already

completed after getting bg_error, so delete_blob_file remains false.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8092

Test Plan: Ran the ASAN job a few times on CircleCI

Reviewed By: riversand963

Differential Revision: D27275815

Pulled By: akankshamahajan15

fbshipit-source-id: 2939ad1671403881573bbe07c71aa474c5019130