Tag:

Branch:

Tree:

b83263bbe4

main

oxigraph-8.1.1

oxigraph-8.3.2

oxigraph-main

${ noResults }

1996 Commits (b83263bbe4fc24c6b3100904efa655717e24ee80)

| Author | SHA1 | Message | Date |

|---|---|---|---|

|

|

a8a422e962 |

Add manifest fix-up utility for file temperatures (#9683)

Summary: The goal of this change is to allow changes to the "current" (in FileSystem) file temperatures to feed back into DB metadata, so that they can inform decisions and stats reporting. In part because of modular code factoring, it doesn't seem easy to do this automagically, where opening an SST file and observing current Temperature different from expected would trigger a change in metadata and DB manifest write (essentially giving the deep read path access to the write path). It is also difficult to do this while the DB is open because of the limitations of LogAndApply. This change allows updating file temperature metadata on a closed DB using an experimental utility function UpdateManifestForFilesState() or `ldb update_manifest --update_temperatures`. This should suffice for "migration" scenarios where outside tooling has placed or re-arranged DB files into a (different) tiered configuration without going through RocksDB itself (currently, only compaction can change temperature metadata). Some details: * Refactored and added unit test for `ldb unsafe_remove_sst_file` because of shared functionality * Pulled in autovector.h changes from https://github.com/facebook/rocksdb/issues/9546 to fix SuperVersionContext move constructor (related to an older draft of this change) Possible follow-up work: * Support updating manifest with file checksums, such as when a new checksum function is used and want existing DB metadata updated for it. * It's possible that for some repair scenarios, lighter weight than full repair, we might want to support UpdateManifestForFilesState() to modify critical file details like size or checksum using same algorithm. But let's make sure these are differentiated from modifying file details in ways that don't suspect corruption (or require extreme trust). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9683 Test Plan: unit tests added Reviewed By: jay-zhuang Differential Revision: D34798828 Pulled By: pdillinger fbshipit-source-id: cfd83e8fb10761d8c9e7f9c020d68c9106a95554 |

4 years ago |

|

|

2586585b0c |

Minor fix for Windows build with zlib (#9699)

Summary: ``` conversion from 'size_t' to 'uLong', possible loss of data ``` Fix https://github.com/facebook/rocksdb/issues/9688 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9699 Reviewed By: riversand963 Differential Revision: D34901116 Pulled By: jay-zhuang fbshipit-source-id: 969148a7a8c023449bd85055a1f0eec71d0a9b3f |

4 years ago |

|

|

09b0e8f2c7 |

Fix a timer crash caused by invalid memory management (#9656)

Summary: Timer crash when multiple DB instances doing heavy DB open and close operations concurrently. Which is caused by adding a timer task with smaller timestamp than the current running task. Fix it by moving the getting new task timestamp part within timer mutex protection. And other fixes: - Disallow adding duplicated function name to timer - Fix a minor memory leak in timer when a running task is cancelled Pull Request resolved: https://github.com/facebook/rocksdb/pull/9656 Reviewed By: ajkr Differential Revision: D34626296 Pulled By: jay-zhuang fbshipit-source-id: 6b6d96a5149746bf503546244912a9e41a0c5f6b |

4 years ago |

|

|

062396af15 |

Avoid popcnt on Windows when unavailable and in portable builds (#9680)

Summary: Fixes https://github.com/facebook/rocksdb/issues/9560. Only use popcnt intrinsic when HAVE_SSE42 is set. Also avoid setting it based on compiler test in portable builds because such test will pass on MSVC even without proper arch flags (ref: https://devblogs.microsoft.com/oldnewthing/20201026-00/?p=104397). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9680 Test Plan: verified the combinations of -DPORTABLE and -DFORCE_SSE42 produce expected compiler flags on Linux. Verified MSVC build using PORTABLE=1 (in CircleCI) does not set HAVE_SSE42. Reviewed By: pdillinger Differential Revision: D34739033 Pulled By: ajkr fbshipit-source-id: d10456f3392945fc3e59430a1777840f7b60b276 |

4 years ago |

|

|

fec4403ff1 |

Integrate WAL compression into log reader/writer. (#9642)

Summary: Integrate the streaming compress/uncompress API into WAL compression. The streaming compression object is stored in the log_writer along with a reusable output buffer to store the compressed buffer(s). The streaming uncompress object is stored in the log_reader along with a reusable output buffer to store the uncompressed buffer(s). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9642 Test Plan: Added unit tests to verify different scenarios - large buffers, split compressed buffers, etc. Future optimizations: The overhead for small records is quite high, so it makes sense to compress only buffers above a certain threshold and use a separate record type to indicate that those records are compressed. Reviewed By: anand1976 Differential Revision: D34709167 Pulled By: sidroyc fbshipit-source-id: a37a3cd1301adff6152fb3fcd23726106af07dd4 |

4 years ago |

|

|

926ee13811 |

Fix corruption error when compressing blob data with zlib. (#9572)

Summary: The plain data length may not be big enough if the compression actually expands data. So use deflateBound() to get the upper limit on the compressed output before deflate(). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9572 Reviewed By: riversand963 Differential Revision: D34326475 Pulled By: ajkr fbshipit-source-id: 4b679cb7a83a62782a127785b4d5eb9aa4646449 |

4 years ago |

|

|

21345d2823 |

Streaming Compression API for WAL compression. (#9619)

Summary: Implement a streaming compression API (compress/uncompress) to use for WAL compression. The log_writer would use the compress class/API to compress a record before writing it out in chunks. The log_reader would use the uncompress class/API to uncompress the chunks and combine into a single record. Added unit test to verify the API for different sizes/compression types. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9619 Test Plan: make -j24 check Reviewed By: anand1976 Differential Revision: D34437346 Pulled By: sidroyc fbshipit-source-id: b180569ad2ddcf3106380f8758b556cc0ad18382 |

4 years ago |

|

|

d795a730be |

Combine data members of IOStatus with Status (#9549)

Summary: Combine the data members retryable_, data_loss_ and scope_ of IOStatus with Status, as protected members. IOStatus is now defined as a derived class of Status with no new data, but additional methods. This will allow us to eventually track the result of FileSystem calls in RocksDB with one variable instead of two. Benchmark commands and results are below. The performance after changes seems slightly better. ```./db_bench -db=/data/mysql/rocksdb/prefix_scan -benchmarks="fillseq" -key_size=32 -value_size=512 -num=5000000 -use_direct_io_for_flush_and_compaction=true -target_file_size_base=16777216``` ```./db_bench -use_existing_db=true --db=/data/mysql/rocksdb/prefix_scan -benchmarks="readseq,seekrandom,readseq" -key_size=32 -value_size=512 -num=5000000 -seek_nexts=10000 -use_direct_reads=true -duration=60 -ops_between_duration_checks=1 -readonly=true -adaptive_readahead=false -threads=1 -cache_size=10485760000``` Before - seekrandom : 3715.432 micros/op 269 ops/sec; 1394.9 MB/s (16149 of 16149 found) seekrandom : 3687.177 micros/op 271 ops/sec; 1405.6 MB/s (16273 of 16273 found) seekrandom : 3709.646 micros/op 269 ops/sec; 1397.1 MB/s (16175 of 16175 found) readseq : 0.369 micros/op 2711321 ops/sec; 1406.6 MB/s readseq : 0.363 micros/op 2754092 ops/sec; 1428.8 MB/s readseq : 0.372 micros/op 2688046 ops/sec; 1394.6 MB/s After - seekrandom : 3606.830 micros/op 277 ops/sec; 1436.9 MB/s (16636 of 16636 found) seekrandom : 3594.467 micros/op 278 ops/sec; 1441.9 MB/s (16693 of 16693 found) seekrandom : 3597.919 micros/op 277 ops/sec; 1440.5 MB/s (16677 of 16677 found) readseq : 0.354 micros/op 2822809 ops/sec; 1464.5 MB/s readseq : 0.358 micros/op 2795080 ops/sec; 1450.1 MB/s readseq : 0.354 micros/op 2822889 ops/sec; 1464.5 MB/s Pull Request resolved: https://github.com/facebook/rocksdb/pull/9549 Reviewed By: pdillinger Differential Revision: D34310362 Pulled By: anand1976 fbshipit-source-id: 54b27756edf9c9ecfe730a2dce542a7a46743096 |

4 years ago |

|

|

30b08878d8 |

Make FilterPolicy Customizable (#9590)

Summary: Make FilterPolicy into a Customizable class. Allow new FilterPolicy to be discovered through the ObjectRegistry Pull Request resolved: https://github.com/facebook/rocksdb/pull/9590 Reviewed By: pdillinger Differential Revision: D34327367 Pulled By: mrambacher fbshipit-source-id: 37e7edac90ec9457422b72f359ab8ef48829c190 |

4 years ago |

|

|

39b0d92153 |

Add record to set WAL compression type if enabled (#9556)

Summary: When WAL compression is enabled, add a record (new record type) to store the compression type to indicate that all subsequent records are compressed. The log reader will store the compression type when this record is encountered and use the type to uncompress the subsequent records. Compress and uncompress to be implemented in subsequent diffs. Enabled WAL compression in some WAL tests to check for regressions. Some tests that rely on offsets have been disabled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9556 Reviewed By: anand1976 Differential Revision: D34308216 Pulled By: sidroyc fbshipit-source-id: 7f10595e46f3277f1ea2d309fbf95e2e935a8705 |

4 years ago |

|

|

8c681087c7 |

Refactor FilterPolicies toward Customizable (#9567)

Summary: Some changes to make it easier to make FilterPolicy customizable. Especially, create distinct classes for the different testing-only and user-facing built-in FilterPolicy modes. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9567 Test Plan: tests updated, with no intended difference in functionality tested. No difference in test performance seen as a result of moving to string-based filter type configuration. Reviewed By: mrambacher Differential Revision: D34234694 Pulled By: pdillinger fbshipit-source-id: 8a94931a9e04c3bcca863a4f524cfd064aaf0122 |

4 years ago |

|

|

fe9d495112 |

Return different Status based on ObjectRegistry::NewObject calls (#9333)

Summary: This fix addresses https://github.com/facebook/rocksdb/issues/9299. If attempting to create a new object via the ObjectRegistry and a factory is not found, the ObjectRegistry will return a "NotSupported" status. This is the same behavior as previously. If the factory is found but could not successfully create the object, an "InvalidArgument" status is returned. If the factory returned a reason why (in the errmsg), this message will be in the returned status. In practice, there are two options in the ConfigOptions that control how these errors are propagated: - If "ignore_unknown_options=true", then both InvalidArgument and NotSupported status codes will be swallowed internally. Both cases will return success - If "ignore_unsupported_options=true", then having no factory will return success but a failing factory will return an error - If both options are false, both cases (no and failing factory) will return errors. In practice this likely only changes Customizable that may be partially available. For example, the JEMallocMemoryAllocator is a built-in allocator that is registered with the system but may not be compiled in. In this case, the status code for this allocator changed from NotSupported("JEMalloc not available") to InvalidArgumen("JEMalloc not available"). Other Customizable builtins/plugins would have the same semantics. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9333 Reviewed By: pdillinger Differential Revision: D33517681 Pulled By: mrambacher fbshipit-source-id: 8033052d4a4a7b88c2d9f90147b1b4467e51f6fd |

4 years ago |

|

|

68a9c186d0 |

FilterPolicy API changes for 7.0 (#9501)

Summary: * Inefficient block-based filter is no longer customizable in the public API, though (for now) can still be enabled. * Removed deprecated FilterPolicy::CreateFilter() and FilterPolicy::KeyMayMatch() * Removed `rocksdb_filterpolicy_create()` from C API * Change meaning of nullptr return from GetBuilderWithContext() from "use block-based filter" to "generate no filter in this case." This is a cleaner solution to the proposal in https://github.com/facebook/rocksdb/issues/8250. * Also, when user specifies bits_per_key < 0.5, we now round this down to "no filter" because we expect a filter with >= 80% FP rate is unlikely to be worth the CPU cost of accessing it (esp with cache_index_and_filter_blocks=1 or partition_filters=1). * bits_per_key >= 0.5 and < 1.0 is still rounded up to 1.0 (for 62% FP rate) * This also gives us some support for configuring filters from OPTIONS file as currently saved: `filter_policy=rocksdb.BuiltinBloomFilter`. Opening from such an options file will enable reading filters (an improvement) but not writing new ones. (See Customizable follow-up below.) * Also removed deprecated functions * FilterBitsBuilder::CalculateNumEntry() * FilterPolicy::GetFilterBitsBuilder() * NewExperimentalRibbonFilterPolicy() * Remove default implementations of * FilterBitsBuilder::EstimateEntriesAdded() * FilterBitsBuilder::ApproximateNumEntries() * FilterPolicy::GetBuilderWithContext() * Remove support for "filter_policy=experimental_ribbon" configuration string. * Allow "filter_policy=bloomfilter:n" without bool to discourage use of block-based filter. Some pieces for https://github.com/facebook/rocksdb/issues/9389 Likely follow-up (later PRs): * Refactoring toward FilterPolicy Customizable, so that we can generate filters with same configuration as before when configuring from options file. * Remove support for user enabling block-based filter (ignore `bool use_block_based_builder`) * Some months after this change, we could even remove read support for block-based filter, because it is not critical to DB data preservation. * Make FilterBitsBuilder::FinishV2 to avoid `using FilterBitsBuilder::Finish` mess and add support for specifying a MemoryAllocator (for cache warming) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9501 Test Plan: A number of obsolete tests deleted and new tests or test cases added or updated. Reviewed By: hx235 Differential Revision: D34008011 Pulled By: pdillinger fbshipit-source-id: a39a720457c354e00d5b59166b686f7f59e392aa |

4 years ago |

|

|

036bbab6f7 |

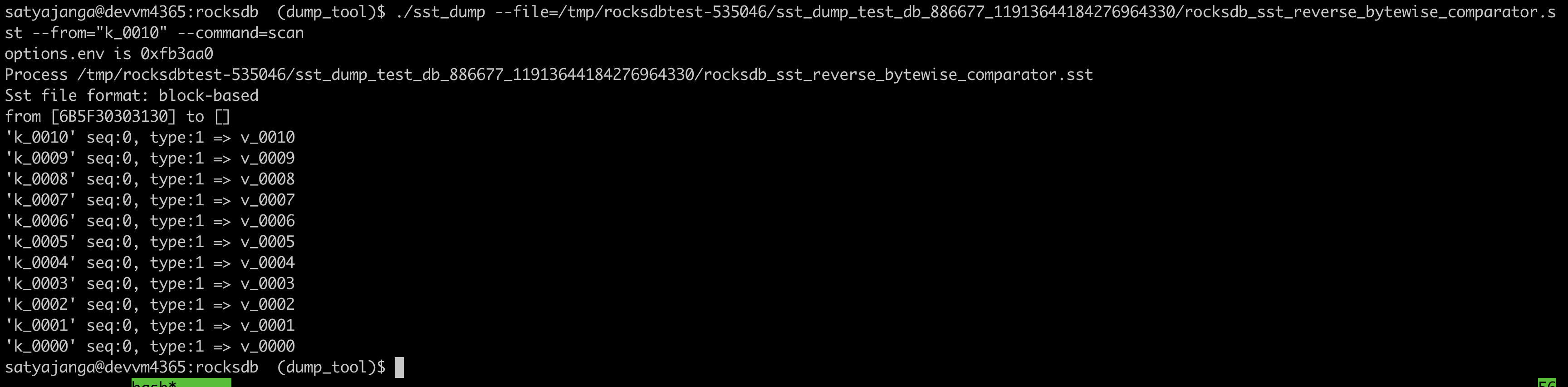

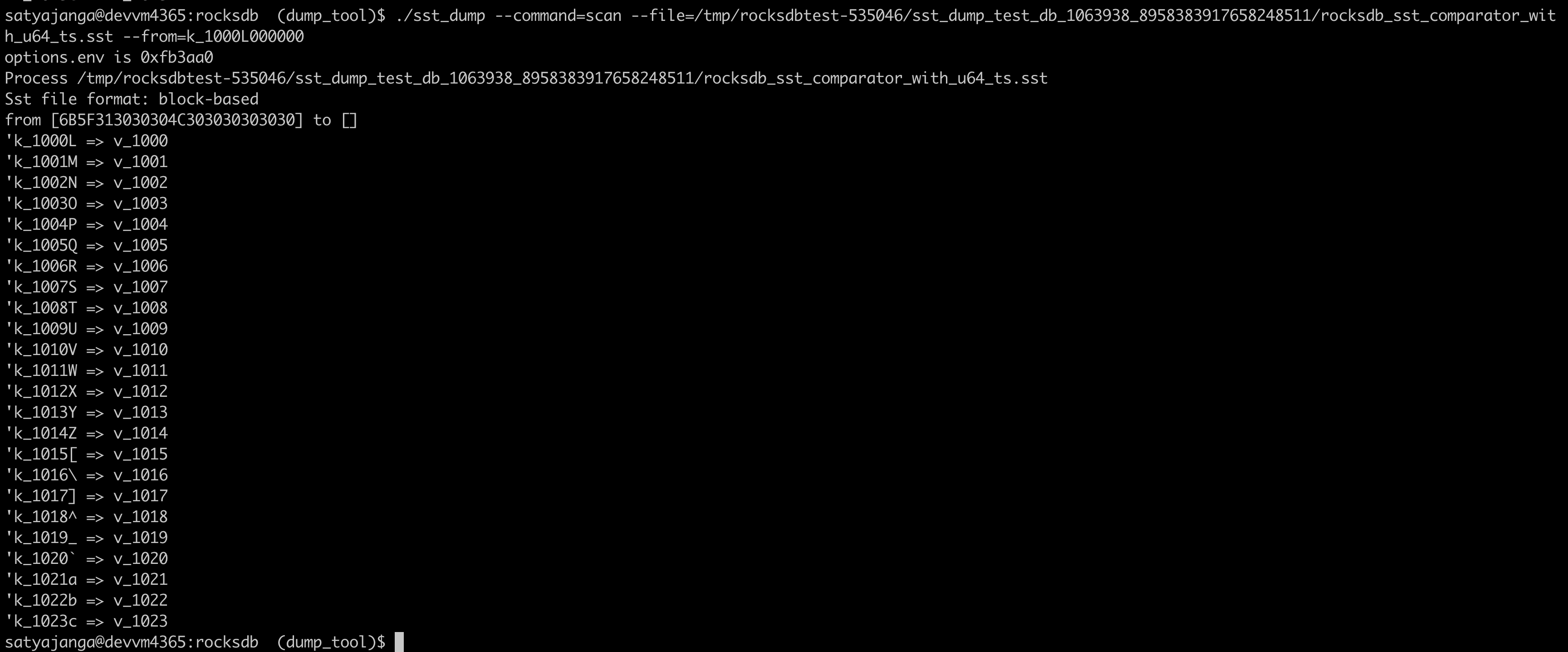

Use the comparator from the sst file table properties in sst_dump_tool (#9491)

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536 |

4 years ago |

|

|

5cb137a860 |

Work around some new clang-analyze failures (#9515)

Summary: ... seen only in internal clang-analyze runs after https://github.com/facebook/rocksdb/issues/9481 * Mostly, this works around falsely reported leaks by using std::unique_ptr in some places where clang-analyze was getting confused. (I didn't see any changes in C++17 that could make our Status implementation leak memory.) * Also fixed SetBGError returning address of a stack variable. * Also fixed another false null deref report by adding an assert. Also, use SKIP_LINK=1 to speed up `make analyze` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9515 Test Plan: Was able to reproduce the reported errors locally and verify they're fixed (except SetBGError). Otherwise, existing tests Reviewed By: hx235 Differential Revision: D34054630 Pulled By: pdillinger fbshipit-source-id: 38600ef3da75ddca307dff96b7a1a523c2885c2e |

4 years ago |

|

|

fd3e0f43b3 |

Require C++17 (#9481)

Summary: Drop support for some old compilers by requiring C++17 standard (or higher). See https://github.com/facebook/rocksdb/issues/9388 First modification based on this is to remove some conditional compilation in slice.h (also better for ODR) Also in this PR: * Fix some Makefile formatting that seems to affect ASSERT_STATUS_CHECKED config in some cases * Add c_test to NON_PARALLEL_TEST in Makefile * Fix a clang-analyze reported "potential leak" in lru_cache_test * Better "compatibility" definition of DEFINE_uint32 for old versions of gflags * Fix a linking problem with shared libraries in Makefile (`./random_test: error while loading shared libraries: librocksdb.so.6.29: cannot open shared object file: No such file or directory`) * Always set ROCKSDB_SUPPORT_THREAD_LOCAL and use thread_local (from C++11) * TODO in later PR: clean up that obsolete flag * Fix a cosmetic typo in c.h (https://github.com/facebook/rocksdb/issues/9488) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9481 Test Plan: CircleCI config substantially updated. * Upgrade to latest Ubuntu images for each release * Generally prefer Ubuntu 20, but keep a couple Ubuntu 16 builds with oldest supported compilers, to ensure compatibility * Remove .circleci/cat_ignore_eagain except for Ubuntu 16 builds, because this is to work around a kernel bug that should not affect anything but Ubuntu 16. * Remove designated gcc-9 build, because the default linux build now uses GCC 9 from Ubuntu 20. * Add some `apt-key add` to fix some apt "couldn't be verified" errors * Generally drop SKIP_LINK=1; work-around no longer needed * Generally `add-apt-repository` before `apt-get update` as manual testing indicated the reverse might not work. Travis: * Use gcc-7 by default (remove specific gcc-7 and gcc-4.8 builds) * TODO in later PR: fix s390x "Assembler messages: Error: invalid switch -march=z14" failure AppVeyor: * Completely dropped because we are dropping VS2015 support and CircleCI covers VS >= 2017 Also local testing with old gflags (out of necessity when using ROCKSDB_NO_FBCODE=1). Reviewed By: mrambacher Differential Revision: D33946377 Pulled By: pdillinger fbshipit-source-id: ae077c823905b45370a26c0103ada119459da6c1 |

4 years ago |

|

|

920386f2b7 |

Detect (new) Bloom/Ribbon Filter construction corruption (#9342)

Summary: Note: rebase on and merge after https://github.com/facebook/rocksdb/pull/9349, https://github.com/facebook/rocksdb/pull/9345, (optional) https://github.com/facebook/rocksdb/pull/9393 **Context:** (Quoted from pdillinger) Layers of information during new Bloom/Ribbon Filter construction in building block-based tables includes the following: a) set of keys to add to filter b) set of hashes to add to filter (64-bit hash applied to each key) c) set of Bloom indices to set in filter, with duplicates d) set of Bloom indices to set in filter, deduplicated e) final filter and its checksum This PR aims to detect corruption (e.g, unexpected hardware/software corruption on data structures residing in the memory for a long time) from b) to e) and leave a) as future works for application level. - b)'s corruption is detected by verifying the xor checksum of the hash entries calculated as the entries accumulate before being added to the filter. (i.e, `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()`) - c) - e)'s corruption is detected by verifying the hash entries indeed exists in the constructed filter by re-querying these hash entries in the filter (i.e, `FilterBitsBuilder::MaybePostVerify()`) after computing the block checksum (except for PartitionFilter, which is done right after each `FilterBitsBuilder::Finish` for impl simplicity - see code comment for more). For this stage of detection, we assume hash entries are not corrupted after checking on b) since the time interval from b) to c) is relatively short IMO. Option to enable this feature of detection is `BlockBasedTableOptions::detect_filter_construct_corruption` which is false by default. **Summary:** - Implemented new functions `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()` and `FilterBitsBuilder::MaybePostVerify()` - Ensured hash entries, final filter and banding and their [cache reservation ](https://github.com/facebook/rocksdb/issues/9073) are released properly despite corruption - See [Filter.construction.artifacts.release.point.pdf ](https://github.com/facebook/rocksdb/files/7923487/Design.Filter.construction.artifacts.release.point.pdf) for high-level design - Bundled and refactored hash entries's related artifact in XXPH3FilterBitsBuilder into `HashEntriesInfo` for better control on lifetime of these artifact during `SwapEntires`, `ResetEntries` - Ensured RocksDB block-based table builder calls `FilterBitsBuilder::MaybePostVerify()` after constructing the filter by `FilterBitsBuilder::Finish()` - When encountering such filter construction corruption, stop writing the filter content to files and mark such a block-based table building non-ok by storing the corruption status in the builder. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9342 Test Plan: - Added new unit test `DBFilterConstructionCorruptionTestWithParam.DetectCorruption` - Included this new feature in `DBFilterConstructionReserveMemoryTestWithParam.ReserveMemory` as this feature heavily touch ReserveMemory's impl - For fallback case, I run `./filter_bench -impl=3 -detect_filter_construct_corruption=true -reserve_table_builder_memory=true -strict_capacity_limit=true -quick -runs 10 | grep 'Build avg'` to make sure nothing break. - Added to `filter_bench`: increased filter construction time by **30%**, mostly by `MaybePostVerify()` - FastLocalBloom - Before change: `./filter_bench -impl=2 -quick -runs 10 | grep 'Build avg'`: **28.86643s** - After change: - `./filter_bench -impl=2 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless): **27.6644s (-4% perf improvement might be due to now we don't drop bloom hash entry in `AddAllEntries` along iteration but in bulk later, same with the bypassing-MaybePostVerify case below)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (expect acceptable increase): **34.41159s (+20%)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (by-passing MaybePostVerify, expect minor increase): **27.13431s (-6%)** - Standard128Ribbon - Before change: `./filter_bench -impl=3 -quick -runs 10 | grep 'Build avg'`: **122.5384s** - After change: - `./filter_bench -impl=3 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless - verified by removing MaybePostVerify under this case and found only +-1ns difference): **124.3588s (+2%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(expect acceptable increase): **159.4946s (+30%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(by-passing MaybePostVerify, expect minor increase) : **125.258s (+2%)** - Added to `db_stress`: `make crash_test`, `./db_stress --detect_filter_construct_corruption=true` - Manually smoke-tested: manually corrupted the filter construction in some db level tests with basic PUT and background flush. As expected, the error did get returned to users in subsequent PUT and Flush status. Reviewed By: pdillinger Differential Revision: D33746928 Pulled By: hx235 fbshipit-source-id: cb056426be5a7debc1cd16f23bc250f36a08ca57 |

4 years ago |

|

|

272ce445d6 |

remove unused instance variable in GenericRateLimiter (#9484)

Summary: As reported in https://github.com/facebook/rocksdb/pull/2899#issuecomment-1001467021, `prev_num_drains_` is confusing as we never set it to nonzero. So this PR removes it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9484 Test Plan: `make check -j24` Reviewed By: hx235 Differential Revision: D33923203 Pulled By: ajkr fbshipit-source-id: 6277d50a198b90646583ee8094c2e6a1bbdadc7b |

4 years ago |

|

|

dd203ed604 |

Disallow a combination of options (#9348)

Summary: Disallow `immutable_db_opts.use_direct_io_for_flush_and_compaction == true` and `mutable_db_opts.writable_file_max_buffer_size == 0`, since it causes `WritableFileWriter::Append()` to loop forever and does not make much sense in direct IO. This combination of options itself does not make much sense: asking RocksDB to do direct IO but not allowing RocksDB to allocate a buffer. We should detect this false combination and warn user early, no matter whether the application is running on a platform that supports direct IO or not. In the case of platform **not** supporting direct IO, it's ok if the user learns about this and then finds that direct IO is not supported. One tricky thing: the constructor of `WritableFileWriter` is being used in our unit tests, and it's impossible to return status code from constructor. Since we do not throw, I put an assertion for now. Fortunately, the constructor is not exposed to external applications. Closing https://github.com/facebook/rocksdb/issues/7109 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9348 Test Plan: make check Reviewed By: ajkr Differential Revision: D33371924 Pulled By: riversand963 fbshipit-source-id: 2a3701ab541cee23bffda8a36cdf37b2d235edfa |

4 years ago |

|

|

7d7085c4e8 |

Fix LITE build for SliceTransform::AsString (#9460)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9460 Reviewed By: pdillinger Differential Revision: D33830275 Pulled By: mrambacher fbshipit-source-id: 65dd1496e0291013085fdc3cce6ae3bf6dc955b5 |

4 years ago |

|

|

ea89c77f27 |

Fix major bug with MultiGet, DeleteRange, and memtable Bloom (#9453)

Summary: MemTable::MultiGet was not considering range tombstones before querying Bloom filter. This means range tombstones would be skipped for keys (or prefixes) with no other entries in the memtable. This could cause old values for a key (in SST files) to still show up until the range tombstone covering it has been flushed. This is fixed by essentially disabling the memtable Bloom filter when there are any range tombstones. (This could be better optimized in the future, but good enough for now.) Did some other cleanup/optimization in the same code to (more than) offset the cost of checking on range tombstones in more cases. There is now notable improvement when memtable_whole_key_filtering and prefix_extractor are used together (unusual), and this makes MultiGet closer to the Get implementation. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9453 Test Plan: new unit test added. Added memtable Bloom to crash test. Performance testing -------------------- Build WAL-only DB (recovers to memtable): ``` TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=1000000 -write_buffer_size=250000000 ``` Query test command, to maximize sensitivity to the changed code: ``` TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=multireadrandom -num=10000000 -write_buffer_size=250000000 -memtable_bloom_size_ratio=0.015 -multiread_batched -batch_size=24 -threads=8 -memtable_whole_key_filtering=$MWKF -prefix_size=$PXS ``` (Note -num here is 10x larger for mostly memtable misses) Before & after run simultaneously, average over 10 iterations per data point, ops/sec. MWKF=0 PXS=0 (Bloom disabled) Before: 5724844 After: 6722066 MWKF=0 PXS=7 (prefixes hardly unique; Bloom not useful) Before: 9981319 After: 10237990 MWKF=0 PXS=8 (prefixes unique; Bloom useful) Before: 12081715 After: 12117603 MWKF=1 PXS=0 (whole key Bloom useful) Before: 11944354 After: 12096085 MWKF=1 PXS=7 (whole key Bloom useful in new version; prefixes not useful in old version) Before: 9444299 After: 11826029 MWKF=1 PXS=7 (whole key Bloom useful in new version; prefixes useful in old version) Before: 11784465 After: 11778591 Only in this last case is the 'before' *slightly* faster, perhaps because hashing prefixes is slightly faster than hashing whole keys. Otherwise, 'after' is faster. Reviewed By: ajkr Differential Revision: D33805025 Pulled By: pdillinger fbshipit-source-id: 597523cae4f4eafdf6ae6bb2bc6cb46f83b017bf |

4 years ago |

|

|

37ec9d0c12 |

Improve performance of SliceTransform::AsString (#9401)

Summary: 1. Removed the options from the Capped/Fixed SliceTransforms. Instead these classes are created with id.number. This allows the GetID() id to be calculated and stored at class construction time. This change puts the construction back to similar to how it was prior to the Customizable changes for SliceTransform. 2. Improve the performance of AsString by using the ID only if there are no option properties (which is the case for all of the builtin transforms). Ran tests of calling AsString in a loop 5M times and found approximately a 10x performance increase vs the original code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9401 Reviewed By: pdillinger Differential Revision: D33668672 Pulled By: mrambacher fbshipit-source-id: d0075912c6ece8ed754ee543bc6b0b49a169b309 |

4 years ago |

|

|

449029f865 |

Remove deprecated ObjectLibrary::Register() (and Regex public API) (#9439)

Summary: Regexes are considered potentially problematic for use in registering RocksDB extensions, so we are removing ObjectLibrary::Register() and the Regex public API it depended on (now unused). In reference to https://github.com/facebook/rocksdb/issues/9389 Why? * The power of Regexes can make it hard to reason about which extension will match what. (The replacement API isn't perfect, but we are at least "holding the line" on patterns we have seen in practice.) * It is easy to make regexes that don't quite mean what you think they mean, such as forgetting that the `.` in `foo.bar` can match any character or that matching is nondeterministic, as in `a🅱️42` matching `.*:[0-9]+`. * Some regexes and implementations can have disastrously bad performance. This might not be much practical concern for ObjectLibray here, but we don't want to encourage potentially dangerous further use in production code. (Testing code is fine. See TestRegex.) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9439 Test Plan: CI Reviewed By: mrambacher Differential Revision: D33792342 Pulled By: pdillinger fbshipit-source-id: 4f64dcb04764e639162c8977a5fa196f67754cec |

4 years ago |

|

|

fc9d4071f0 |

Fast path for detecting unchanged prefix_extractor (#9407)

Summary: Fixes a major performance regression in 6.26, where extra CPU is spent in SliceTransform::AsString when reads involve a prefix_extractor (Get, MultiGet, Seek). Common case performance is now better than 6.25. This change creates a "fast path" for verifying that the current prefix extractor is unchanged and compatible with what was used to generate a table file. This fast path detects the common case by pointer comparison on the current prefix_extractor and a "known good" prefix extractor (if applicable) that is saved at the time the table reader is opened. The "known good" prefix extractor is saved as another shared_ptr copy (in an existing field, however) to ensure the pointer is not recycled. When the prefix_extractor has changed to a different instance but same compatible configuration (rare, odd), performance is still a regression compared to 6.25, but this is likely acceptable because of the oddity of such a case. The performance of incompatible prefix_extractor is essentially unchanged. Also fixed a minor case (ForwardIterator) where a prefix_extractor could be used via a raw pointer after being freed as a shared_ptr, if replaced via SetOptions. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9407 Test Plan: ## Performance Populate DB with `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=10000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12` Running head-to-head comparisons simultaneously with `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=seekrandom -num=10000000 -duration=20 -disable_wal=1 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12` Below each is compared by ops/sec vs. baseline which is version 6.25 (multiple baseline runs because of variable machine load) v6.26: 4833 vs. 6698 (<- major regression!) v6.27: 4737 vs. 6397 (still) New: 6704 vs. 6461 (better than baseline in common case) Disabled fastpath: 4843 vs. 6389 (e.g. if prefix extractor instance changes but is still compatible) Changed prefix size (no usable filter) in new: 787 vs. 5927 Changed prefix size (no usable filter) in new & baseline: 773 vs. 784 Reviewed By: mrambacher Differential Revision: D33677812 Pulled By: pdillinger fbshipit-source-id: 571d9711c461fb97f957378a061b7e7dbc4d6a76 |

4 years ago |

|

|

0376869f05 |

Remove using namespace (#9369)

Summary: As title. This is part of an fb-internal task. First, remove all `using namespace` statements if applicable. Next, utilize multiple build platforms and see if anything is broken. Should anything become broken, fix the compilation errors with as little extra change as possible. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9369 Test Plan: internal build and make check make clean && make static_lib && cd examples && make all Reviewed By: pdillinger Differential Revision: D33517260 Pulled By: riversand963 fbshipit-source-id: 3fc4ce6402a073421dfd9a9b2d1c79441dca7a40 |

4 years ago |

|

|

1973fcba11 |

Restore Regex support for ObjectLibrary::Register, rename new APIs to allow old one to be deprecated in the future (#9362)

Summary: In order to support old-style regex function registration, restored the original "Register<T>(string, Factory)" method using regular expressions. The PatternEntry methods were left in place but renamed to AddFactory. The goal is to allow for the deprecation of the original regex Registry method in an upcoming release. Added modes to the PatternEntry kMatchZeroOrMore and kMatchAtLeastOne to match * or +, respectively (kMatchAtLeastOne was the original behavior). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9362 Reviewed By: pdillinger Differential Revision: D33432562 Pulled By: mrambacher fbshipit-source-id: ed88ab3f9a2ad0d525c7bd1692873f9bb3209d02 |

4 years ago |

|

|

9110685e8c |

Release cache reservation of hash entries of the fall-back Ribbon Filter earlier (#9345)

Summary: Note: rebase on and merge after https://github.com/facebook/rocksdb/pull/9349, as part of https://github.com/facebook/rocksdb/pull/9342 **Context:** https://github.com/facebook/rocksdb/pull/9073 charged the hash entries' memory in block cache with `CacheReservationHandle`. However, in the edge case where Ribbon Filter falls back to Bloom Filter and swaps its hash entries to the embedded bloom filter object, the handles associated with those entries are not swapped and thus not released as soon as those entries are cleared during Bloom Filter's finish process. Although this is a minor issue since RocksDB internal calls `FilterBitsBuilder->Reset()` right after `FilterBitsBuilder->Finish()` on the main path, which releases all the cache reservation related to both the Ribbon Filter and its embedded Bloom Filter, it still worths this fix to avoid confusion. **Summary:** - Swapped the `CacheReservationHandle` associated with the hash entries on Ribbon Filter's fallback Pull Request resolved: https://github.com/facebook/rocksdb/pull/9345 Test Plan: - Added a unit test to verify the number of cache reservation after clearing hash entries, which failed before the change and now succeeds Reviewed By: pdillinger Differential Revision: D33377225 Pulled By: hx235 fbshipit-source-id: 7487f4c40dfb6ee7928232021f93ef2c5329cffa |

4 years ago |

|

|

1c39b7952b |

Remove/Reduce use of Regex in ObjectRegistry/Library (#9264)

Summary: Added new ObjectLibrary::Entry classes to replace/reduce the use of Regex. For simple factories that only do name matching, there are "StringEntry" and "AltStringEntry" classes. For classes that use some semblance of regular expressions, there is a PatternEntry class that can match a name and prefixes. There is also a class for Customizable::IndividualId format matches. Added tests for the new derivative classes and got all unit tests to pass. Resolves https://github.com/facebook/rocksdb/issues/9225. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9264 Reviewed By: pdillinger Differential Revision: D33062001 Pulled By: mrambacher fbshipit-source-id: c2d2143bd2d38bdf522705c8280c35381b135c03 |

4 years ago |

|

|

cc1d4e3d33 |

gcc-11 and cmake related cleanup (#9286)

Summary:

in hope to get rockdb compiled with GCC-11 without warning

* util/bloom_test: init a variable before using it

to silence the GCC warning like

```

util/bloom_test.cc:1253:31: error: ‘<anonymous>’ may be used uninitialized [-Werror=maybe-uninitialized]

1253 | Slice key_slice{key_bytes, 8};

| ^

...

include/rocksdb/slice.h:41:3: note: by argument 2 of type ‘const char*’ to ‘rocksdb::Slice::Slice(const char*, size_t)’ declared here

41 | Slice(const char* d, size_t n) : data_(d), size_(n) {}

| ^~~~~

util/bloom_test.cc:1249:3: note: ‘<anonymous>’ declared here

1249 | };

| ^

cc1plus: all warnings being treated as errors

```

* cmake: add find_package(uring ...)

find liburing in a more consistent way. also it is the encouraged way for finding a library.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9286

Reviewed By: mrambacher

Differential Revision: D33165241

Pulled By: jay-zhuang

fbshipit-source-id: 9f3487e11b4e40fd8f1c97c8facb24a190e5ce31

|

4 years ago |

|

|

0050a73a4f |

New stable, fixed-length cache keys (#9126)

Summary: This change standardizes on a new 16-byte cache key format for block cache (incl compressed and secondary) and persistent cache (but not table cache and row cache). The goal is a really fast cache key with practically ideal stability and uniqueness properties without external dependencies (e.g. from FileSystem). A fixed key size of 16 bytes should enable future optimizations to the concurrent hash table for block cache, which is a heavy CPU user / bottleneck, but there appears to be measurable performance improvement even with no changes to LRUCache. This change replaces a lot of disjointed and ugly code handling cache keys with calls to a simple, clean new internal API (cache_key.h). (Preserving the old cache key logic under an option would be very ugly and likely negate the performance gain of the new approach. Complete replacement carries some inherent risk, but I think that's acceptable with sufficient analysis and testing.) The scheme for encoding new cache keys is complicated but explained in cache_key.cc. Also: EndianSwapValue is moved to math.h to be next to other bit operations. (Explains some new include "math.h".) ReverseBits operation added and unit tests added to hash_test for both. Fixes https://github.com/facebook/rocksdb/issues/7405 (presuming a root cause) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9126 Test Plan: ### Basic correctness Several tests needed updates to work with the new functionality, mostly because we are no longer relying on filesystem for stable cache keys so table builders & readers need more context info to agree on cache keys. This functionality is so core, a huge number of existing tests exercise the cache key functionality. ### Performance Create db with `TEST_TMPDIR=/dev/shm ./db_bench -bloom_bits=10 -benchmarks=fillrandom -num=3000000 -partition_index_and_filters` And test performance with `TEST_TMPDIR=/dev/shm ./db_bench -readonly -use_existing_db -bloom_bits=10 -benchmarks=readrandom -num=3000000 -duration=30 -cache_index_and_filter_blocks -cache_size=250000 -threads=4` using DEBUG_LEVEL=0 and simultaneous before & after runs. Before ops/sec, avg over 100 runs: 121924 After ops/sec, avg over 100 runs: 125385 (+2.8%) ### Collision probability I have built a tool, ./cache_bench -stress_cache_key to broadly simulate host-wide cache activity over many months, by making some pessimistic simplifying assumptions: * Every generated file has a cache entry for every byte offset in the file (contiguous range of cache keys) * All of every file is cached for its entire lifetime We use a simple table with skewed address assignment and replacement on address collision to simulate files coming & going, with quite a variance (super-Poisson) in ages. Some output with `./cache_bench -stress_cache_key -sck_keep_bits=40`: ``` Total cache or DBs size: 32TiB Writing 925.926 MiB/s or 76.2939TiB/day Multiply by 9.22337e+18 to correct for simulation losses (but still assume whole file cached) ``` These come from default settings of 2.5M files per day of 32 MB each, and `-sck_keep_bits=40` means that to represent a single file, we are only keeping 40 bits of the 128-bit cache key. With file size of 2\*\*25 contiguous keys (pessimistic), our simulation is about 2\*\*(128-40-25) or about 9 billion billion times more prone to collision than reality. More default assumptions, relatively pessimistic: * 100 DBs in same process (doesn't matter much) * Re-open DB in same process (new session ID related to old session ID) on average every 100 files generated * Restart process (all new session IDs unrelated to old) 24 times per day After enough data, we get a result at the end: ``` (keep 40 bits) 17 collisions after 2 x 90 days, est 10.5882 days between (9.76592e+19 corrected) ``` If we believe the (pessimistic) simulation and the mathematical generalization, we would need to run a billion machines all for 97 billion days to expect a cache key collision. To help verify that our generalization ("corrected") is robust, we can make our simulation more precise with `-sck_keep_bits=41` and `42`, which takes more running time to get enough data: ``` (keep 41 bits) 16 collisions after 4 x 90 days, est 22.5 days between (1.03763e+20 corrected) (keep 42 bits) 19 collisions after 10 x 90 days, est 47.3684 days between (1.09224e+20 corrected) ``` The generalized prediction still holds. With the `-sck_randomize` option, we can see that we are beating "random" cache keys (except offsets still non-randomized) by a modest amount (roughly 20x less collision prone than random), which should make us reasonably comfortable even in "degenerate" cases: ``` 197 collisions after 1 x 90 days, est 0.456853 days between (4.21372e+18 corrected) ``` I've run other tests to validate other conditions behave as expected, never behaving "worse than random" unless we start chopping off structured data. Reviewed By: zhichao-cao Differential Revision: D33171746 Pulled By: pdillinger fbshipit-source-id: f16a57e369ed37be5e7e33525ace848d0537c88f |

4 years ago |

|

|

653c392e47 |

More refactoring ahead of footer & meta changes (#9240)

Summary: I'm working on a new format_version=6 to support context checksum (https://github.com/facebook/rocksdb/issues/9058) and this includes much of the refactoring and test updates to support that change. Test coverage data and manual inspection agree on dead code in block_based_table_reader.cc (removed). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9240 Test Plan: tests enhanced to cover more cases etc. Extreme case performance testing indicates small % regression in fillseq (w/ compaction), though CPU profile etc. doesn't suggest any explanation. There is enhanced correctness checking in Footer::DecodeFrom, but this should be negligible. TEST_TMPDIR=/dev/shm/ ./db_bench -benchmarks=fillseq -memtablerep=vector -allow_concurrent_memtable_write=false -num=30000000 -checksum_type=1 --disable_wal={false,true} (Each is ops/s averaged over 50 runs, run simultaneously with competing configuration for load fairness) Before w/ wal: 454512 After w/ wal: 444820 (-2.1%) Before w/o wal: 1004560 After w/o wal: 998897 (-0.6%) Since this doesn't modify WAL code, one would expect real effects to be larger in w/o wal case. This regression will be corrected in a follow-up PR. Reviewed By: ajkr Differential Revision: D32813769 Pulled By: pdillinger fbshipit-source-id: 444a244eabf3825cd329b7d1b150cddce320862f |

4 years ago |

|

|

7cd5835a28 |

Make RateLimiter Customizable (#9141)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9141 Reviewed By: zhichao-cao Differential Revision: D32432190 Pulled By: mrambacher fbshipit-source-id: 7930ed88a02412128cd407b5063522484e45c6ce |

4 years ago |

|

|

4a7c1dc375 |

Add listener API that notifies on IOError (#9177)

Summary: Add a new API in listener.h that notifies about IOErrors on Read/Write/Append/Flush etc. The API reports about IOStatus, filename, Operation name, offset and length. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9177 Test Plan: Added new unit tests Reviewed By: anand1976 Differential Revision: D32470627 Pulled By: akankshamahajan15 fbshipit-source-id: 189a717033590ae227b3beae8b1e7e185e4cdc12 |

4 years ago |

|

|

74544d582f |

Account Bloom/Ribbon filter construction memory in global memory limit (#9073)

Summary: Note: This PR is the 4th part of a bigger PR stack (https://github.com/facebook/rocksdb/pull/9073) and will rebase/merge only after the first three PRs (https://github.com/facebook/rocksdb/pull/9070, https://github.com/facebook/rocksdb/pull/9071, https://github.com/facebook/rocksdb/pull/9130) merge. **Context:** Similar to https://github.com/facebook/rocksdb/pull/8428, this PR is to track memory usage during (new) Bloom Filter (i.e,FastLocalBloom) and Ribbon Filter (i.e, Ribbon128) construction, moving toward the goal of [single global memory limit using block cache capacity](https://github.com/facebook/rocksdb/wiki/Projects-Being-Developed#improving-memory-efficiency). It also constrains the size of the banding portion of Ribbon Filter during construction by falling back to Bloom Filter if that banding is, at some point, larger than the available space in the cache under `LRUCacheOptions::strict_capacity_limit=true`. The option to turn on this feature is `BlockBasedTableOptions::reserve_table_builder_memory = true` which by default is set to `false`. We [decided](https://github.com/facebook/rocksdb/pull/9073#discussion_r741548409) not to have separate option for separate memory user in table building therefore their memory accounting are all bundled under one general option. **Summary:** - Reserved/released cache for creation/destruction of three main memory users with the passed-in `FilterBuildingContext::cache_res_mgr` during filter construction: - hash entries (i.e`hash_entries`.size(), we bucket-charge hash entries during insertion for performance), - banding (Ribbon Filter only, `bytes_coeff_rows` +`bytes_result_rows` + `bytes_backtrack`), - final filter (i.e, `mutable_buf`'s size). - Implementation details: in order to use `CacheReservationManager::CacheReservationHandle` to account final filter's memory, we have to store the `CacheReservationManager` object and `CacheReservationHandle` for final filter in `XXPH3BitsFilterBuilder` as well as explicitly delete the filter bits builder when done with the final filter in block based table. - Added option fo run `filter_bench` with this memory reservation feature Pull Request resolved: https://github.com/facebook/rocksdb/pull/9073 Test Plan: - Added new tests in `db_bloom_filter_test` to verify filter construction peak cache reservation under combination of `BlockBasedTable::Rep::FilterType` (e.g, `kFullFilter`, `kPartitionedFilter`), `BloomFilterPolicy::Mode`(e.g, `kFastLocalBloom`, `kStandard128Ribbon`, `kDeprecatedBlock`) and `BlockBasedTableOptions::reserve_table_builder_memory` - To address the concern for slow test: tests with memory reservation under `kFullFilter` + `kStandard128Ribbon` and `kPartitionedFilter` take around **3000 - 6000 ms** and others take around **1500 - 2000 ms**, in total adding **20000 - 25000 ms** to the test suit running locally - Added new test in `bloom_test` to verify Ribbon Filter fallback on large banding in FullFilter - Added test in `filter_bench` to verify that this feature does not significantly slow down Bloom/Ribbon Filter construction speed. Local result averaged over **20** run as below: - FastLocalBloom - baseline `./filter_bench -impl=2 -quick -runs 20 | grep 'Build avg'`: - **Build avg ns/key: 29.56295** (DEBUG_LEVEL=1), **29.98153** (DEBUG_LEVEL=0) - new feature (expected to be similar as above)`./filter_bench -impl=2 -quick -runs 20 -reserve_table_builder_memory=true | grep 'Build avg'`: - **Build avg ns/key: 30.99046** (DEBUG_LEVEL=1), **30.48867** (DEBUG_LEVEL=0) - new feature of RibbonFilter with fallback (expected to be similar as above) `./filter_bench -impl=2 -quick -runs 20 -reserve_table_builder_memory=true -strict_capacity_limit=true | grep 'Build avg'` : - **Build avg ns/key: 31.146975** (DEBUG_LEVEL=1), **30.08165** (DEBUG_LEVEL=0) - Ribbon128 - baseline `./filter_bench -impl=3 -quick -runs 20 | grep 'Build avg'`: - **Build avg ns/key: 129.17585** (DEBUG_LEVEL=1), **130.5225** (DEBUG_LEVEL=0) - new feature (expected to be similar as above) `./filter_bench -impl=3 -quick -runs 20 -reserve_table_builder_memory=true | grep 'Build avg' `: - **Build avg ns/key: 131.61645** (DEBUG_LEVEL=1), **132.98075** (DEBUG_LEVEL=0) - new feature of RibbonFilter with fallback (expected to be a lot faster than above due to fallback) `./filter_bench -impl=3 -quick -runs 20 -reserve_table_builder_memory=true -strict_capacity_limit=true | grep 'Build avg'` : - **Build avg ns/key: 52.032965** (DEBUG_LEVEL=1), **52.597825** (DEBUG_LEVEL=0) - And the warning message of `"Cache reservation for Ribbon filter banding failed due to cache full"` is indeed logged to console. Reviewed By: pdillinger Differential Revision: D31991348 Pulled By: hx235 fbshipit-source-id: 9336b2c60f44d530063da518ceaf56dac5f9df8e |

4 years ago |

|

|

2225f063d4 |

Remove incremental ID from background thread pool names (#9165)

Summary: `pthread_setname_np()` fails on attempts to assign oversized names like "rocksdb:bottom10", which resulted in some thread name updates being lost. We do not need the ID suffix so I removed it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9165 Test Plan: ``` $ TEST_TMPDIR=/dev/shm ./db_bench -benchmarks=fillrandom -max_background_flushes=123 -max_background_compactions=456 -num_bottom_pri_threads=789 -duration=60 ``` While above is running: ``` $ ps -o 'comm' -Lp `pidof db_bench` | grep '^rocksdb:' | sort | uniq -c 789 rocksdb:bottom 123 rocksdb:high 456 rocksdb:low ``` Reviewed By: pdillinger Differential Revision: D32415077 Pulled By: ajkr fbshipit-source-id: a0e013101e26a78bc5eca73509293ef4bf22254f |

4 years ago |

|

|

cff7819dff |

Fix BackupEngine's internal callers of GenericRateLimiter::Request() not honoring bytes <= GetSingleBurstBytes() (#9063)

Summary: **Context:** Some existing internal calls of `GenericRateLimiter::Request()` in backupable_db.cc and newly added internal calls in https://github.com/facebook/rocksdb/pull/8722/ do not make sure `bytes <= GetSingleBurstBytes()` as required by rate_limiter https://github.com/facebook/rocksdb/blob/master/include/rocksdb/rate_limiter.h#L47. **Impacts of this bug include:** (1) In debug build, when `GenericRateLimiter::Request()` requests bytes greater than `GenericRateLimiter:: kMinRefillBytesPerPeriod = 100` byte, process will crash due to assertion failure. See https://github.com/facebook/rocksdb/pull/9063#discussion_r737034133 and for possible scenario (2) In production build, although there will not be the above crash due to disabled assertion, the bug can lead to a request of small bytes being blocked for a long time by a request of same priority with insanely large bytes from a different thread. See updated https://github.com/facebook/rocksdb/wiki/Rate-Limiter ("Notice that although....the maximum bytes that can be granted in a single request have to be bounded...") for more info. There is an on-going effort to move rate-limiting to file wrapper level so rate limiting in `BackupEngine` and this PR might be made obsolete in the future. **Summary:** - Implemented loop-calling `GenericRateLimiter::Request()` with `bytes <= GetSingleBurstBytes()` as a static private helper function `BackupEngineImpl::LoopRateLimitRequestHelper` -- Considering make this a util function in `RateLimiter` later or do something with `RateLimiter::RequestToken()` - Replaced buggy internal callers with this helper function wherever requested byte is not pre-limited by `GetSingleBurstBytes()` - Removed the minimum refill bytes per period enforced by `GenericRateLimiter` since it is useless and prevents testing `GenericRateLimiter` for extreme case with small refill bytes per period. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9063 Test Plan: - Added a new test that failed the assertion before this change and now passes - It exposed bugs in [the write during creation in `CopyOrCreateFile()`]( |

4 years ago |

|

|

a64c8ca7a8 |

Sanitize negative request bytes in GenericRateLimiter::Request and clarify API (#9112)

Summary: Context: Surprisingly, there isn't any sanitization against negative `int64_t bytes` in `GenericRateLimiter::Request(int64_t bytes, const Env::IOPriority pri, Statistics* stats)`. A negative `bytes` can be passed in and incorrectly increases `available_bytes_` by subtracting the negative `bytes` from `available_bytes_`, such as [here](https://github.com/facebook/rocksdb/blob/main/util/rate_limiter.cc#L138) and [here](https://github.com/facebook/rocksdb/blob/main/util/rate_limiter.cc#L283), which are incorrect behaviors. - Sanitized negative request bytes by rounding it up to 0 - Added notes to public and internal API Pull Request resolved: https://github.com/facebook/rocksdb/pull/9112 Test Plan: - Rely on existing tests Reviewed By: ajkr Differential Revision: D32085364 Pulled By: hx235 fbshipit-source-id: b1b6066b2dd5ffc7bcbfb07069ca65a33578251b |

4 years ago |

|

|

29102641dd |

Skip directory fsync for filesystem btrfs (#8903)

Summary: Directory fsync might be expensive on btrfs and it may not be needed. Here are 4 directory fsync cases: 1. creating a new file: dir-fsync is not needed on btrfs, as long as the new file itself is synced. 2. renaming a file: dir-fsync is not needed if the renamed file is synced. So an API `FsyncAfterFileRename(filename, ...)` is provided to sync the file on btrfs. By default, it just calls dir-fsync. 3. deleting files: dir-fsync is forced by set `IOOptions.force_dir_fsync = true` 4. renaming multiple files (like backup and checkpoint): dir-fsync is forced, the same as above. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8903 Test Plan: run tests on btrfs and non btrfs Reviewed By: ajkr Differential Revision: D30885059 Pulled By: jay-zhuang fbshipit-source-id: dd2730b31580b0bcaedffc318a762d7dbf25de4a |

4 years ago |

|

|

e970248602 |

Add support for building on s390x platform (#8962)

Summary: This PR adds support for building on s390x including updating travis CI. It uses the previous work in https://github.com/facebook/rocksdb/pull/6168 and adds some more changes to get all current tests (make check and jni tests) to pass. The tests were run with snappy, lz4, bzip2 and zstd all compiled in. There are a few pieces still needed to get the travis build working that I don't think I can do. adamretter is this something you could help with? 1. A prebuilt https://rocksdb-deps.s3-us-west-2.amazonaws.com/cmake/cmake-3.14.5-Linux-s390x.deb package 2. A https://hub.docker.com/r/evolvedbinary/rocksjava s390x image Not sure if there is more required for travis. Happy to help in any way I can. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8962 Reviewed By: mrambacher Differential Revision: D31802198 Pulled By: pdillinger fbshipit-source-id: 683511466fa6b505f85ba5a9964a268c6151f0c2 |

4 years ago |

|

|

8fb3fe8d39 |

Allow unregistered options to be ignored in DBOptions from files (#9045)

Summary: Adds changes to DBOptions (comparable to ColumnFamilyOptions) to allow some option values to be ignored on rehydration from the Options file. This is necessary for some customizable classes that were not registered with the ObjectRegistry but are saved/restored from the Options file. All tests pass. Will run check_format_compatible.sh shortly. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9045 Reviewed By: zhichao-cao Differential Revision: D31761664 Pulled By: mrambacher fbshipit-source-id: 300c2251639cce2b223481c3bb2a63877b1f3766 |

4 years ago |

|

|

ad5325a736 |

Experimental support for SST unique IDs (#8990)

Summary: * New public header unique_id.h and function GetUniqueIdFromTableProperties which computes a universally unique identifier based on table properties of table files from recent RocksDB versions. * Generation of DB session IDs is refactored so that they are guaranteed unique in the lifetime of a process running RocksDB. (SemiStructuredUniqueIdGen, new test included.) Along with file numbers, this enables SST unique IDs to be guaranteed unique among SSTs generated in a single process, and "better than random" between processes. See https://github.com/pdillinger/unique_id * In addition to public API producing 'external' unique IDs, there is a function for producing 'internal' unique IDs, with functions for converting between the two. In short, the external ID is "safe" for things people might do with it, and the internal ID enables more "power user" features for the future. Specifically, the external ID goes through a hashing layer so that any subset of bits in the external ID can be used as a hash of the full ID, while also preserving uniqueness guarantees in the first 128 bits (bijective both on first 128 bits and on full 192 bits). Intended follow-up: * Use the internal unique IDs in cache keys. (Avoid conflicts with https://github.com/facebook/rocksdb/issues/8912) (The file offset can be XORed into the third 64-bit value of the unique ID.) * Publish the external unique IDs in FileStorageInfo (https://github.com/facebook/rocksdb/issues/8968) Pull Request resolved: https://github.com/facebook/rocksdb/pull/8990 Test Plan: Unit tests added, and checking of unique ids in stress test. NOTE in stress test we do not generate nearly enough files to thoroughly stress uniqueness, but the test trims off pieces of the ID to check for uniqueness so that we can infer (with some assumptions) stronger properties in the aggregate. Reviewed By: zhichao-cao, mrambacher Differential Revision: D31582865 Pulled By: pdillinger fbshipit-source-id: 1f620c4c86af9abe2a8d177b9ccf2ad2b9f48243 |

4 years ago |

|

|

53e595d1f3 |

Cleanup multiple implementations of VectorIterator (#8901)

Summary: There were three implementations of VectorIterator (util/vector_iterator, test_util/testutil.h and LoggingForwardVectorIterator). Merged them into one class to increase code coverage/testing and reduce duplication. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8901 Reviewed By: pdillinger Differential Revision: D31022673 Pulled By: mrambacher fbshipit-source-id: 8e3acbd2dfd60b4df609d02cc72846de2389d531 |

4 years ago |

|

|

13ae16c315 |

Cleanup includes in dbformat.h (#8930)

Summary: This header file was including everything and the kitchen sink when it did not need to. This resulted in many places including this header when they needed other pieces instead. Cleaned up this header to only include what was needed and fixed up the remaining code to include what was now missing. Hopefully, this sort of code hygiene cleanup will speed up the builds... Pull Request resolved: https://github.com/facebook/rocksdb/pull/8930 Reviewed By: pdillinger Differential Revision: D31142788 Pulled By: mrambacher fbshipit-source-id: 6b45de3f300750c79f751f6227dece9cfd44085d |

4 years ago |

|

|

7fd68b7c39 |

Make WalFilter, SstPartitionerFactory, FileChecksumGenFactory, and TableProperties Customizable (#8638)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/8638 Reviewed By: zhichao-cao Differential Revision: D31024729 Pulled By: mrambacher fbshipit-source-id: 954c04ccab0b8dee64050a27aadf78ed119106c0 |

4 years ago |

|

|

e0f697d2bd |

Make SliceTransform into a Customizable class (#8641)

Summary: Made SliceTransform into a Customizable class. Would be nice to write a test that stored and used a custom transform in an SST table. There are a set of tests (DBBlockFliterTest.PrefixExtractor*, SamePrefixTest.InDomainTest, PrefixTest.PrefixAndWholeKeyTest that run the same with or without a SliceTransform/PrefixFilter. Is this expected? Pull Request resolved: https://github.com/facebook/rocksdb/pull/8641 Reviewed By: zhichao-cao Differential Revision: D31142793 Pulled By: mrambacher fbshipit-source-id: bb08672fccbfdc263dcae21f25a62307e1facda1 |

4 years ago |

|

|

b25f2afeff |

Return Status::NotSupported() in RateLimiter::GetTotalPendingRequests default impl (#8950)

Summary: Context: After more discussion, a fix in https://github.com/facebook/rocksdb/issues/8938 might turn out to be too restrictive for the case where `GetTotalPendingRequests` might be invoked on RateLimiter classes that does not support the recently added API `RateLimiter::GetTotalPendingRequests` (https://github.com/facebook/rocksdb/issues/8890) due to the `assert(false)` in https://github.com/facebook/rocksdb/issues/8938. Furthermore, sentinel value like `-1` proposed in https://github.com/facebook/rocksdb/issues/8938 is easy to be ignored and unchecked. Therefore we decided to adopt `Status::NotSupported()`, which is also a convention of adding new API to public header in RocksDB. - Changed return value type of `RateLimiter::GetTotalPendingRequests` in related declaration/definition - Passed in pointer argument to hold the output instead of returning it as before - Adapted to the changes above in calling `RateLimiter::GetTotalPendingRequests` in test - Minor improvement to `TEST_F(RateLimiterTest, GetTotalPendingRequests)`: added failure message for assertion and replaced repetitive statements with a loop Pull Request resolved: https://github.com/facebook/rocksdb/pull/8950 Reviewed By: ajkr, pdillinger Differential Revision: D31128450 Pulled By: hx235 fbshipit-source-id: 282ac9c4f3dacaa0aec6d0a993161f77ad47a040 |

4 years ago |

|

|

6924869867 |

Make SystemClock into a Customizable Class (#8636)

Summary: Made SystemClock into a Customizable class, complete with CreateFromString. Cleaned up some of the existing SystemClock implementations that were redundant (NoSleep was the same as the internal one for MockEnv). Changed MockEnv construction to allow Clock to be passed to the Memory/MockFileSystem. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8636 Reviewed By: zhichao-cao Differential Revision: D30483360 Pulled By: mrambacher fbshipit-source-id: cd0e3a876c39f8c98fe13374c06e8edbd5b9f2a1 |

4 years ago |

|

|

65411b8d4e |

Improve rate_limiter_test.cc (#8904)

Summary: - Fixed a bug in `RateLimiterTest.GeneratePriorityIterationOrder` that the callbacks in this test were not called starting from `i = 1`. Fix by increasing `rate_bytes_per_sec` and requested bytes. - The bug is due to the previous `rate_bytes_per_sec` was set too small, resulting in `refill_bytes_per_period` less than `kMinRefillBytesPerPeriod`. Hence the actual `refill_bytes_per_period` was equal to `kMinRefillBytesPerPeriod` due to the logic [here](https://github.com/facebook/rocksdb/blob/main/util/rate_limiter.cc#L302-L303) and it ended up being greater than the previously set requested bytes. Therefore starting from `i = 1`, `RefillBytesAndGrantRequests()` and `GeneratePriorityIterationOrder` won't be called and the test callbacks was not triggered to execute the assertion. - Added internal flag to assert callbacks are called in `RateLimiterTest.GeneratePriorityIterationOrder` to prevent any future changes defeat the purpose of the test [as suggested](https://github.com/facebook/rocksdb/pull/8890#discussion_r704915134) - Increased `rate_bytes_per_sec` and bytes of each request in `RateLimiterTest.GetTotalBytesThrough`, `RateLimiterTest.GetTotalRequests`, `RateLimiterTest.GetTotalPendingRequests` to trigger the "long path" of execution (i.e, the one trigger RefillBytesAndGrantRequests()) to increase test coverage - This increased the running time of the three tests, see test plan for time difference running locally - Cleared up sync point effects after each test by calling `SyncPoint::GetInstance()->DisableProcessing();` and `SyncPoint::GetInstance()->ClearAllCallBacks();` in `~RateLimiterTest()` [as suggested](https://github.com/facebook/rocksdb/pull/8595/files#r697534279) - It's fine to call these two methods even when `EnableProcessing()` or `SetCallBack()` is not called in the test or is already cleaned up. In those cases, calling these two functions in destructor is effectively no-op. - This will allow cleaning up sync point effects of previous test even when the previous test failed in assertion. - Added missing `SyncPoint::GetInstance()->DisableProcessing();` and `SyncPoint::GetInstance()->ClearCallBacks(..);` in existing tests for completeness - Called `SyncPoint::GetInstance()->DisableProcessing();` and `SyncPoint::GetInstance()->ClearCallBacks(..);` in loop in `RateLimiterTest.GeneratePriorityIterationOrder` for completeness Pull Request resolved: https://github.com/facebook/rocksdb/pull/8904 Test Plan: - Passing existing tests - To verify the 1st change, run `RateLimiterTest.GeneratePriorityIterationOrder` with assertions of callbacks are indeed called under original `rate_bytes_per_sec` and request byte and under updated `rate_bytes_per_sec` and request byte. The former will fail the assertion while the latter succeeds. - Here is the increased test time due to the 3rd change mentioned above in the summary. The relevant 3 tests mentioned in total increase the test time by 6s (~6000/33848 = 17.7% of the original total test time), which IMO is acceptable for better test coverage through running the "long path". - current (run on branch rate_limiter_ut_improve locally) [ RUN ] RateLimiterTest.GetTotalBytesThrough [ OK ] RateLimiterTest.GetTotalBytesThrough (3000 ms) [ RUN ] RateLimiterTest.GetTotalRequests [ OK ] RateLimiterTest.GetTotalRequests (3001 ms) [ RUN ] RateLimiterTest.GetTotalPendingRequests [ OK ] RateLimiterTest.GetTotalPendingRequests (0 ms) ... [----------] 10 tests from RateLimiterTest (43349 ms total) [----------] Global test environment tear-down [==========] 10 tests from 1 test case ran. (43349 ms total) [ PASSED ] 10 tests. - previous (run on branch main locally) [ RUN ] RateLimiterTest.GetTotalBytesThrough [ OK ] RateLimiterTest.GetTotalBytesThrough (0 ms) [ RUN ] RateLimiterTest.GetTotalRequests [ OK ] RateLimiterTest.GetTotalRequests (0 ms) [ RUN ] RateLimiterTest.GetTotalPendingRequests [ OK ] RateLimiterTest.GetTotalPendingRequests (0 ms) ... [----------] 10 tests from RateLimiterTest (33848 ms total) [----------] Global test environment tear-down [==========] 10 tests from 1 test case ran. (33848 ms total) [ PASSED ] 10 tests. Reviewed By: ajkr Differential Revision: D30872544 Pulled By: hx235 fbshipit-source-id: ff894f5c1a4bef70e8e407d53b00be45f776b3e4 |

4 years ago |

|

|

bda8d93ba9 |

Fix and detect headers with missing dependencies (#8893)

Summary: It's always annoying to find a header does not include its own dependencies and only works when included after other includes. This change adds `make check-headers` which validates that each header can be included at the top of a file. Some headers are excluded e.g. because of platform or external dependencies. rocksdb_namespace.h had to be re-worked slightly to enable checking for failure to include it. (ROCKSDB_NAMESPACE is a valid namespace name.) Fixes mostly involve adding and cleaning up #includes, but for FileTraceWriter, a constructor was out-of-lined to make a forward declaration sufficient. This check is not currently run with `make check` but is added to CircleCI build-linux-unity since that one is already relatively fast. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8893 Test Plan: existing tests and resolving issues detected by new check Reviewed By: mrambacher Differential Revision: D30823300 Pulled By: pdillinger fbshipit-source-id: 9fff223944994c83c105e2e6496d24845dc8e572 |

4 years ago |

|

|

12542488ef |

Add public API RateLimiter::GetTotalPendingRequests() (#8890)

Summary: Context/Summary: As users requested, a public API RateLimiter::GetTotalPendingRequests() is added to expose the total number of pending requests for bytes in the rate limiter, which is the size of the request queue of that priority (or of all priorities, if IO_TOTAL is interested) at the time when this API is called. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8890 Test Plan: - Passing added new unit tests - Passing existing unit tests Reviewed By: ajkr Differential Revision: D30815500 Pulled By: hx235 fbshipit-source-id: 2dfa990f651c1c47378b6215c751ad76a5824300 |

4 years ago |