Tag:

Branch:

Tree:

f28d0c2020

main

oxigraph-8.1.1

oxigraph-8.3.2

oxigraph-main

${ noResults }

281 Commits (f28d0c2020d0314e66aed240f379f2a795b6c940)

| Author | SHA1 | Message | Date |

|---|---|---|---|

|

|

27f3af5966 |

Fix serious FSDirectory use-after-Close bug (missing fsync) (#10460)

Summary: TL;DR: due to a recent change, if you drop a column family, often that DB will no longer fsync after writing new SST files to remaining or new column families, which could lead to data loss on power loss. More bug detail: The intent of https://github.com/facebook/rocksdb/issues/10049 was to Close FSDirectory objects at DB::Close time rather than waiting for DB object destruction. Unfortunately, it also closes shared FSDirectory objects on DropColumnFamily (& destroy remaining handles), which can lead to use-after-Close on FSDirectory shared with remaining column families. Those "uses" are only Fsyncs (or redundant Closes). In the default Posix filesystem, an Fsync on a closed FSDirectory is a quiet no-op. Consequently (under most configurations), if you drop a column family, that DB will no longer fsync after writing new SST files to column families sharing the same directory (true under most configurations). More fix detail: Basically, this removes unnecessary Close ops on destroying ColumnFamilyData. We let `shared_ptr` take care of calling the destructor at the right time. If the intent was to require Close be called before destroying FSDirectory, that was not made clear by the author of FileSystem and was not at all enforced by https://github.com/facebook/rocksdb/issues/10049, which could have added `assert(fd_ == -1)` to `~PosixDirectory()` but did not. To keep this fix simple, we relax the unit test for https://github.com/facebook/rocksdb/issues/10049 to allow timely destruction of FSDirectory to suffice as Close (in CountedFileSystem). Added a TODO to revisit that. Also in this PR: * Added a TODO to share FSDirectory instances between DB and its column families. (Already shared among column families.) * Made DB::Close attempt to close all its open FSDirectory objects even if there is a failure in closing one. Also code clean-up around this logic. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10460 Test Plan: add an assert to check for use-after-Close. With that existing tests can detect the misuse. With fix, tests pass (except noted relaxing of unit test for https://github.com/facebook/rocksdb/issues/10049) Reviewed By: ajkr Differential Revision: D38357922 Pulled By: pdillinger fbshipit-source-id: d42079cadbedf0a969f03389bf586b3b4e1f9137 |

2 years ago |

|

|

bfc737da21 |

fix typos in some code and comment (#10139)

Summary: Minor issue, I just found a few typos on db_test and column_family while reading the code. And I have this PR opened to contribute. :) Pull Request resolved: https://github.com/facebook/rocksdb/pull/10139 Reviewed By: ajkr Differential Revision: D38007098 Pulled By: jay-zhuang fbshipit-source-id: 511947b32424c34348184691216640f32c410fb1 |

2 years ago |

|

|

6115254416 |

Fix A Bug Where Concurrent Compactions Cause Further Slowing Down (#10270)

Summary: Currently, when installing a new super version, when stalling condition triggers, we compare estimated compaction bytes to previously, and if the new value is larger or equal to the previous one, we reduce the slowdown write rate. However, if concurrent compactions happen, the same value might be used. The result is that, although some compactions reduce estimated compaction bytes, we treat them as a signal for further slowing down. In some cases, it causes slowdown rate drops all the way to the minimum, far lower than needed. Fix the bug by not triggering a re-calculation if a new super version doesn't have Version or a memtable change. With this fix, number of compaction finishes are still undercounted in this algorithm, but it is still better than the current bug where they are negatively counted. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10270 Test Plan: Run a benchmark where the slowdown rate is dropped to minimal unnessarily and see it is back to a normal value. Reviewed By: ajkr Differential Revision: D37497327 fbshipit-source-id: 9bca961cc38fed965c3af0fa6c9ca0efaa7637c4 |

2 years ago |

|

|

5879053fd0 |

Dynamically changeable `MemPurge` option (#10011)

Summary: **Summary** Make the mempurge option flag a Mutable Column Family option flag. Therefore, the mempurge feature can be dynamically toggled. **Motivation** RocksDB users prefer having the ability to switch features on and off without having to close and reopen the DB. This is particularly important if the feature causes issues and needs to be turned off. Dynamically changing a DB option flag does not seem currently possible. Moreover, with this new change, the MemPurge feature can be toggled on or off independently between column families, which we see as a major improvement. **Content of this PR** This PR includes removal of the `experimental_mempurge_threshold` flag as a DB option flag, and its re-introduction as a `MutableCFOption` flag. I updated the code to handle dynamic changes of the flag (in particular inside the `FlushJob` file). Additionally, this PR includes a new test to demonstrate the capacity of the code to toggle the MemPurge feature on and off, as well as the addition in the `db_stress` module of 2 different mempurge threshold values (0.0 and 1.0) that can be randomly changed with the `set_option_one_in` flag. This is useful to stress test the dynamic changes. **Benchmarking** I will add numbers to prove that there is no performance impact within the next 12 hours. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10011 Reviewed By: pdillinger Differential Revision: D36462357 Pulled By: bjlemaire fbshipit-source-id: 5e3d63bdadf085c0572ecc2349e7dd9729ce1802 |

2 years ago |

|

|

deff48bcef |

Add blob source to retrieve blobs in RocksDB (#10198)

Summary: There is currently no caching mechanism for blobs, which is not ideal especially when the database resides on remote storage (where we cannot rely on the OS page cache). As part of this task, we would like to make it possible for the application to configure a blob cache. In this task, we formally introduced the blob source to RocksDB. BlobSource is a new abstraction layer that provides universal access to blobs, regardless of whether they are in the blob cache, secondary cache, or (remote) storage. Depending on user settings, it always fetch blobs from multi-tier cache and storage with minimal cost. Note: The new `MultiGetBlob()` implementation is not included in the current PR. To go faster, we aim to create a separate PR for it in parallel! This PR is a part of https://github.com/facebook/rocksdb/issues/10156 Pull Request resolved: https://github.com/facebook/rocksdb/pull/10198 Reviewed By: ltamasi Differential Revision: D37294735 Pulled By: gangliao fbshipit-source-id: 9cb50422d9dd1bc03798501c2778b6c7520c7a1e |

2 years ago |

|

|

d665afdbf3 |

Account memory of FileMetaData in global memory limit (#9924)

Summary: **Context/Summary:** As revealed by heap profiling, allocation of `FileMetaData` for [newly created file added to a Version](https://github.com/facebook/rocksdb/pull/9924/files#diff-a6aa385940793f95a2c5b39cc670bd440c4547fa54fd44622f756382d5e47e43R774) can consume significant heap memory. This PR is to account that toward our global memory limit based on block cache capacity. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9924 Test Plan: - Previous `make check` verified there are only 2 places where the memory of the allocated `FileMetaData` can be released - New unit test `TEST_P(ChargeFileMetadataTestWithParam, Basic)` - db bench (CPU cost of `charge_file_metadata` in write and compact) - **write micros/op: -0.24%** : `TEST_TMPDIR=/dev/shm/testdb ./db_bench -benchmarks=fillseq -db=$TEST_TMPDIR -charge_file_metadata=1 (remove this option for pre-PR) -disable_auto_compactions=1 -write_buffer_size=100000 -num=4000000 | egrep 'fillseq'` - **compact micros/op -0.87%** : `TEST_TMPDIR=/dev/shm/testdb ./db_bench -benchmarks=fillseq -db=$TEST_TMPDIR -charge_file_metadata=1 -disable_auto_compactions=1 -write_buffer_size=100000 -num=4000000 -numdistinct=1000 && ./db_bench -benchmarks=compact -db=$TEST_TMPDIR -use_existing_db=1 -charge_file_metadata=1 -disable_auto_compactions=1 | egrep 'compact'` table 1 - write #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 3.9711 | 0.264408 | 3.9914 | 0.254563 | 0.5111933721 20 | 3.83905 | 0.0664488 | 3.8251 | 0.0695456 | -0.3633711465 40 | 3.86625 | 0.136669 | 3.8867 | 0.143765 | 0.5289363078 80 | 3.87828 | 0.119007 | 3.86791 | 0.115674 | **-0.2673865734** 160 | 3.87677 | 0.162231 | 3.86739 | 0.16663 | **-0.2419539978** table 2 - compact #-run | (pre-PR) avg micros/op | std micros/op | (post-PR) micros/op | std micros/op | change (%) -- | -- | -- | -- | -- | -- 10 | 2,399,650.00 | 96,375.80 | 2,359,537.00 | 53,243.60 | -1.67 20 | 2,410,480.00 | 89,988.00 | 2,433,580.00 | 91,121.20 | 0.96 40 | 2.41E+06 | 121811 | 2.39E+06 | 131525 | **-0.96** 80 | 2.40E+06 | 134503 | 2.39E+06 | 108799 | **-0.78** - stress test: `python3 tools/db_crashtest.py blackbox --charge_file_metadata=1 --cache_size=1` killed as normal Reviewed By: ajkr Differential Revision: D36055583 Pulled By: hx235 fbshipit-source-id: b60eab94707103cb1322cf815f05810ef0232625 |

2 years ago |

|

|

b6de139df5 |

Handle "NotSupported" status by default implementation of Close() in … (#10127)

Summary: The default implementation of Close() function in Directory/FSDirectory classes returns `NotSupported` status. However, we don't want operations that worked in older versions to begin failing after upgrading when run on FileSystems that have not implemented Directory::Close() yet. So we require the upper level that calls Close() function should properly handle "NotSupported" status instead of treating it as an error status. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10127 Reviewed By: ajkr Differential Revision: D36971112 Pulled By: littlepig2013 fbshipit-source-id: 100f0e6ad1191e1acc1ba6458c566a11724cf466 |

2 years ago |

|

|

e88d8935ae |

Add comments/permit unchecked error to close_db_dir pull requests (#10093)

Summary: In [close_db_dir](https://github.com/facebook/rocksdb/pull/10049) pull request, some merging conflicts occurred (some comments and one line `s.PermitUncheckedError()` are missing). This pull request aims to put them back. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10093 Reviewed By: ajkr Differential Revision: D36884117 Pulled By: littlepig2013 fbshipit-source-id: 8c0e2a8793fc52804067c511843bd1ff4912c1c3 |

2 years ago |

|

|

65893ad959 |

Explicitly closing all directory file descriptors (#10049)

Summary: Currently, the DB directory file descriptor is left open until the deconstruction process (`DB::Close()` does not close the file descriptor). To verify this, comment out the lines between `db_ = nullptr` and `db_->Close()` (line 512, 513, 514, 515 in ldb_cmd.cc) to leak the ``db_'' object, build `ldb` tool and run ``` strace --trace=open,openat,close ./ldb --db=$TEST_TMPDIR --ignore_unknown_options put K1 V1 --create_if_missing ``` There is one directory file descriptor that is not closed in the strace log. Pull Request resolved: https://github.com/facebook/rocksdb/pull/10049 Test Plan: Add a new unit test DBBasicTest.DBCloseAllDirectoryFDs: Open a database with different WAL directory and three different data directories, and all directory file descriptors should be closed after calling Close(). Explicitly call Close() after a directory file descriptor is not used so that the counter of directory open and close should be equivalent. Reviewed By: ajkr, hx235 Differential Revision: D36722135 Pulled By: littlepig2013 fbshipit-source-id: 07bdc2abc417c6b30997b9bbef1f79aa757b21ff |

2 years ago |

|

|

cc23b46da1 |

Support using ZDICT_finalizeDictionary to generate zstd dictionary (#9857)

Summary:

An untrained dictionary is currently simply the concatenation of several samples. The ZSTD API, ZDICT_finalizeDictionary(), can improve such a dictionary's effectiveness at low cost. This PR changes how dictionary is created by calling the ZSTD ZDICT_finalizeDictionary() API instead of creating raw content dictionary (when max_dict_buffer_bytes > 0), and pass in all buffered uncompressed data blocks as samples.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9857

Test Plan:

#### db_bench test for cpu/memory of compression+decompression and space saving on synthetic data:

Set up: change the parameter [here](

|

2 years ago |

|

|

49628c9a83 |

Use std::numeric_limits<> (#9954)

Summary: Right now we still don't fully use std::numeric_limits but use a macro, mainly for supporting VS 2013. Right now we only support VS 2017 and up so it is not a problem. The code comment claims that MinGW still needs it. We don't have a CI running MinGW so it's hard to validate. since we now require C++17, it's hard to imagine MinGW would still build RocksDB but doesn't support std::numeric_limits<>. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9954 Test Plan: See CI Runs. Reviewed By: riversand963 Differential Revision: D36173954 fbshipit-source-id: a35a73af17cdcae20e258cdef57fcf29a50b49e0 |

3 years ago |

|

|

cad809978a |

Fix heap use-after-free race with DropColumnFamily (#9730)

Summary: Although ColumnFamilySet comments say that DB mutex can be freed during iteration, as long as you hold a ref while releasing DB mutex, this is not quite true because UnrefAndTryDelete might delete cfd right before it is needed to get ->next_ for the next iteration of the loop. This change solves the problem by making a wrapper class that makes such iteration easier while handling the tricky details of UnrefAndTryDelete on the previous cfd only after getting next_ in operator++. FreeDeadColumnFamilies should already have been obsolete; this removes it for good. Similarly, ColumnFamilySet::iterator doesn't need to check for cfd with 0 refs, because those are immediately deleted. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9730 Test Plan: was reported with ASAN on unit tests like DBLogicalBlockSizeCacheTest.CreateColumnFamily (very rare); keep watching Reviewed By: ltamasi Differential Revision: D35038143 Pulled By: pdillinger fbshipit-source-id: 0a5478d5be96c135343a00603711b7df43ae19c9 |

3 years ago |

|

|

4dff279b19 |

DisableManualCompaction may fail to cancel an unscheduled task (#9659)

Summary: https://github.com/facebook/rocksdb/issues/9625 didn't change the unschedule condition which was waiting for the background thread to clean-up the compaction. make sure we only unschedule the task when it's scheduled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9659 Reviewed By: ajkr Differential Revision: D34651820 Pulled By: jay-zhuang fbshipit-source-id: 23f42081b15ec8886cd81cbf131b116e0c74dc2f |

3 years ago |

|

|

09b0e8f2c7 |

Fix a timer crash caused by invalid memory management (#9656)

Summary: Timer crash when multiple DB instances doing heavy DB open and close operations concurrently. Which is caused by adding a timer task with smaller timestamp than the current running task. Fix it by moving the getting new task timestamp part within timer mutex protection. And other fixes: - Disallow adding duplicated function name to timer - Fix a minor memory leak in timer when a running task is cancelled Pull Request resolved: https://github.com/facebook/rocksdb/pull/9656 Reviewed By: ajkr Differential Revision: D34626296 Pulled By: jay-zhuang fbshipit-source-id: 6b6d96a5149746bf503546244912a9e41a0c5f6b |

3 years ago |

|

|

95305c44a1 |

Add OpenAndTrimHistory API to support trimming data with specified timestamp (#9410)

Summary: As disscussed in (https://github.com/facebook/rocksdb/issues/9223), Here added a new API named DB::OpenAndTrimHistory, this API will open DB and trim data to the timestamp specofied by **trim_ts** (The data with newer timestamp than specified trim bound will be removed). This API should only be used at a timestamp-enabled db instance recovery. And this PR implemented a new iterator named HistoryTrimmingIterator to support trimming history with a new API named DB::OpenAndTrimHistory. HistoryTrimmingIterator wrapped around the underlying InternalITerator such that keys whose timestamps newer than **trim_ts** should not be returned to the compaction iterator while **trim_ts** is not null. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9410 Reviewed By: ltamasi Differential Revision: D34410207 Pulled By: riversand963 fbshipit-source-id: e54049dc234eccd673244c566b15df58df5a6236 |

3 years ago |

|

|

2a67d475f1 |

Fix bug affecting GetSortedWalFiles, Backups, Checkpoint (#9208)

Summary: Saw error like this: `Backup failed -- IO error: No such file or directory: While opening a file for sequentially reading: /dev/shm/rocksdb/rocksdb_crashtest_blackbox/004426.log: No such file or directory` Unfortunately, GetSortedWalFiles (used by Backups, Checkpoint, etc.) relies on no file deletions happening while its operating, which means not only disabling (more) deletions, but ensuring any pending deletions are completed. Two fixes related to this: * There was a gap in several places between decrementing pending_purge_obsolete_files_ and incrementing bg_purge_scheduled_ where the db mutex would be released and GetSortedWalFiles (and others) could get false information that no deletions are pending. * The fix to https://github.com/facebook/rocksdb/issues/8591 (disabling deletions in GetSortedWalFiles) seems incomplete because it doesn't prevent pending deletions from occuring during the operation (if deletions not already disabled, the case that was to be fixed by the change). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9208 Test Plan: existing tests (it's hard to write a test for interleavings that are now excluded - this is what stress test is for) Reviewed By: ajkr Differential Revision: D32630675 Pulled By: pdillinger fbshipit-source-id: a121e3da648de130cd24d44c524232f4eb22f178 |

3 years ago |

|

|

2035798834 |

Update TransactionUtil::CheckKeyForConflict to also use timestamps (#9162)

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9162 Existing TransactionUtil::CheckKeyForConflict() performs only seq-based conflict checking. If user-defined timestamp is enabled, it should perform conflict checking based on timestamps too. Update TransactionUtil::CheckKey-related methods to verify the timestamp of the latest version of a key is smaller than the read timestamp. Note that CheckKeysForConflict() is not updated since it's used only by optimistic transaction, and we do not plan to update it in this upcoming batch of diffs. Existing GetLatestSequenceForKey() returns the sequence of the latest version of a specific user key. Since we support user-defined timestamp, we need to update this method to also return the timestamp (if enabled) of the latest version of the key. This will be needed for snapshot validation. Reviewed By: ltamasi Differential Revision: D31567960 fbshipit-source-id: 2e4a14aed267435a9aa91bc632d2411c01946d44 |

3 years ago |

|

|

3e1bf771a3 |

Make it possible to force the garbage collection of the oldest blob files (#8994)

Summary: The current BlobDB garbage collection logic works by relocating the valid blobs from the oldest blob files as they are encountered during compaction, and cleaning up blob files once they contain nothing but garbage. However, with sufficiently skewed workloads, it is theoretically possible to end up in a situation when few or no compactions get scheduled for the SST files that contain references to the oldest blob files, which can lead to increased space amp due to the lack of GC. In order to efficiently handle such workloads, the patch adds a new BlobDB configuration option called `blob_garbage_collection_force_threshold`, which signals to BlobDB to schedule targeted compactions for the SST files that keep alive the oldest batch of blob files if the overall ratio of garbage in the given blob files meets the threshold *and* all the given blob files are eligible for GC based on `blob_garbage_collection_age_cutoff`. (For example, if the new option is set to 0.9, targeted compactions will get scheduled if the sum of garbage bytes meets or exceeds 90% of the sum of total bytes in the oldest blob files, assuming all affected blob files are below the age-based cutoff.) The net result of these targeted compactions is that the valid blobs in the oldest blob files are relocated and the oldest blob files themselves cleaned up (since *all* SST files that rely on them get compacted away). These targeted compactions are similar to periodic compactions in the sense that they force certain SST files that otherwise would not get picked up to undergo compaction and also in the sense that instead of merging files from multiple levels, they target a single file. (Note: such compactions might still include neighboring files from the same level due to the need of having a "clean cut" boundary but they never include any files from any other level.) This functionality is currently only supported with the leveled compaction style and is inactive by default (since the default value is set to 1.0, i.e. 100%). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8994 Test Plan: Ran `make check` and tested using `db_bench` and the stress/crash tests. Reviewed By: riversand963 Differential Revision: D31489850 Pulled By: ltamasi fbshipit-source-id: 44057d511726a0e2a03c5d9313d7511b3f0c4eab |

3 years ago |

|

|

8717c26823 |

Warning about incompatible options with level_compaction_dynamic_level_bytes (#8329)

Summary: This change introduces warnings instead of a silent override when trying to use level_compaction_dynamic_level_bytes with multiple cf_paths/db_paths. I have completed the CLA. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8329 Reviewed By: hx235 Differential Revision: D31399713 Pulled By: ajkr fbshipit-source-id: 29c6fe5258d1f739b4590ecd44aee44f55415595 |

3 years ago |

|

|

13ae16c315 |

Cleanup includes in dbformat.h (#8930)

Summary: This header file was including everything and the kitchen sink when it did not need to. This resulted in many places including this header when they needed other pieces instead. Cleaned up this header to only include what was needed and fixed up the remaining code to include what was now missing. Hopefully, this sort of code hygiene cleanup will speed up the builds... Pull Request resolved: https://github.com/facebook/rocksdb/pull/8930 Reviewed By: pdillinger Differential Revision: D31142788 Pulled By: mrambacher fbshipit-source-id: 6b45de3f300750c79f751f6227dece9cfd44085d |

3 years ago |

|

|

0cb0fc6fd3 |

Add DB properties for BlobDB (#8734)

Summary: RocksDB exposes certain internal statistics via the DB property interface. However, there are currently no properties related to BlobDB. For starters, we would like to add the following BlobDB properties: `rocksdb.num-blob-files`: number of blob files in the current Version (kind of like `num-files-at-level` but note this is not per level, since blob files are not part of the LSM tree). `rocksdb.blob-stats`: this could return the total number and size of all blob files, and potentially also the total amount of garbage (in bytes) in the blob files in the current Version. `rocksdb.total-blob-file-size`: the total size of all blob files (as a blob counterpart for `total-sst-file-size`) of all Versions. `rocksdb.live-blob-file-size`: the total size of all blob files in the current Version. `rocksdb.estimate-live-data-size`: this is actually an existing property that we can extend so it considers blob files as well. When it comes to blobs, we actually have an exact value for live bytes. Namely, live bytes can be computed simply as total bytes minus garbage bytes, summed over the entire set of blob files in the Version. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8734 Test Plan: ``` ➜ rocksdb git:(new_feature_blobDB_properties) ./db_blob_basic_test [==========] Running 16 tests from 2 test cases. [----------] Global test environment set-up. [----------] 10 tests from DBBlobBasicTest [ RUN ] DBBlobBasicTest.GetBlob [ OK ] DBBlobBasicTest.GetBlob (12 ms) [ RUN ] DBBlobBasicTest.MultiGetBlobs [ OK ] DBBlobBasicTest.MultiGetBlobs (11 ms) [ RUN ] DBBlobBasicTest.GetBlob_CorruptIndex [ OK ] DBBlobBasicTest.GetBlob_CorruptIndex (10 ms) [ RUN ] DBBlobBasicTest.GetBlob_InlinedTTLIndex [ OK ] DBBlobBasicTest.GetBlob_InlinedTTLIndex (12 ms) [ RUN ] DBBlobBasicTest.GetBlob_IndexWithInvalidFileNumber [ OK ] DBBlobBasicTest.GetBlob_IndexWithInvalidFileNumber (9 ms) [ RUN ] DBBlobBasicTest.GenerateIOTracing [ OK ] DBBlobBasicTest.GenerateIOTracing (11 ms) [ RUN ] DBBlobBasicTest.BestEffortsRecovery_MissingNewestBlobFile [ OK ] DBBlobBasicTest.BestEffortsRecovery_MissingNewestBlobFile (13 ms) [ RUN ] DBBlobBasicTest.GetMergeBlobWithPut [ OK ] DBBlobBasicTest.GetMergeBlobWithPut (11 ms) [ RUN ] DBBlobBasicTest.MultiGetMergeBlobWithPut [ OK ] DBBlobBasicTest.MultiGetMergeBlobWithPut (14 ms) [ RUN ] DBBlobBasicTest.BlobDBProperties [ OK ] DBBlobBasicTest.BlobDBProperties (21 ms) [----------] 10 tests from DBBlobBasicTest (124 ms total) [----------] 6 tests from DBBlobBasicTest/DBBlobBasicIOErrorTest [ RUN ] DBBlobBasicTest/DBBlobBasicIOErrorTest.GetBlob_IOError/0 [ OK ] DBBlobBasicTest/DBBlobBasicIOErrorTest.GetBlob_IOError/0 (12 ms) [ RUN ] DBBlobBasicTest/DBBlobBasicIOErrorTest.GetBlob_IOError/1 [ OK ] DBBlobBasicTest/DBBlobBasicIOErrorTest.GetBlob_IOError/1 (10 ms) [ RUN ] DBBlobBasicTest/DBBlobBasicIOErrorTest.MultiGetBlobs_IOError/0 [ OK ] DBBlobBasicTest/DBBlobBasicIOErrorTest.MultiGetBlobs_IOError/0 (10 ms) [ RUN ] DBBlobBasicTest/DBBlobBasicIOErrorTest.MultiGetBlobs_IOError/1 [ OK ] DBBlobBasicTest/DBBlobBasicIOErrorTest.MultiGetBlobs_IOError/1 (10 ms) [ RUN ] DBBlobBasicTest/DBBlobBasicIOErrorTest.CompactionFilterReadBlob_IOError/0 [ OK ] DBBlobBasicTest/DBBlobBasicIOErrorTest.CompactionFilterReadBlob_IOError/0 (1011 ms) [ RUN ] DBBlobBasicTest/DBBlobBasicIOErrorTest.CompactionFilterReadBlob_IOError/1 [ OK ] DBBlobBasicTest/DBBlobBasicIOErrorTest.CompactionFilterReadBlob_IOError/1 (1013 ms) [----------] 6 tests from DBBlobBasicTest/DBBlobBasicIOErrorTest (2066 ms total) [----------] Global test environment tear-down [==========] 16 tests from 2 test cases ran. (2190 ms total) [ PASSED ] 16 tests. ``` Reviewed By: ltamasi Differential Revision: D30690849 Pulled By: Zhiyi-Zhang fbshipit-source-id: a7567319487ad76bd1a2e24bf143afdbbd9e4346 |

3 years ago |

|

|

beed86473a |

Make MemTableRepFactory into a Customizable class (#8419)

Summary: This PR does the following: -> Makes the MemTableRepFactory into a Customizable class and creatable/configurable via CreateFromString -> Makes the existing implementations compatible with configurations -> Moves the "SpecialRepFactory" test class into testutil, accessible via the ObjectRegistry or a NewSpecial API New tests were added to validate the functionality and all existing tests pass. db_bench and memtablerep_bench were hand-tested to verify the functionality in those tools. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8419 Reviewed By: zhichao-cao Differential Revision: D29558961 Pulled By: mrambacher fbshipit-source-id: 81b7229636e4e649a0c914e73ac7b0f8454c931c |

3 years ago |

|

|

c9cd5d25a8 |

Remove some unneeded code (#8736)

Summary: * FullKey and ParseFullKey appear to serve no purpose in the public API (or anything else) so removed. Only use in one test updated. * NumberToString serves no purpose vs. ToString so removed, numerous calls updated * Remove unnecessary forward declarations in metadata.h by re-arranging class definitions. * Remove some unneeded semicolons Pull Request resolved: https://github.com/facebook/rocksdb/pull/8736 Test Plan: existing tests Reviewed By: mrambacher Differential Revision: D30700039 Pulled By: pdillinger fbshipit-source-id: 1e436a576f511a6ed8b4d97af7cc8216bc729af2 |

3 years ago |

|

|

3f7e929865 |

Fix a race in ColumnFamilyData::UnrefAndTryDelete (#8605)

Summary: The `ColumnFamilyData::UnrefAndTryDelete` code currently on the trunk unlocks the DB mutex before destroying the `ThreadLocalPtr` holding the per-thread `SuperVersion` pointers when the only remaining reference is the back reference from `super_version_`. The idea behind this was to break the circular dependency between `ColumnFamilyData` and `SuperVersion`: when the penultimate reference goes away, `ColumnFamilyData` can clean up the `SuperVersion`, which can in turn clean up `ColumnFamilyData`. (Assuming there is a `SuperVersion` and it is not referenced by anything else.) However, unlocking the mutex throws a wrench in this plan by making it possible for another thread to jump in and take another reference to the `ColumnFamilyData`, keeping the object alive in a zombie `ThreadLocalPtr`-less state. This can cause issues like https://github.com/facebook/rocksdb/issues/8440 , https://github.com/facebook/rocksdb/issues/8382 , and might also explain the `was_last_ref` assertion failures from the `ColumnFamilySet` destructor we sometimes observe during close in our stress tests. Digging through the archives, this unlocking goes way back to 2014 (or earlier). The original rationale was that `SuperVersionUnrefHandle` used to lock the mutex so it can call `SuperVersion::Cleanup`; however, this logic turned out to be deadlock-prone. https://github.com/facebook/rocksdb/pull/3510 fixed the deadlock but left the unlocking in place. https://github.com/facebook/rocksdb/pull/6147 then introduced the circular dependency and associated cleanup logic described above (in order to enable iterators to keep the `ColumnFamilyData` for dropped column families alive), and moved the unlocking-relocking snippet to its present location in `UnrefAndTryDelete`. Finally, https://github.com/facebook/rocksdb/pull/7749 fixed a memory leak but apparently exacerbated the race by (otherwise correctly) switching to `UnrefAndTryDelete` in `SuperVersion::Cleanup`. The patch simply eliminates the unlocking and relocking, which has been unnecessary ever since https://github.com/facebook/rocksdb/issues/3510 made `SuperVersionUnrefHandle` lock-free. This closes the window during which another thread could increase the reference count, and hopefully fixes the issues above. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8605 Test Plan: Ran `make check` and stress tests locally. Reviewed By: pdillinger Differential Revision: D30051035 Pulled By: ltamasi fbshipit-source-id: 8fe559e4b4ad69fc142579f8bc393ef525918528 |

3 years ago |

|

|

74b7c0d249 |

Fix use-after-free on implicit temporary FileOptions (#8571)

Summary: FileOptions has an implicit conversion from EnvOptions and some internal APIs take `const FileOptions&` and save the reference, which is counter to Google C++ guidelines, > Avoid defining functions that require a const reference parameter to outlive the call, because const reference parameters bind to temporaries. Instead, find a way to eliminate the lifetime requirement (for example, by copying the parameter), or pass it by const pointer and document the lifetime and non-null requirements. This is at least a problem for repair.cc, which passes an EnvOptions to TableCache(), which would save a reference to the temporary copy as FileOptions. This was unfortunately only caught as a side effect of changes in https://github.com/facebook/rocksdb/issues/8544. This change fixes the repair.cc case and updates the involved internal APIs that save a reference to use `const FileOptions*` instead. Unfortunately, I don't know how to get any of our sanitizers to reliably report bugs like this, so I can't rule out more existing in our codebase. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8571 Test Plan: Test that issues seen with https://github.com/facebook/rocksdb/issues/8544 are fixed (can reproduce on AWS EC2) Reviewed By: ajkr Differential Revision: D29943890 Pulled By: pdillinger fbshipit-source-id: 95f9c5251548777b4dc994c1a083dd2add5799c9 |

3 years ago |

|

|

c521a9ab2b |

Retire superfluous functions introduced in earlier mempurge PRs. (#8558)

Summary: The main challenge to make the memtable garbage collection prototype (nicknamed `mempurge`) was to not get rid of WAL files that contain unflushed (but mempurged) data. That was successfully guaranteed by not writing the VersionEdit to the MANIFEST file after a successful mempurge. By not writing VersionEdits to the `MANIFEST` file after a succesful mempurge operation, we do not change the earliest log file number that contains unflushed data: `cfd->GetLogNumber()` (`cfd->SetLogNumber()` is only called in `VersionSet::ProcessManifestWrites`). As a result, a number of functions introduced earlier just for the mempurge operation are not obscolete/redundant. (e.g.: `FlushJob::ExtractEarliestLogFileNumber`), and this PR aims at cleaning up all these now-unnecessary functions. In particular, we no longer need to store the earliest log file number in the `MemTable` struct itself. This PR therefore also reverts the `MemTable` struct to its original form. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8558 Test Plan: Already included in `db_flush_test.cc`. Reviewed By: anand1976 Differential Revision: D29764351 Pulled By: bjlemaire fbshipit-source-id: 0f43b260fa270251862512f397d3f24ee62e8437 |

3 years ago |

|

|

206845c057 |

Mempurge support for wal (#8528)

Summary: In this PR, `mempurge` is made compatible with the Write Ahead Log: in case of recovery, the DB is now capable of recovering the data that was "mempurged" and kept in the `imm()` list of immutable memtables. The twist was to add a uint64_t to the `memtable` struct to store the number of the earliest log file containing entries from the `memtable`. When a `Flush` operation is replaced with a `MemPurge`, the `VersionEdit` (which usually contains the new min log file number to pick up for recovery and the level 0 file path of the newly created SST file) is no longer appended to the manifest log, and every time the `deleteWal` method is called, a check is made on the list of immutable memtables. This PR also includes a unit test that verifies that no data is lost upon Reopening of the database when the mempurge feature is activated. This extensive unit test includes two column families, with valid data contained in the imm() at time of "crash"/reopening (recovery). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8528 Reviewed By: pdillinger Differential Revision: D29701097 Pulled By: bjlemaire fbshipit-source-id: 072a900fb6ccc1edcf5eef6caf88f3060238edf9 |

3 years ago |

|

|

837705ad80 |

Make mempurge a background process (equivalent to in-memory compaction). (#8505)

Summary: In https://github.com/facebook/rocksdb/issues/8454, I introduced a new process baptized `MemPurge` (memtable garbage collection). This new PR is built upon this past mempurge prototype. In this PR, I made the `mempurge` process a background task, which provides superior performance since the mempurge process does not cling on the db_mutex anymore, and addresses severe restrictions from the past iteration (including a scenario where the past mempurge was failling, when a memtable was mempurged but was still referred to by an iterator/snapshot/...). Now the mempurge process ressembles an in-memory compaction process: the stack of immutable memtables is filtered out, and the useful payload is used to populate an output memtable. If the output memtable is filled at more than 60% capacity (arbitrary heuristic) the mempurge process is aborted and a regular flush process takes place, else the output memtable is kept in the immutable memtable stack. Note that adding this output memtable to the `imm()` memtable stack does not trigger another flush process, so that the flush thread can go to sleep at the end of a successful mempurge. MemPurge is activated by making the `experimental_allow_mempurge` flag `true`. When activated, the `MemPurge` process will always happen when the flush reason is `kWriteBufferFull`. The 3 unit tests confirm that this process supports `Put`, `Get`, `Delete`, `DeleteRange` operators and is compatible with `Iterators` and `CompactionFilters`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8505 Reviewed By: pdillinger Differential Revision: D29619283 Pulled By: bjlemaire fbshipit-source-id: 8a99bee76b63a8211bff1a00e0ae32360aaece95 |

3 years ago |

|

|

9dc887ece0 |

Memtable "MemPurge" prototype (#8454)

Summary: Implement an experimental feature called "MemPurge", which consists in purging "garbage" bytes out of a memtable and reuse the memtable struct instead of making it immutable and eventually flushing its content to storage. The prototype is by default deactivated and is not intended for use. It is intended for correctness and validation testing. At the moment, the "MemPurge" feature can be switched on by using the `options.experimental_allow_mempurge` flag. For this early stage, when the allow_mempurge flag is set to `true`, all the flush operations will be rerouted to perform a MemPurge. This is a temporary design decision that will give us the time to explore meaningful heuristics to use MemPurge at the right time for relevant workloads . Moreover, the current MemPurge operation only supports `Puts`, `Deletes`, `DeleteRange` operations, and handles `Iterators` as well as `CompactionFilter`s that are invoked at flush time . Three unit tests are added to `db_flush_test.cc` to test if MemPurge works correctly (and checks that the previously mentioned operations are fully supported thoroughly tested). One noticeable design decision is the timing of the MemPurge operation in the memtable workflow: for this prototype, the mempurge happens when the memtable is switched (and usually made immutable). This is an inefficient process because it implies that the entirety of the MemPurge operation happens while holding the db_mutex. Future commits will make the MemPurge operation a background task (akin to the regular flush operation) and aim at drastically enhancing the performance of this operation. The MemPurge is also not fully "WAL-compatible" yet, but when the WAL is full, or when the regular MemPurge operation fails (or when the purged memtable still needs to be flushed), a regular flush operation takes place. Later commits will also correct these behaviors. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8454 Reviewed By: anand1976 Differential Revision: D29433971 Pulled By: bjlemaire fbshipit-source-id: 6af48213554e35048a7e03816955100a80a26dc5 |

3 years ago |

|

|

f44e69c64a |

Use DbSessionId as cache key prefix when secondary cache is enabled (#8360)

Summary: Currently, we either use the file system inode or a monotonically incrementing runtime ID as the block cache key prefix. However, if we use a monotonically incrementing runtime ID (in the case that the file system does not support inode id generation), in some cases, it cannot ensure uniqueness (e.g., we have secondary cache migrated from host to host). We use DbSessionID (20 bytes) + current file number (at most 10 bytes) as the new cache block key prefix when the secondary cache is enabled. So can accommodate scenarios such as transfer of cache state across hosts. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8360 Test Plan: add the test to lru_cache_test Reviewed By: pdillinger Differential Revision: D29006215 Pulled By: zhichao-cao fbshipit-source-id: 6cff686b38d83904667a2bd39923cd030df16814 |

3 years ago |

|

|

d83542ca83 |

Make it possible to apply only a subrange of table property collectors (#8298)

Summary: This patch does two things: 1) Introduces some aliases in order to eliminate/prevent long-winded type names w/r/t the internal table property collectors (see e.g. `std::vector<std::unique_ptr<IntTblPropCollectorFactory>>`). 2) Makes it possible to apply only a subrange of table property collectors during table building by turning `TableBuilderOptions::int_tbl_prop_collector_factories` from a pointer to a `vector` into a range (i.e. a pair of iterators). Rationale: I plan to introduce a BlobDB related table property collector, which should only be applied during table creation if blob storage is enabled at the moment (which can be changed dynamically). This change will make it possible to include/ exclude the BlobDB related collector as needed without having to introduce a second `vector` of collectors in `ColumnFamilyData` with pretty much the same contents. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8298 Test Plan: `make check` Reviewed By: jay-zhuang Differential Revision: D28430910 Pulled By: ltamasi fbshipit-source-id: a81d28f2c59495865300f43deb2257d2e6977c8e |

4 years ago |

|

|

a4919d6b62 |

Cap automatic arena block size to 1 MB (#7907)

Summary: Larger arena block size does provide the benefit of reducing allocation overhead, however it may cause other troubles. For example, allocator is more likely not to allocate them to physical memory and trigger page fault. Weighing the risk, we cap the arena block size to 1MB. Users can always use a larger value if they want. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7907 Test Plan: Run all existing tests Reviewed By: pdillinger Differential Revision: D26135269 fbshipit-source-id: b7f55afd03e6ee1d8715f90fa11b6c33944e9ea8 |

4 years ago |

|

|

8948dc8524 |

Make ImmutableOptions struct that inherits from ImmutableCFOptions and ImmutableDBOptions (#8262)

Summary: The ImmutableCFOptions contained a bunch of fields that belonged to the ImmutableDBOptions. This change cleans that up by introducing an ImmutableOptions struct. Following the pattern of Options struct, this class inherits from the DB and CFOption structs (of the Immutable form). Only one structural change (the ImmutableCFOptions::fs was changed to a shared_ptr from a raw one) is in this PR. All of the other changes involve moving the member variables from the ImmutableCFOptions into the ImmutableOptions and changing member variables or function parameters as required for compilation purposes. Follow-on PRs may do a further clean-up of the code, such as renaming variables (such as "ImmutableOptions cf_options") and potentially eliminating un-needed function parameters (there is no longer a need to pass both an ImmutableDBOptions and an ImmutableOptions to a function). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8262 Reviewed By: pdillinger Differential Revision: D28226540 Pulled By: mrambacher fbshipit-source-id: 18ae71eadc879dedbe38b1eb8e6f9ff5c7147dbf |

4 years ago |

|

|

0ca6d6297f |

Rename variables in ImmutableCFOptions to avoid conflicts with ImmutableDBOptions (#8227)

Summary: Renaming ImmutableCFOptions::info_log and statistics to logger and stats. This is stage 2 in creating an ImmutableOptions class. It is necessary because the names match those in ImmutableOptions and have different types. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8227 Reviewed By: jay-zhuang Differential Revision: D28000967 Pulled By: mrambacher fbshipit-source-id: 3bf2aa04e8f1e8724d825b7deacf41080c14420b |

4 years ago |

|

|

d89483098f |

Assert unlimited max_open_files for FIFO compaction. (#8172)

Summary: Resolves https://github.com/facebook/rocksdb/issues/8014 - Add an assertion on `DB::Open` to ensure `db_options.max_open_files` is unlimited if FIFO Compaction is being used. - This is to align with what the docs mention and to prevent premature data deletion. - Update tests to work with this assertion. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8172 Test Plan: ```bash $ make check -j$(nproc) Generated TARGETS Summary: - 6 libs - 0 binarys - 180 tests ``` Reviewed By: ajkr Differential Revision: D27768792 Pulled By: thejchap fbshipit-source-id: cf6350535e3a3577fec72bcba75b3c094dc7a6f3 |

4 years ago |

|

|

3dff28cf9b |

Use SystemClock* instead of std::shared_ptr<SystemClock> in lower level routines (#8033)

Summary: For performance purposes, the lower level routines were changed to use a SystemClock* instead of a std::shared_ptr<SystemClock>. The shared ptr has some performance degradation on certain hardware classes. For most of the system, there is no risk of the pointer being deleted/invalid because the shared_ptr will be stored elsewhere. For example, the ImmutableDBOptions stores the Env which has a std::shared_ptr<SystemClock> in it. The SystemClock* within the ImmutableDBOptions is essentially a "short cut" to gain access to this constant resource. There were a few classes (PeriodicWorkScheduler?) where the "short cut" property did not hold. In those cases, the shared pointer was preserved. Using db_bench readrandom perf_level=3 on my EC2 box, this change performed as well or better than 6.17: 6.17: readrandom : 28.046 micros/op 854902 ops/sec; 61.3 MB/s (355999 of 355999 found) 6.18: readrandom : 32.615 micros/op 735306 ops/sec; 52.7 MB/s (290999 of 290999 found) PR: readrandom : 27.500 micros/op 871909 ops/sec; 62.5 MB/s (367999 of 367999 found) (Note that the times for 6.18 are prior to revert of the SystemClock). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8033 Reviewed By: pdillinger Differential Revision: D27014563 Pulled By: mrambacher fbshipit-source-id: ad0459eba03182e454391b5926bf5cdd45657b67 |

4 years ago |

|

|

64517d184a |

Make secondary instance use ManifestTailer (#7998)

Summary: This PR - adds a class `ManifestTailer` that inherits from `VersionEditHandlerPointInTime`. `ManifestTailer::Iterate()` can be called multiple times to tail the primary instance's MANIFEST and apply the changes to the secondary, - updates the implementation of `ReactiveVersionSet::ReadAndApply` to use this class, - removes unused code in version_set.cc, - updates existing tests, e.g. removing deleted sync points from unit tests, - adds a new test to address the bug in https://github.com/facebook/rocksdb/issues/7815. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7998 Test Plan: make check Existing and newly-added tests in version_set_test.cc and db_secondary_test.cc Reviewed By: jay-zhuang Differential Revision: D26926641 Pulled By: riversand963 fbshipit-source-id: 8d4dd15db0ba863c213f743e33b5a207e948c980 |

4 years ago |

|

|

67d72fb5dc |

Fix checkpoint stuck (#7921)

Summary:

## 1. Bug description:

When RocksDB Checkpoint, it may be stuck in `WaitUntilFlushWouldNotStallWrites` method.

## 2. Simple analysis of the reasons:

### 2.1 Configuration parameters:

```yaml

Compaction Style : Universal

max_write_buffer_number : 4

min_write_buffer_number_to_merge : 3

```

Checkpoint is usually very fast. When the Checkpoint is executed, `WaitUntilFlushWouldNotStallWrites` is called. If there are 2 Immutable MemTables, which are less than `min_write_buffer_number_to_merge`, they will not be flushed. But will enter this code.

```c++

// method: GetWriteStallConditionAndCause

if (mutable_cf_options.max_write_buffer_number> 3 &&

num_unflushed_memtables >=

mutable_cf_options.max_write_buffer_number-1) {

return {WriteStallCondition::kDelayed, WriteStallCause::kMemtableLimit};

}

```

code link:

|

4 years ago |

|

|

4bc9df9459 |

Fix handling of Mutable options; Allow DB::SetOptions to update mutable TableFactory Options (#7936)

Summary: Added a "only_mutable_options" flag to the ConfigOptions. When set, the Configurable methods will only look at/update options that are marked as kMutable. Fixed DB::SetOptions to allow for the update of any mutable TableFactory options. Fixes https://github.com/facebook/rocksdb/issues/7385. Added tests for the new flag. Updated HISTORY.md Pull Request resolved: https://github.com/facebook/rocksdb/pull/7936 Reviewed By: akankshamahajan15 Differential Revision: D26389646 Pulled By: mrambacher fbshipit-source-id: 6dc247f6e999fa2814059ebbd0af8face109fea0 |

4 years ago |

|

|

ea8bb82fc7 |

Add support for IOTracing in blob files (#7958)

Summary: Add support for IOTracing in blob files Pull Request resolved: https://github.com/facebook/rocksdb/pull/7958 Test Plan: Add a new test and checked manually the trace_file for blob files being recorded during read and write. Reviewed By: ltamasi Differential Revision: D26415950 Pulled By: akankshamahajan15 fbshipit-source-id: 49c2859b3a4f8307e7cb69a92704403a4da46d44 |

4 years ago |

|

|

12f1137355 |

Add a SystemClock class to capture the time functions of an Env (#7858)

Summary: Introduces and uses a SystemClock class to RocksDB. This class contains the time-related functions of an Env and these functions can be redirected from the Env to the SystemClock. Many of the places that used an Env (Timer, PerfStepTimer, RepeatableThread, RateLimiter, WriteController) for time-related functions have been changed to use SystemClock instead. There are likely more places that can be changed, but this is a start to show what can/should be done. Over time it would be nice to migrate most (if not all) of the uses of the time functions from the Env to the SystemClock. There are several Env classes that implement these functions. Most of these have not been converted yet to SystemClock implementations; that will come in a subsequent PR. It would be good to unify many of the Mock Timer implementations, so that they behave similarly and be tested similarly (some override Sleep, some use a MockSleep, etc). Additionally, this change will allow new methods to be introduced to the SystemClock (like https://github.com/facebook/rocksdb/issues/7101 WaitFor) in a consistent manner across a smaller number of classes. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7858 Reviewed By: pdillinger Differential Revision: D26006406 Pulled By: mrambacher fbshipit-source-id: ed10a8abbdab7ff2e23d69d85bd25b3e7e899e90 |

4 years ago |

|

|

55e99688cc |

No elide constructors (#7798)

Summary: Added "no-elide-constructors to the ASSERT_STATUS_CHECK builds. This flag gives more errors/warnings for some of the Status checks where an inner class checks a Status and later returns it. In this case, without the elide check on, the returned status may not have been checked in the caller, thereby bypassing the checked code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7798 Reviewed By: jay-zhuang Differential Revision: D25680451 Pulled By: pdillinger fbshipit-source-id: c3f14ed9e2a13f0a8c54d839d5fb4d1fc1e93917 |

4 years ago |

|

|

b1ee191405 |

Fix memory leak for ColumnFamily drop with live iterator (#7749)

Summary: Uncommon bug seen by ASAN with ColumnFamilyTest.LiveIteratorWithDroppedColumnFamily, if the last two references to a ColumnFamilyData are both SuperVersions (during InstallSuperVersion). The fix is to use UnrefAndTryDelete even in SuperVersion::Cleanup but with a parameter to avoid re-entering Cleanup on the same SuperVersion being cleaned up. ColumnFamilyData::Unref is considered unsafe so removed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7749 Test Plan: ./column_family_test --gtest_filter=*LiveIter* --gtest_repeat=100 Reviewed By: jay-zhuang Differential Revision: D25354304 Pulled By: pdillinger fbshipit-source-id: e78f3a3f67c40013b8432f31d0da8bec55c5321c |

4 years ago |

|

|

70f2e0916a |

Write min_log_number_to_keep to MANIFEST during atomic flush under 2 phase commit (#7570)

Summary: When 2 phase commit is enabled, if there are prepared data in a WAL, the WAL should be kept, the minimum log number for such a WAL is written to MANIFEST during flush. In atomic flush, such information is not written to MANIFEST. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7570 Test Plan: Added a new unit test `DBAtomicFlushTest.ManualFlushUnder2PC`, this test fails in atomic flush without this PR, after this PR, it succeeds. Reviewed By: riversand963 Differential Revision: D24394222 Pulled By: cheng-chang fbshipit-source-id: 60ce74b21b704804943be40c8de01b41269cf116 |

4 years ago |

|

|

51a8dc6d14 |

Integrated blob garbage collection: relocate blobs (#7694)

Summary: The patch adds basic garbage collection support to the integrated BlobDB implementation. Valid blobs residing in the oldest blob files are relocated as they are encountered during compaction. The threshold that determines which blob files qualify is computed based on the configuration option `blob_garbage_collection_age_cutoff`, which was introduced in https://github.com/facebook/rocksdb/issues/7661 . Once a blob is retrieved for the purposes of relocation, it passes through the same logic that extracts large values to blob files in general. This means that if, for instance, the size threshold for key-value separation (`min_blob_size`) got changed or writing blob files got disabled altogether, it is possible for the value to be moved back into the LSM tree. In particular, one way to re-inline all blob values if needed would be to perform a full manual compaction with `enable_blob_files` set to `false`, `enable_blob_garbage_collection` set to `true`, and `blob_file_garbage_collection_age_cutoff` set to `1.0`. Some TODOs that I plan to address in separate PRs: 1) We'll have to measure the amount of new garbage in each blob file and log `BlobFileGarbage` entries as part of the compaction job's `VersionEdit`. (For the time being, blob files are cleaned up solely based on the `oldest_blob_file_number` relationships.) 2) When compression is used for blobs, the compression type hasn't changed, and the blob still qualifies for being written to a blob file, we can simply copy the compressed blob to the new file instead of going through decompression and compression. 3) We need to update the formula for computing write amplification to account for the amount of data read from blob files as part of GC. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7694 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D25069663 Pulled By: ltamasi fbshipit-source-id: bdfa8feb09afcf5bca3b4eba2ba72ce2f15cd06a |

4 years ago |

|

|

9a690a74e1 |

In ParseInternalKey(), include corrupt key info in Status (#7515)

Summary: Fixes Issue https://github.com/facebook/rocksdb/issues/7497 When allow_data_in_errors db_options is set, log error key details in `ParseInternalKey()` Have fixed most of the calls. Have few TODOs still pending - because have to make more deeper changes to pass in the allow_data_in_errors flag. Will do those in a separate PR later. Tests: - make check - some of the existing tests that exercise the "internal key too small" condition are: dbformat_test, cuckoo_table_builder_test - some of the existing tests that exercise the corrupted key path are: corruption_test, merge_helper_test, compaction_iterator_test Example of new status returns: - Key too small - `Corrupted Key: Internal Key too small. Size=5` - Corrupt key with allow_data_in_errors option set to false: `Corrupted Key: '<redacted>' seq:3, type:3` - Corrupt key with allow_data_in_errors option set to true: `Corrupted Key: '61' seq:3, type:3` Pull Request resolved: https://github.com/facebook/rocksdb/pull/7515 Reviewed By: ajkr Differential Revision: D24240264 Pulled By: ramvadiv fbshipit-source-id: bc48f5d4475ac19d7713e16df37505b31aac42e7 |

4 years ago |

|

|

e8cb32ed67 |

Introduce BlobFileCache and add support for blob files to Get() (#7540)

Summary: The patch adds blob file support to the `Get` API by extending `Version` so that whenever a blob reference is read from a file, the blob is retrieved from the corresponding blob file and passed back to the caller. (This is assuming the blob reference is valid and the blob file is actually part of the given `Version`.) It also introduces a cache of `BlobFileReader`s called `BlobFileCache` that enables sharing `BlobFileReader`s between callers. `BlobFileCache` uses the same backing cache as `TableCache`, so `max_open_files` (if specified) limits the total number of open (table + blob) files. TODO: proactively open/cache blob files and pin the cache handles of the readers in the metadata objects similarly to what `VersionBuilder::LoadTableHandlers` does for table files. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7540 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D24260219 Pulled By: ltamasi fbshipit-source-id: a8a2a4f11d3d04d6082201b52184bc4d7b0857ba |

4 years ago |

|

|

e04a50923d |

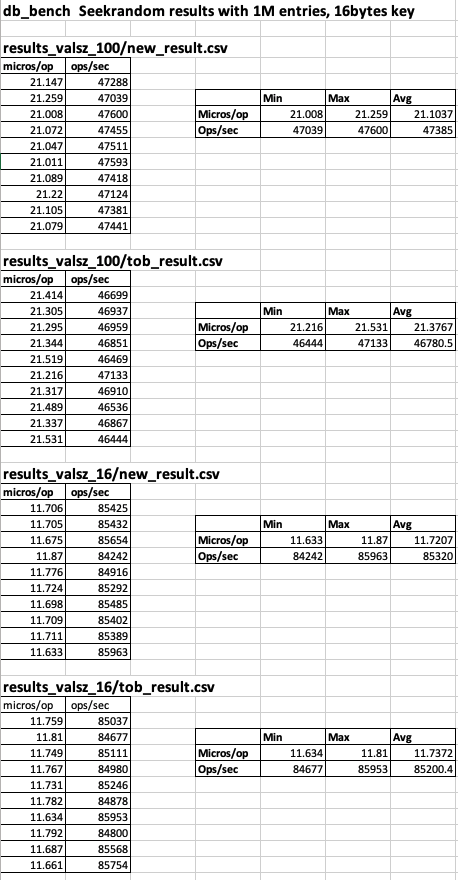

Change ParseInternalKey() to return Status instead of bool (#7457)

Summary: Fixes https://github.com/facebook/rocksdb/issues/7430 Change ParseInternalKey() to return Status instead of bool. db_bench (seekrandom) based before/after results with value size of 100 bytes and 16 bytes can be found at (tests ran on an udb server): https://www.dropbox.com/s/47bwamdy5ozngph/PIK_ret_Status_results.xlsx?dl=0  Pull Request resolved: https://github.com/facebook/rocksdb/pull/7457 Reviewed By: ajkr Differential Revision: D24002433 Pulled By: ramvadiv fbshipit-source-id: ac253ecf577a29044c47c3fe254a01e71404c44c |

4 years ago |

|

|

9a63bbd391 |

Add few unit test cases in ASSERT_STATUS_CHECKED build (#7427)

Summary: Fix few test cases and add them in ASSERT_STATUS_CHECKED build. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7427 Test Plan: 1. ASSERT_STATUS_CHECKED=1 make -j48 check, 2. travis build for ASSERT_STATUS_CHECKED, 3. Without ASSERT_STATUS_CHECKED: make check -j64, CircleCI build and travis build Reviewed By: pdillinger Differential Revision: D23909983 Pulled By: akankshamahajan15 fbshipit-source-id: 42d7e4aea972acb9fcddb7ca73fcb82f93272434 |

4 years ago |

|

|

7d472accdc |

Bring the Configurable options together (#5753)

Summary: This PR merges the functionality of making the ColumnFamilyOptions, TableFactory, and DBOptions into Configurable into a single PR, resolving any merge conflicts Pull Request resolved: https://github.com/facebook/rocksdb/pull/5753 Reviewed By: ajkr Differential Revision: D23385030 Pulled By: zhichao-cao fbshipit-source-id: 8b977a7731556230b9b8c5a081b98e49ee4f160a |

4 years ago |