Summary:

Fix issues in reproducing the synthetic ZippyDB workloads from the FAST '20 paper using db_bench. The changes are as follows.

1. Add a separate random mode in MixGraph to produce the all_random workload.

2. Fix the power inverse function used to generate the prefix_dist workload.

3. Make sure key_offset in prefix mode is always unsigned.

Note: key_dist_a/b need to be chosen carefully to avoid aliasing. The range of the power inverse function should be close to the overall key space.
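
As a rough illustration of items 2 and 3, the sketch below inverts a power-law CDF of the form u = a * x^b and keeps the derived offset unsigned. The function names, the rounding, and the modulo mapping are assumptions made for this example; they are not copied from db_bench.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: inverts u = a * x^b, giving x = (u / a)^(1 / b).
// The result is unsigned so that a derived key offset can never be negative.
inline uint64_t PowerCdfInverse(double u, double a, double b) {
  assert(u > 0.0 && a > 0.0 && b != 0.0);
  const double x = std::pow(u / a, 1.0 / b);
  return static_cast<uint64_t>(std::ceil(x));
}

// Hypothetical helper: folds the inverse onto [0, num_keys). If the range of
// the inverse is far larger than num_keys, many inputs fold onto the same
// offsets, which is presumably the aliasing the note warns about.
inline uint64_t KeyOffset(double u, double a, double b, uint64_t num_keys) {
  assert(num_keys > 0);
  return PowerCdfInverse(u, a, b) % num_keys;
}
```

With a = 1 and b = 0.5, an input of u = 4 maps to x = 16, so a key space of 10 keys already wraps around once.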

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6795

Reviewed By: akankshamahajan15

Differential Revision: D21371095

Pulled By: zhichao-cao

fbshipit-source-id: 80744381e242392c8c7cf8ac3d68fe67fe876048

Summary:

This commit adds a `compression_parallel_threads` option to db_stress. It also fixes the name of the parallel compression option in db_bench to keep it aligned with the others.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6722

Reviewed By: pdillinger

Differential Revision: D21091385

fbshipit-source-id: c9ba8c4e5cc327ff9e6094a6dc6a15fcff70f100

Summary:

The dynamic_cast in the filter benchmark causes release mode to fail due to no-rtti. Replace with static_cast_with_check.

Signed-off-by: Derrick Pallas <derrick@pallas.us>

Addition by peterd: Remove unnecessary 2nd template arg on all static_cast_with_check

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6732

Reviewed By: ltamasi

Differential Revision: D21304260

Pulled By: pdillinger

fbshipit-source-id: 6e8eb437c4ca5a16dbbfa4053d67c4ad55f1608c

Summary:

1. Add two arguments, --compression_level_from and --compression_level_to, to check the compressed size at each compression level in the given range. Users must specify one compression type, otherwise the tool errors out. The from and to levels must also be specified together.

2. Display the time taken to compress each file with the different compression types by default.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6634

Test Plan: make -j64 check

Reviewed By: anand1976

Differential Revision: D20810282

Pulled By: akankshamahajan15

fbshipit-source-id: ac9098d3c079a1fad098f6678dbedb4d888a791b

Summary:

In crash test, the db directory might be set to /dev/shm or /tmp. In certain environments, such as internal testing infrastructure, neither of these directories supports direct IO, so direct IO is never enabled in crash test.

This PR sets up SyncPoints in direct IO related code paths to disable the O_DIRECT flag in calls to `open`, so the direct IO code paths will be executed and all direct IO related assertions will be checked, but no real direct IO request will be issued to the file system.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6727

Test Plan:

export CRASH_TEST_EXT_ARGS="--use_direct_reads=1 --mmap_read=0"

make -j24 crash_test

Reviewed By: zhichao-cao

Differential Revision: D21139250

Pulled By: cheng-chang

fbshipit-source-id: db9adfe78d91aa4759835b1af91c5db7b27b62ee

Summary:

The methods in convenience.h are used to compare/convert objects to/from strings. There is a mishmash of parameters in use here with more needed in the future. This PR replaces those parameters with a single structure.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6389

Reviewed By: siying

Differential Revision: D21163707

Pulled By: zhichao-cao

fbshipit-source-id: f807b4cc7e2b0af3871536b69546b2604dfa81bd

Summary:

Based on https://github.com/facebook/rocksdb/issues/6648 (CLA Signed), but heavily modified / extended:

* Implicit capture of this via [=] deprecated in C++20, and [=,this] not standard before C++20 -> now using explicit capture lists

* Implicit copy operator deprecated in gcc 9 -> add explicit '= default' definition

* std::random_shuffle deprecated in C++14 and removed in C++17 -> migrated to a replacement in RocksDB random.h API

* Add the ability to build with different std version through -DCMAKE_CXX_STANDARD=11/14/17/20 on the cmake command line

* Minimal rebuild flag of MSVC is deprecated and is forbidden with /std:c++latest (C++20)

* Added MSVC 2019 C++11 & MSVC 2019 C++20 in AppVeyor

* Added GCC 9 C++11 & GCC 9 C++20 in Travis
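
To illustrate the first and third bullets, here is a minimal sketch of an explicit capture list and of a standard-library shuffle. The class and function names are invented for this example, and std::shuffle is shown only as the standard replacement; the PR itself uses a helper from RocksDB's random.h.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <random>
#include <vector>

class Counter {
 public:
  // Writing [=] here would capture `this` implicitly, which C++20 deprecates.
  // Naming `this` and each local explicitly is valid from C++11 onward.
  std::function<int()> MakeAdder(int delta) {
    return [this, delta]() { return value_ += delta; };
  }
  int value() const { return value_; }

 private:
  int value_ = 0;
};

// std::shuffle with an explicit engine replaces std::random_shuffle.
inline std::vector<int> ShuffledCopy(std::vector<int> v, unsigned seed) {
  std::mt19937 rng(seed);
  std::shuffle(v.begin(), v.end(), rng);
  return v;
}
```

The returned lambda holds a raw `this`, so it must not outlive the Counter it was created from.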

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6697

Test Plan: make check and CI

Reviewed By: cheng-chang

Differential Revision: D21020318

Pulled By: pdillinger

fbshipit-source-id: 12311be5dbd8675a0e2c817f7ec50fa11c18ab91

Summary:

Recently index_type kBinarySearchWithFirstKey was improved so that its API guarantee is exactly the same as that of other types, and it is ready for wide production use. We should cover it in crash test.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6721

Test Plan: Run crash_test

Reviewed By: anand1976

Differential Revision: D21099781

fbshipit-source-id: fda91eba831d9eacbb140c703e9768bb1701f935

Summary:

RocksDB behaves differently depending on whether max_open_files is small or large. Add coverage for small max_open_files.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6719

Test Plan: Run crash_test

Reviewed By: pdillinger

Differential Revision: D21081021

fbshipit-source-id: e3e211761a9bd25d93d19a61c1f7b62d48cf5e3c

Summary:

Options.avoid_flush_during_recovery is not covered in crash_test. Add coverage with a chance of 1/8, as it is a less frequently used option.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6712

Test Plan: Run crash_test and see the option can be used or not used by chance.

Reviewed By: ltamasi

Differential Revision: D21056566

fbshipit-source-id: c3b1521517cfc204786e6ef8c6acd7fffda64793

Summary:

Add an env_fault_injection argument to db_stress. When enabled, FaultInjectionTestEnv will be used instead. Currently this option does not support running with other env settings.

This will allow us to later manually produce errors when running db_crashtest.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6687

Test Plan:

make db_stress -j32

./db_stress --env_fault_injection

./db_stress --env_fault_injection --hdfs // expect error message

Reviewed By: ajkr

Differential Revision: D21014683

Pulled By: yhchiang

fbshipit-source-id: 0724aeac37efd57adb72a37defe6dbd3bfa8106a

Summary:

Context: Index type `kBinarySearchWithFirstKey` added the ability for sst file iterator to sometimes report a key from index without reading the corresponding data block. This is useful when sst blocks are cut at some meaningful boundaries (e.g. one block per key prefix), and many seeks land between blocks (e.g. for each prefix, the ranges of keys in different sst files are nearly disjoint, so a typical seek needs to read a data block from only one file even if all files have the prefix). But this added a new error condition, which rocksdb code was really not equipped to deal with: `InternalIterator::value()` may fail with an IO error or Status::Incomplete, but it's just a method returning a Slice, with no way to report error instead. Before this PR, this type of error wasn't handled at all (an empty slice was returned), and kBinarySearchWithFirstKey implementation was considered a prototype.

Now that we (LogDevice) have experimented with kBinarySearchWithFirstKey for a while and confirmed that it's really useful, this PR is adding the missing error handling.

It's a pretty inconvenient situation implementation-wise. The error needs to be reported from InternalIterator when trying to access value. But there are ~700 call sites of `InternalIterator::value()`, most of which either can't hit the error condition (because the iterator is reading from memtable or from index or something) or wouldn't benefit from the deferred loading of the value (e.g. compaction iterator that reads all values anyway). Adding error handling to all these call sites would needlessly bloat the code. So instead I made the deferred value loading optional: only the call sites that may use deferred loading have to call the new method `PrepareValue()` before calling `value()`. The feature is enabled with a new bool argument `allow_unprepared_value` to a bunch of methods that create iterators (it wouldn't make sense to put it in ReadOptions because it's completely internal to iterators, with virtually no user-visible effect). Lmk if you have better ideas.

Note that the deferred value loading only happens for *internal* iterators. The user-visible iterator (DBIter) always prepares the value before returning from Seek/Next/etc. We could go further and add an API to defer that value loading too, but that's most likely not useful for LogDevice, so it doesn't seem worth the complexity for now.
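
The calling convention described above can be sketched with a toy iterator. Only the names `PrepareValue`, `value` and `allow_unprepared_value` come from the description; everything else is a hypothetical stand-in and not RocksDB's InternalIterator.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Toy iterator with optional deferred value loading.
class ToyIterator {
 public:
  ToyIterator(std::vector<std::pair<std::string, std::string>> rows,
              bool allow_unprepared_value, bool fail_loads = false)
      : rows_(std::move(rows)),
        allow_unprepared_value_(allow_unprepared_value),
        fail_loads_(fail_loads) {}

  void SeekToFirst() { pos_ = 0; AfterMove(); }
  void Next() { ++pos_; AfterMove(); }
  bool Valid() const { return ok_ && pos_ < rows_.size(); }
  bool ok() const { return ok_; }
  const std::string& key() const { return rows_[pos_].first; }

  // Loads the value if it was deferred. On a simulated load failure the
  // iterator becomes invalid and ok() reports the error.
  bool PrepareValue() {
    if (prepared_) return true;
    if (fail_loads_) { ok_ = false; return false; }
    prepared_ = true;
    return true;
  }

  // Only legal once the value has been prepared.
  const std::string& value() const {
    assert(prepared_);
    return rows_[pos_].second;
  }

 private:
  void AfterMove() {
    prepared_ = false;
    // Callers that did not opt in get the value loaded eagerly.
    if (!allow_unprepared_value_ && Valid()) PrepareValue();
  }

  std::vector<std::pair<std::string, std::string>> rows_;
  bool allow_unprepared_value_;
  bool fail_loads_;
  size_t pos_ = 0;
  bool prepared_ = false;
  bool ok_ = true;
};
```

A call site that passes `allow_unprepared_value = true` has to check the result of `PrepareValue()` before touching `value()`; call sites that pass false keep their old behavior and need no new error handling.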

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6621

Test Plan: make -j5 check . Will also deploy to some logdevice test clusters and look at stats.

Reviewed By: siying

Differential Revision: D20786930

Pulled By: al13n321

fbshipit-source-id: 6da77d918bad3780522e918f17f4d5513d3e99ee

Summary:

This was causing db_crashtest.py to wrongly assume an error by parsing the output. Hopefully this will stabilize the crash tests.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6705

Test Plan: make blackbox_crash_test

Reviewed By: ltamasi

Differential Revision: D21043335

Pulled By: anand1976

fbshipit-source-id: 5cddd112b124d4e2ebd11724a17d4ef0f50c1cf8

Summary:

Improve it in two ways:

1. tools/check_format_compatible.sh is not friendly to run outside the FB environment. Remove the hard-coded http proxy setting and move it to the Legocastle configuration instead.

2. Always disable warning as error, so that older builds are more likely to pass.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6702

Test Plan: Run the test and make sure at least it doesn't break.

Reviewed By: riversand963

Differential Revision: D21033329

fbshipit-source-id: 88b4ec1ec49547b772790050a165466bdc4a62a0

Summary:

Add NewFileChecksumGenCrc32cFactory to the file checksum public interface so that applications can use the built-in crc32c checksum factory.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6688

Test Plan: pass make asan_check

Reviewed By: riversand963

Differential Revision: D21006859

Pulled By: zhichao-cao

fbshipit-source-id: ea8a45196a8b77c310728ab05f6cc0f49f3baef0

Summary:

This PR implements a fault injection mechanism for injecting errors in reads in db_stress. The FaultInjectionTestFS is used for this purpose. A thread local structure is used to track the errors, so that each db_stress thread can independently enable/disable error injection and verify observed errors against expected errors. This is initially enabled only for Get and MultiGet, but can be extended to iterators as well once it's proven stable.
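
The thread-local bookkeeping can be sketched as follows. This is a simplified model with invented names (`ReadErrorContext`, `ThreadContext`, `ToyRead`), not the FaultInjectionTestFS interface, and the "every N-th read" policy is an assumption made to keep the example deterministic.

```cpp
#include <cassert>
#include <string>

// Hypothetical per-thread injection state.
struct ReadErrorContext {
  bool enabled = false;
  int one_in = 0;           // inject on every one_in-th read while enabled
  int reads_seen = 0;
  int errors_injected = 0;  // what the calling thread expects to observe
};

inline ReadErrorContext& ThreadContext() {
  thread_local ReadErrorContext ctx;  // one independent instance per thread
  return ctx;
}

// Simulated read: returns false (an injected error) on every one_in-th call
// made by the current thread while injection is enabled.
inline bool ToyRead(const std::string& src, std::string* out) {
  ReadErrorContext& ctx = ThreadContext();
  if (ctx.enabled && ctx.one_in > 0 && ++ctx.reads_seen % ctx.one_in == 0) {
    ++ctx.errors_injected;
    return false;
  }
  *out = src;
  return true;
}
```

Because the state is per thread, a test thread can compare the failures it observed against `errors_injected` without synchronizing with other threads.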

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6538

Test Plan:

crash_test

make check

Reviewed By: riversand963

Differential Revision: D20714347

Pulled By: anand1976

fbshipit-source-id: d7598321d4a2d72bda0ced57411a337a91d87dc7

Summary:

While investigating https://github.com/facebook/rocksdb/issues/6666, we encountered an error when using sst_dump to dump an ingested SST file with a global seqno.

```

Corruption: An external sst file with version 2 have global seqno property with value ��/, while largest seqno in the file is 0)

```

As in https://github.com/facebook/rocksdb/pull/5097, this happens because SstFileReader doesn't know the largest seqno of a file, so it fails this check when it opens a file with a global seqno. ca89ac2ba9/table/block_based_table_reader.cc (L730)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6673

Test Plan: run it manually

Reviewed By: cheng-chang

Differential Revision: D20937546

Pulled By: ajkr

fbshipit-source-id: c3fd04d60916a738533ee1885f3ea844669a9479

Summary:

New memory technologies are being developed by various hardware vendors (Intel DCPMM is one such technology currently available). These new memory types require different libraries for allocation and management (such as PMDK and memkind). The high capacities available make it possible to provision large caches (up to several TBs in size), beyond what is achievable with DRAM.

The new allocator provided in this PR uses the memkind library to allocate memory on different media.

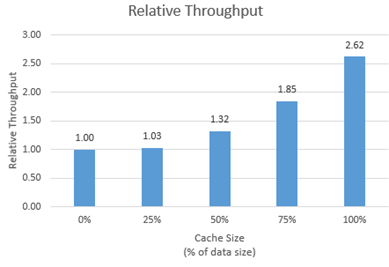

**Performance**

We tested the new allocator using db_bench.

- For each test, we vary the size of the block cache (relative to the size of the uncompressed data in the database).

- The database is filled sequentially. Throughput is then measured with a readrandom benchmark.

- We use a uniform distribution as a worst-case scenario.

The plot shows throughput (ops/s) relative to a configuration with no block cache and default allocator.

For all tests, p99 latency is below 500 us.

**Changes**

- Add MemkindKmemAllocator

- Add --use_cache_memkind_kmem_allocator db_bench option (to create an LRU block cache with the new allocator)

- Add detection of memkind library with KMEM DAX support

- Add test for MemkindKmemAllocator

**Minimum Requirements**

- kernel 5.3.12

- ndctl v67 - https://github.com/pmem/ndctl

- memkind v1.10.0 - https://github.com/memkind/memkind

**Memory Configuration**

The allocator uses the MEMKIND_DAX_KMEM memory kind. Follow the instructions on [memkind’s GitHub page](https://github.com/memkind/memkind) to set up NVDIMM memory accordingly.

Note on memory allocation with NVDIMM memory exposed as system memory.

- The MemkindKmemAllocator will only allocate from NVDIMM memory (using memkind_malloc with MEMKIND_DAX_KMEM kind).

- The default allocator is not restricted to RAM by default. Based on NUMA node latency, the kernel should allocate from local RAM preferentially, but it’s a kernel decision. numactl --preferred/--membind can be used to allocate preferentially/exclusively from the local RAM node.

**Usage**

When creating an LRU cache, pass a MemkindKmemAllocator object as argument.

For example (replace capacity with the desired value in bytes):

```
#include "rocksdb/cache.h"
#include "memory/memkind_kmem_allocator.h"

// Create an LRU cache whose memory is allocated through memkind.
NewLRUCache(
    capacity /*size_t*/,
    6 /*cache_numshardbits*/,
    false /*strict_capacity_limit*/,
    0.0 /*cache_high_pri_pool_ratio*/,
    std::make_shared<MemkindKmemAllocator>());
```

Refer to [RocksDB’s block cache documentation](https://github.com/facebook/rocksdb/wiki/Block-Cache) to assign the LRU cache as block cache for a database.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6214

Reviewed By: cheng-chang

Differential Revision: D19292435

fbshipit-source-id: 7202f47b769e7722b539c86c2ffd669f64d7b4e1

Summary:

This commit fixes a bug where the readrandom test in db_bench returns many NotFound results, starting from version 6.2.

Pull Request resolved: https://github.com/facebook/rocksdb/issues/6664

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6665

Reviewed By: cheng-chang

Differential Revision: D20911298

Pulled By: ajkr

fbshipit-source-id: c2658d4dbb35798ccbf67dff6e64923fb731ef81

Summary:

This PR adds support for pipelined & parallel compression optimization for `BlockBasedTableBuilder`. This optimization makes block building, block compression and block appending a pipeline, and uses multiple threads to accelerate block compression. Users can set `CompressionOptions::parallel_threads` greater than 1 to enable compression parallelism.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6262

Reviewed By: ajkr

Differential Revision: D20651306

fbshipit-source-id: 62125590a9c15b6d9071def9dc72589c1696a4cb

Summary:

In the current implementation, the sst file checksum is calculated by a shared checksum function object, which makes some checksum functions, such as SHA1, hard to apply. With this change, each sst file has its own checksum generator object, created by FileChecksumGenFactory. Users need to implement their own FileChecksumGenerator and factory to plug in their checksum calculation method.
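
The factory-per-file idea can be sketched as below. The `Toy*` interfaces are hypothetical stand-ins modelled on the description; the real RocksDB class and method signatures may differ. FNV-1a is used only because it is short.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <string>

// Hypothetical generator interface: one instance per file, fed incrementally.
class ToyChecksumGenerator {
 public:
  virtual ~ToyChecksumGenerator() = default;
  virtual void Update(const char* data, size_t n) = 0;
  virtual std::string Finalize() = 0;
  virtual const char* Name() const = 0;
};

// Hypothetical factory interface.
class ToyChecksumGenFactory {
 public:
  virtual ~ToyChecksumGenFactory() = default;
  // Called once per file, so no state is shared between files.
  virtual std::unique_ptr<ToyChecksumGenerator> Create() = 0;
};

// Example generator: 32-bit FNV-1a over all bytes passed to Update().
class Fnv1aGenerator : public ToyChecksumGenerator {
 public:
  void Update(const char* data, size_t n) override {
    for (size_t i = 0; i < n; ++i) {
      hash_ ^= static_cast<unsigned char>(data[i]);
      hash_ *= 16777619u;  // FNV prime
    }
  }
  std::string Finalize() override { return std::to_string(hash_); }
  const char* Name() const override { return "ToyFnv1a32"; }

 private:
  uint32_t hash_ = 2166136261u;  // FNV offset basis
};

class Fnv1aFactory : public ToyChecksumGenFactory {
 public:
  std::unique_ptr<ToyChecksumGenerator> Create() override {
    return std::unique_ptr<ToyChecksumGenerator>(new Fnv1aGenerator());
  }
};
```

Since each generator carries its own running state, stateful algorithms such as SHA1 fit this shape, which a single shared function object does not allow.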

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6600

Test Plan: tested with make asan_check

Reviewed By: riversand963

Differential Revision: D20717670

Pulled By: zhichao-cao

fbshipit-source-id: 2a74c1c280ac11a07a1980185b43b671acaa71c6

Summary:

Forward compatibility with new defaults only starts from 5.16

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6598

Test Plan: facebook automated test (so much easier than running myself)

Reviewed By: riversand963

Differential Revision: D20665553

Pulled By: pdillinger

fbshipit-source-id: b846bfaccf4d0946f92d323a3b4ee6e3e548df93

Summary:

And add releases that should have been added before (6.6 - 6.8)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6594

Test Plan: facebook automated test (so much easier than running myself)

Reviewed By: riversand963

Differential Revision: D20649106

Pulled By: pdillinger

fbshipit-source-id: 78832449d9295580282cebf117e3968362fbdc69

Summary:

The current Env/FileSystem API separation has a couple of issues -

1. It requires the user to specify 2 options - ```Options::env``` and ```Options::file_system``` - which means they have to make code changes to benefit from the new APIs. Furthermore, there is a risk of accessing the same APIs in two different ways, through Env in the old way and through FileSystem in the new way. The two may not always match, for example, if env is ```PosixEnv``` and FileSystem is a custom implementation. Any stray RocksDB calls to env will use the ```PosixEnv``` implementation rather than the file_system implementation.

2. There needs to be a simple way for the FileSystem developer to instantiate an Env for backward compatibility purposes.

This PR solves the above issues and simplifies the migration in the following ways -

1. Embed a shared_ptr to the ```FileSystem``` in the ```Env```, and remove ```Options::file_system``` as a configurable option. This way, no code changes will be required in application code to benefit from the new API. The default Env constructor uses a ```LegacyFileSystemWrapper``` as the embedded ```FileSystem```.

1a. This also makes it more robust by ensuring that even if RocksDB has some stray calls to Env APIs rather than FileSystem, they will go through the same object and thus there is no risk of getting out of sync.

2. Provide a ```NewCompositeEnv()``` API that can be used to construct a PosixEnv with a custom FileSystem implementation. This eliminates an indirection to call Env APIs, and relieves the FileSystem developer of the burden of having to implement wrappers for the Env APIs.

3. Add a couple of missing FileSystem APIs - ```SanitizeEnvOptions()``` and ```NewLogger()```
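
A miniature of the embedding described in items 1 and 2 might look like the following. All `Toy*` names are invented for this sketch; it shows only the ownership structure, not the actual Env or FileSystem interfaces.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

class ToyFileSystem {
 public:
  virtual ~ToyFileSystem() = default;
  virtual std::string Name() const = 0;
};

class ToyLegacyFileSystem : public ToyFileSystem {
 public:
  std::string Name() const override { return "legacy"; }
};

class ToyCustomFileSystem : public ToyFileSystem {
 public:
  std::string Name() const override { return "custom"; }
};

class ToyEnv {
 public:
  // Default construction embeds the legacy wrapper, so existing callers
  // keep working without setting any extra option.
  ToyEnv() : fs_(std::make_shared<ToyLegacyFileSystem>()) {}
  explicit ToyEnv(std::shared_ptr<ToyFileSystem> fs) : fs_(std::move(fs)) {}

  const std::shared_ptr<ToyFileSystem>& GetFileSystem() const { return fs_; }

  // A stray Env-level call forwards to the same embedded object, so the
  // Env view and the FileSystem view cannot drift apart.
  std::string FileSystemName() const { return fs_->Name(); }

 private:
  std::shared_ptr<ToyFileSystem> fs_;
};

// Counterpart of the composite-Env idea: wrap a custom file system in an Env.
inline std::unique_ptr<ToyEnv> NewToyCompositeEnv(
    std::shared_ptr<ToyFileSystem> fs) {
  return std::unique_ptr<ToyEnv>(new ToyEnv(std::move(fs)));
}
```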

Tests:

1. New unit tests

2. make check and make asan_check

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6552

Reviewed By: riversand963

Differential Revision: D20592038

Pulled By: anand1976

fbshipit-source-id: c3801ad4153f96d21d5a3ae26c92ba454d1bf1f7

Summary:

Currently, `db_stress` tests a randomly picked one of `GetLiveFiles`, `GetSortedWalFiles`, and `GetCurrentWalFile` with a 1/N chance when the command line parameter `get_live_files_and_wal_files_one_in` is specified. The problem is that `GetSortedWalFiles` and `GetCurrentWalFile` are unreliable in the sense that they can return errors if another thread removes a WAL file while they are executing (which is a perfectly plausible and legitimate scenario). The patch splits this command line parameter into three (one for each API), and changes the crash test script so that only `GetLiveFiles` is tested during our continuous crash test runs.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6491

Test Plan:

```

make check

python tools/db_crashtest.py whitebox

```

Reviewed By: siying

Differential Revision: D20312200

Pulled By: ltamasi

fbshipit-source-id: e7c3481eddfe3bd3d5349476e34abc9eee5b7dc8

Summary:

ldb and sst_dump are the most important tools and they don't depend on gflags. In cmake, we don't have a way to build only these two tools and exclude the others. This is inconvenient if the environment has a problem with gflags. Add an option, WITH_CORE_TOOLS, for this.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6506

Test Plan: cmake and build with WITH_TOOLS and without.

Differential Revision: D20473029

fbshipit-source-id: 3d730fd14bbae6eeeae7f9cc9aec50a4e488ad72

Summary:

I started to see the following failures:

tools/db_bench_tool.cc: In constructor ‘rocksdb::NormalDistribution::NormalDistribution(unsigned int, unsigned int)’:

tools/db_bench_tool.cc:1528:58: error: declaration of ‘max’ shadows a member of 'this' [-Werror=shadow]

NormalDistribution(unsigned int min, unsigned int max) :

^

tools/db_bench_tool.cc:1528:58: error: declaration of ‘min’ shadows a member of 'this' [-Werror=shadow]

tools/db_bench_tool.cc: In constructor ‘rocksdb::UniformDistribution::UniformDistribution(unsigned int, unsigned int)’:

tools/db_bench_tool.cc:1546:59: error: declaration of ‘max’ shadows a member of 'this' [-Werror=shadow]

UniformDistribution(unsigned int min, unsigned int max) :

^

tools/db_bench_tool.cc:1546:59: error: declaration of ‘min’ shadows a member of 'this' [-Werror=shadow]

when building with GCC 4.8. Rename those variables to fix the problem.
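
A toy reduction of this kind of fix is shown below. It assumes the class exposes members named `min` and `max`; the class name and the chosen parameter names are made up for this sketch and are not the ones used in db_bench_tool.cc.

```cpp
#include <cassert>

// The class has members called min() and max(). Constructor parameters with
// the same names would shadow them under -Wshadow on older GCC, so the
// parameters get distinct names instead.
class ToyDistribution {
 public:
  ToyDistribution(unsigned int min_value, unsigned int max_value)
      : min_(min_value), max_(max_value) {}

  unsigned int min() const { return min_; }
  unsigned int max() const { return max_; }

 private:
  unsigned int min_;
  unsigned int max_;
};
```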

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6537

Test Plan: make all with the compiler that used to show the failure.

Differential Revision: D20448741

fbshipit-source-id: 18bcf012dbe020f22f79038a9b08f447befa2574

Summary:

Fix a few build warnings that are treated as errors under stricter MSVC warning settings.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6517

Differential Revision: D20401325

Pulled By: pdillinger

fbshipit-source-id: b44979dfaafdc7b3b8cb44a565400a99b331dd30

Summary:

Preliminary support for iterator with user timestamp. Current implementation does not consider merge operator and reverse iterator. Auto compaction is also disabled in unit tests.

Create an iterator with timestamp.

```
...
read_opts.timestamp = &ts;
auto* iter = db->NewIterator(read_opts);
// target is key without timestamp.
for (iter->Seek(target); iter->Valid(); iter->Next()) {}
for (iter->SeekToFirst(); iter->Valid(); iter->Next()) {}
delete iter;
read_opts.timestamp = &ts1;
// lower_bound and upper_bound are without timestamp.
read_opts.iterate_lower_bound = &lower_bound;
read_opts.iterate_upper_bound = &upper_bound;
auto* iter1 = db->NewIterator(read_opts);
// Do Seek or SeekToFirst()
delete iter1;
```

Test plan (dev server)

```

$make check

```

Simple benchmarking (dev server)

1. The overhead introduced by this PR even when timestamp is disabled.

key size: 16 bytes

value size: 100 bytes

Entries: 1000000

Data resides in main memory, and the benchmark tries to stress the iterator.

Repeated three times on master and this PR.

- Seek without next

```

./db_bench -db=/dev/shm/rocksdbtest-1000 -benchmarks=fillseq,seekrandom -enable_pipelined_write=false -disable_wal=true -format_version=3

```

master: 159047.0 ops/sec

this PR: 158922.3 ops/sec (about 0.08% drop in throughput)

- Seek and next 10 times

```

./db_bench -db=/dev/shm/rocksdbtest-1000 -benchmarks=fillseq,seekrandom -enable_pipelined_write=false -disable_wal=true -format_version=3 -seek_nexts=10

```

master: 109539.3 ops/sec

this PR: 107519.7 ops/sec (2% drop in throughput)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6255

Differential Revision: D19438227

Pulled By: riversand963

fbshipit-source-id: b66b4979486f8474619f4aa6bdd88598870b0746

Summary:

Some combinations of --index_with_first_key and --index_shortening_mode can significantly improve performance for large values. Expose them in db_bench.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5859

Test Plan: Run them with the new options and observe the behavior.

Differential Revision: D20104434

fbshipit-source-id: 21d48a732a9caf20b82312c7d7557d747ea3c304

Summary:

When dynamically linking two binaries together, different builds of RocksDB from two sources might cause errors. To give users a tool to solve the problem, the RocksDB namespace is changed to a flag which can be overridden at build time.
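
The mechanism can be sketched with a macro that defaults to the usual namespace name. The `TOY_STR` helpers and `NamespaceName()` are hypothetical and exist only to make the effect visible; the default value shown is an assumption based on the existing namespace name.

```cpp
#include <cassert>
#include <string>

// Fall back to the usual name unless the build overrides it, for example
// with -DROCKSDB_NAMESPACE=my_rocksdb on the compiler command line.
#ifndef ROCKSDB_NAMESPACE
#define ROCKSDB_NAMESPACE rocksdb
#endif

// Two-step stringification so the macro is expanded before being quoted.
#define TOY_STR_IMPL(x) #x
#define TOY_STR(x) TOY_STR_IMPL(x)

namespace ROCKSDB_NAMESPACE {
// Hypothetical function, present only to show a symbol in the namespace.
inline std::string NamespaceName() { return TOY_STR(ROCKSDB_NAMESPACE); }
}  // namespace ROCKSDB_NAMESPACE
```

Two copies built with different values end up with differently mangled symbols, so they can be linked into the same process without clashing.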

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6433

Test Plan: Build release, all and jtest. Try to build with ROCKSDB_NAMESPACE with another flag.

Differential Revision: D19977691

fbshipit-source-id: aa7f2d0972e1c31d75339ac48478f34f6cfcfb3e

Summary:

In the current code base, RocksDB generates a checksum for each block and verifies the checksum on use. This PR enables SST file checksums. After an SST file is generated by Flush or Compaction, RocksDB generates the SST file checksum and stores the checksum value and checksum method name in the vs_info and MANIFEST as part of the FileMetadata.

Added enable_sst_file_checksum to Options to enable or disable the file checksum. Added sst_file_checksum to Options so that users can plug in their own SST file checksum calculation method by overriding the SstFileChecksum class. The checksum information includes a uint32_t checksum value and a checksum name (string). A new tool is added to LDB so that users can dump a list of file checksum information from MANIFEST. If the user enables the file checksum but does not provide an sst_file_checksum instance, RocksDB will use the default crc32checksum implemented in table/sst_file_checksum_crc32c.h

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6216

Test Plan: Added the testing case in table_test and ldb_cmd_test to verify checksum is correct in different level. Pass make asan_check.

Differential Revision: D19171461

Pulled By: zhichao-cao

fbshipit-source-id: b2e53479eefc5bb0437189eaa1941670e5ba8b87

Summary:

Right now, when reading from option files, no readahead is used and an 8KB buffer is used. This might introduce high latency if the file system has high latency and doesn't do readahead. Instead, introduce a readahead to the file. When called inside DB, infer the value from options.log_readahead. Otherwise, a default 512KB readahead size is used.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6372

Test Plan: Add --log_readahead_size in db_bench. Run it with several options and observe read size from option files using strace.

Differential Revision: D19727739

fbshipit-source-id: e6d8053b0a64259abc087f1f388b9cd66fa8a583

Summary:

We see some odd errors complaining about math. However, the include does not seem to be needed, so remove the include of math.h. Just removing it from db_bench doesn't seem to break anything. Replacing sqrt with std::sqrt seems to work for histogram.cc

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6373

Test Plan: Watch Travis and appveyor to run.

Differential Revision: D19730068

fbshipit-source-id: d3ad41defcdd9f51c2da1a3673fb258f5dfacf47

Summary:

This reverts commit 8e309b35bb.

The stress tests are failing. Revert it until we figure out the root cause.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6327

Differential Revision: D19537657

Pulled By: maysamyabandeh

fbshipit-source-id: bf34a5dd720825957729e136e9a5a729a240e61a

Summary:

kHashSearch is incompatible with index_block_restart_interval values larger than 1. Setting it to 1 in stress tests avoids confusion about the test parameters.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6324

Differential Revision: D19525669

Pulled By: maysamyabandeh

fbshipit-source-id: fbf3a797e0ebcebb4d32eba3728cf3583906fc8a

Summary:

The block-based table hash index has been disabled in crash test due to bugs. We fixed a bug and re-enable it.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6310

Test Plan: Finish one round of "crash_test_with_atomic_flush" test successfully while exclusively running hash index. Another run also ran for several hours without failure.

Differential Revision: D19455856

fbshipit-source-id: 1192752d2c1e81ed7e5c5c7a9481c841582d5274

Summary:

A previous change was meant to make db_stress run in sync=1 mode 1/20 of the time in crash_test, but a bug caused it to always run in sync=1 mode. Fix it.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6304

Test Plan: Start and kill "python -u tools/db_crashtest.py --simple whitebox" multiple times and observe that most times sync=0 is used while some times sync=1 is used.

Differential Revision: D19433000

fbshipit-source-id: 7a0adba39b17a1b3acbbd791bb0cdb743b91fa95