#!/usr/bin/env bash
#
# Detects OS we're compiling on and outputs a file specified by the first
# argument, which in turn gets read while processing Makefile.
#
# The output will set the following variables:
#   CC                          C Compiler path
#   CXX                         C++ Compiler path
#   PLATFORM_LDFLAGS            Linker flags
#   JAVA_LDFLAGS                Linker flags for RocksDBJava
#   JAVA_STATIC_LDFLAGS         Linker flags for RocksDBJava static build
#   JAVAC_ARGS                  Arguments for javac
#   PLATFORM_SHARED_EXT         Extension for shared libraries
#   PLATFORM_SHARED_LDFLAGS     Flags for building shared library
#   PLATFORM_SHARED_CFLAGS      Flags for compiling objects for shared library
#   PLATFORM_CCFLAGS            C compiler flags
#   PLATFORM_CXXFLAGS           C++ compiler flags. Will contain:
#   PLATFORM_SHARED_VERSIONED   Set to 'true' if platform supports versioned
#                               shared libraries, empty otherwise.
#   FIND                        Command for the find utility
#   WATCH                       Command for the watch utility
#
# The PLATFORM_CCFLAGS and PLATFORM_CXXFLAGS might include the following:
#
#       -DROCKSDB_PLATFORM_POSIX    if posix-platform based
#       -DSNAPPY                    if the Snappy library is present
#       -DLZ4                       if the LZ4 library is present
#       -DZSTD                      if the ZSTD library is present
#       -DNUMA                      if the NUMA library is present
#       -DTBB                       if the TBB library is present

Provide an allocator for new memory type to be used with RocksDB block cache (#6214)

Summary:

New memory technologies are being developed by various hardware vendors (Intel DCPMM is one such technology currently available). These new memory types require different libraries for allocation and management (such as PMDK and memkind). The high capacities available make it possible to provision large caches (up to several TBs in size), beyond what is achievable with DRAM.

The new allocator provided in this PR uses the memkind library to allocate memory on different media.

**Performance**

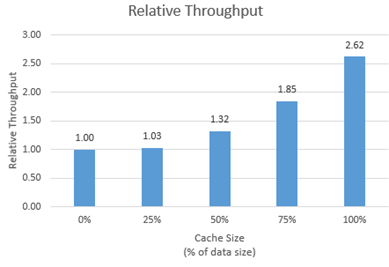

We tested the new allocator using db_bench.

- For each test, we vary the size of the block cache (relative to the size of the uncompressed data in the database).

- The database is filled sequentially. Throughput is then measured with a readrandom benchmark.

- We use a uniform distribution as a worst-case scenario.

The plot shows throughput (ops/s) relative to a configuration with no block cache and default allocator.

For all tests, p99 latency is below 500 us.

**Changes**

- Add MemkindKmemAllocator

- Add --use_cache_memkind_kmem_allocator db_bench option (to create an LRU block cache with the new allocator)

- Add detection of memkind library with KMEM DAX support

- Add test for MemkindKmemAllocator
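The library detection listed above follows the compile-probe pattern that build_detect_platform uses for its other optional libraries: feed a tiny program to the compiler and enable the feature only if it builds and links. A minimal sketch of that pattern follows. The `probe_feature` helper is hypothetical (it is not part of the script), it takes the compiler command as its first argument so the logic can be exercised without the library installed, and the probe source is an assumption about what a memkind check could look like.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of build_detect_platform.
# Usage: probe_feature <compiler> <define> <link-flag>   (probe source on stdin)
# On success the define is appended to COMMON_FLAGS and the link flag to
# PLATFORM_LDFLAGS; on failure both variables are left untouched.
probe_feature() {
    local compiler=$1 define=$2 link_flag=$3 out status
    out=$(mktemp) || return 1
    # $compiler is intentionally unquoted so it may carry extra arguments.
    $compiler -x c++ - -o "$out" "$link_flag" 2>/dev/null
    status=$?
    rm -f "$out"
    if [ "$status" -eq 0 ]; then
        COMMON_FLAGS="$COMMON_FLAGS $define"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS $link_flag"
        return 0
    fi
    return 1
}

COMMON_FLAGS=""
PLATFORM_LDFLAGS=""

# Probe for memkind with KMEM DAX support; the real check may differ in detail.
probe_feature "${CXX:-c++}" -DMEMKIND -lmemkind <<EOF || true
#include <memkind.h>
int main() {
  memkind_malloc(MEMKIND_DAX_KMEM, 1024);
  return 0;
}
EOF
echo "COMMON_FLAGS=$COMMON_FLAGS"
echo "PLATFORM_LDFLAGS=$PLATFORM_LDFLAGS"
```

Referencing `MEMKIND_DAX_KMEM` in the probe means an older memkind without that kind fails to compile, so the feature stays disabled rather than breaking the build later.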

**Minimum Requirements**

- kernel 5.3.12

- ndctl v67 - https://github.com/pmem/ndctl

- memkind v1.10.0 - https://github.com/memkind/memkind

**Memory Configuration**

The allocator uses the MEMKIND_DAX_KMEM memory kind. Follow the instructions on [memkind’s GitHub page](https://github.com/memkind/memkind) to set up NVDIMM memory accordingly.

Note on memory allocation with NVDIMM memory exposed as system memory.

- The MemkindKmemAllocator will only allocate from NVDIMM memory (using memkind_malloc with MEMKIND_DAX_KMEM kind).

- The default allocator is not restricted to RAM. Based on NUMA node latency, the kernel should allocate from local RAM preferentially, but the choice is up to the kernel. `numactl --preferred` or `numactl --membind` can be used to allocate preferentially or exclusively from the local RAM node.
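The two numactl policies mentioned above could be applied to a benchmark run roughly as follows. This is a sketch under stated assumptions: the `run_with_dram_policy` wrapper, the `DRY_RUN` switch, and node number `0` are illustrative and not part of RocksDB. Which node holds local DRAM depends on the machine; `numactl --hardware` lists the nodes.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run a command under a NUMA memory policy.
#   prefer <node>   allocate from <node> first, fall back to other nodes
#   bind   <node>   allocate from <node> only
# With DRY_RUN set, the command line is printed instead of executed.
run_with_dram_policy() {
    local mode=$1 node=$2 policy
    shift 2
    case "$mode" in
        prefer) policy="--preferred=$node" ;;
        bind)   policy="--membind=$node" ;;
        *)      echo "unknown mode: $mode" >&2; return 2 ;;
    esac
    if [ -n "$DRY_RUN" ]; then
        echo numactl "$policy" "$@"
    else
        numactl "$policy" "$@"
    fi
}

# Assumes node 0 is the local DRAM node.
DRY_RUN=1 run_with_dram_policy prefer 0 ./db_bench --benchmarks=readrandom
DRY_RUN=1 run_with_dram_policy bind 0 ./db_bench --benchmarks=readrandom
```

With `bind`, allocations made by the default allocator cannot spill onto the NVDIMM-backed node, which keeps a DRAM baseline measurement separate from the MemkindKmemAllocator case.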

**Usage**

When creating an LRU cache, pass a MemkindKmemAllocator object as argument.

For example (replace capacity with the desired value in bytes):

```
#include "rocksdb/cache.h"
#include "memory/memkind_kmem_allocator.h"

NewLRUCache(
    capacity /*size_t*/,
    6 /*cache_numshardbits*/,
    false /*strict_capacity_limit*/,
    0.0 /*cache_high_pri_pool_ratio*/,
    std::make_shared<MemkindKmemAllocator>());
```

Refer to [RocksDB’s block cache documentation](https://github.com/facebook/rocksdb/wiki/Block-Cache) to assign the LRU cache as block cache for a database.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6214

Reviewed By: cheng-chang

Differential Revision: D19292435

fbshipit-source-id: 7202f47b769e7722b539c86c2ffd669f64d7b4e1

5 years ago

#       -DMEMKIND                   if the memkind library is present
#
# Using gflags in rocksdb:
# Our project depends on gflags, which requires users to take some extra steps
# before they can compile the whole repository:
#   1. Install gflags. You may download it from here:
#      https://gflags.github.io/gflags/ (Mac users can `brew install gflags`)
#   2. Once installed, add the include path for gflags to your CPATH env var and
#      the lib path to LIBRARY_PATH. If installed with default settings, the lib
#      will be /usr/local/lib and the include path will be /usr/local/include

OUTPUT=$1
if test -z "$OUTPUT"; then
    echo "usage: $0 <output-filename>" >&2
    exit 1
fi

# we depend on C++17, but should be compatible with newer standards
if [ "$ROCKSDB_CXX_STANDARD" ]; then
    PLATFORM_CXXFLAGS="-std=$ROCKSDB_CXX_STANDARD"
else
    PLATFORM_CXXFLAGS="-std=c++17"
fi

# we currently depend on POSIX platform
COMMON_FLAGS="-DROCKSDB_PLATFORM_POSIX -DROCKSDB_LIB_IO_POSIX"

# Default to fbcode gcc on internal fb machines
if [ -z "$ROCKSDB_NO_FBCODE" -a -d /mnt/gvfs/third-party ]; then
    FBCODE_BUILD="true"
    # If we're compiling with TSAN or shared lib, we need pic build
    PIC_BUILD=$COMPILE_WITH_TSAN
    if [ "$LIB_MODE" == "shared" ]; then
        PIC_BUILD=1
    fi
    source "$PWD/build_tools/fbcode_config_platform010.sh"
fi

# Delete existing output, if it exists
rm -f "$OUTPUT"
touch "$OUTPUT"

if test -z "$CC"; then
    if [ -x "$(command -v cc)" ]; then
        CC=cc
    elif [ -x "$(command -v clang)" ]; then
        CC=clang
    else
        CC=cc
    fi
fi

if test -z "$CXX"; then
    if [ -x "$(command -v g++)" ]; then
        CXX=g++
    elif [ -x "$(command -v clang++)" ]; then
        CXX=clang++
    else
        CXX=g++
    fi
fi

if test -z "$AR"; then
    if [ -x "$(command -v gcc-ar)" ]; then
        AR=gcc-ar
    elif [ -x "$(command -v llvm-ar)" ]; then
        AR=llvm-ar
    else
        AR=ar
    fi
fi

# Detect OS
if test -z "$TARGET_OS"; then
    TARGET_OS=`uname -s`
fi

if test -z "$TARGET_ARCHITECTURE"; then
    TARGET_ARCHITECTURE=`uname -m`
fi

if test -z "$CLANG_SCAN_BUILD"; then
    CLANG_SCAN_BUILD=scan-build
fi

build: do not relink every single binary just for a timestamp

Summary:

Prior to this change, "make check" would always waste a lot of time relinking 60+ binaries. With this change, it does that only when the generated file, util/build_version.cc, changes, and that happens only when the date changes or when the current git SHA changes.

This change makes some other improvements: before, there was no rule to build a deleted util/build_version.cc. If it was somehow removed, any attempt to link a program would fail.

There is no longer any need for the separate file, build_tools/build_detect_version. Its functionality is now in the Makefile.

* Makefile (DEPFILES): Don't filter-out util/build_version.cc. No need, and besides, removing that dependency was wrong.

(date, git_sha, gen_build_version): New helper variables.

(util/build_version.cc): New rule, to create this file and update it only if it would contain new information.

* build_tools/build_detect_version: Remove file.

* db/db_impl.cc: Now, print only date (not the time).

* util/build_version.h (rocksdb_build_compile_time): Remove declaration. No longer used.

Test Plan:

- Run "make check" twice, and note that the second time no linking is performed.

- Remove util/build_version.cc and ensure that any "make" command regenerates it before doing anything else.

- Run this: strings librocksdb.a|grep _build_.

That prints output including the following:

rocksdb_build_git_date:2015-02-19

rocksdb_build_git_sha:2.8.fb-1792-g3cb6cc0
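The "update it only if it would contain new information" rule can be illustrated in shell. This is a sketch of the general technique, not the Makefile rule from this change: the `write_if_changed` and `gen` names, the file path, and the generated C++ lines are assumptions. The candidate contents go to a temporary file, and the target is replaced only when they differ, so the target's timestamp stays put and make has no reason to relink.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write stdin to <target> only if the contents differ.
# Returns 0 if the target was (re)written, 1 if it was left untouched.
write_if_changed() {
    local target=$1 tmp
    tmp=$(mktemp "${target}.XXXXXX") || return 2
    cat > "$tmp"
    if [ -f "$target" ] && cmp -s "$tmp" "$target"; then
        rm -f "$tmp"        # identical: keep the old file and its mtime
        return 1
    fi
    mv -f "$tmp" "$target"  # new or changed: replace the target
    return 0
}

# Illustrative generator; a real rule would compute the SHA and date.
gen() {
    printf 'const char* rocksdb_build_git_sha = "rocksdb_build_git_sha:%s";\n' "$1"
    printf 'const char* rocksdb_build_git_date = "rocksdb_build_git_date:%s";\n' "$2"
}

demo_dir=$(mktemp -d)
if gen 2.8.fb-1792-g3cb6cc0 2015-02-19 | write_if_changed "$demo_dir/build_version.cc"; then
    echo "first run: file written"
fi
if ! gen 2.8.fb-1792-g3cb6cc0 2015-02-19 | write_if_changed "$demo_dir/build_version.cc"; then
    echo "second run: file unchanged, nothing to relink"
fi
rm -rf "$demo_dir"
```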

Reviewers: ljin, sdong, igor

Reviewed By: igor

Subscribers: dhruba

Differential Revision: https://reviews.facebook.net/D33591

10 years ago

if test -z "$CLANG_ANALYZER"; then
    CLANG_ANALYZER=$(command -v clang++ 2> /dev/null)
fi

if test -z "$FIND"; then
    FIND=find
fi

if test -z "$WATCH"; then
    WATCH=watch
fi

fPIC in x64 environment

Summary:

Check https://github.com/facebook/rocksdb/pull/15 for context.

Apparently [1], we need -fPIC in x64 environments (this is added only in non-fbcode).

In fbcode, I removed -fPIC per @dhruba's suggestion, since it introduces a perf regression. I'm not sure what the implications of doing that are, but it looks like it works, and when releasing to third-party, we're disabling -fPIC either way [2].

Would love a suggestion from someone who knows more about this.

[1] http://eli.thegreenplace.net/2011/11/11/position-independent-code-pic-in-shared-libraries-on-x64/

[2] https://our.intern.facebook.com/intern/wiki/index.php/Database/RocksDB/Third_Party

Test Plan: make check works

Reviewers: dhruba, emayanke, kailiu

Reviewed By: dhruba

CC: leveldb, dhruba, reconnect.grayhat

Differential Revision: https://reviews.facebook.net/D14337

11 years ago

COMMON_FLAGS="$COMMON_FLAGS ${CFLAGS}"
CROSS_COMPILE=
PLATFORM_CCFLAGS=
PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS"
PLATFORM_SHARED_EXT="so"
PLATFORM_SHARED_LDFLAGS="-Wl,--no-as-needed -shared -Wl,-soname -Wl,"
PLATFORM_SHARED_CFLAGS="-fPIC"
PLATFORM_SHARED_VERSIONED=true

# generic port files (working on all platform by #ifdef) go directly in /port
GENERIC_PORT_FILES=`cd "$ROCKSDB_ROOT"; find port -name '*.cc' | tr "\n" " "`

# On GCC, we pick libc's memcmp over GCC's memcmp via -fno-builtin-memcmp
case "$TARGET_OS" in
    Darwin)
        PLATFORM=OS_MACOSX
        COMMON_FLAGS="$COMMON_FLAGS -DOS_MACOSX"
        PLATFORM_SHARED_EXT=dylib
        PLATFORM_SHARED_LDFLAGS="-dynamiclib -install_name "
        # PORT_FILES=port/darwin/darwin_specific.cc
        ;;
    IOS)
        PLATFORM=IOS
        COMMON_FLAGS="$COMMON_FLAGS -DOS_MACOSX -DIOS_CROSS_COMPILE "
        PLATFORM_SHARED_EXT=dylib
        PLATFORM_SHARED_LDFLAGS="-dynamiclib -install_name "
        CROSS_COMPILE=true
        PLATFORM_SHARED_VERSIONED=
        ;;
    Linux)
        PLATFORM=OS_LINUX
        COMMON_FLAGS="$COMMON_FLAGS -DOS_LINUX"
        if [ -z "$USE_CLANG" ]; then
            COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp"
        else
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -latomic"
        fi
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lpthread -lrt -ldl"
        if test -z "$ROCKSDB_USE_IO_URING"; then
            ROCKSDB_USE_IO_URING=1
        fi
        if test "$ROCKSDB_USE_IO_URING" -ne 0; then
            # check for liburing
            $CXX $PLATFORM_CXXFLAGS -x c++ - -luring -o test.o 2>/dev/null <<EOF
              #include <liburing.h>
              int main() {
                struct io_uring ring;
                io_uring_queue_init(1, &ring, 0);
                return 0;
              }
EOF
            if [ "$?" = 0 ]; then
                PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -luring"
                COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_IOURING_PRESENT"
            fi
        fi
        # PORT_FILES=port/linux/linux_specific.cc
        ;;
    SunOS)
        PLATFORM=OS_SOLARIS
        COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp -D_REENTRANT -DOS_SOLARIS -m64"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lpthread -lrt -static-libstdc++ -static-libgcc -m64"
        # PORT_FILES=port/sunos/sunos_specific.cc
        ;;
    AIX)
        PLATFORM=OS_AIX
        CC=gcc
        COMMON_FLAGS="$COMMON_FLAGS -maix64 -pthread -fno-builtin-memcmp -D_REENTRANT -DOS_AIX -D__STDC_FORMAT_MACROS"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -pthread -lpthread -lrt -maix64 -static-libstdc++ -static-libgcc"
        # PORT_FILES=port/aix/aix_specific.cc
        ;;
    FreeBSD)
        PLATFORM=OS_FREEBSD
        CXX=clang++
        COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp -D_REENTRANT -DOS_FREEBSD"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lpthread"
        # PORT_FILES=port/freebsd/freebsd_specific.cc
        ;;
    GNU/kFreeBSD)
        PLATFORM=OS_GNU_KFREEBSD
        COMMON_FLAGS="$COMMON_FLAGS -DOS_GNU_KFREEBSD"
        if [ -z "$USE_CLANG" ]; then
            COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp"
        else
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -latomic"
        fi
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lpthread -lrt"
        # PORT_FILES=port/gnu_kfreebsd/gnu_kfreebsd_specific.cc
        ;;
    NetBSD)
        PLATFORM=OS_NETBSD
        COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp -D_REENTRANT -DOS_NETBSD"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lpthread -lgcc_s"
        # PORT_FILES=port/netbsd/netbsd_specific.cc
        ;;
    OpenBSD)
        PLATFORM=OS_OPENBSD
        CXX=clang++
        COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp -D_REENTRANT -DOS_OPENBSD"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -pthread"
        # PORT_FILES=port/openbsd/openbsd_specific.cc
        FIND=gfind
        WATCH=gnuwatch
        ;;
    DragonFly)
        PLATFORM=OS_DRAGONFLYBSD
        COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp -D_REENTRANT -DOS_DRAGONFLYBSD"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lpthread"
        # PORT_FILES=port/dragonfly/dragonfly_specific.cc
        ;;
    Cygwin)
        PLATFORM=CYGWIN
        PLATFORM_SHARED_CFLAGS=""
        PLATFORM_CXXFLAGS="-std=gnu++11"
        COMMON_FLAGS="$COMMON_FLAGS -DCYGWIN"
        if [ -z "$USE_CLANG" ]; then
            COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp"
        else
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -latomic"
        fi
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lpthread -lrt"
        # PORT_FILES=port/linux/linux_specific.cc
        ;;
    OS_ANDROID_CROSSCOMPILE)
        PLATFORM=OS_ANDROID
        COMMON_FLAGS="$COMMON_FLAGS -fno-builtin-memcmp -D_REENTRANT -DOS_ANDROID -DROCKSDB_PLATFORM_POSIX"
        PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS " # All pthread features are in the Android C library
        # PORT_FILES=port/android/android.cc
        CROSS_COMPILE=true
        ;;
    *)
        echo "Unknown platform!" >&2
        exit 1
esac

PLATFORM_CXXFLAGS="$PLATFORM_CXXFLAGS ${CXXFLAGS}"
JAVA_LDFLAGS="$PLATFORM_LDFLAGS"
JAVA_STATIC_LDFLAGS="$PLATFORM_LDFLAGS"
JAVAC_ARGS="-source 8"

if [ "$CROSS_COMPILE" = "true" -o "$FBCODE_BUILD" = "true" ]; then
    # Cross-compiling; do not try any compilation tests.
    # Also don't need any compilation tests if compiling on fbcode
    if [ "$FBCODE_BUILD" = "true" ]; then
        # Enable backtrace on fbcode since the necessary libraries are present
        COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_BACKTRACE"
        FOLLY_DIR="third-party/folly"
    fi
    true
else
    if ! test $ROCKSDB_DISABLE_FALLOCATE; then
        # Test whether fallocate is available
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <fcntl.h>
          #include <linux/falloc.h>
          int main() {
            int fd = open("/dev/null", 0);
            fallocate(fd, FALLOC_FL_KEEP_SIZE, 0, 1024);
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_FALLOCATE_PRESENT"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_SNAPPY; then
        # Test whether Snappy library is installed
        # http://code.google.com/p/snappy/
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <snappy.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DSNAPPY"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lsnappy"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -lsnappy"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_GFLAGS; then
        # Test whether gflags library is installed
        # http://gflags.github.io/gflags/
        # check if the namespace is gflags
        if $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null << EOF
          #include <gflags/gflags.h>
          using namespace GFLAGS_NAMESPACE;
          int main() {}
EOF
        then
            COMMON_FLAGS="$COMMON_FLAGS -DGFLAGS=1"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lgflags"
        # check if namespace is gflags
        elif $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null << EOF
          #include <gflags/gflags.h>
          using namespace gflags;
          int main() {}
EOF
        then
            COMMON_FLAGS="$COMMON_FLAGS -DGFLAGS=1 -DGFLAGS_NAMESPACE=gflags"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lgflags"
        # check if namespace is google
        elif $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null << EOF
          #include <gflags/gflags.h>
          using namespace google;
          int main() {}
EOF
        then
            COMMON_FLAGS="$COMMON_FLAGS -DGFLAGS=1 -DGFLAGS_NAMESPACE=google"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lgflags"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_ZLIB; then
        # Test whether zlib library is installed
        $CXX $PLATFORM_CXXFLAGS $COMMON_FLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <zlib.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DZLIB"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lz"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -lz"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_BZIP; then
        # Test whether bzip library is installed
        $CXX $PLATFORM_CXXFLAGS $COMMON_FLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <bzlib.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DBZIP2"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lbz2"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -lbz2"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_LZ4; then
        # Test whether lz4 library is installed
        $CXX $PLATFORM_CXXFLAGS $COMMON_FLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <lz4.h>
          #include <lz4hc.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DLZ4"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -llz4"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -llz4"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_ZSTD; then
        # Test whether zstd library is installed
        $CXX $PLATFORM_CXXFLAGS $COMMON_FLAGS -x c++ - -o /dev/null 2>/dev/null <<EOF
          #include <zstd.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DZSTD"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lzstd"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -lzstd"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_NUMA; then
        # Test whether numa is available
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o -lnuma 2>/dev/null <<EOF
          #include <numa.h>
          #include <numaif.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DNUMA"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lnuma"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -lnuma"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_TBB; then
        # Test whether tbb is available
        $CXX $PLATFORM_CXXFLAGS $LDFLAGS -x c++ - -o test.o -ltbb 2>/dev/null <<EOF
          #include <tbb/tbb.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DTBB"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -ltbb"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -ltbb"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_JEMALLOC; then
        # Test whether jemalloc is available
        if echo 'int main() {}' | $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o -ljemalloc \
          2>/dev/null; then
            # This will enable some preprocessor identifiers in the Makefile
            JEMALLOC=1
            # JEMALLOC can be enabled either using the flag (like here) or by
            # providing direct link to the jemalloc library
            WITH_JEMALLOC_FLAG=1
            # check for JEMALLOC installed with HomeBrew
            if [ "$PLATFORM" == "OS_MACOSX" ]; then
                if hash brew 2>/dev/null && brew ls --versions jemalloc > /dev/null; then
                    JEMALLOC_VER=$(brew ls --versions jemalloc | tail -n 1 | cut -f 2 -d ' ')
                    JEMALLOC_INCLUDE="-I/usr/local/Cellar/jemalloc/${JEMALLOC_VER}/include"
                    JEMALLOC_LIB="/usr/local/Cellar/jemalloc/${JEMALLOC_VER}/lib/libjemalloc_pic.a"
                    PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS $JEMALLOC_LIB"
                    JAVA_STATIC_LDFLAGS="$JAVA_STATIC_LDFLAGS $JEMALLOC_LIB"
                fi
            fi
        fi
    fi
    if ! test $JEMALLOC && ! test $ROCKSDB_DISABLE_TCMALLOC; then
        # jemalloc is not available. Let's try tcmalloc
        if echo 'int main() {}' | $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o \
          -ltcmalloc 2>/dev/null; then
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -ltcmalloc"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -ltcmalloc"
        fi
    fi

Use malloc_usable_size() for accounting block cache size

Summary:

Currently, when we insert something into the block cache, we say that the block cache capacity decreased by the size of the block. However, the size of the block might be less than the actual memory used by this object. For example, a 4.5KB block will actually use 8KB of memory. So even if we configure the block cache to 10GB, our actual memory usage of the block cache will be 20GB!

This problem showed up a lot in testing and just recently also showed up in MongoRocks production where we were using 30GB more memory than expected.

This diff will fix the problem. Instead of counting the block size, we will count memory used by the block. That way, a block cache configured to be 10GB will actually use only 10GB of memory.

I'm using a non-portable function and I couldn't find info on its portability on Google. However, it seems to work on Linux, which will cover the majority of our use-cases.
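The gap between the two accounting schemes can be made concrete with the numbers from the summary. The sketch below assumes, purely for illustration, that every 4.5KB block lands in an 8KB allocation (the actual rounding depends on the allocator) and compares what a cache configured at 10GB really holds under each scheme. Under that assumption the old scheme's footprint works out to about 17.8GB, in the same ballpark as the rough 20GB figure quoted above.

```shell
#!/usr/bin/env bash
# Illustrative arithmetic only; sizes are the ones quoted in the summary.
block_bytes=4608                                # 4.5KB requested per block
usable_bytes=8192                               # 8KB actually reserved (assumed)
capacity_bytes=$((10 * 1024 * 1024 * 1024))     # cache configured to 10GB

# Old accounting: charge the requested size, so this many blocks are admitted.
blocks_by_request=$((capacity_bytes / block_bytes))
real_mib_old=$((blocks_by_request * usable_bytes / 1024 / 1024))

# New accounting: charge the usable size, so real usage stays within capacity.
blocks_by_usable=$((capacity_bytes / usable_bytes))
real_mib_new=$((blocks_by_usable * usable_bytes / 1024 / 1024))

echo "charging requested size: $blocks_by_request blocks, ~${real_mib_old} MiB resident"
echo "charging usable size:    $blocks_by_usable blocks, ~${real_mib_new} MiB resident"
```

The trade-off is that the cache holds fewer blocks for the same configured capacity, which is the price of making the configured number match real memory use.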

Test Plan:

1. fill up mongo instance with 80GB of data

2. restart mongo with block cache size configured to 10GB

3. do a table scan in mongo

4. memory usage before the diff: 12GB. memory usage after the diff: 10.5GB

Reviewers: sdong, MarkCallaghan, rven, yhchiang

Reviewed By: yhchiang

Subscribers: dhruba, leveldb

Differential Revision: https://reviews.facebook.net/D40635

10 years ago

    if ! test $ROCKSDB_DISABLE_MALLOC_USABLE_SIZE; then
        # Test whether malloc_usable_size is available
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <malloc.h>
          int main() {
            size_t res = malloc_usable_size(0);
            (void)res;
            return 0;
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_MALLOC_USABLE_SIZE"
        fi
    fi

Provide an allocator for new memory type to be used with RocksDB block cache (#6214)

Summary:

New memory technologies are being developed by various hardware vendors (Intel DCPMM is one such technology currently available). These new memory types require different libraries for allocation and management (such as PMDK and memkind). The high capacities available make it possible to provision large caches (up to several TBs in size), beyond what is achievable with DRAM.

The new allocator provided in this PR uses the memkind library to allocate memory on different media.

**Performance**

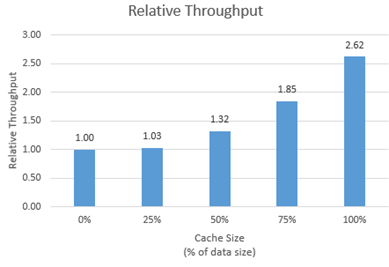

We tested the new allocator using db_bench.

- For each test, we vary the size of the block cache (relative to the size of the uncompressed data in the database).

- The database is filled sequentially. Throughput is then measured with a readrandom benchmark.

- We use a uniform distribution as a worst-case scenario.

The plot shows throughput (ops/s) relative to a configuration with no block cache and default allocator.

For all tests, p99 latency is below 500 us.

**Changes**

- Add MemkindKmemAllocator

- Add --use_cache_memkind_kmem_allocator db_bench option (to create an LRU block cache with the new allocator)

- Add detection of memkind library with KMEM DAX support

- Add test for MemkindKmemAllocator

**Minimum Requirements**

- kernel 5.3.12

- ndctl v67 - https://github.com/pmem/ndctl

- memkind v1.10.0 - https://github.com/memkind/memkind

**Memory Configuration**

The allocator uses the MEMKIND_DAX_KMEM memory kind. Follow the instructions on[ memkind’s GitHub page](https://github.com/memkind/memkind) to set up NVDIMM memory accordingly.

Note on memory allocation with NVDIMM memory exposed as system memory.

- The MemkindKmemAllocator will only allocate from NVDIMM memory (using memkind_malloc with MEMKIND_DAX_KMEM kind).

- The default allocator is not restricted to RAM by default. Based on NUMA node latency, the kernel should allocate from local RAM preferentially, but it’s a kernel decision. numactl --preferred/--membind can be used to allocate preferentially/exclusively from the local RAM node.

**Usage**

When creating an LRU cache, pass a MemkindKmemAllocator object as argument.

For example (replace capacity with the desired value in bytes):

```
#include "rocksdb/cache.h"
#include "memory/memkind_kmem_allocator.h"

NewLRUCache(
    capacity /*size_t*/,
    6 /*cache_numshardbits*/,
    false /*strict_capacity_limit*/,
    0.0 /*cache_high_pri_pool_ratio*/,
    std::make_shared<MemkindKmemAllocator>());
```

Refer to [RocksDB’s block cache documentation](https://github.com/facebook/rocksdb/wiki/Block-Cache) to assign the LRU cache as block cache for a database.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6214

Reviewed By: cheng-chang

Differential Revision: D19292435

fbshipit-source-id: 7202f47b769e7722b539c86c2ffd669f64d7b4e1

5 years ago

    if ! test $ROCKSDB_DISABLE_MEMKIND; then
        # Test whether memkind library is installed
        $CXX $PLATFORM_CXXFLAGS $LDFLAGS -x c++ - -o test.o -lmemkind 2>/dev/null <<EOF

Provide an allocator for new memory type to be used with RocksDB block cache (#6214)

Summary:

New memory technologies are being developed by various hardware vendors (Intel DCPMM is one such technology currently available). These new memory types require different libraries for allocation and management (such as PMDK and memkind). The high capacities available make it possible to provision large caches (up to several TBs in size), beyond what is achievable with DRAM.

The new allocator provided in this PR uses the memkind library to allocate memory on different media.

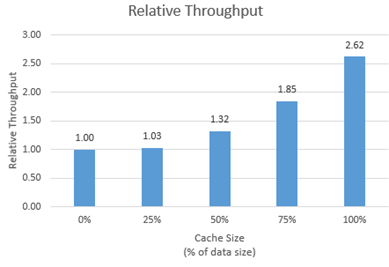

**Performance**

We tested the new allocator using db_bench.

- For each test, we vary the size of the block cache (relative to the size of the uncompressed data in the database).

- The database is filled sequentially. Throughput is then measured with a readrandom benchmark.

- We use a uniform distribution as a worst-case scenario.

The plot shows throughput (ops/s) relative to a configuration with no block cache and default allocator.

For all tests, p99 latency is below 500 us.

**Changes**

- Add MemkindKmemAllocator

- Add --use_cache_memkind_kmem_allocator db_bench option (to create an LRU block cache with the new allocator)

- Add detection of memkind library with KMEM DAX support

- Add test for MemkindKmemAllocator

**Minimum Requirements**

- kernel 5.3.12

- ndctl v67 - https://github.com/pmem/ndctl

- memkind v1.10.0 - https://github.com/memkind/memkind

**Memory Configuration**

The allocator uses the MEMKIND_DAX_KMEM memory kind. Follow the instructions on[ memkind’s GitHub page](https://github.com/memkind/memkind) to set up NVDIMM memory accordingly.

Note on memory allocation with NVDIMM memory exposed as system memory.

- The MemkindKmemAllocator will only allocate from NVDIMM memory (using memkind_malloc with MEMKIND_DAX_KMEM kind).

- The default allocator is not restricted to RAM by default. Based on NUMA node latency, the kernel should allocate from local RAM preferentially, but it’s a kernel decision. numactl --preferred/--membind can be used to allocate preferentially/exclusively from the local RAM node.

**Usage**

When creating an LRU cache, pass a MemkindKmemAllocator object as argument.

For example (replace capacity with the desired value in bytes):

```

#include "rocksdb/cache.h"

#include "memory/memkind_kmem_allocator.h"

NewLRUCache(

capacity /*size_t*/,

6 /*cache_numshardbits*/,

false /*strict_capacity_limit*/,

false /*cache_high_pri_pool_ratio*/,

std::make_shared<MemkindKmemAllocator>());

```

Refer to [RocksDB’s block cache documentation](https://github.com/facebook/rocksdb/wiki/Block-Cache) to assign the LRU cache as block cache for a database.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6214

Reviewed By: cheng-chang

Differential Revision: D19292435

fbshipit-source-id: 7202f47b769e7722b539c86c2ffd669f64d7b4e1

5 years ago

|

|

|

          #include <memkind.h>
          int main() {
            memkind_malloc(MEMKIND_DAX_KMEM, 1024);
            return 0;
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DMEMKIND"
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lmemkind"
            JAVA_LDFLAGS="$JAVA_LDFLAGS -lmemkind"
        fi
    fi
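The probe above follows a pattern that repeats throughout this script: feed a tiny program to the compiler through a here-document, then test the exit status and extend the flag variables on success. A minimal sketch of that pattern as a reusable function is shown below. The `try_compile` helper is hypothetical and not part of the script; it writes to `/dev/null` rather than `test.o`, and defaulting `CXX` to `c++` is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the script): compile a program read from
# stdin and report success through the exit status. Extra arguments, such as
# -lmemkind, are passed through to $CXX.
try_compile() {
  # Word splitting of PLATFORM_CXXFLAGS is intentional: it holds several flags.
  $CXX $PLATFORM_CXXFLAGS -x c++ - -o /dev/null "$@" 2>/dev/null
}

CXX="${CXX:-c++}"   # assumed default when CXX is not set
if try_compile -lmemkind <<EOF
  #include <memkind.h>
  int main() {
    memkind_malloc(MEMKIND_DAX_KMEM, 1024);
    return 0;
  }
EOF
then
  COMMON_FLAGS="$COMMON_FLAGS -DMEMKIND"
  PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lmemkind"
fi
echo "COMMON_FLAGS=$COMMON_FLAGS"
```

Testing the command directly in `if` avoids the separate `[ "$?" = 0 ]` check, at the cost of differing from the style the script uses.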

    if ! test $ROCKSDB_DISABLE_PTHREAD_MUTEX_ADAPTIVE_NP; then
        # Test whether PTHREAD_MUTEX_ADAPTIVE_NP mutex type is available
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <pthread.h>
          int main() {
            int x = PTHREAD_MUTEX_ADAPTIVE_NP;
            (void)x;
            return 0;
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_PTHREAD_ADAPTIVE_MUTEX"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_BACKTRACE; then
        # Test whether backtrace is available
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <execinfo.h>
          int main() {
            void* frames[1];
            backtrace_symbols(frames, backtrace(frames, 1));
            return 0;
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_BACKTRACE"
        else
            # Test whether execinfo library is installed
            $CXX $PLATFORM_CXXFLAGS -lexecinfo -x c++ - -o test.o 2>/dev/null <<EOF
              #include <execinfo.h>
              int main() {
                void* frames[1];
                backtrace_symbols(frames, backtrace(frames, 1));
              }
EOF
            if [ "$?" = 0 ]; then
                COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_BACKTRACE"
                PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lexecinfo"
                JAVA_LDFLAGS="$JAVA_LDFLAGS -lexecinfo"
            fi
        fi
    fi

    if ! test $ROCKSDB_DISABLE_PG; then
        # Test if -pg is supported
        $CXX $PLATFORM_CXXFLAGS -pg -x c++ - -o test.o 2>/dev/null <<EOF
          int main() {
            return 0;
          }
EOF
        if [ "$?" = 0 ]; then
            PROFILING_FLAGS=-pg
        fi
    fi

    if ! test $ROCKSDB_DISABLE_SYNC_FILE_RANGE; then
        # Test whether sync_file_range is supported for compatibility with an old glibc
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <fcntl.h>
          int main() {
            int fd = open("/dev/null", 0);
            sync_file_range(fd, 0, 1024, SYNC_FILE_RANGE_WRITE);
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_RANGESYNC_PRESENT"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_SCHED_GETCPU; then
        # Test whether sched_getcpu is supported
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <sched.h>
          int main() {
            int cpuid = sched_getcpu();
            (void)cpuid;
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_SCHED_GETCPU_PRESENT"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_AUXV_GETAUXVAL; then
        # Test whether getauxval is supported
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o 2>/dev/null <<EOF
          #include <sys/auxv.h>
          int main() {
            uint64_t auxv = getauxval(AT_HWCAP);
            (void)auxv;
          }
EOF
        if [ "$?" = 0 ]; then
            COMMON_FLAGS="$COMMON_FLAGS -DROCKSDB_AUXV_GETAUXVAL_PRESENT"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_ALIGNED_NEW; then
        # Test whether c++17 aligned-new is supported
        $CXX $PLATFORM_CXXFLAGS -faligned-new -x c++ - -o test.o 2>/dev/null <<EOF
          struct alignas(1024) t {int a;};
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            PLATFORM_CXXFLAGS="$PLATFORM_CXXFLAGS -faligned-new -DHAVE_ALIGNED_NEW"
        fi
    fi

    if ! test $ROCKSDB_DISABLE_BENCHMARK; then
        # Test whether google benchmark is available
        $CXX $PLATFORM_CXXFLAGS -x c++ - -o /dev/null -lbenchmark -lpthread 2>/dev/null <<EOF
          #include <benchmark/benchmark.h>
          int main() {}
EOF
        if [ "$?" = 0 ]; then
            PLATFORM_LDFLAGS="$PLATFORM_LDFLAGS -lbenchmark"
        fi
    fi

    if test $USE_FOLLY; then
        # Test whether libfolly library is installed
        $CXX $PLATFORM_CXXFLAGS $COMMON_FLAGS -x c++ - -o /dev/null 2>/dev/null <<EOF
          #include <folly/synchronization/DistributedMutex.h>
          int main() {}
EOF
        if [ "$?" != 0 ]; then
            FOLLY_DIR="./third-party/folly"
        fi
    fi

fi

# TODO(tec): Fix -Wshorten-64-to-32 errors on FreeBSD and enable the warning.
# -Wshorten-64-to-32 breaks compilation on FreeBSD aarch64 and i386
if ! { [ "$TARGET_OS" = FreeBSD ] && [ "$TARGET_ARCHITECTURE" = arm64 -o "$TARGET_ARCHITECTURE" = i386 ]; }; then
  # Test whether -Wshorten-64-to-32 is available
  $CXX $PLATFORM_CXXFLAGS -x c++ - -o test.o -Wshorten-64-to-32 2>/dev/null <<EOF
    int main() {}
EOF
  if [ "$?" = 0 ]; then
    COMMON_FLAGS="$COMMON_FLAGS -Wshorten-64-to-32"
  fi
fi

Simplify detection of x86 CPU features (#11419)

Summary:

**Background** - runtime detection of certain x86 CPU features was added for optimizing CRC32c checksums, where performance is dramatically affected by the availability of certain CPU instructions and code using intrinsics for those instructions. And Java builds with native library try to be broadly compatible but performant.

What has changed is that CRC32c is no longer the most efficient checksum on contemporary x86_64 hardware, nor the default checksum. XXH3 is generally faster and not as dramatically impacted by the availability of certain CPU instructions. For example, on my Skylake system using db_bench (similar on an older Skylake system without AVX512):

PORTABLE=1 empty USE_SSE : xxh3->8 GB/s crc32c->0.8 GB/s (no SSE4.2 nor AVX2 instructions)

PORTABLE=1 USE_SSE=1 : xxh3->19 GB/s crc32c->16 GB/s (with SSE4.2 and AVX2)

PORTABLE=0 USE_SSE ignored: xxh3->28 GB/s crc32c->16 GB/s (also some AVX512)

Testing a ~10 year old system, with SSE4.2 but without AVX2, crc32c is a similar speed to the new systems but xxh3 is only about half that speed, also 8GB/s like the non-AVX2 compile above. Given that xxh3 has specific optimization for AVX2, I think we can infer that crc32c is only fastest for that ~2008-2013 period when SSE4.2 was included but not AVX2. And given that xxh3 is only about 2x slower on these systems (not like >10x slower for unoptimized crc32c), I don't think we need to invest too much in optimally adapting to these old cases.

x86 hardware that doesn't support fast CRC32c is now extremely rare, so requiring a custom build to support such hardware is fine IMHO.

**This change** does two related things:

* Remove runtime CPU detection for optimizing CRC32c on x86. Maintaining this code is non-zero work, and compiling special code that doesn't work on the configured target instruction set for code generation is always dubious. (On the one hand we have to ensure the CRC32c code uses SSE4.2 but on the other hand we have to ensure nothing else does.)

* Detect CPU features in source code, not in build scripts. Although there are some hypothetical advantages to detecting in build scripts (compiler generality), RocksDB supports at least three build systems: make, cmake, and buck. It's not practical to support feature detection on all three, and we have suffered from missed optimization opportunities by relying on missing or incomplete detection in cmake and buck. We also depend on some components like xxhash that do source code detection anyway.

**In more detail:**

* `HAVE_SSE42`, `HAVE_AVX2`, and `HAVE_PCLMUL` replaced by standard macros `__SSE4_2__`, `__AVX2__`, and `__PCLMUL__`.

* MSVC does not provide high fidelity defines for SSE, PCLMUL, or POPCNT, but we can infer those from `__AVX__` or `__AVX2__` in a compatibility header. In rare cases of false negative or false positive feature detection, a build engineer should be able to set defines to work around the issue.

* `__POPCNT__` is another standard define, but we happen to only need it on MSVC, where it is set by that compatibility header, or can be set by the build engineer.

* `PORTABLE` can be set to a CPU type, e.g. "haswell", to compile for that CPU type.

* `USE_SSE` is deprecated, now equivalent to PORTABLE=haswell, which roughly approximates its old behavior.
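The `PORTABLE` and `USE_SSE` rules listed above can be summarized as a small decision function. The sketch below is an x86-focused simplification under stated assumptions: the function name is hypothetical, the architecture-specific branches (POWER, ARM, s390x, RISC-V, iOS) are left out, and it prints the `-march` flag instead of appending it to `COMMON_FLAGS`.

```shell
#!/usr/bin/env bash
# Illustrative only: x86-focused simplification of the PORTABLE / USE_SSE rules.
# Prints the -march flag (possibly empty) for the given settings.
march_flag() {
  local portable="$1" use_sse="$2"
  if [ -z "$portable" ] || [ "$portable" = 0 ]; then
    echo "-march=native"           # non-portable build: tune for the build host
  elif [ "$portable" = 1 ]; then
    if [ -n "$use_sse" ]; then
      echo "-march=haswell"        # deprecated USE_SSE approximates haswell
    else
      echo ""                      # portable build: keep the compiler's baseline
    fi
  else
    echo "-march=${portable}"      # PORTABLE names a minimum CPU type
  fi
}

march_flag "" ""         # PORTABLE unset
march_flag 1 1           # PORTABLE=1 USE_SSE=1
march_flag haswell ""    # PORTABLE=haswell
```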

Notably, this change should enable more builds to use the AVX2-optimized Bloom filter implementation.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11419

Test Plan:

existing tests, CI

Manual performance tests after the change match the "before" numbers above (no difference is expected with the make build).

We also see AVX2 optimized Bloom filter code enabled when expected, by injecting a compiler error. (Performance difference is not big on my current CPU.)

Reviewed By: ajkr

Differential Revision: D45489041

Pulled By: pdillinger

fbshipit-source-id: 60ceb0dd2aa3b365c99ed08a8b2a087a9abb6a70

2 years ago

if [ "$PORTABLE" == "" ] || [ "$PORTABLE" == 0 ]; then
  if test -n "`echo $TARGET_ARCHITECTURE | grep ^ppc64`"; then
    # Tune for this POWER processor, treating '+' models as base models
    POWER=`LD_SHOW_AUXV=1 /bin/true | grep AT_PLATFORM | grep -E -o power[0-9]+`
    COMMON_FLAGS="$COMMON_FLAGS -mcpu=$POWER -mtune=$POWER "
  elif test -n "`echo $TARGET_ARCHITECTURE | grep -e^arm -e^aarch64`"; then
    # TODO: Handle this with appropriate options.
    COMMON_FLAGS="$COMMON_FLAGS"
  elif test -n "`echo $TARGET_ARCHITECTURE | grep ^aarch64`"; then
    COMMON_FLAGS="$COMMON_FLAGS"
  elif test -n "`echo $TARGET_ARCHITECTURE | grep ^s390x`"; then
    if echo 'int main() {}' | $CXX $PLATFORM_CXXFLAGS -x c++ \
      -march=native - -o /dev/null 2>/dev/null; then
      COMMON_FLAGS="$COMMON_FLAGS -march=native "
    else
      COMMON_FLAGS="$COMMON_FLAGS -march=z196 "
    fi
    COMMON_FLAGS="$COMMON_FLAGS"

Improve build detect for RISCV (#9366)

Summary:

Related to: https://github.com/facebook/rocksdb/pull/9215

* Adds build_detect_platform support for RISCV on Linux (at least on SiFive Unmatched platforms)

This still leaves some linking issues on RISCV remaining (e.g. when building `db_test`):

```
/usr/bin/ld: ./librocksdb_debug.a(memtable.o): in function `__gnu_cxx::new_allocator<char>::deallocate(char*, unsigned long)':
/usr/include/c++/10/ext/new_allocator.h:133: undefined reference to `__atomic_compare_exchange_1'
/usr/bin/ld: ./librocksdb_debug.a(memtable.o): in function `std::__atomic_base<bool>::compare_exchange_weak(bool&, bool, std::memory_order, std::memory_order)':
/usr/include/c++/10/bits/atomic_base.h:464: undefined reference to `__atomic_compare_exchange_1'
/usr/bin/ld: /usr/include/c++/10/bits/atomic_base.h:464: undefined reference to `__atomic_compare_exchange_1'
/usr/bin/ld: /usr/include/c++/10/bits/atomic_base.h:464: undefined reference to `__atomic_compare_exchange_1'
/usr/bin/ld: /usr/include/c++/10/bits/atomic_base.h:464: undefined reference to `__atomic_compare_exchange_1'
/usr/bin/ld: ./librocksdb_debug.a(memtable.o):/usr/include/c++/10/bits/atomic_base.h:464: more undefined references to `__atomic_compare_exchange_1' follow
/usr/bin/ld: ./librocksdb_debug.a(db_impl.o): in function `rocksdb::DBImpl::NewIteratorImpl(rocksdb::ReadOptions const&, rocksdb::ColumnFamilyData*, unsigned long, rocksdb::ReadCallback*, bool, bool)':
/home/adamretter/rocksdb/db/db_impl/db_impl.cc:3019: undefined reference to `__atomic_exchange_1'
/usr/bin/ld: ./librocksdb_debug.a(write_thread.o): in function `rocksdb::WriteThread::Writer::CreateMutex()':
/home/adamretter/rocksdb/./db/write_thread.h:205: undefined reference to `__atomic_compare_exchange_1'
/usr/bin/ld: ./librocksdb_debug.a(write_thread.o): in function `rocksdb::WriteThread::SetState(rocksdb::WriteThread::Writer*, unsigned char)':
/home/adamretter/rocksdb/db/write_thread.cc:222: undefined reference to `__atomic_compare_exchange_1'
collect2: error: ld returned 1 exit status
make: *** [Makefile:1449: db_test] Error 1
```
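The RISC-V support added by this change derives `-march` from the ISA string reported in `/proc/cpuinfo`. The sketch below runs the same style of pipeline on sample text so it can be tried on any machine. The function name is hypothetical, the sample `isa` line format is an assumption about what the kernel prints, and `cut -d:` is used in place of the GNU-only `--delimiter=:` spelling.

```shell
#!/usr/bin/env bash
# Extract the ISA string from cpuinfo-style text on stdin.
# The sample line format ("isa<tabs>: rv64imafdc") is an assumption.
riscv_isa_from_cpuinfo() {
  # First line mentioning "isa" -> text after the colon -> drop leading space.
  grep isa | head -1 | cut -d: -f2 | cut -b 2-
}

printf 'processor\t: 0\nhart\t\t: 2\nisa\t\t: rv64imafdc\nmmu\t\t: sv39\n' \
  | riscv_isa_from_cpuinfo
```

On a real RISC-V Linux host the input would come from `/proc/cpuinfo`, and the result would be passed to the compiler as `-march=<isa>`.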

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9366

Reviewed By: jay-zhuang

Differential Revision: D34377664

Pulled By: mrambacher

fbshipit-source-id: c86f9d0cd1cb0c18de72b06f1bf5847f23f51118

3 years ago

  elif test -n "`echo $TARGET_ARCHITECTURE | grep ^riscv64`"; then
    RISC_ISA=$(cat /proc/cpuinfo | grep isa | head -1 | cut --delimiter=: -f 2 | cut -b 2-)
    COMMON_FLAGS="$COMMON_FLAGS -march=${RISC_ISA}"
  elif [ "$TARGET_OS" == "IOS" ]; then
    COMMON_FLAGS="$COMMON_FLAGS"
  else
    COMMON_FLAGS="$COMMON_FLAGS -march=native "
  fi
else


  # PORTABLE specified
  if [ "$PORTABLE" == 1 ]; then
    if test -n "`echo $TARGET_ARCHITECTURE | grep ^s390x`"; then
      COMMON_FLAGS="$COMMON_FLAGS -march=z196 "
    elif test -n "`echo $TARGET_ARCHITECTURE | grep ^riscv64`"; then
      RISC_ISA=$(cat /proc/cpuinfo | grep isa | head -1 | cut --delimiter=: -f 2 | cut -b 2-)
      COMMON_FLAGS="$COMMON_FLAGS -march=${RISC_ISA}"
    elif test "$USE_SSE"; then
      # USE_SSE is DEPRECATED
      # This is a rough approximation of the old USE_SSE behavior
      COMMON_FLAGS="$COMMON_FLAGS -march=haswell"
    fi
    # Other than those cases, not setting -march= here.
  else
    # Assume PORTABLE is a minimum assumed cpu type, e.g. PORTABLE=haswell
    COMMON_FLAGS="$COMMON_FLAGS -march=${PORTABLE}"

Improve build detect for RISCV (#9366)

Summary:

Related to: https://github.com/facebook/rocksdb/pull/9215